Resources

Find the latest news & updates on AWS

Cloudtech Has Earned AWS Advanced Tier Partner Status

We’re honored to announce that Cloudtech has officially secured AWS Advanced Tier Partner status within the Amazon Web Services (AWS) Partner Network! This significant achievement highlights our expertise in AWS cloud modernization and reinforces our commitment to delivering transformative solutions for our clients.

As an AWS Advanced Tier Partner, Cloudtech has been recognized for its exceptional capabilities in cloud data, application, and infrastructure modernization. This milestone underscores our dedication to excellence and our proven ability to leverage AWS technologies for outstanding results.

A Message from Our CEO

“Achieving AWS Advanced Tier Partner status is a pivotal moment for Cloudtech,” said Kamran Adil, CEO. “This recognition not only validates our expertise in delivering advanced cloud solutions but also reflects the hard work and dedication of our team in harnessing the power of AWS services.”

What This Means for Us

To reach Advanced Tier Partner status, Cloudtech demonstrated an in-depth understanding of AWS services and a solid track record of successful, high-quality implementations. This achievement comes with enhanced benefits, including advanced technical support, exclusive training resources, and closer collaboration with AWS sales and marketing teams.

Elevating Our Cloud Offerings

With our new status, Cloudtech is poised to enhance our cloud solutions even further. We provide a range of services, including:

- Data Modernization

- Application Modernization

- Infrastructure and Resiliency Solutions

By utilizing AWS’s cutting-edge tools and services, we equip startups and enterprises with scalable, secure solutions that accelerate digital transformation and optimize operational efficiency.

We're excited to share this news right after the launch of our new website and fresh branding! These updates reflect our commitment to innovation and excellence in the ever-changing cloud landscape. Our new look truly captures our mission: to empower businesses with personalized cloud modernization solutions that drive success. We can't wait for you to explore it all!

Stay tuned as we continue to innovate and drive impactful outcomes for our diverse client portfolio.

What is Amazon S3, and why should it be a part of data strategy for SMBs?

Amazon S3 (Simple Storage Service) has become a cornerstone of modern data strategies, with over 400 trillion objects stored and the capacity to handle 150 million requests per second. It underpins mission-critical workloads across industries, from storage and backup to analytics and application delivery.

For small and mid-sized businesses (SMBs), Amazon S3 offers more than just scalable cloud storage. It enables centralized data access, reduces infrastructure overhead, and supports long-term agility. By integrating Amazon S3 into their data architecture, SMBs can simplify operations, strengthen security, and accelerate digital initiatives without the complexity of managing hardware.

This article explores the core features of Amazon S3, its architectural advantages, and why it plays a critical role in helping SMBs compete in an increasingly data-driven economy.

Key takeaways:

- Amazon S3 scales automatically without performance loss: Built-in request scaling, intelligent partitioning, and unlimited storage capacity allow S3 to handle large workloads with no manual effort.

- Performance can be improved with proven techniques: Strategies like randomized prefixes, multipart uploads, and parallel processing significantly increase throughput and reduce latency.

- Storage classes directly impact performance and cost: Choosing between S3 Standard, Intelligent-Tiering, Glacier, and others helps balance retrieval speed, durability, and storage pricing.

- Integrations turn S3 into a complete data platform: Using services like CloudFront, Athena, Lambda, and Macie expands S3’s role from storage to analytics, automation, and security.

- Cloudtech delivers scalable, resilient S3 implementations: Through data modernization, application integration, and infrastructure design, Cloudtech helps businesses build optimized cloud systems.

What is Amazon S3?

Amazon S3 is a cloud object storage service built to store and retrieve any amount of data from anywhere. It is designed for high durability and availability, supporting a wide range of use cases such as backup, data archiving, content delivery, and analytics.

It uses an object-based storage architecture that offers more flexibility and scalability than traditional file systems, with the following key features:

- Objects: Each file (regardless of type or size) is stored as an object, which includes the data, metadata, and a unique identifier.

- Buckets: Objects are grouped into buckets, which serve as storage containers. Each bucket must have a globally unique name across AWS.

- Keys: Every object is identified by a key, which functions like a file path to locate and retrieve the object within a bucket.

Each object can be up to 5 TB in size, which makes Amazon S3 well suited to high-volume workloads such as medical imaging, logs, or backups. Businesses can scale storage on demand without managing servers or provisioning disk space.

For healthcare SMBs, this architecture is particularly useful when storing large volumes of imaging files, patient records, or regulatory documentation. Data can be encrypted at rest using AWS Key Management Service (AWS KMS), with versioning and access control policies to support compliance with HIPAA or similar standards.

Note: Many SMBs also use Amazon S3 as a foundation for data lakes, web hosting, disaster recovery, and long-term retention strategies. Since it integrates natively with services like Amazon CloudWatch (for monitoring), AWS Backup (for automated backups), and Amazon S3 Glacier (for archival), teams can build a full storage workflow without additional tools or manual effort.

How does Amazon S3 help SMBs improve scalability and performance?

For SMBs, especially those in data-intensive industries like healthcare, scalability and speed are operational necessities. Whether it’s securely storing patient records, streaming diagnostic images, or managing years of compliance logs, Amazon S3 offers the architecture and automation to handle these demands without requiring an enterprise-sized IT team.

The scalable, high-performance storage capabilities of Amazon S3 are backed by proven use cases that show why it needs to be a part of data strategy for SMBs:

1. Scale with growing data—no reconfiguration needed

For example, a mid-sized radiology center generates hundreds of high-resolution DICOM files every day. Instead of provisioning new storage hardware as data volumes increase, the center uses Amazon S3 to automatically scale its storage footprint without downtime or administrative overhead.

- No upfront provisioning is required since storage grows dynamically.

- Upload throughput stays consistent, even as the object count exceeds millions.

- Data is distributed automatically across storage nodes and zones.

2. Fast access to critical data with intelligent partitioning

Speed matters for business efficiency, and this is especially true for SMBs in urgent care settings. Amazon S3 partitions data behind the scenes using object key prefixes, allowing hospitals or clinics to retrieve lab results or imaging files quickly, even during peak operational hours.

- Object keys like /radiology/2025/07/CT-Scan-XYZ.dcm help structure storage and maximize retrieval speed.

- Performance scales independently across partitions, so multiple departments can access their data simultaneously.
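
A consistent key layout is easiest to maintain when keys are generated rather than typed by hand. The sketch below is illustrative only: `build_object_key` and the sample department and file names are hypothetical, and keys are written without a leading slash, which is the usual convention.

```python
from datetime import date


def build_object_key(department: str, scan_date: date, filename: str) -> str:
    """Return a key such as 'radiology/2025/07/CT-Scan-XYZ.dcm'."""
    # Zero-padded year and month keep keys sorted chronologically per department.
    return f"{department}/{scan_date.year:04d}/{scan_date.month:02d}/{filename}"


key = build_object_key("radiology", date(2025, 7, 14), "CT-Scan-XYZ.dcm")
print(key)  # radiology/2025/07/CT-Scan-XYZ.dcm
```

Each department then reads and writes under its own prefix, which matches the per-partition scaling described above.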

3. Resiliency built-in: zero data loss from zone failure

Amazon S3 stores copies of data across multiple Availability Zones (AZs) within a region. For example, a healthcare SMB running in the AWS Asia Pacific (Mumbai) Region can rely on Amazon S3 to automatically duplicate files across AZs, ensuring continuity if one zone experiences an outage.

- Supports 99.999999999% (11 9s) durability.

- Eliminates the need for manual replication scripts or redundant storage appliances.
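
To put the durability figure in perspective, it can be read as an average annual loss rate of 1 in 10^11 per object. The arithmetic below is a simplified illustration under that assumption, not a guarantee, and the object count of 10 million is an arbitrary example.

```python
from fractions import Fraction

# 99.999999999% durability read as an average annual loss rate per object.
ANNUAL_LOSS_RATE = Fraction(1, 10**11)


def expected_losses_per_year(object_count: int) -> Fraction:
    """Average number of objects lost per year for a given object count."""
    return object_count * ANNUAL_LOSS_RATE


def years_per_expected_loss(object_count: int) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_losses_per_year(object_count)


objects = 10_000_000
print(float(expected_losses_per_year(objects)))  # 0.0001
print(years_per_expected_loss(objects))          # 10000
```

In other words, a business storing 10 million objects would, on average, expect to lose one object every 10,000 years.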

4. Lifecycle rules keep storage lean and fast

Over time, SMBs accumulate large volumes of infrequently accessed data, such as insurance paperwork or compliance archives. Amazon S3’s lifecycle policies automatically transition such data to archival tiers like Amazon S3 Glacier or S3 Glacier Deep Archive, freeing up performance-optimized storage.

For example, a diagnostic lab sets rules to transition monthly lab reports to Glacier after 90 days, cutting storage costs by up to 70% without losing access.
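
A rule like the one in this example could be expressed as a lifecycle configuration. The sketch below builds the dictionary shape that boto3's `put_bucket_lifecycle_configuration` accepts; the `lab-reports/` prefix, the rule ID, and the helper names are assumptions made for illustration. The storage class value `GLACIER` corresponds to S3 Glacier Flexible Retrieval.

```python
def glacier_transition_rule(rule_id: str, prefix: str, days: int) -> dict:
    """Build one lifecycle rule that moves objects under `prefix` to Glacier."""
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
    }


# Hypothetical rule: archive monthly lab reports 90 days after creation.
lifecycle_config = {
    "Rules": [glacier_transition_rule("archive-lab-reports", "lab-reports/", 90)]
}


def apply_lifecycle(bucket: str, config: dict) -> None:
    """Apply the configuration to a bucket (requires boto3 and AWS credentials)."""
    import boto3  # third-party AWS SDK, imported only when the call is made

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )
```

Keeping the rule builder separate from the API call makes the configuration easy to review and test before it touches a real bucket.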

5. Supporting version control and rollback

In clinical research, file accuracy and traceability are paramount. S3 versioning automatically tracks every change to files, helping SMBs revert accidental changes or retrieve historical snapshots of reports.

- Researchers can compare versions of a study submitted over multiple weeks.

- Deleted objects can be restored instantly without the need for manual backups.
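
Rolling back usually starts with finding the version to return to. The sketch below assumes a list of entries shaped like the `Versions` field of an S3 `list_object_versions` response (`Key`, `VersionId`, `IsLatest`, `LastModified`); the helper name and sample data are hypothetical.

```python
from datetime import datetime
from typing import List, Optional


def previous_version_id(versions: List[dict], key: str) -> Optional[str]:
    """Return the VersionId of the newest non-current version of `key`."""
    older = [v for v in versions if v["Key"] == key and not v["IsLatest"]]
    if not older:
        return None
    # The most recently modified non-current version is the rollback target.
    return max(older, key=lambda v: v["LastModified"])["VersionId"]


# Hypothetical listing for one study report edited over three weeks.
sample_versions = [
    {"Key": "reports/study.pdf", "VersionId": "v3", "IsLatest": True,
     "LastModified": datetime(2025, 7, 21)},
    {"Key": "reports/study.pdf", "VersionId": "v2", "IsLatest": False,
     "LastModified": datetime(2025, 7, 14)},
    {"Key": "reports/study.pdf", "VersionId": "v1", "IsLatest": False,
     "LastModified": datetime(2025, 7, 7)},
]

print(previous_version_id(sample_versions, "reports/study.pdf"))  # v2
```

The returned ID can then be passed as `VersionId` when fetching the object to retrieve that earlier copy.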

6. Global scalability for multi-location clinics

An expanding healthcare provider with branches across different states uses Cross-Region Replication (CRR) to duplicate key records from one region to another. This speeds up backup recovery and positions the provider to meet future data residency requirements.

- Low-latency access for geographically distributed teams.

- Supports business continuity and audit-readiness.

Why does this matter for SMBs? Unlike legacy NAS or file server systems, Amazon S3’s performance scales automatically with usage. There is no need for manual intervention or costly upgrades. Healthcare SMBs, often constrained by limited IT teams and compliance demands, gain a resilient, self-healing storage layer that responds in real time to changing data patterns and operational growth.

Choosing the right Amazon S3 storage option

Choosing the right Amazon S3 storage classes is a strategic decision for SMBs managing growing volumes of operational, compliance, and analytical data. Each class is designed to balance access speed and cost, allowing businesses to scale storage intelligently based on how often they need to retrieve their data.

Here’s how different storage classes apply to common SMB use cases:

- Amazon S3 Standard

Amazon S3 Standard offers low latency, high throughput, and immediate access to data, ideal for workloads where performance can’t be compromised.

Best for: Active patient records, real-time dashboards, business-critical applications

Example: A healthcare diagnostics provider hosts an internal dashboard that physicians use to pull lab reports during consultations. These reports must load instantly, regardless of traffic spikes or object size. With S3 Standard, the team avoids lag and ensures service consistency, even during peak hours.

Key features:

- Millisecond retrieval time

- Request rates scale automatically, with at least 3,500 write and 5,500 read requests per second per prefix

- No performance warm-up period

- Higher storage cost, but no retrieval fees

- Amazon S3 Intelligent-Tiering

Amazon S3 Intelligent-Tiering automatically moves data between storage tiers based on usage. It maintains high performance without manual tuning.

Best for: Patient imaging data, vendor contracts, internal documentation with unpredictable access

Example: A mid-sized healthcare SMB stores diagnostic images (X-rays, MRIs) that may be accessed intensively for a few weeks after scanning, then rarely afterwards. S3 Intelligent-Tiering keeps these files in high-performance storage during peak use and then moves them to lower-cost archival tiers, so IT teams do not need to monitor them manually.

Key features:

- No retrieval fees or latency impact

- Optimizes cost over time automatically

- Ideal for compliance retention data with unpredictable access patterns

- Amazon S3 Express One Zone

Amazon S3 Express One Zone is designed for high-speed access in latency-sensitive environments and stores data in a single Availability Zone.

Best for: Time-sensitive data processing pipelines in a fixed location

Example: A healthtech company running real-time analytics for wearables or IoT-enabled patient monitors can’t afford multi-zone latency. By colocating its compute workloads with Amazon S3 Express One Zone, it reduces data transfer delays and supports near-instant response times.

Key features:

- Single-digit millisecond latency within the same AZ

- Lower request costs than Amazon S3 Standard, at a higher per-GB storage price

- Suitable for applications where multi-AZ fault tolerance is not required

- Amazon S3 Glacier

Amazon S3 Glacier is built for cost-effective archival. It supports various retrieval speeds depending on business urgency.

Best for: Long-term audit data, old medical records, regulatory logs

Example: A diagnostics company stores seven years of HIPAA-regulated medical reports for compliance. They rarely need to retrieve this data, but when an audit request comes in, they can choose between low-cost bulk recovery or faster expedited retrieval based on deadlines.

Key features:

- Low-cost archival storage, with S3 Glacier Deep Archive as the cheapest tier

- Retrieval times: 1 minute to 12 hours

- Best for data you must keep, but rarely access

- Amazon S3 Glacier Instant Retrieval

This storage class offers Amazon S3 Standard-level performance for archives, but at a lower cost.

Best for: Medical archives that may need quick access without full S3 Standard pricing

Example: A healthcare network archives mammogram images that may need to be retrieved within seconds if a patient returns after several months. Glacier Instant Retrieval balances cost and speed, keeping storage efficient while maintaining instant availability.

Key features:

- Millisecond access times

- Supports lifecycle rules for auto-transition from S3 Standard

- Ideal for rarely accessed data with occasional urgent retrieval needs

Before choosing a storage option, SMB decision-makers should weigh how often the data is accessed, how quickly it must be retrieved, and the overall storage budget.

Pro tip: SMBs can also work with AWS partners like Cloudtech to map data types to appropriate storage classes.

How does Amazon S3 integrate with other AWS services?

For SMBs, Amazon S3 becomes far more than a storage solution when combined with other AWS services. These integrations help streamline operations, automate workflows, improve security posture, and unlock deeper business insights.

Below are practical ways SMBs can integrate S3 with other AWS services to improve efficiency and performance:

- Faster content delivery with Amazon CloudFront

SMBs serving content to customers across regions can connect Amazon S3 to Amazon CloudFront, AWS’s content delivery network (CDN).

How it works: Amazon S3 acts as the origin, and Amazon CloudFront caches content in AWS edge locations to reduce latency.

Example: A regional telehealth provider uses Amazon CloudFront to quickly deliver patient onboarding documents stored in Amazon S3 to remote clinics, improving access speed by over 40%.

- On-demand data querying with Amazon Athena

Amazon Athena lets teams run SQL queries directly on Amazon S3 data without moving it into a database.

How it helps: No need to manage servers or build data pipelines. Just point Amazon Athena to Amazon S3 and start querying.

Example: A diagnostics lab uses Athena to run weekly reports on CSV-formatted test results stored in S3, without building custom ETL jobs or infrastructure.
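
A weekly report of this kind might be prepared as follows. This is a minimal sketch: the table name `lab_results`, the columns `test_type` and `result_date`, the database name, and the results bucket are all hypothetical. The request dictionary follows the parameter names of boto3's Athena `start_query_execution` call.

```python
from datetime import date


def weekly_report_query(table: str, week_start: date, week_end: date) -> str:
    """Build a SQL query counting test results per test type for one week."""
    # Dates come from date objects, so only ISO-formatted values reach the SQL.
    return (
        "SELECT test_type, COUNT(*) AS tests "
        f"FROM {table} "
        f"WHERE result_date BETWEEN DATE '{week_start.isoformat()}' "
        f"AND DATE '{week_end.isoformat()}' "
        "GROUP BY test_type"
    )


def athena_request(query: str, database: str, output_location: str) -> dict:
    """Assemble keyword arguments for an Athena start_query_execution call."""
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


query = weekly_report_query("lab_results", date(2025, 7, 7), date(2025, 7, 13))
request = athena_request(query, "diagnostics", "s3://example-athena-results/weekly/")
print(request["QueryString"])
```

With boto3 installed, the request could be submitted with `boto3.client("athena").start_query_execution(**request)`.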

- Event-driven automation using AWS Lambda

AWS Lambda can be triggered by Amazon S3 events, like new file uploads, to automate downstream actions.

Use case: Auto-processing medical images, converting formats, or logging uploads in real-time.

Example: When lab reports are uploaded to a specific Amazon S3 bucket, an AWS Lambda function instantly routes them to the right physician based on metadata.
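
The handler below sketches what such a function could look like. It reads the bucket and key from the standard S3 event notification structure, then picks a destination. For simplicity it routes on the key prefix rather than object metadata, and the routing table and queue names are hypothetical.

```python
from urllib.parse import unquote_plus

# Hypothetical routing table: key prefix -> destination queue name.
ROUTES = {
    "cardiology/": "cardiology-physician-queue",
    "radiology/": "radiology-physician-queue",
}


def route_for_key(key: str) -> str:
    """Pick a destination queue from the object's key prefix."""
    for prefix, queue in ROUTES.items():
        if key.startswith(prefix):
            return queue
    return "unassigned-queue"


def handler(event: dict, context: object) -> list:
    """Lambda entry point: return (bucket, key, queue) for each uploaded object."""
    routed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        routed.append((bucket, key, route_for_key(key)))
    return routed
```

A production version would forward each report to the chosen destination instead of returning the list, and could read object metadata with an additional request if routing depends on it.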

- Centralized backup and archival with AWS Backup and S3 Glacier

Amazon S3 integrates with AWS Backup to enforce organization-wide backup policies and automate lifecycle transitions.

Benefits: Meets long-term retention requirements, such as HIPAA or regional health regulations, without manual oversight.

Example: A healthcare SMB archives historical patient data from Amazon S3 to Amazon S3 Glacier Deep Archive, retaining compliance while cutting storage costs by 40%.

- Strengthened data security with Amazon Macie and Amazon GuardDuty

Amazon Macie scans S3 for sensitive information like PHI or PII, while Amazon GuardDuty detects unusual access behavior.

How it helps: Flags risks early and reduces the chance of breaches.

Example: A health records company uses Amazon Macie to monitor Amazon S3 buckets for unencrypted PHI uploads. Amazon GuardDuty alerts the team if unauthorized access attempts are made.

These integrations make Amazon S3 a foundational service in any SMB’s modern cloud architecture. When configured correctly, they reduce operational burden, improve security, and unlock value from stored data without adding infrastructure complexity.

Pro tip: AWS Partners like Cloudtech help SMBs set up a well-connected AWS ecosystem that’s aligned with their business goals. They ensure services like Amazon S3, Amazon CloudFront, Amazon Athena, AWS Lambda, and AWS Backup are configured securely and work together efficiently. From identity setup to event-driven workflows and cost-optimized storage, they help SMBs reduce manual overhead and accelerate value, without needing deep in-house cloud expertise.

Best practices SMBs can follow to optimize Amazon S3 benefits

For small and mid-sized businesses, maximizing both time and cost efficiency is critical. Simply using Amazon S3 for storage isn’t enough. Businesses must fine-tune their approach to get the most out of it. Here are several best practices that help unlock the full potential of Amazon S3:

- Use smarter naming to avoid performance bottlenecks: Amazon S3 request rates scale per key prefix, so heavy traffic to sequentially named files under one prefix, such as invoice-001.pdf and invoice-002.pdf, can concentrate load and lead to request throttling. Adopting randomized prefixes or hash-based naming spreads traffic more evenly and avoids slowdowns.

- Split large files using multipart uploads: Uploading a large file in a single operation increases the risk of failure due to network instability. With multipart uploads, the file is split into smaller parts that upload in parallel, and only failed parts are retried. This improves speed and reliability and reduces operational frustration.

- Reduce latency for distributed users: For SMBs with global teams or customer bases, accessing data directly from Amazon S3 can introduce delays. By integrating Amazon CloudFront, data is cached at edge locations worldwide, reducing latency and improving user experience.

- Accelerate long-distance transfers: When remote offices or partners need to send large volumes of data, Amazon S3 Transfer Acceleration uses AWS’s edge infrastructure to speed up uploads. This significantly reduces transfer time, especially from distant geographies.

- Monitor usage with Amazon CloudWatch: Tracking S3 performance is essential. Amazon CloudWatch offers real-time visibility into metrics such as request rates, errors, and transfer speeds. These insights help businesses proactively resolve bottlenecks and fine-tune performance.
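
The hash-based naming mentioned in the first practice can be sketched as below. The helper name and the choice of a four-character SHA-256 prefix are assumptions for illustration; any stable hash would serve the same purpose.

```python
import hashlib


def hashed_key(filename: str, prefix_len: int = 4) -> str:
    """Prepend a short, deterministic hash so keys spread across prefixes."""
    digest = hashlib.sha256(filename.encode("utf-8")).hexdigest()
    return f"{digest[:prefix_len]}/{filename}"


for name in ("invoice-001.pdf", "invoice-002.pdf", "invoice-003.pdf"):
    print(hashed_key(name))
```

Because the prefix is derived from the file name, the same file always maps to the same key, so objects remain easy to locate while sequential names no longer share a single prefix.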

By applying these practices, SMBs transform Amazon S3 from a basic storage tool into a powerful enabler of performance, cost-efficiency, and scalability.
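
To make the multipart practice concrete, the sketch below plans how a file would be divided into parts. It respects two S3 limits: every part except the last must be at least 5 MiB, and an upload can have at most 10,000 parts. The function name and the default part size are assumptions.

```python
MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # S3 minimum for every part except the last
MAX_PARTS = 10_000       # S3 limit on parts per multipart upload


def plan_parts(file_size: int, part_size: int = 8 * MIB) -> list:
    """Return (part_number, offset, length) tuples covering the whole file."""
    if file_size <= 0:
        raise ValueError("file_size must be positive")
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the 5 MiB minimum")
    if -(-file_size // part_size) > MAX_PARTS:  # ceiling division
        raise ValueError("too many parts; choose a larger part_size")
    parts = []
    offset = 0
    while offset < file_size:
        length = min(part_size, file_size - offset)
        parts.append((len(parts) + 1, offset, length))
        offset += length
    return parts


for number, offset, length in plan_parts(12 * MIB, part_size=5 * MIB):
    print(number, offset, length)
```

Each tuple maps to one part that can be uploaded and retried independently. High-level SDK helpers such as boto3's `upload_file` perform this splitting automatically for large files.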

How does Cloudtech help businesses implement Amazon S3?

Cloudtech helps businesses turn Amazon S3 into a scalable, resilient, and AI-ready foundation for modern cloud infrastructure. Through its four core service areas, Cloudtech delivers practical, outcome-driven solutions that simplify data operations and support long-term growth.

- Data modernization: Data lake architectures are designed with Amazon S3 as the central storage layer, enabling scalable, analytics-ready platforms. Each engagement begins with an assessment of data volume, access patterns, and growth trends to define the right storage class strategy. Automated pipelines are built using AWS Glue, Athena, and Lambda to move, transform, and analyze data in real time.

- Infrastructure & resiliency services: S3 implementations are architected for resilience and availability. This includes configuring multi-AZ and cross-region replication, applying AWS Backup policies, and conducting chaos engineering exercises to validate system behavior under failure conditions. These measures help maintain business continuity and meet operational recovery objectives.

- Application modernization: Legacy applications are restructured by integrating Amazon S3 with serverless, event-driven workflows. Using AWS Lambda, automated actions are triggered by S3 events, such as object uploads or deletions, enabling real-time data processing without requiring server management. This modern approach improves operational efficiency and scales with demand.

- Generative AI: Data stored in Amazon S3 is prepared for generative AI applications through intelligent document processing using Amazon Textract. Outputs are connected to interfaces powered by Amazon Q Business, allowing teams to extract insights and interact with unstructured data through natural language, without requiring technical expertise.

Conclusion

Amazon S3 delivers scalable, high-performance storage that supports a wide range of cloud use cases. With the right architecture, naming strategies, storage classes, and integrations, teams can achieve consistent performance and long-term cost efficiency.

Amazon CloudFront, Amazon Athena, AWS Lambda, and other AWS services extend Amazon S3 beyond basic storage, enabling real-time processing, analytics, and resilient distribution.

Cloudtech helps businesses implement Amazon S3 as part of secure, scalable, and optimized cloud architectures, backed by AWS-certified expertise and a structured delivery process.

Contact us if you want to build a stronger cloud storage foundation with Amazon S3.

FAQs

1. Is Amazon S3 a database?

No, Amazon S3 is not a database. It is an object storage service designed to store and retrieve unstructured data. On its own it does not provide the indexing, transactions, or relational data management that databases offer, although services such as Amazon Athena can run queries over data stored in it.

2. What is S3 best used for?

Amazon S3 is best used for storing large volumes of unstructured data such as backups, media files, static website assets, analytics datasets, and logs. It provides scalable, durable, and low-latency storage with integration across AWS services.

3. What is the difference between S3 and DB?

S3 stores objects, such as files and media, ideal for unstructured data. A database stores structured data with querying, indexing, and transaction support. S3 focuses on storage and retrieval, while databases manage relationships and real-time queries.

4. What does S3 stand for?

S3 stands for “Simple Storage Service.” Amazon’s cloud-based object storage solution offers scalable capacity, high durability, and global access for storing and retrieving any amount of data from anywhere.

5. What is S3 equivalent to?

S3 is equivalent to an object storage system, such as Google Cloud Storage or Azure Blob Storage. It functions as a cloud-based file repository, rather than a traditional file system or database, optimized for scalability and high availability.

A complete guide to Amazon S3 Glacier for long-term data storage

The global datasphere will balloon to 175 zettabytes in 2025, and nearly 80% of that data will go cold within months of creation. That’s not just a technical challenge for businesses. It’s a financial and strategic one.

For small and medium-sized businesses (SMBs), the question is: how to retain vital information like compliance records, backups, and historical logs without bleeding budget on high-cost active storage?

This is where Amazon S3 Glacier comes in. With its ultra-low costs, high durability, and flexible retrieval tiers, the purpose-built archival storage solution lets you take control of long-term data retention without compromising on compliance or accessibility.

This guide breaks down what S3 Glacier is, how it works, when to use it, and how businesses can use it to build scalable, cost-efficient data strategies that won’t buckle under tomorrow’s zettabytes.

Key takeaways:

- Purpose-built for archival storage: Amazon S3 Glacier classes are designed to reduce costs for infrequently accessed data while maintaining durability.

- Three storage class options: Instant retrieval, flexible retrieval, and deep archive support varying recovery speeds and pricing tiers.

- Lifecycle policy automation: Amazon S3 lifecycle rules automate transitions between storage classes, optimizing cost without manual oversight.

- Flexible configuration and integration: Amazon S3 Glacier integrates with existing Amazon S3 buckets, IAM policies, and analytics tools like Amazon Redshift Spectrum and AWS Glue.

- Proven benefits across industries: Use cases from healthcare, media, and research confirm Glacier’s role in long-term data retention strategies.

What is Amazon S3 Glacier storage, and why do SMBs need it?

Amazon Simple Storage Service (Amazon S3) Glacier is an archival storage class offered by AWS, designed for long-term data retention at a low cost. It’s intended for data that isn’t accessed frequently but must be stored securely and durably, such as historical records, backup files, and compliance-related documents.

Unlike Amazon S3 Standard, which is built for real-time data access, Glacier trades off speed for savings. Retrieval times vary depending on the storage class used, allowing businesses to optimize costs based on how soon or how often they need to access that data.

Why it matters for SMBs: For small and mid-sized businesses modernizing with AWS, Amazon S3 Glacier helps manage growing volumes of cold data without escalating costs. Key reasons for implementing the solution include:

- Cost-effective for inactive data: Pay significantly less per GB compared to other Amazon S3 storage classes, ideal for backup or archive data that is rarely retrieved.

- Built-in lifecycle policies: Automatically move data from Amazon S3 Standard or Amazon S3 Intelligent-Tiering to Amazon S3 Glacier or S3 Glacier Deep Archive based on rules, with no manual intervention required.

- Seamless integration with AWS tools: Continue using familiar AWS APIs, Identity and Access Management (IAM), and Amazon S3 bucket configurations with no new learning curve.

- Durable and secure: Data is redundantly stored across multiple AWS Availability Zones, with built-in encryption options and compliance certifications.

- Useful for regulated industries like healthcare: Healthcare SMBs can use Amazon S3 Glacier to store medical imaging files, long-term audit logs, and compliance archives without overcommitting to active storage costs.

Amazon S3 Glacier gives SMBs a scalable way to manage historical data while aligning with cost-control and compliance requirements.

How can SMBs choose the right Amazon S3 Glacier class for their data?

The Amazon S3 Glacier storage classes are purpose-built for long-term data retention, but not all archived data has the same access or cost requirements. AWS offers three Glacier classes, each designed for a different balance of retrieval time and storage pricing.

For SMBs, choosing the right Glacier class depends on how often archived data is accessed, how quickly it needs to be retrieved, and the overall storage budget.

1. Amazon S3 Glacier Instant Retrieval

Amazon S3 Glacier Instant Retrieval is designed for rarely accessed data that still needs to be available within milliseconds. It provides low-cost storage with fast retrieval, making it suitable for SMBs that occasionally need immediate access to archived content.

Specifications:

- Retrieval time: Milliseconds

- Storage cost: ~$0.004/GB/month

- Minimum storage duration: 90 days

- Availability: designed for 99.9% (availability SLA: 99%)

- Durability: 99.999999999%

- Encryption: Supports SSE-S3 and SSE-KMS

- Retrieval model: Immediate access with no additional tiering

When it’s used: This class suits SMBs managing audit logs, patient records, or legal documents that are accessed infrequently but must be available without delay. Healthcare providers, for instance, use this class to store medical imaging (CT, MRI scans) for emergency retrieval during patient consultations.

2. Amazon S3 Glacier Flexible Retrieval

Amazon S3 Glacier Flexible Retrieval is designed for data that is infrequently accessed and can tolerate retrieval times ranging from minutes to hours. It offers multiple retrieval options to help SMBs manage both performance and cost, including a no-cost bulk retrieval option.

Specifications:

- Storage cost: ~$0.0036/GB/month

- Minimum storage duration: 90 days

- Availability SLA: 99.9%

- Durability: 99.999999999%

- Encryption: Supports SSE-S3 and SSE-KMS

- Retrieval tiers:

  - Expedited: 1–5 minutes ($0.03/GB)

  - Standard: 3–5 hours ($0.01/GB)

  - Bulk: 5–12 hours (no per-GB retrieval fee)

- Provisioned capacity (optional): $100 per unit/month

When it’s used: SMBs performing planned data restores, like IT service providers handling monthly backups, or financial teams accessing quarterly records, can benefit from this class. It's also suitable for healthcare organizations restoring archived claims data or historical lab results during audits.
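
The trade-off between retrieval tiers is easiest to see with a quick calculation. The sketch below uses the per-GB retrieval prices listed above for S3 Glacier Flexible Retrieval; the 500 GB restore size is an arbitrary example, and per-request charges are left out for simplicity.

```python
from decimal import Decimal

# Per-GB retrieval prices for S3 Glacier Flexible Retrieval, as listed above.
RETRIEVAL_PRICE_PER_GB = {
    "Expedited": Decimal("0.03"),
    "Standard": Decimal("0.01"),
    "Bulk": Decimal("0"),
}


def retrieval_cost(gigabytes: int, tier: str) -> Decimal:
    """Data retrieval charge in USD for restoring `gigabytes` at `tier`."""
    return RETRIEVAL_PRICE_PER_GB[tier] * gigabytes


for tier in RETRIEVAL_PRICE_PER_GB:
    print(f"{tier}: ${retrieval_cost(500, tier):.2f}")
```

For a 500 GB audit restore this works out to $15.00 for Expedited, $5.00 for Standard, and $0.00 for Bulk, so planned restores that can wait several hours are the cheapest.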

3. Amazon S3 Glacier Deep Archive

Amazon S3 Glacier Deep Archive is AWS’s lowest-cost storage class, optimized for data that is accessed very rarely, typically once or twice per year. It’s designed for long-term archival needs where retrieval times of up to 48 hours are acceptable.

Specifications:

- Storage cost: ~$0.00099/GB/month

- Minimum storage duration: 180 days

- Availability SLA: 99.9%

- Durability: 99.999999999%

- Encryption: Supports SSE-S3 and SSE-KMS

- Retrieval model:

  - Standard: within 12 hours (~$0.02/GB)

  - Bulk: within 48 hours (~$0.0025/GB)

When it’s used: This class is ideal for SMBs with strict compliance or regulatory needs but no urgency in data retrieval. Legal firms archiving case files, research clinics storing historical trial data, or any business maintaining long-term tax records can use Deep Archive to minimize ongoing storage costs.

By selecting the right Glacier storage class, SMBs can control storage spending without sacrificing compliance or operational needs.
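
As a rough comparison, the sketch below applies the approximate storage prices quoted in this section to a 10,000 GB archive. The archive size is an assumed example, actual prices vary by region and over time, and retrieval and request charges are not included.

```python
from decimal import Decimal

# Approximate storage prices (USD per GB per month) quoted in this section.
STORAGE_PRICE_PER_GB = {
    "Glacier Instant Retrieval": Decimal("0.004"),
    "Glacier Flexible Retrieval": Decimal("0.0036"),
    "Glacier Deep Archive": Decimal("0.00099"),
}


def monthly_storage_cost(gigabytes: int, storage_class: str) -> Decimal:
    """Monthly storage charge in USD for `gigabytes` in `storage_class`."""
    return STORAGE_PRICE_PER_GB[storage_class] * gigabytes


for storage_class in STORAGE_PRICE_PER_GB:
    print(f"{storage_class}: ${monthly_storage_cost(10_000, storage_class):.2f}")
```

At these rates the same archive costs about $40.00 per month in Instant Retrieval, $36.00 in Flexible Retrieval, and $9.90 in Deep Archive.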

How to successfully set up and manage Amazon S3 Glacier storage?

Setting up Amazon S3 Glacier storage classes requires careful planning of bucket configurations, lifecycle policies, and access management strategies. Organizations must consider data classification requirements, access patterns, and compliance obligations when designing Amazon S3 Glacier storage implementations.

The management approach differs significantly from standard Amazon S3 storage due to retrieval requirements and cost optimization considerations. Proper configuration ensures optimal performance while minimizing unexpected costs.

Step 1: Creating and configuring Amazon S3 buckets

S3 bucket configuration for Amazon S3 Glacier storage classes requires careful consideration of regional placement, access controls, and lifecycle policy implementation. Critical configuration parameters include:

- Regional selection: Choose regions based on data sovereignty requirements, disaster recovery strategies, and network latency considerations for retrieval operations

- Access control policies: Implement IAM policies that restrict retrieval operations to authorized users and prevent unauthorized cost generation

- Versioning strategy: Configure versioning policies that align with minimum storage duration requirements (90 days for Instant/Flexible, 180 days for Deep Archive)

- Encryption settings: Enable AES-256 or AWS KMS encryption for compliance with data protection requirements

Bucket policy configuration must account for the restricted access patterns associated with Amazon S3 Glacier storage classes. Standard S3 permissions apply, but organizations should implement additional controls for retrieval operations and related costs.
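As a rough illustration of the encryption and access-control points above, the sketch below builds two request payloads as plain Python dictionaries: a default-encryption configuration and an IAM policy that allows restore requests only under one prefix. The bucket name, prefix, key alias, and function names are hypothetical; the dictionary shapes follow what boto3's `put_bucket_encryption` call and IAM policy documents expect.

```python
from typing import Optional


def default_encryption_config(kms_key_id: Optional[str] = None) -> dict:
    """Payload shaped for boto3's put_bucket_encryption call."""
    if kms_key_id:
        rule = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    else:
        rule = {"SSEAlgorithm": "AES256"}  # SSE-S3
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": rule}]}


def restore_only_policy(bucket: str, prefix: str) -> dict:
    """IAM policy document that allows restore requests under one prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowRestoreUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:RestoreObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


# Hypothetical bucket and prefix, for illustration only.
policy = restore_only_policy("example-archive-bucket", "legal/")
print(policy["Statement"][0]["Resource"])
```

With boto3 installed and credentials configured, the first payload could be passed as the `ServerSideEncryptionConfiguration` argument of `put_bucket_encryption`. Scoping `s3:RestoreObject` to a prefix is one way to limit who can trigger retrieval charges.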

Step 2: Uploading and managing data in Amazon S3 Glacier storage classes

Direct uploads to Amazon S3 Glacier storage classes utilize standard S3 PUT operations with appropriate storage class specifications. Key operational considerations include:

- Object size optimization: By default, Amazon S3 lifecycle rules do not transition objects smaller than 128 KB, because the transition cost can outweigh the storage savings

- Multipart upload strategy: Large objects benefit from multipart uploads, with each part subject to minimum storage duration requirements

- Metadata management: Implement comprehensive tagging strategies for efficient object identification and retrieval planning

- Aggregation strategies: Consider combining small files into larger archives when per-object and minimum duration charges would otherwise outweigh the cost of storing the data itself

Large-scale migrations often benefit from AWS DataSync or AWS Storage Gateway implementations that optimize transfer operations. Organizations should evaluate transfer acceleration options for geographically distributed data sources.
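A direct upload into a Glacier storage class might look like the sketch below. It only builds the keyword arguments for a standard S3 PUT, including the storage class and URL-encoded tags. The bucket, key, and tag values are hypothetical, and the helper name `glacier_put_args` is an assumption made for illustration.

```python
from urllib.parse import urlencode

# Storage class identifiers used by the S3 API for the three Glacier classes.
GLACIER_CLASSES = {"GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}


def glacier_put_args(bucket: str, key: str, storage_class: str, tags: dict) -> dict:
    """Build keyword arguments for an S3 PUT that targets a Glacier class."""
    if storage_class not in GLACIER_CLASSES:
        raise ValueError(f"not a Glacier storage class: {storage_class}")
    return {
        "Bucket": bucket,
        "Key": key,
        "StorageClass": storage_class,
        "Tagging": urlencode(tags),  # S3 expects tags as a URL-encoded query string
    }


# Hypothetical object, for illustration only.
args = glacier_put_args(
    "example-archive-bucket",
    "tax/2019/returns.zip",
    "DEEP_ARCHIVE",
    {"retention": "7y", "dept": "finance"},
)
print(args["Tagging"])
```

With boto3, these arguments could be passed along with the object data, for example `s3.put_object(Body=data, **args)`. Tagging at upload time keeps the metadata needed for later retrieval planning attached to the object from the start.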

Step 3: Restoring objects and managing retrieval settings

Object restoration from Amazon S3 Glacier storage classes requires explicit restoration requests that specify retrieval tiers and duration parameters. Critical operational parameters include:

- Retrieval tier selection: Choose appropriate tiers based on urgency requirements and cost constraints

- Duration specification: Set restoration duration (1-365 days) to match downstream processing requirements

- Batch coordination: Plan bulk restoration operations to avoid overwhelming downstream systems

- Cost monitoring: Track retrieval costs across different tiers and adjust strategies accordingly

Restored objects remain accessible for the specified duration before returning to the archived state. Organizations should coordinate restoration timing with downstream processing requirements to avoid re-restoration costs.
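The restore parameters described above can be sketched as a small validation helper. It builds the `RestoreRequest` payload that boto3's `restore_object` call accepts and rejects tier choices that do not apply to a storage class (Deep Archive does not offer the Expedited tier). The helper name and the example values are assumptions for illustration.

```python
# Retrieval tiers available per archived storage class.
VALID_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},  # Flexible Retrieval
    "DEEP_ARCHIVE": {"Standard", "Bulk"},
}


def restore_request(storage_class: str, tier: str, days: int) -> dict:
    """Build a RestoreRequest payload for a temporary restore."""
    allowed = VALID_TIERS.get(storage_class)
    if allowed is None:
        raise ValueError(f"{storage_class} objects do not need a restore request")
    if tier not in allowed:
        raise ValueError(f"tier {tier} is not available for {storage_class}")
    if days < 1:
        raise ValueError("restore duration must be at least 1 day")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


# Keep a bulk-restored copy available for one week of downstream processing.
print(restore_request("DEEP_ARCHIVE", "Bulk", 7))
```

The payload could then be submitted with `s3.restore_object(Bucket=..., Key=..., RestoreRequest=...)`. Matching `Days` to the length of the downstream job helps avoid paying for a second restore.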

How to optimize storage with Amazon S3 Glacier lifecycle policies?

Moving beyond basic Amazon S3 Glacier implementation, organizations can achieve significant cost optimization through the strategic configuration of S3 lifecycle policies. These policies automate data transitions across storage classes, eliminating the need for manual intervention while ensuring cost-effective data management throughout object lifecycles.

Lifecycle policies provide teams with precise control over how data is moved across storage classes, helping to reduce costs without sacrificing retention goals. For Amazon S3 Glacier, getting the configuration right is crucial; even minor missteps can result in higher retrieval charges or premature transitions that impact access timelines.

Translating strategy into measurable savings starts with how those lifecycle rules are configured.

1. Lifecycle policy configuration fundamentals

Amazon S3 lifecycle policies automate object transitions through rule-based configurations that specify transition timelines and target storage classes. Organizations can implement multiple rules within a single policy, each targeting specific object prefixes or tags for granular control and management.

Critical configuration parameters include:

- Transition timing: Objects in Standard-IA storage class must remain for a minimum of 30 days before transitioning to Amazon S3 Glacier

- Object size filtering: By default, Amazon S3 does not transition objects smaller than 128 KB, because the transition cost can outweigh the storage savings

- Storage class progression: Design logical progression paths that optimize costs while maintaining operational requirements

- Expiration rules: Configure automatic deletion policies for objects reaching end-of-life criteria
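The parameters above map onto a single lifecycle rule. The sketch below builds one rule as a dictionary in the shape boto3's `put_bucket_lifecycle_configuration` expects, combining a prefix filter, a minimum object size, a Glacier transition, and an expiration. The rule ID, prefix, and day counts are hypothetical values chosen for illustration.

```python
def lifecycle_rule(
    rule_id: str,
    prefix: str,
    glacier_after_days: int,
    expire_after_days: int,
    min_size_bytes: int = 128 * 1024,
) -> dict:
    """Build one lifecycle rule: transition to Glacier, then expire."""
    if expire_after_days <= glacier_after_days:
        raise ValueError("expiration must come after the Glacier transition")
    return {
        "ID": rule_id,
        "Status": "Enabled",
        # Apply only to objects under the prefix that exceed the size threshold.
        "Filter": {"And": {"Prefix": prefix, "ObjectSizeGreaterThan": min_size_bytes}},
        "Transitions": [{"Days": glacier_after_days, "StorageClass": "GLACIER"}],
        "Expiration": {"Days": expire_after_days},
    }


# Hypothetical rule: archive audit logs after 90 days, delete after about 7 years.
rule = lifecycle_rule("archive-audit-logs", "logs/audit/", 90, 2555)
print(rule["Transitions"])
```

A list of such rules would be sent as `LifecycleConfiguration={"Rules": [rule]}`. Keeping each rule scoped to one prefix or tag makes it easier to evaluate and adjust rules independently.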

2. Strategic transition timing optimization

Effective lifecycle policies require careful analysis of data access patterns and cost structures across storage classes. Two-step transitioning approaches (Standard → Standard-IA → Amazon S3 Glacier) often provide cost advantages over direct transitions.

Optimal transition strategies typically follow these patterns:

- Day 0-30: Maintain objects in the Standard storage class for frequent access requirements

- Day 30-90: Transition to Standard-IA for reduced storage costs with immediate access capabilities

- Day 90+: Implement Amazon S3 Glacier transitions based on access frequency requirements and cost optimization goals

- Day 365+: Consider Deep Archive transition for long-term archival scenarios
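The day ranges above can be written down as a small schedule. The sketch below encodes them once and derives two things from the same data: the storage class an object of a given age should be in, and the `Transitions` list for a lifecycle rule. The schedule mirrors the pattern in this section; the function names are illustrative assumptions.

```python
# (first day in class, S3 storage class identifier), following the pattern above.
SCHEDULE = [
    (0, "STANDARD"),
    (30, "STANDARD_IA"),
    (90, "GLACIER"),
    (365, "DEEP_ARCHIVE"),
]


def storage_class_for_age(age_days: int) -> str:
    """Return the class an object of this age should occupy under the schedule."""
    if age_days < 0:
        raise ValueError("age cannot be negative")
    current = SCHEDULE[0][1]
    for start_day, storage_class in SCHEDULE:
        if age_days >= start_day:
            current = storage_class
    return current


def transitions() -> list:
    """Lifecycle transitions derived from the schedule (Standard needs none)."""
    return [{"Days": day, "StorageClass": cls} for day, cls in SCHEDULE if day > 0]


print(storage_class_for_age(100))
print(transitions())
```

Deriving both views from one table keeps the documented policy and the deployed lifecycle rule from drifting apart when the timings are tuned.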

3. Policy monitoring and cost optimization

Billing changes occur immediately when lifecycle configuration rules are satisfied, even before physical transitions complete. Organizations must implement monitoring strategies that track the effectiveness of policies and their associated costs.

Key monitoring metrics include:

- Transition success rates: Monitor successful transitions versus failed attempts

- Cost impact analysis: Track storage cost reductions achieved through lifecycle policies

- Access pattern validation: Verify that transition timing aligns with actual data access requirements

- Policy rule effectiveness: Evaluate individual rule performance and adjust configurations accordingly

What type of businesses benefit the most from Amazon S3 Glacier?

Amazon S3 Glacier storage classes are widely used to support archival workloads where cost efficiency, durability, and compliance are key priorities. Each class caters to distinct access patterns and technical requirements.

The following use cases, drawn from AWS documentation and customer case studies, illustrate practical applications of these classes across different data management scenarios.

1. Media asset archival (Amazon S3 Glacier Instant Retrieval)

Amazon S3 Glacier Instant Retrieval is recommended for archiving image hosting libraries, video content, news footage, and medical imaging datasets that are rarely accessed but must remain available within milliseconds. The class provides the same performance and throughput as Amazon S3 Standard while reducing storage costs.

Snap Inc. serves as a reference example. The company migrated over two exabytes of user photos and videos to Instant Retrieval within a three-month period. Despite the massive scale, the transition had no user-visible impact. In several regions, latency improved by 20-30 percent. This change resulted in annual savings estimated in the tens of millions of dollars, without compromising availability or throughput.

2. Scientific data preservation (Amazon S3 Glacier Deep Archive)

Amazon S3 Glacier Deep Archive is designed for data that must be retained for extended periods but is accessed infrequently, such as research datasets, regulatory archives, and records related to compliance. With storage pricing at $0.00099 per GB per month and durability of eleven nines across multiple Availability Zones, it is the most cost-efficient option among S3 classes. Retrieval options include standard (up to 12 hours), priced at approximately $0.02 per GB, and bulk (up to 48 hours), priced at approximately $0.0025 per GB.

Pinterest is one example of Deep Archive in practice. The company used Amazon S3 Lifecycle rules and internal analytics pipelines to identify infrequently accessed datasets and transition them to Deep Archive. This transition enabled Pinterest to reduce annual storage costs by several million dollars while meeting long-term retention requirements for internal data governance.

How does Cloudtech help SMBs solve data storage challenges?

SMBs don’t need to figure out Glacier class selection on their own. AWS partners like Cloudtech can help them assess data access patterns, retention requirements, and compliance needs to determine the most cost-effective Glacier class for each workload. From setting up automated Amazon S3 lifecycle rules to integrating archival storage into ongoing cloud modernization efforts, Cloudtech ensures that SMBs get the most value from their AWS investment.

A recent case study involving a nonprofit healthcare insurer illustrates this approach. The insurer faced growing limitations with its legacy on-premises data warehouse, built on Oracle Exadata. The setup restricted storage capacity, leading to selective data retention and delays in analytics.

Cloudtech designed and implemented an AWS-native architecture that eliminated these constraints. The new solution centered around a centralized data lake built on Amazon S3, allowing full retention of both raw and processed data in a unified, secure environment.

To support efficient data access and compliance, the architecture included:

- AWS Glue for data cataloging and metadata management

- Amazon Redshift Spectrum for direct querying from Amazon S3 without the need for full data loads

- Automated Redshift backups stored directly in Amazon S3 with custom retention settings

This minimized data movement, enabled near real-time insights, and supported healthcare compliance standards around data availability and continuity.

Outcome: By transitioning to managed AWS services, the client removed storage constraints, improved analytics readiness, and reduced infrastructure overhead. The move also unlocked long-term cost savings by aligning storage with actual access needs through Amazon S3 lifecycle rules and tiered Glacier storage classes.

Similarly, Cloudtech is equipped to support SMBs with varying storage requirements. It can help businesses with:

- Storage assessment: Identifies frequently and infrequently accessed datasets to map optimal Glacier storage classes

- Lifecycle policy design: Automates data transitions from active to archival storage based on access trends

- Retrieval planning: Aligns retrieval time expectations with the appropriate Glacier tier to minimize costs without disrupting operations

- Compliance-focused configurations: Ensures backup retention, encryption, and access controls meet industry-specific standards

- Unified analytics architecture: Combines Amazon S3 with services like AWS Glue and Amazon Redshift Spectrum to improve visibility without increasing storage costs

Whether it’s for healthcare records, financial audits, or customer history logs, Cloudtech helps SMBs build scalable, secure, and cost-aware storage solutions using only AWS services.

Conclusion

The Amazon S3 Glacier storage classes (Instant Retrieval, Flexible Retrieval, and Deep Archive) deliver specialized solutions for cost-effective, long-term data retention. Their retrieval frameworks and pricing models support critical compliance, backup, and archival needs across sectors.

Selecting the right class requires alignment with access frequency, retention timelines, and budget constraints. With complex retrieval tiers and storage duration requirements, expert configuration makes a measurable difference. Cloudtech helps organizations architect Amazon S3 Glacier-backed storage strategies that cut costs while maintaining scalability, data security, and regulatory compliance.

Book a call to plan a storage solution that fits your operational and compliance needs, without overspending.

FAQs

1. What is the primary benefit of using Amazon S3 Glacier?

Amazon S3 Glacier provides ultra-low-cost storage for infrequently accessed data, offering long-term retention with compliance-grade durability and flexible retrieval options ranging from milliseconds to days.

2. Is Amazon S3 Glacier free?

No. While the Amazon S3 Glacier storage classes have the lowest storage costs in Amazon S3, charges apply for storage, early deletion, and data retrieval based on tier and access frequency.

3. How do you move data from Amazon S3 Standard to Amazon S3 Glacier?

Use Amazon S3 lifecycle policies to automatically transition objects from standard classes to a Glacier storage class. You can also set the storage class during object upload using the Amazon S3 API or console.

4. Is Amazon S3 no longer global?

Amazon S3 remains a regional service. Data is stored in selected AWS Regions, but can be accessed globally depending on permissions and cross-region replication settings.

5. What is a vault in Amazon S3 Glacier?

Vaults were used in the original Amazon Glacier service. With Amazon S3 Glacier, storage is managed through Amazon S3 buckets and storage classes, rather than separate vault structures.

Getting started with Amazon Athena: a comprehensive guide

Small and medium-sized businesses (SMBs) seek to derive value from their data without heavy infrastructure investment, and Amazon Athena offers a simple yet powerful solution. As a serverless query service, Athena allows teams to run SQL queries directly on data stored in Amazon S3, eliminating the need for provisioning servers, setting up databases, or managing ETL pipelines.

Many SMBs use Athena to generate fast insights from CSVs, logs, or JSON files stored in S3. For example, a healthcare provider can use Athena to analyze patient encounter records stored in S3, helping operations teams track appointment volumes, identify care gaps, or monitor billing anomalies in real time.

This guide will go deeper into how Athena works, how it integrates with AWS Glue for automated schema discovery, and how SMBs can get started quickly while keeping costs predictable and usage efficient.

Key takeaways:

- Run interactive SQL queries directly on Amazon S3 without managing any servers or infrastructure.

- Only pay for the data scanned, making it cost-effective for SMBs with varying workloads.

- Works seamlessly with AWS Glue, IAM, QuickSight, and supports multiple data formats like Parquet, JSON, and CSV.

- Ideal for log analysis, ad-hoc queries, BI dashboards, clickstream analysis, and querying archived data.

- Limitations to plan for: Athena is primarily a read-oriented query engine with limited DML support, and performance depends on partitioning and query optimization.

What is Amazon Athena and why does it matter for SMBs?

Amazon Athena is a serverless, distributed SQL query engine built on Trino (formerly Presto) that lets businesses query data directly in Amazon S3 using standard SQL, without provisioning infrastructure or building ETL pipelines.

It runs queries in parallel across partitions of business data, which drastically reduces response times even for multi-terabyte datasets. Its schema-on-read model means SMB teams can analyze raw files like CSV, JSON, or Parquet without needing to ingest or transform them first.

Key technical benefits for SMBs include:

- Efficient querying with columnar formats: When SMBs store data in Parquet or ORC, Athena scans only the relevant columns, significantly reducing the amount of data processed and cutting query costs.

- Tight AWS integration: Athena connects to the AWS Glue Data Catalog for automatic schema discovery, versioning, and metadata management, allowing SMBs to treat their Amazon S3 data lake like a structured database.

- Federated query support: SMB teams can run SQL queries across other AWS services (e.g., DynamoDB, Redshift) and external sources (e.g., MySQL, BigQuery) using connectors through AWS Lambda, enabling cross-source analytics without centralizing all their data.

- Security and compliance: Access is controlled via IAM, and all data in transit and at rest can be encrypted. Athena API activity is recorded in AWS CloudTrail and query metrics can be published to Amazon CloudWatch, helping regulated industries meet audit requirements.

Example: A diagnostics lab stores lab results in Parquet files on Amazon S3. With Amazon Athena + AWS Glue, they create a unified SQL-accessible view without loading data into a database. Analysts query test volume by region, check turnaround time patterns, and even flag anomalies, all with no infrastructure or long reporting delays.

The bottom line? Amazon Athena gives SMBs on-demand big data analytics with no infrastructure overhead, making it ideal for lean teams that need insights fast without building a data warehouse.

How to get started with Amazon Athena: step by step

To get started, businesses need to organize their data in Amazon S3, define schemas using AWS Glue Data Catalog, and run queries using standard SQL. With native integration into AWS services, fine-grained IAM access controls, and a pay-per-query model, Athena makes it easy to unlock insights from raw data with minimal setup. It's ideal for teams needing quick, cost-effective analytics on logs, reports, or structured datasets.

Setting up Amazon Athena is relatively straightforward, even for businesses with limited technical expertise:

1. Set up an AWS account

To begin using Amazon Athena, an active AWS account is required. The user must ensure that Identity and Access Management (IAM) permissions are correctly configured. Specifically, the IAM role or user should have the necessary policies to access Athena, Amazon S3 (for data storage and query results), and AWS Glue (for managing metadata).

It's recommended to attach managed policies like AmazonAthenaFullAccess, AmazonS3ReadOnlyAccess, and AWSGlueConsoleFullAccess or create custom policies with least-privilege principles tailored to the organization’s security needs. These permissions allow users to create databases, define tables, and execute queries securely within Athena.
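For teams that prefer a custom least-privilege policy over the managed ones, a starting point might look like the sketch below. It builds an IAM policy document that lets an analyst run queries, read the Glue catalog, read one data bucket, and write results to a separate bucket. The bucket names and the function name are hypothetical, and the `"*"` resources for Athena and Glue are a simplifying assumption that would normally be narrowed to specific workgroups and catalog resources.

```python
import json


def athena_analyst_policy(data_bucket: str, results_bucket: str) -> dict:
    """IAM policy document for running Athena queries against one data bucket."""
    def bucket_arns(name: str) -> list:
        return [f"arn:aws:s3:::{name}", f"arn:aws:s3:::{name}/*"]

    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RunQueries",
                "Effect": "Allow",
                "Action": [
                    "athena:StartQueryExecution",
                    "athena:GetQueryExecution",
                    "athena:GetQueryResults",
                ],
                "Resource": "*",  # assumption: narrow to a workgroup ARN in practice
            },
            {
                "Sid": "ReadCatalog",
                "Effect": "Allow",
                "Action": ["glue:GetDatabase", "glue:GetTable", "glue:GetPartitions"],
                "Resource": "*",  # assumption: narrow to specific catalog resources
            },
            {
                "Sid": "ReadSourceData",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": bucket_arns(data_bucket),
            },
            {
                "Sid": "WriteQueryResults",
                "Effect": "Allow",
                "Action": ["s3:GetBucketLocation", "s3:GetObject", "s3:ListBucket", "s3:PutObject"],
                "Resource": bucket_arns(results_bucket),
            },
        ],
    }


# Hypothetical bucket names, for illustration only.
print(json.dumps(athena_analyst_policy("example-data-bucket", "example-athena-results"), indent=2))
```

Separating the data bucket (read-only) from the results bucket (read and write) keeps analysts from modifying source data while still letting Athena store query output.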

2. Create an S3 bucket for data storage

Before running queries with Athena, data must reside in Amazon S3, as Athena is designed to analyze objects stored there directly. This setup step includes bucket creation, data upload, and optimization for query performance.

Navigate to the Amazon S3 console and click “Create bucket.”

- Assign a globally unique name.

- Choose a region close to the workloads or users for latency optimization.

- Enable settings like versioning, encryption (e.g., SSE-S3 or SSE-KMS), and block public access if required.

Upload datasets in supported formats: Athena supports multiple formats, including CSV, JSON, Parquet, ORC, and Avro.

- For better performance and lower cost, use columnar formats like Parquet or ORC.

- Store large datasets in compressed form to reduce data scanned per query.

Organize data into partitions: Structuring data into logical partitioned folders (e.g., s3://company-data/patient-records/year=2025/month=07/) enables Athena to prune unnecessary partitions during query execution.

This improves performance and significantly reduces query costs, as Athena only scans relevant subsets of data.

Set appropriate permissions: Ensure IAM roles or users have the necessary S3 permissions to list, read, and write objects in the bucket. Fine-grained access control improves security and limits access to authorized workloads only.
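The partitioned folder layout described above can be generated consistently with a small helper. This sketch builds the `year=.../month=.../` prefix for a given date, using the example path from this section; the function name is an assumption.

```python
from datetime import date


def partition_prefix(base: str, day: date) -> str:
    """Return a Hive-style partition prefix (year=YYYY/month=MM/) under base."""
    return f"{base.rstrip('/')}/year={day.year}/month={day.month:02d}/"


# Matches the example layout used in this section.
print(partition_prefix("s3://company-data/patient-records", date(2025, 7, 15)))
```

Writing every upload through one helper like this keeps partition names uniform, which is what allows Athena to prune partitions reliably.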

3. Set up AWS Glue Data Catalog (optional)

AWS Glue helps SMBs manage metadata and automate schema discovery for datasets stored in Amazon S3, making Athena queries more efficient and scalable.

- The team creates a Glue database to store metadata about the S3 datasets.

- A crawler is configured to scan the S3 bucket and automatically register tables with defined schemas.

- Once cataloged, these tables appear in Athena, enabling immediate, structured SQL queries without manual schema setup.
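The crawler setup above can be sketched as a request payload. The helper below builds the keyword arguments that boto3's Glue `create_crawler` call accepts: a name, an IAM role, a target database, and the S3 path to scan. All names, the role ARN, and the schedule are hypothetical placeholders.

```python
from typing import Optional


def crawler_request(
    name: str,
    role_arn: str,
    database: str,
    s3_path: str,
    schedule: Optional[str] = None,
) -> dict:
    """Build keyword arguments for creating a Glue crawler over one S3 path."""
    request = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }
    if schedule:
        request["Schedule"] = schedule  # cron expression for recurring crawls
    return request


# Hypothetical values, for illustration only.
req = crawler_request(
    "patient-records-crawler",
    "arn:aws:iam::123456789012:role/ExampleGlueCrawlerRole",
    "clinic_analytics",
    "s3://company-data/patient-records/",
)
print(req["Targets"])
```

With boto3, the payload could be passed as `glue.create_crawler(**req)` followed by `glue.start_crawler(Name=req["Name"])`. A schedule is optional; running the crawler on demand is often enough while the dataset layout is still changing.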

4. Access the Amazon Athena console

Once the data is in Amazon S3 and cataloged (if applicable), the team navigates to the Amazon Athena console via the AWS Management Console.

- They configure a query result location, specifying an S3 bucket where Athena will store the output of all executed queries.

- This is a mandatory setup step, as Athena requires an S3 destination for query results before any SQL can be run.

5. Run the first query

With the environment configured, the team can begin querying data:

- In the Athena console, they select the relevant database and table, automatically available if defined in AWS Glue.

- They write a query using standard SQL to analyze data directly from Amazon S3.

- On executing the query via “Run Query,” Athena scans the underlying data and returns the results in the console and the designated S3 output location.
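The same first query can also be submitted programmatically. The sketch below builds the arguments for boto3's Athena `start_query_execution` call, including the mandatory S3 output location. The database, table, column names, and bucket are hypothetical and stand in for whatever the Glue catalog actually contains.

```python
def query_request(database: str, sql: str, output_location: str) -> dict:
    """Build keyword arguments for submitting one Athena query."""
    if not output_location.startswith("s3://"):
        raise ValueError("Athena query results must be written to an S3 location")
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


# Hypothetical table and columns; the WHERE clause filters on partition keys
# so only one month of data is scanned.
SQL = """
SELECT department, COUNT(*) AS encounters
FROM patient_records
WHERE year = '2025' AND month = '07'
GROUP BY department
"""

req = query_request("clinic_analytics", SQL, "s3://example-athena-results/queries/")
print(req["QueryExecutionContext"])
```

With boto3, `athena.start_query_execution(**req)` returns a query execution ID that can be polled with `get_query_execution` until the query finishes.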

6. Review and optimize queries

As the team begins using Athena, it’s important to ensure queries remain efficient and cost-effective:

- Implement partitioning on commonly filtered columns (e.g., date or region) to reduce the amount of data scanned.

- Limit query scope by selecting only necessary columns and applying precise WHERE clauses to minimize data retrieval.

- Use the Athena console to monitor query execution time, data scanned, and associated costs, enabling optimization and proactive cost control.
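To make the link between data scanned and cost concrete, the sketch below estimates the charge for a single query. It assumes a price of $5 per TB scanned, rounding up to the nearest megabyte, and a 10 MB minimum per query; these figures are not stated in this article and vary by Region, so treat them as adjustable assumptions. The function name and the binary definition of a terabyte are also assumptions.

```python
import math

MB = 1024 ** 2
TB = 1024 ** 4


def estimate_query_cost(bytes_scanned: int, price_per_tb: float = 5.0) -> float:
    """Estimate the cost of one query from the bytes it scanned."""
    # Assumption: scanned data is rounded up to whole MB, with a 10 MB minimum.
    billable_mb = max(math.ceil(bytes_scanned / MB), 10)
    return billable_mb * MB / TB * price_per_tb


# Compare a full scan of 200 GB of CSV with a query that reads 10 GB of Parquet columns.
full_scan = estimate_query_cost(200 * 1024 ** 3)
pruned = estimate_query_cost(10 * 1024 ** 3)
print(f"full scan: ${full_scan:.4f}, pruned: ${pruned:.4f}")
```

Feeding the "data scanned" figure from the Athena console into a calculation like this makes it easier to see which queries benefit most from partitioning or columnar formats.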

7. Explore advanced features (optional)

Once the foundational setup is complete, SMBs can begin using Amazon Athena’s more advanced capabilities:

- Optimize with efficient data formats: Use columnar formats like Parquet or ORC to reduce scan size and improve query performance for large datasets.

- Visualize with Amazon QuickSight: Connect Athena to Amazon QuickSight for interactive dashboards and business intelligence without moving data.

- Run federated queries: Extend Athena’s reach by querying external data sources, such as MySQL, PostgreSQL, or on-prem databases, via federated connectors and AWS Lambda.

These features allow teams to scale their analytics capabilities while keeping operational overhead low.

Practical use cases for Amazon Athena in business operations

Amazon Athena is more than a business tool. It is a practical engine for answering real business questions directly from raw data in S3, without the delays of setting up infrastructure or ETL pipelines.

For SMBs, it offers a fast path from storage to insight, whether you’re analyzing patient records in healthcare, transaction logs in retail, or system logs in SaaS platforms. Because it speaks standard SQL and integrates natively with AWS Glue and QuickSight, teams can start querying within minutes, with no heavyweight setup, and no data wrangling bottlenecks.

1. Log analysis and monitoring

Amazon Athena is ideal for analyzing server logs and application logs stored in Amazon S3. It allows businesses to run interactive queries on logs as soon as they land in S3, without needing to set up complex infrastructure.

Example: A retail SMB collects logs from its web servers, tracking user activity and transaction details. Using Athena, the business can query logs to identify trends such as peak shopping hours or common search queries. This data helps the company optimize its website for better user engagement and targeted promotions.

2. Data warehousing and business intelligence

Amazon Athena can be used as a lightweight data warehousing solution, particularly for SMBs that do not require the heavy infrastructure of a full-scale data warehouse. It integrates easily with Amazon QuickSight to deliver business intelligence (BI) dashboards and reports.

Example: A small e-commerce business uses Athena to run SQL queries on sales data stored in Amazon S3. The results are then visualized in Amazon QuickSight to track key performance indicators (KPIs), such as daily sales, revenue per product, and customer acquisition rates, allowing the business to make data-driven decisions.

3. Ad-hoc data queries

For businesses that need to quickly analyze large datasets without the overhead of setting up and maintaining a dedicated analytics system, Athena provides an efficient and cost-effective solution.

Example: A small marketing firm has large datasets containing customer demographics and campaign performance metrics. With Athena, the firm can run ad-hoc queries to analyze customer behavior and assess the effectiveness of different marketing campaigns in real-time, without needing a permanent infrastructure.

4. Clickstream analysis

Athena is a popular tool for analyzing clickstream data, which helps businesses understand user behavior on websites or apps. By querying clickstream data in real-time, companies can optimize user experiences and improve conversion rates.

Example: A media company collects clickstream data that tracks how users interact with its online content. Using Athena, the company can quickly query this data to identify which articles or videos are most popular, what content leads to longer engagement, and where users drop off. This allows the company to tailor content strategies to improve user retention.

5. Data archiving and backup analysis

Businesses often archive large amounts of historical data that may not be frequently accessed but still need to be searchable. Athena makes it easy to query these archives to retrieve specific data when needed.

Example: A healthcare provider stores patient records and historical treatment data in S3. Though this data is rarely accessed, it still needs to be searchable in case of audits or legal requests. Athena allows the provider to run quick queries on archived data, retrieving necessary records without the need for time-consuming data retrieval processes.

Amazon Athena’s versatility makes it an excellent tool for a wide range of use cases, from log analysis to financial reporting. Its serverless nature and cost-effective pricing model ensure that even SMBs with limited technical resources can harness the power of data analytics without the need for complex infrastructure.

How does Cloudtech help SMBs benefit from Amazon Athena?

Deploying Amazon Athena effectively requires the right data architecture, schema management, and cost governance to ensure long-term value. That’s where Cloudtech comes in. As an AWS Advanced Tier Partner focused exclusively on SMBs, Cloudtech helps them harness Athena with a strategic, scalable approach tailored to real business needs.

Here’s how Cloudtech supports Amazon Athena projects:

- Smart data lake setup: Cloudtech builds secure, well-partitioned data lakes on Amazon S3 and integrates AWS Glue for schema discovery, enabling faster, cost-efficient queries with minimal manual setup.

- Query performance optimization: By implementing best practices like columnar formats (Parquet/ORC), partitioning, and bucketing strategies, Cloudtech helps keep Athena queries fast and budget-aware.

- Secure, unified data access: Cloudtech configures federated queries across Amazon S3, on-prem, and third-party sources while enforcing fine-grained IAM policies, so teams can query broadly without compromising security.

- Insights without the overhead: Cloudtech connects Athena to Amazon QuickSight or other BI tools, enabling business teams to explore data visually, without needing SQL or data engineering support.

Whether it is a healthcare provider analyzing claims data or a fintech firm building on-demand dashboards, Cloudtech ensures that Amazon Athena is implemented not just as a tool, but as a foundation for faster, smarter, and more secure decision-making.

Conclusion

Amazon Athena offers SMBs a powerful, serverless solution to analyze data directly from Amazon S3 using standard SQL, eliminating infrastructure complexities and reducing costs. Its fast performance and seamless integration with AWS services enable businesses to gain timely insights and make data-driven decisions efficiently. By following simple setup steps, SMBs can leverage Athena for diverse use cases, from log analysis to business intelligence, enhancing operational agility and scalability.

Cloudtech’s expertise in AWS infrastructure optimization and cloud modernization complements this approach by helping businesses streamline operations and maximize cloud investments.

Talk to our cloud experts or reach us at (332) 222 7090.

FAQs

1. How can Amazon Athena help small and midsize businesses reduce their data analytics costs?

Amazon Athena’s serverless, pay-per-query pricing model means SMBs only pay for the data they actually scan, eliminating upfront infrastructure costs and allowing flexible, cost-efficient analytics that scale with business needs.

2. What are the key steps for SMBs to start using Athena without a large IT team?

SMBs can quickly begin by setting up an AWS account, creating an S3 bucket for data storage, configuring IAM permissions, and using the Athena console or AWS Glue Data Catalog to define schemas and run SQL queries, all without managing servers.

3. What types of data formats does Amazon Athena support for querying?

Athena supports a wide range of data formats including CSV, JSON, Apache Parquet, ORC, and Avro, enabling flexible querying of structured, semi-structured, and unstructured data directly in Amazon S3.

4. Can Athena be integrated with other AWS services for enhanced analytics?

Yes, Athena integrates seamlessly with AWS Glue for metadata management, Amazon QuickSight for data visualization, and supports federated queries to access data across multiple sources, enhancing overall analytics capabilities.

5. How does Cloudtech assist SMBs in maximizing their Amazon Athena deployment?

Cloudtech offers cloud modernization services including AWS infrastructure optimization, data management, and application modernization, helping businesses streamline Athena usage, improve scalability, enhance security, and control costs effectively.

An AWS cybersecurity guide for SMBs in 2025

Cyberattacks are getting more frequent and more expensive. IBM’s most recent cloud security study reports that the average cost of a data breach has reached USD 4.35 million. In response, over half of global organizations are planning to boost investments in threat detection, incident response, and cloud security tools throughout 2025.

For small and mid-sized businesses (SMBs) in sectors like healthcare and fintech, the stakes are even higher. All it takes is one misconfigured access policy or exposed resource to trigger a costly security incident or regulatory fallout.

The good news is that AWS provides a flexible foundation for implementing strong, scalable defenses if the right controls are in place. That’s why more growth-focused SMBs are working with AWS partners like Cloudtech to build secure, compliant environments from the ground up.

This article walks through the most important AWS cloud security practices for 2025, and how to implement them in a way that aligns with your risk profile, industry standards, and long-term goals.

Key takeaways:

- Security is not a one-time setup; it’s a continuous discipline: AWS provides the tools, but SMBs must define clear strategies and enforce controls as environments evolve.

- Misconfigurations are the biggest threat, and the easiest to prevent: With IAM policies, automated monitoring, and least privilege access, most breaches can be avoided before they happen.

- Smart automation is force multiplication for small teams: Tools like AWS Config, Amazon GuardDuty, and AWS Systems Manager help SMBs scale security efforts without scaling headcount.

- Cloud-native security doesn’t mean complexity; it means control: When implemented correctly, AWS makes it easier for SMBs to enforce governance, meet compliance, and respond quickly to threats.

Why do SMBs need a security-first AWS strategy?

AWS operates on a shared responsibility model, meaning Amazon secures the infrastructure, while customers are accountable for securing their data, configurations, and access policies.

Without a clear, well-documented strategy, SMBs often face preventable risks like open Amazon S3 buckets, over-permissive IAM roles, or outdated security groups. These gaps aren’t just technical oversights. They’re business liabilities that can lead to data breaches, compliance violations, and lost customer trust.

For industries like healthcare and fintech, the pressure is even greater. Regulations such as HIPAA and PCI-DSS require strict controls over data access, encryption, and audit trails. Falling short, even unintentionally, can result in heavy fines and reputational damage.

Establishing cloud security best practices from the start helps SMBs:

- Stay compliant with evolving industry regulations

- Reduce the risk of misconfiguration and unauthorized access

- Gain visibility into their cloud environment through monitoring and alerting

- Build a strong foundation that scales with business growth

For SMBs with limited IT teams or expertise, AWS partners like Cloudtech can help implement the necessary AWS cybersecurity strategies in a way that fits their team size, risk profile, and industry requirements.

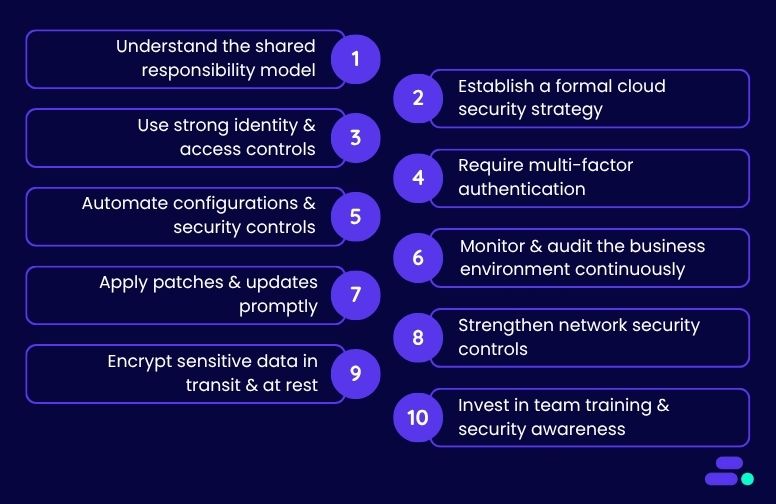

How can SMBs implement a security-first AWS strategy?

Unlike large enterprises, most SMBs don’t have the luxury of dedicated security teams or redundant systems. They move fast, manage lean teams, and prioritize innovation, especially in sectors like healthcare and fintech where demand is high and competition is fierce. Cloud platforms like AWS help level the playing field, but only if security keeps pace with growth.

In the early stages, it’s natural to focus on performance and delivery. But as environments expand with new workloads, more users, and tighter compliance expectations, security gaps can quietly emerge. Maybe a former contractor still has admin access. Maybe a key data store isn’t encrypted. These are common side effects of scaling without a structured security plan. And when audits or incidents do arise, they tend to hit smaller businesses harder.

A security-first strategy helps SMBs get ahead of these risks. It brings clarity to what needs protecting, who needs access, and how to automate guardrails that support rather than slow down the business.

The following step-by-step strategy outlines how SMBs can achieve this using AWS-native tools, without overextending their teams or budgets.

1. Understand the shared responsibility model

Security in the cloud is a shared model. AWS secures the infrastructure of the cloud, while businesses are responsible for what they put in it. That includes user access, data classification, encryption settings, compliance configurations, and software-level protections. For SMBs in fintech and healthcare, this model directly impacts how they approach risk management, incident response, and audit readiness.

Real-world scenario: A healthcare startup launches a patient portal on Amazon EC2, assuming AWS manages encryption and access controls by default. However, without explicitly configuring IAM policies, enabling encryption at rest for EBS volumes, or setting up AWS CloudTrail for audit logs, the environment remains exposed. That gap can put the company in violation of HIPAA and risks reputational damage in the event of a breach.

To operationalize the shared responsibility model:

- Map responsibilities by service: Use the AWS Shared Responsibility Model and align it with business architecture. For example, AWS manages Amazon RDS’s physical infrastructure. The SMB must enable encryption, rotate keys, and configure IAM policies for database access.

- Assign internal ownership: Create a responsibility matrix that maps each AWS service (e.g., Amazon S3, Amazon EC2, Amazon RDS, AWS Lambda) to a specific team or role, such as DevOps, compliance, or security engineering. This prevents accountability gaps.

- Standardize configuration baselines: Use AWS Config rules and AWS CloudFormation templates to enforce consistent security controls across environments (e.g., block public Amazon S3 buckets, enforce MFA for IAM users).

- Review regularly: As the stack evolves, review ownership and configuration at least quarterly to ensure no drift has occurred, especially after team changes, rapid scaling, or vendor integrations.

Understanding this model ensures that no one assumes AWS “has it covered” when, in reality, critical responsibilities like encryption, access control, and compliance reporting rest squarely on the business side of the fence.
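The responsibility matrix described above can start as a simple data structure that is checked for gaps. The following is a minimal sketch, assuming hypothetical team names and control labels; none of them are prescribed by AWS, and a real matrix would list far more controls per service.

```python
# Hypothetical responsibility matrix: service -> control -> owning team.
# Team names and control labels are illustrative assumptions.
RESPONSIBILITY_MATRIX = {
    "Amazon S3": {"block_public_access": "DevOps", "encryption": "Security"},
    "Amazon EC2": {"patching": "DevOps", "ebs_encryption": None},
    "Amazon RDS": {"encryption": "Security", "iam_db_access": "Compliance"},
    "AWS Lambda": {"execution_role_review": None},
}


def find_unowned_controls(matrix):
    """Return sorted (service, control) pairs that have no assigned owner."""
    return sorted(
        (service, control)
        for service, controls in matrix.items()
        for control, owner in controls.items()
        if not owner
    )


if __name__ == "__main__":
    for service, control in find_unowned_controls(RESPONSIBILITY_MATRIX):
        print(f"No owner assigned: {service} / {control}")
```

Running a check like this as part of the quarterly review makes accountability gaps visible before an audit does.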

2. Establish a formal cloud security strategy

Once the lines of responsibility are clear, the next priority is developing a formal cloud security strategy. Many SMBs, especially in regulated sectors like healthcare and fintech, spin up AWS environments quickly to meet demand, but without a guiding framework, risk and compliance gaps grow unnoticed.

A security strategy ensures consistency and accountability across teams, especially as infrastructure scales or regulatory pressure increases.

At a minimum, the strategy should define:

- Risk tolerance: Clarify how much risk the business can accept when choosing between speed, cost, and control. This informs architecture and vendor decisions.

- Compliance alignment: Map applicable standards like HIPAA, PCI-DSS, or SOC 2 to AWS configurations. For example, enable Amazon S3 encryption, enforce logging, and use private subnets for sensitive workloads.

- Team roles and ownership: Assign responsibility for identity, monitoring, key management, and remediation to avoid gaps during day-to-day ops or audits.

- Incident response workflows: Document how the team will detect and respond to threats, using AWS-native tools like Amazon GuardDuty, AWS CloudTrail, and AWS Systems Manager.

This document doesn’t need to be perfect from day one, but it does need to exist and evolve over time. Treat it as the team’s north star for scaling securely and making decisions that stand up to audits, not just deadlines.

3. Use strong identity and access controls

With a strategy in place, the next layer is precise access control. Identity and access missteps, especially overly broad permissions, are among the top causes of cloud breaches in SMB environments.

Using AWS Identity and Access Management (IAM), teams can enforce least-privilege access by:

- Defining role-based policies that grant only what’s needed per function. For example, a billing user shouldn’t have access to EC2 instances, and dev teams shouldn’t see production secrets.

- Disabling root account use for anything beyond initial setup. Instead, use federated identities or IAM roles with MFA.

- Eliminating long-lived credentials wherever possible and rotating any access keys that must remain. Use AWS IAM Identity Center (formerly AWS SSO) for session-based logins tied to the identity provider (e.g., Okta, Azure AD).

A healthcare SMB using shared admin credentials across AWS services may be compliant on paper but vulnerable in practice. Granular IAM policies, tied to monitored roles, help contain blast radius and support incident forensics if something goes wrong.
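To make least privilege concrete, the sketch below builds a narrowly scoped IAM policy document and runs a simple heuristic that flags wildcard grants. The policy JSON structure follows the standard IAM format; the bucket name, the `Sid` values, and the `overly_broad_statements` helper are hypothetical and exist only for illustration. The heuristic is deliberately crude: some read-only actions legitimately require `"Resource": "*"`, so treat flagged statements as items to review, not as proven violations.

```python
import json

# Hypothetical read-only policy for a billing role; the bucket name is a placeholder.
BILLING_READONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadBillingReports",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-billing-reports",
                "arn:aws:s3:::example-billing-reports/*",
            ],
        }
    ],
}

# The kind of statement least privilege is meant to rule out.
ADMIN_LIKE_POLICY = {
    "Version": "2012-10-17",
    "Statement": {"Sid": "FullAdmin", "Effect": "Allow", "Action": "*", "Resource": "*"},
}


def _as_list(value):
    """IAM accepts either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def overly_broad_statements(policy):
    """Return the Sids of Allow statements that use wildcard actions or resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear without a list
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action or "*" in resources:
            flagged.append(stmt.get("Sid", "<no Sid>"))
    return flagged


if __name__ == "__main__":
    print(json.dumps(BILLING_READONLY_POLICY, indent=2))
    print("Needs review:", overly_broad_statements(ADMIN_LIKE_POLICY))
```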

4. Require multi-factor authentication (MFA)

Even with tight IAM controls, stolen or reused credentials remain a top threat, especially in environments where small teams juggle multiple roles. That’s where multi-factor authentication (MFA) becomes critical.

MFA ensures that access requires something more than just a password. It should be non-negotiable for:

- Root users

- Admin and DevOps roles

- Any user interacting with production data or infrastructure

AWS makes implementation straightforward through virtual MFA apps (like Google Authenticator or Authy), hardware tokens, or integration with SSO platforms that support MFA enforcement.

For an SMB handling patient records or financial data, a single compromised credential without MFA could expose the entire AWS account. Enforcing MFA minimizes this risk and adds resilience against phishing, social engineering, or credential stuffing attempts.
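One common way to enforce MFA for IAM users is a deny statement keyed on the `aws:MultiFactorAuthPresent` condition. The sketch below generates such a policy document; it is modeled on the pattern AWS documents for self-managed MFA, but the function name and the exact exemption list are assumptions to adapt before use. Note that IAM policies do not apply to the root user, whose MFA device is configured separately in the account's security settings.

```python
import json

# Actions a user still needs in order to enroll an MFA device and start an
# MFA-authenticated session. The exact list is an assumption; adjust as needed.
MFA_SETUP_ACTIONS = {
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
}


def build_require_mfa_policy(exempt_actions=MFA_SETUP_ACTIONS):
    """Return a policy that denies everything except MFA setup when MFA is absent."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptListedIfNoMFA",
                "Effect": "Deny",
                "NotAction": sorted(exempt_actions),
                "Resource": "*",
                # BoolIfExists also matches requests where the key is missing,
                # such as calls made with long-term access keys.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_require_mfa_policy(), indent=2))
```

Attaching a policy like this to a group that contains all human users keeps the requirement in one place instead of scattering it across individual users.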

5. Automate configurations and security controls

Once identity and access layers are secured, the next priority is eliminating manual configuration drift.

For SMBs, especially those with lean IT teams, automation isn’t just a time-saver. It’s a security multiplier. By using AWS-native tools, teams can standardize deployments, enforce guardrails, and respond to threats without delay.

Key tools and approaches include:

- AWS Config for continuous compliance checks against custom or managed rules, flagging issues like open ports or unencrypted volumes shortly after a configuration change is recorded.

- AWS CloudFormation or Terraform to provision infrastructure in a secure, repeatable way, so dev teams don’t accidentally deploy with misconfigured security groups or public access.

- AWS Systems Manager and Lambda to automate patching, log reviews, and even auto-remediation for policy violations or failed audits.

In healthcare, for example, automation can help enforce HIPAA-aligned encryption and retention policies across resources. For fintech, it supports audit-readiness and reduces reliance on ad hoc scripts or manual checklists.
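Conceptually, a configuration rule compares what is deployed against a declared baseline and reports the differences. The sketch below shows that comparison in plain Python. It does not call AWS Config; the setting names and the `observed` snapshot are hypothetical stand-ins for data that a tool such as AWS Config would collect.

```python
# Hypothetical security baseline: setting name -> required value.
BASELINE = {
    "s3_block_public_access": True,
    "ebs_encryption_by_default": True,
    "cloudtrail_enabled": True,
}


def detect_drift(baseline, observed):
    """Return {setting: (expected, actual)} for every setting that deviates.

    A setting that is missing from the observed snapshot is reported with an
    actual value of None, since an unknown state should not pass silently.
    """
    return {
        setting: (expected, observed.get(setting))
        for setting, expected in baseline.items()
        if observed.get(setting) != expected
    }


if __name__ == "__main__":
    # Illustrative snapshot of one account; values are made up for the example.
    observed = {"s3_block_public_access": True, "ebs_encryption_by_default": False}
    for setting, (expected, actual) in detect_drift(BASELINE, observed).items():
        print(f"DRIFT {setting}: expected {expected}, found {actual}")
```

Keeping the baseline in version control alongside the infrastructure templates means that changes to the security posture go through the same review as any other change.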

6. Monitor and audit the business environment continuously

Even well-automated environments need constant visibility. Threats evolve, workloads shift, and misconfigurations can creep in. For SMBs in sectors like healthcare and fintech, where downtime or data exposure can have legal and reputational consequences, real-time monitoring and auditing aren’t optional.

Fortunately, AWS offers integrated tools that make continuous oversight both achievable and scalable:

- Amazon GuardDuty delivers intelligent threat detection using machine learning to identify unusual patterns like port scanning, credential compromise, or data exfiltration attempts.

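- AWS CloudTrail provides a detailed audit log of API activity across the account, giving the team traceability for changes, which is critical for root cause analysis or compliance investigations. Management events are logged by default; data events such as Amazon S3 object-level access must be enabled separately.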

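- AWS Security Hub aggregates findings across AWS services, offering a unified dashboard to check alignment with standards like the CIS AWS Foundations Benchmark, AWS Foundational Security Best Practices, or PCI DSS. HIPAA-oriented checks are available separately through AWS Config conformance packs.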

To stay effective, alerts must go beyond dashboards. Set up automated notifications for critical anomalies and establish ownership for reviewing, escalating, and responding to alerts. Small teams can also use third-party SIEM integrations or managed detection and response (MDR) services to extend coverage.
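Alert ownership is easier to enforce when routing is written down as code. The sketch below triages an Amazon GuardDuty finding as it might arrive through Amazon EventBridge. The severity bands reflect GuardDuty's documented ranges as understood at the time of writing and should be verified against current documentation; the route names and the sample event are hypothetical.

```python
# Hypothetical destinations; replace with the team's real channels.
ROUTES = {
    "CRITICAL": "page-on-call",
    "HIGH": "page-on-call",
    "MEDIUM": "ticket-queue",
    "LOW": "weekly-review",
}


def severity_label(severity):
    """Map a numeric GuardDuty severity to a label (assumed bands, verify in docs)."""
    if severity >= 9.0:
        return "CRITICAL"
    if severity >= 7.0:
        return "HIGH"
    if severity >= 4.0:
        return "MEDIUM"
    return "LOW"


def route_finding(event):
    """Return routing info for a GuardDuty event, or None for other sources."""
    if event.get("source") != "aws.guardduty":
        return None
    detail = event.get("detail", {})
    label = severity_label(float(detail.get("severity", 0)))
    return {
        "finding_type": detail.get("type"),
        "severity": label,
        "route": ROUTES[label],
    }


if __name__ == "__main__":
    # Illustrative event, trimmed to the fields this sketch reads.
    sample_event = {
        "source": "aws.guardduty",
        "detail-type": "GuardDuty Finding",
        "detail": {"type": "UnauthorizedAccess:EC2/SSHBruteForce", "severity": 8},
    }
    print(route_finding(sample_event))
```

In practice a function like this would sit behind an EventBridge rule and hand off to a notification service, so that every severity level has a named owner and a defined response time.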

7. Apply patches and updates promptly

Security monitoring is only useful if it’s followed by action, and patching is one of the most critical. Unpatched systems remain one of the top causes of cloud breaches, especially when vulnerabilities are publicly known and easily exploitable.

For SMBs, falling behind on updates is often a bandwidth issue. But AWS provides tools to automate and streamline the process without disrupting operations:

- AWS Systems Manager Patch Manager allows teams to automate patching schedules for Amazon EC2 instances and on-premises servers in hybrid environments.

- Compliance dashboards on AWS Systems Manager help track which systems are up to date and which require immediate attention.

- Maintenance windows on AWS Systems Manager can help apply patches during off-peak hours, minimizing service interruptions.

Make patching a priority for public-facing services, applications handling sensitive data, and any system tied to regulatory compliance. In healthcare and fintech, a missed patch could trigger an audit failure or data breach liability.
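The prioritization above can be expressed as a simple ordering over an inventory. This is a minimal sketch, assuming a hypothetical `Host` record and an equal-weight scoring scheme; a real inventory would come from a tool such as AWS Systems Manager, and the weights would reflect the team's own risk model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    """Hypothetical inventory record; field names are illustrative."""
    name: str
    public_facing: bool
    handles_sensitive_data: bool
    in_compliance_scope: bool
    missing_patches: int


def patch_priority(host):
    """Score a host by how many of the three risk criteria it meets (0 to 3)."""
    return (
        int(host.public_facing)
        + int(host.handles_sensitive_data)
        + int(host.in_compliance_scope)
    )


def patch_order(hosts):
    """Return hosts with pending patches, highest risk first."""
    pending = [h for h in hosts if h.missing_patches > 0]
    return sorted(
        pending,
        key=lambda h: (-patch_priority(h), -h.missing_patches, h.name),
    )


if __name__ == "__main__":
    fleet = [
        Host("build-agent", False, False, False, 10),
        Host("patient-portal", True, True, True, 3),
        Host("ledger-db", False, True, True, 1),
        Host("wiki", True, False, False, 0),
    ]
    for host in patch_order(fleet):
        print(host.name, patch_priority(host), host.missing_patches)
```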

8. Strengthen network security controls

Once the workloads are patched and up to date, the next layer of defense is the network itself. Poorly configured network settings, like open ports or flat VPC architectures, can expose sensitive data to the internet or allow lateral movement if compromised.

To reduce the attack surface and enforce segmentation:

- Use Security Groups and Network ACLs to control inbound/outbound traffic at both the instance and subnet levels

- Use VPC endpoints instead of public IPs to reach AWS services, keeping that traffic inside the AWS network

- Deploy AWS WAF (Web Application Firewall) to block injection attacks, cross-site scripting (XSS), and other Layer 7 exploits

- Avoid exposing SSH (port 22) or RDP (port 3389) to 0.0.0.0/0, and use bastion hosts or Session Manager instead

For SMBs in healthcare or fintech, these network controls aren’t just good practice. They’re often required for regulatory compliance. Proactively auditing firewall rules, restricting access by IP or geography, and isolating workloads by function can significantly reduce the blast radius of any incident.
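Auditing firewall rules can be partly automated. The sketch below scans a security group for SSH or RDP exposed to the whole internet. The input dictionary follows the shape of the EC2 `DescribeSecurityGroups` response (`IpPermissions`, `IpRanges`, `Ipv6Ranges`); the helper name and the sample group are hypothetical, and fetching the data from AWS is left out so the example stays self-contained.

```python
ADMIN_PORTS = {22: "SSH", 3389: "RDP"}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def open_admin_ports(security_group):
    """Return (group_id, service) pairs for admin ports open to any address."""
    findings = []
    group_id = security_group.get("GroupId")
    for perm in security_group.get("IpPermissions", []):
        protocol = perm.get("IpProtocol")
        if protocol not in ("tcp", "-1"):  # "-1" means all protocols
            continue
        cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
        cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
        if not OPEN_CIDRS.intersection(cidrs):
            continue
        if protocol == "-1":
            exposed = sorted(ADMIN_PORTS)  # all ports are reachable
        else:
            low, high = perm.get("FromPort"), perm.get("ToPort")
            if low is None or high is None:
                continue
            exposed = [p for p in sorted(ADMIN_PORTS) if low <= p <= high]
        findings.extend((group_id, ADMIN_PORTS[p]) for p in exposed)
    return findings


if __name__ == "__main__":
    # Illustrative group; the ID is a placeholder.
    sample_group = {
        "GroupId": "sg-0123456789abcdef0",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
            {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
        ],
    }
    print(open_admin_ports(sample_group))
```

A port range that merely contains 22 or 3389 (for example 0 to 65535) is flagged as well, since the effect on exposure is the same.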

9. Encrypt sensitive data in transit and at rest

Encryption is a baseline requirement, not a bonus, especially for SMBs in healthcare or fintech where data privacy and security requirements like HIPAA, PCI-DSS, or GLBA are non-negotiable. AWS makes encryption accessible, but it’s up to the team to configure and manage it correctly.

For data at rest, enable:

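- SSE-S3 or SSE-KMS for server-side encryption in Amazon S3 (new objects are encrypted with SSE-S3 by default; SSE-KMS adds key-level access control)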

- EBS encryption for volumes attached to EC2 instances

- RDS encryption for managed databases, ideally using customer managed keys in AWS Key Management Service (KMS)

For data in transit, always:

- Use TLS 1.2 or higher

- Manage certificates through AWS Certificate Manager (ACM) for secure HTTPS connections

- Avoid sending sensitive data over unencrypted channels, even between internal systems

Don’t stop at enabling encryption. Also:

- Define key rotation schedules

- Enforce least-privilege policies for key access

- Monitor access logs for unusual activity around encryption resources

For SMBs, this ensures that even if other defenses fail, the most sensitive data remains unreadable and auditable, helping businesses stay compliant and protected at scale.
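For encryption in transit, an Amazon S3 bucket policy can reject any request that does not arrive over TLS. The sketch below generates such a policy using the `aws:SecureTransport` and `s3:TlsVersion` condition keys. The function name and bucket name are hypothetical, and the policy should be tested against a non-production bucket before rollout, since a deny statement with `"Principal": "*"` affects every caller.

```python
import json


def require_tls_bucket_policy(bucket_name, min_tls_version=1.2):
    """Return a bucket policy that denies plain HTTP and older TLS versions."""
    resources = [
        f"arn:aws:s3:::{bucket_name}",
        f"arn:aws:s3:::{bucket_name}/*",
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                "Sid": "DenyOldTlsVersions",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                "Condition": {
                    "NumericLessThan": {"s3:TlsVersion": min_tls_version}
                },
            },
        ],
    }


if __name__ == "__main__":
    # "example-patient-records" is a placeholder bucket name.
    print(json.dumps(require_tls_bucket_policy("example-patient-records"), indent=2))
```

Both statements cover the bucket ARN and the object ARN pattern, because bucket-level and object-level operations are evaluated against different resources.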

10. Invest in team training and security awareness

Technology alone doesn’t secure the cloud; people do. For SMBs, especially in high-stakes industries like healthcare and fintech, the weakest link is often user behavior, not tooling. A well-configured AWS environment can still be compromised if employees fall for phishing attempts or misconfigure permissions.

To build a security-first culture: