Amazon Redshift: a comprehensive guide

From sales transactions to operational logs, businesses now handle millions of data points daily. Yet when it’s time to pull insights, most find their traditional databases too slow, rigid, or costly for complex analytics.

Without scalable infrastructure, even basic reporting turns into a bottleneck. SMBs often operate with lean teams, limited budgets, and rising compliance demands, leaving little room for overengineered systems or extended deployment cycles.

Amazon Redshift from AWS changes that. As a fully managed cloud data warehouse, it enables businesses to query large volumes of structured and semi-structured data quickly without the need to build or maintain underlying infrastructure. Its decoupled architecture, automated tuning, and built-in security make it ideal for SMBs looking to modernize fast.

This guide breaks down how Amazon Redshift works, how it scales, and why it’s become a go-to analytics engine for SMBs that want enterprise-grade performance without the complexity.

Key Takeaways

- End-to-end analytics without infrastructure burden: Amazon Redshift eliminates the need for manual cluster management and scales compute and storage independently, making it ideal for growing teams with limited technical overhead.

- Built-in cost efficiency: With serverless billing, reserved pricing, and automatic concurrency scaling, Amazon Redshift enables businesses to control costs without compromising performance.

- Security built for compliance-heavy industries: Data encryption, IAM-based access control, private VPC deployment, and audit logging provide the safeguards required for finance, healthcare, and other regulated environments.

- AWS ecosystem support: Amazon Redshift integrates with Amazon S3, Kinesis, Glue, and other AWS services, making it easier to build real-time or batch data pipelines without requiring additional infrastructure layers.

- Faster rollout with Cloudtech: Cloudtech’s AWS-certified experts help SMBs deploy Amazon Redshift with confidence, handling everything from setup and tuning to long-term optimization and support.

What is Amazon Redshift?

Amazon Redshift is built to support analytical workloads that demand high concurrency, low-latency queries, and scalable performance. It processes both structured and semi-structured data using a columnar storage engine and a massively parallel processing (MPP) architecture, making it ideal for businesses, especially SMBs, that handle fast-growing datasets.

It separates compute and storage layers, allowing organizations to scale each independently based on workload requirements and cost efficiency. This decoupled design supports a range of analytics, from ad hoc dashboards to complex modeling, without burdening teams with the maintenance of infrastructure.

Core capabilities and features of Amazon Redshift

Amazon Redshift combines a high-performance architecture with intelligent automation to support complex analytics at scale, without the burden of manual infrastructure management. From optimized storage to advanced query handling, it equips SMBs with tools to turn growing datasets into business insights.

1. Optimized architecture for analytics

Amazon Redshift stores data in a columnar format, minimizing I/O and reducing disk usage through compression algorithms like LZO, ZSTD, and AZ64. Its Massively Parallel Processing (MPP) engine distributes workloads across compute nodes, enabling horizontal scalability for large datasets. The SQL-based interface supports PostgreSQL-compatible JDBC and ODBC drivers, making it easy to integrate with existing BI tools.
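
As a rough illustration of the columnar and MPP settings described above, the sketch below builds the DDL for a hypothetical `sales_fact` table. The table name, its columns, and the choice of `customer_id` as the distribution key are assumptions made for the example. The parts that matter are the `ENCODE`, `DISTKEY`, and `SORTKEY` clauses.

```python
def build_fact_table_ddl(table: str = "sales_fact") -> str:
    """Return CREATE TABLE DDL with per-column compression, a distribution key, and a sort key.

    The table and column names are hypothetical placeholders.
    """
    return f"""
CREATE TABLE {table} (
    sale_id      BIGINT        ENCODE az64,  -- AZ64 suits numeric and date types
    customer_id  BIGINT        ENCODE az64,
    sale_date    DATE          ENCODE az64,
    region       VARCHAR(32)   ENCODE zstd,  -- ZSTD works for character data
    amount       DECIMAL(12,2) ENCODE az64
)
DISTSTYLE KEY
DISTKEY (customer_id)   -- rows with the same customer land on the same slice
SORTKEY (sale_date);    -- range filters on date can skip blocks
""".strip()


if __name__ == "__main__":
    print(build_fact_table_ddl())
```

In practice, many teams leave these choices to Redshift's automatic table optimization and only set them explicitly for tables with well-understood access patterns.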

2. Machine learning–driven performance

The service continuously monitors historical query patterns to optimize execution plans. It can automatically adjust distribution keys, sort keys, and compression encodings, which greatly reduces the need for manual tuning. Result caching, intelligent join strategies, and materialized views further improve query speed.

3. Serverless advantages for dynamic workloads

Amazon Redshift Serverless provisions and scales compute automatically based on workload demand. With no clusters to manage, businesses benefit from minimal administration, fast start-up via Amazon Redshift Query Editor v2, and cost efficiency through pay-per-use pricing, with no compute charges while the warehouse is idle.

4. Advanced query capabilities across sources

Amazon Redshift supports federated queries that join live data from Amazon RDS and Amazon Aurora databases (PostgreSQL and MySQL) without moving it. Amazon Redshift Spectrum extends this with the ability to query exabytes of data in Amazon S3 using standard SQL, reducing cluster load. Cross-database queries simplify analysis across schemas, and materialized views ensure fast response for repeated metrics.
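
To make the two external query paths more concrete, here is a minimal sketch that generates `CREATE EXTERNAL SCHEMA` statements: one backed by the AWS Glue Data Catalog for Spectrum, and one pointing at a PostgreSQL-compatible source for federated queries. All schema names, hostnames, and ARNs are hypothetical placeholders passed in by the caller.

```python
def spectrum_schema_sql(schema: str, glue_database: str, iam_role_arn: str) -> str:
    """DDL for an external schema over S3 data registered in the Glue Data Catalog."""
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema}\n"
        f"FROM DATA CATALOG DATABASE '{glue_database}'\n"
        f"IAM_ROLE '{iam_role_arn}';"
    )


def federated_postgres_schema_sql(
    schema: str, database: str, host: str, iam_role_arn: str, secret_arn: str, port: int = 5432
) -> str:
    """DDL for a federated schema over an RDS or Aurora PostgreSQL database.

    Credentials are read from the Secrets Manager secret named by secret_arn.
    """
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema}\n"
        f"FROM POSTGRES DATABASE '{database}' SCHEMA 'public'\n"
        f"URI '{host}' PORT {port}\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"SECRET_ARN '{secret_arn}';"
    )
```

Once both schemas exist, a single query can join local tables with `schema.table` references from either source, subject to the permissions granted by the IAM role.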

5. Performance at scale

To maintain responsiveness under load, Amazon Redshift includes concurrency scaling, which provisions temporary clusters when query queues spike. Workload management assigns priorities and resource limits to users and applications, ensuring a fair distribution of resources. Built-in optimization engines maintain consistent performance as usage increases.

Amazon Redshift setup and deployment process

Successfully deploying Amazon Redshift begins with careful preparation of AWS infrastructure and security settings. These foundational steps ensure that the data warehouse operates securely, performs reliably, and integrates well with existing environments.

The process involves configuring identity and access management, network architecture, selecting the appropriate deployment model, and completing critical post-deployment tasks.

1. Security and network prerequisites for Amazon Redshift deployment

Before provisioning clusters or serverless workgroups, organizations must establish the proper security and networking foundation. This involves setting permissions, preparing network isolation, and defining security controls necessary for protected and compliant operations.

- IAM configuration: Assign IAM roles with sufficient permissions to manage Amazon Redshift resources. The AmazonRedshiftFullAccess managed policy covers cluster creation, database administration, and snapshots. For granular control, use custom IAM policies with resource-based conditions to restrict access by cluster, database, or action.

- VPC network setup: Deploy Amazon Redshift clusters within dedicated subnets in a VPC spanning multiple Availability Zones (AZs) for high availability. Attach security groups that enforce strict inbound/outbound rules to control communication and isolate the environment.

- Security controls: Limit access to Amazon Redshift clusters through network-level restrictions. Inbound traffic on port 5439 (default) must be explicitly allowed only from trusted IPs or CIDR blocks. Outbound rules should permit necessary connections to client apps and related AWS services.
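
The network restriction described above can be sketched as a small helper that builds a security group ingress rule for port 5439. The function name and the refusal to accept `0.0.0.0/0` are choices made for this example, not AWS requirements. The returned structure matches the `IpPermissions` shape that the EC2 `authorize_security_group_ingress` call expects.

```python
import ipaddress

REDSHIFT_PORT = 5439  # default Redshift port


def redshift_ingress_rule(trusted_cidrs, port: int = REDSHIFT_PORT):
    """Build an IpPermissions list that allows TCP on the Redshift port from trusted IPv4 CIDRs."""
    ranges = []
    for cidr in trusted_cidrs:
        network = ipaddress.IPv4Network(cidr)  # raises ValueError on malformed input
        if network.prefixlen == 0:
            raise ValueError("refusing to open the warehouse to 0.0.0.0/0")
        ranges.append({"CidrIp": str(network), "Description": "trusted Redshift client"})
    return [{"IpProtocol": "tcp", "FromPort": port, "ToPort": port, "IpRanges": ranges}]


# Example use (requires boto3 and AWS credentials; the group ID is a placeholder):
#   import boto3
#   boto3.client("ec2").authorize_security_group_ingress(
#       GroupId="sg-0123456789abcdef0",
#       IpPermissions=redshift_ingress_rule(["10.0.0.0/16"]),
#   )
```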

2. Deployment models in Amazon Redshift

Once the security and network prerequisites are in place, organizations can select the deployment model that best suits their operational needs and workload patterns. Amazon Redshift provides two flexible options that differ in management responsibility and scalability:

- Amazon Redshift Serverless: It eliminates infrastructure management by auto-scaling compute based on query demand. Capacity, measured in Amazon Redshift Processing Units (RPUs), adjusts dynamically within configured limits, helping organizations balance performance and cost.

- Provisioned clusters: Designed for predictable workloads, provisioned clusters offer full control over infrastructure. A leader node manages queries, while compute nodes process data in parallel. With RA3 node types, compute and storage scale independently for greater efficiency.
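
The difference between the two models shows up clearly in the parameters each one needs. The sketch below builds request parameters for the boto3 `redshift-serverless` `create_workgroup` call and the `redshift` `create_cluster` call. Identifiers, the `ra3.xlplus` default, and the node count are illustrative assumptions, so check current limits and node types before using them.

```python
def serverless_workgroup_params(workgroup: str, namespace: str, base_rpus: int = 8) -> dict:
    """Parameters for redshift-serverless create_workgroup: capacity is expressed in RPUs."""
    return {
        "workgroupName": workgroup,
        "namespaceName": namespace,
        "baseCapacity": base_rpus,
        "publiclyAccessible": False,
    }


def provisioned_cluster_params(
    cluster_id: str, admin_user: str, admin_password: str,
    node_type: str = "ra3.xlplus", nodes: int = 2,
) -> dict:
    """Parameters for redshift create_cluster: capacity is expressed as node type and count."""
    params = {
        "ClusterIdentifier": cluster_id,
        "NodeType": node_type,
        "MasterUsername": admin_user,
        "MasterUserPassword": admin_password,
        "ClusterType": "multi-node" if nodes > 1 else "single-node",
        "PubliclyAccessible": False,
        "Encrypted": True,
    }
    if nodes > 1:
        params["NumberOfNodes"] = nodes  # only meaningful for multi-node clusters
    return params


# Example use (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("redshift-serverless").create_workgroup(**serverless_workgroup_params("wg", "ns"))
#   boto3.client("redshift").create_cluster(**provisioned_cluster_params("dw", "admin", "..."))
```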

3. Initial configuration tasks for Amazon Redshift

After selecting a deployment model and provisioning resources, several critical configuration steps must be completed to secure, organize, and optimize the Amazon Redshift environment for production use.

- Database setup: Each Amazon Redshift database includes schemas that group tables, views, and other objects. A default PUBLIC schema is provided, but up to 9,900 custom schemas can be created per database. Access is controlled using SQL to manage users, groups, and privileges at the schema and table levels.

- Network security: Updated security group rules take effect immediately. Inbound and outbound traffic permissions must support secure communication with authorized clients and integrated AWS services.

- Backup configuration: Amazon Redshift captures automated, incremental snapshots with configurable retention from 1 to 35 days. Manual snapshots provide fixed restore points before schema changes or key events. Cross-region snapshot copying enables disaster recovery by replicating backups across AWS Regions.

- Parameter management: Cluster parameter groups define settings such as query timeouts, memory use, and connection limits. Custom groups help fine-tune behavior for specific workloads without impacting other Amazon Redshift clusters in the account.

With the foundational setup, deployment model, and initial configuration complete, the focus shifts to how Amazon Redshift is managed in production, enabling efficient scaling, automation, and deeper enterprise integration.

Post-deployment operations and scalability in Amazon Redshift

Amazon Redshift offers flexible deployment options through both graphical interfaces and programmatic tools. Organizations can choose between serverless and provisioned cluster management based on the predictability of their workloads and resource requirements. The service provides comprehensive management capabilities that automate routine operations while maintaining control over critical configuration parameters.

1. Resource provisioning and management

Getting started with Amazon Redshift involves selecting the right provisioning approach. The service supports a range of deployment methods to align with organizational preferences, from point-and-click tools to fully automated DevOps pipelines.

- AWS Management Console: The graphical interface provides step-by-step cluster provisioning with configuration wizards for network settings, security groups, and backup preferences. Organizations can launch clusters within minutes using pre-configured templates for everyday use cases.

- Infrastructure as Code: AWS CloudFormation and Terraform enable automated deployment across environments. Templates define cluster specs, security, and networking to ensure consistent, repeatable setups.

- AWS Command Line Interface: Programmatic cluster management through CLI commands supports automation workflows and integration with existing DevOps pipelines. It offers complete control over cluster lifecycle operations, including creation, modification, and deletion.

- Amazon Redshift API: Direct API access allows integration with enterprise systems for custom automation workflows. HTTPS endpoints and the AWS SDKs enable organizations to embed Amazon Redshift provisioning into broader infrastructure management platforms.
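
For the Infrastructure as Code route, a template can be as small as the sketch below, which assembles a CloudFormation template for one encrypted, private cluster as a Python dictionary. The logical ID `AnalyticsCluster`, the database name, and the seven-day retention are assumptions for illustration. A real template would also reference a subnet group and security groups.

```python
import json


def redshift_cluster_template(node_type: str = "ra3.xlplus", nodes: int = 2) -> dict:
    """Return a minimal CloudFormation template for a multi-node Redshift cluster."""
    if nodes < 2:
        raise ValueError("this sketch only covers multi-node clusters (nodes >= 2)")
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            "AdminUsername": {"Type": "String"},
            "AdminPassword": {"Type": "String", "NoEcho": True},  # hidden in console output
        },
        "Resources": {
            "AnalyticsCluster": {
                "Type": "AWS::Redshift::Cluster",
                "Properties": {
                    "ClusterType": "multi-node",
                    "NodeType": node_type,
                    "NumberOfNodes": nodes,
                    "DBName": "analytics",
                    "MasterUsername": {"Ref": "AdminUsername"},
                    "MasterUserPassword": {"Ref": "AdminPassword"},
                    "Encrypted": True,
                    "PubliclyAccessible": False,
                    "AutomatedSnapshotRetentionPeriod": 7,
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(redshift_cluster_template(), indent=2))
```

Keeping the template in version control gives every environment the same cluster definition, which is the repeatability benefit the list above describes.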

2. Dynamic scaling capabilities for Amazon Redshift workloads

Once deployed, Amazon Redshift adapts to dynamic workloads using several built-in scaling mechanisms. These capabilities help maintain query performance under heavy loads and reduce costs during periods of low activity.

- Concurrency Scaling: Automatically provisions additional compute clusters when query queues exceed thresholds. These temporary clusters process queued queries independently, preventing performance degradation during spikes.

- Elastic Resize: Enables fast adjustment of cluster node count to match changing capacity needs. Organizations can scale up or down within minutes without affecting data integrity and with only a brief interruption to availability.

- Pause and Resume: Provisioned clusters can be suspended during idle periods to save on computing charges. The cluster configuration and data remain intact and are restored immediately upon resumption.

- Scheduled Scaling: Businesses can define policies to scale resources in anticipation of known usage patterns, allowing for more efficient resource allocation. This approach supports cost control and ensures performance during recurring demand cycles.
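
One way to picture pause and resume on a schedule is a small reconciliation routine, sketched below. It assumes a hypothetical policy (weekdays, 07:00 to 20:00) and takes a boto3 `redshift` client as an argument. The `describe_clusters`, `pause_cluster`, and `resume_cluster` calls are the real client methods, while the function names and the schedule are invented for the example.

```python
from datetime import datetime


def desired_state(now: datetime, open_hour: int = 7, close_hour: int = 20) -> str:
    """Return 'available' on weekdays between open_hour and close_hour, otherwise 'paused'."""
    is_weekday = now.weekday() < 5  # Monday is 0
    in_hours = open_hour <= now.hour < close_hour
    return "available" if is_weekday and in_hours else "paused"


def reconcile(client, cluster_id: str, now: datetime) -> str:
    """Pause or resume the cluster so it matches the schedule; return the action taken."""
    cluster = client.describe_clusters(ClusterIdentifier=cluster_id)["Clusters"][0]
    status = cluster["ClusterStatus"]
    target = desired_state(now)
    if target == "paused" and status == "available":
        client.pause_cluster(ClusterIdentifier=cluster_id)
        return "pause"
    if target == "available" and status == "paused":
        client.resume_cluster(ClusterIdentifier=cluster_id)
        return "resume"
    return "none"  # already in the desired state, or mid-transition
```

Redshift also offers built-in scheduled actions for pause, resume, and resize, so a custom routine like this is mainly useful when the schedule depends on logic outside AWS.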

3. Unified analytics with Amazon Redshift

Beyond deployment and scaling, Amazon Redshift acts as a foundational analytics layer that unifies data across systems and business functions. It is frequently used as a core component of modern data platforms.

- Enterprise data integration: Organizations use Amazon Redshift to consolidate data from CRM, ERP, and third-party systems. This centralization breaks down silos and supports organization-wide analytics and reporting.

- Multi-cluster environments: Teams can deploy separate clusters for different departments or applications to isolate workloads, while Amazon Redshift data sharing lets those clusters query shared datasets when needed.

- Hybrid storage models: By combining Amazon Redshift with Amazon S3, organizations optimize both performance and cost. Active datasets remain in cluster storage, while historical or infrequently accessed data is stored in cost-efficient S3 data lakes.

After establishing scalable operations and integrated data workflows, organizations must ensure that these environments remain secure, compliant, and well-controlled, especially when handling sensitive or regulated data.

Security and connectivity features in Amazon Redshift

Amazon Redshift enforces strong security measures to protect sensitive data while enabling controlled access across users, applications, and networks. Security implementation encompasses data protection, access controls, and network isolation, all of which are crucial for organizations operating in regulated industries, such as finance and healthcare. Connectivity is supported through secure, standards-based drivers and APIs that integrate with internal tools and services.

1. Data security measures using IAM and VPC

Amazon Redshift integrates with AWS Identity and Access Management (IAM) and Amazon Virtual Private Cloud (VPC) to provide fine-grained access controls and private network configurations.

- IAM integration: IAM policies allow administrators to define permissions for cluster management, database operations, and administrative tasks. Role-based access ensures that users and services access only the data and functions for which they are authorized.

- Database-level security: Role-based access at the table and column levels allows organizations to enforce granular control over sensitive datasets. Users can be grouped by function, with each group assigned specific permissions.

- VPC isolation: Clusters are deployed within private subnets, ensuring network isolation from the public internet. Custom security groups define which IP addresses or services can communicate with the cluster.

- Multi-factor authentication: To enhance administrative security, Amazon Redshift supports multi-factor authentication through AWS IAM, requiring additional verification for access to critical operations.

2. Encryption for data at rest and in transit

Amazon Redshift applies end-to-end encryption to protect data throughout its lifecycle.

- Encryption at rest: All data, including backups and snapshots, is encrypted using AES-256 via AWS Key Management Service (KMS). Organizations can use either AWS-managed or customer-managed keys for encryption and key lifecycle management.

- Encryption in transit: TLS 1.2 secures data in motion between clients and Amazon Redshift clusters. SSL certificates are used to authenticate clusters and ensure encrypted communication channels.

- Certificate validation: SSL certificates also protect against spoofed endpoints by validating cluster identity, which is essential when connecting through external applications or secure tunnels.

3. Secure connectivity options for Amazon Redshift access

Amazon Redshift offers multiple options for secure access across application environments and user workflows.

- JDBC and ODBC drivers: Amazon Redshift supports industry-standard drivers that include encryption, connection pooling, and compatibility with a wide range of internal applications and SQL-based tools.

- Amazon Redshift Data API: This HTTP-based API allows developers to run SQL queries without maintaining persistent database connections. IAM-based authentication ensures secure, programmatic access for automated workflows.

- Query Editor v2: A browser-based interface that allows secure SQL query execution without needing to install client drivers. It supports role-based access and session-level security settings to maintain administrative control.
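
Because the Data API is asynchronous, callers submit a statement and then poll for completion. The sketch below shows that pattern against a Redshift Serverless workgroup. It expects a boto3 `redshift-data` client to be passed in. The function name, polling interval, and timeout are assumptions, and pagination of large result sets is left out for brevity.

```python
import time


def run_sql(client, sql: str, *, database: str, workgroup: str,
            poll_seconds: float = 1.0, timeout_seconds: float = 60.0):
    """Submit SQL through the Data API, wait for it to finish, and return the first page of records."""
    statement_id = client.execute_statement(
        WorkgroupName=workgroup, Database=database, Sql=sql
    )["Id"]
    deadline = time.monotonic() + timeout_seconds
    while True:
        description = client.describe_statement(Id=statement_id)
        status = description["Status"]
        if status == "FINISHED":
            break
        if status in ("FAILED", "ABORTED"):
            raise RuntimeError(description.get("Error", status))
        if time.monotonic() > deadline:
            raise TimeoutError(f"statement {statement_id} still {status}")
        time.sleep(poll_seconds)
    if not description.get("HasResultSet"):
        return []  # DDL and DML statements return no rows
    return client.get_statement_result(Id=statement_id)["Records"]


# Example use (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   rows = run_sql(boto3.client("redshift-data"), "SELECT 1", database="dev", workgroup="wg")
```

For a provisioned cluster, the same call takes `ClusterIdentifier` in place of `WorkgroupName`, along with a database user or a Secrets Manager secret.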

Integration and data access in Amazon Redshift

Amazon Redshift offers flexible integration options designed for small and mid-sized businesses that require efficient and scalable access to both internal and external data sources. From real-time pipelines to automated reporting, the platform streamlines how teams connect, load, and work with data, eliminating the need for complex infrastructure or manual overhead.

1. Simplified access through Amazon Redshift-native tools

For growing teams that need to analyze data quickly without relying on a heavy setup, Amazon Redshift includes direct access methods that reduce configuration time.

- Amazon Redshift Query Editor v2: A browser-based interface that allows teams to run SQL queries, visualize results, and share findings, all without installing drivers or maintaining persistent connections.

- Amazon Redshift Data API: Enables secure, HTTP-based query execution in serverless environments. Developers can trigger SQL operations directly from applications or scripts using IAM-based authentication, which is ideal for automation.

- Standardized driver support: Amazon Redshift supports JDBC and ODBC drivers for internal tools and legacy systems, providing broad compatibility for teams using custom reporting or dashboard solutions.

2. Streamlined data pipelines from AWS services

Amazon Redshift integrates with core AWS services, enabling SMBs to manage both batch and real-time data without requiring extensive infrastructure.

- Amazon S3 with Amazon Redshift Spectrum: Enables high-throughput ingestion from S3 and allows teams to query data in place, avoiding unnecessary transfers or duplications.

- AWS Glue: Provides visual tools for setting up extract-transform-load (ETL) workflows, reducing the need for custom scripts. Glue Data Catalog centralizes metadata, making it easier to manage large datasets.

- Amazon Kinesis: Supports the real-time ingestion of streaming data for use cases such as application telemetry, customer activity tracking, and operational metrics.

- AWS Database Migration Service: Facilitates low-downtime migration from existing systems to Amazon Redshift. Supports ongoing replication to keep cloud data current without disrupting operations.
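
For batch loads from Amazon S3, the usual entry point is the `COPY` command. The helper below is a minimal sketch that builds one. The table name, S3 prefix, and role ARN are placeholders supplied by the caller, and the set of accepted formats is deliberately limited to ones that need no extra options.

```python
SIMPLE_FORMATS = {"PARQUET", "ORC", "CSV"}  # formats this sketch supports without extra options


def copy_from_s3_sql(table: str, s3_prefix: str, iam_role_arn: str, file_format: str = "PARQUET") -> str:
    """Build a COPY statement that loads every object under an S3 prefix into a table."""
    if not s3_prefix.startswith("s3://"):
        raise ValueError("s3_prefix must start with s3://")
    file_format = file_format.upper()
    if file_format not in SIMPLE_FORMATS:
        raise ValueError(f"unsupported format in this sketch: {file_format}")
    return (
        f"COPY {table}\n"
        f"FROM '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"FORMAT AS {file_format};"
    )
```

Splitting input into multiple files under the prefix lets the compute nodes load in parallel, which is where most of the ingestion throughput comes from.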

3. Built-in support for automated reporting and dashboards

Amazon Redshift supports organizations that want fast, accessible insights without investing in separate analytics platforms.

- Scheduled reporting: Teams can automate recurring queries and export schedules to keep stakeholders updated without manual intervention.

- Self-service access: Amazon Redshift tools support role-based access, allowing non-technical users to run safe, scoped queries within approved datasets.

- Mobile-ready dashboards: Reports and result views are accessible on tablets and phones, helping teams track KPIs and metrics on the go.

Cost and operational factors in Amazon Redshift

For SMBs, cost efficiency and operational control are central to maintaining a scalable data infrastructure. Amazon Redshift offers a flexible pricing model, automatic performance tuning, and predictable maintenance workflows, making it practical to run high-performance analytics without overspending or overprovisioning.

Pricing models tailored to usage patterns

Amazon Redshift supports multiple pricing structures designed for both variable and predictable workloads. Each model offers different levels of cost control and scalability, allowing organizations to align infrastructure spending with business goals.

- Capacity-based pricing: Provisioned clusters follow a capacity-based model in which businesses pay an hourly rate for each node, determined by the node type and the number of nodes provisioned. With RA3 nodes, managed storage is billed separately.

- Reserved instance pricing: For businesses with consistent query loads, reserved instances offer savings through 1-year or 3-year commitments. This approach provides budget predictability and cost reduction for steady usage.

- Serverless pricing model: Amazon Redshift Serverless charges based on Amazon Redshift Processing Units (RPUs) consumed while queries run. Since compute is not billed during idle time, organizations avoid paying for unused capacity.

- Concurrency scaling credits: When demand spikes, Amazon Redshift adds transient clusters automatically. Each active cluster earns up to one hour of free concurrency scaling credits per day, which is enough to cover typical peak periods for most workloads without extra cost.
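
A quick back-of-the-envelope comparison can help when choosing between these models. The sketch below assumes serverless compute is billed per RPU-hour of active use and provisioned compute per node-hour around the clock. Every rate in it is a made-up placeholder, not a published AWS price, so substitute current figures for your Region.

```python
HOURS_PER_MONTH = 730  # common approximation for a month of continuous running


def serverless_monthly_cost(rpus: int, active_hours: float, price_per_rpu_hour: float) -> float:
    """Cost of serverless compute that is only billed while queries run."""
    return rpus * active_hours * price_per_rpu_hour


def provisioned_monthly_cost(nodes: int, price_per_node_hour: float,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Cost of a provisioned cluster that runs for the given number of hours."""
    return nodes * hours * price_per_node_hour


def break_even_active_hours(rpus: int, price_per_rpu_hour: float,
                            nodes: int, price_per_node_hour: float) -> float:
    """Active hours per month at which serverless costs the same as an always-on cluster."""
    return provisioned_monthly_cost(nodes, price_per_node_hour) / (rpus * price_per_rpu_hour)


if __name__ == "__main__":
    # Placeholder rates chosen only to keep the arithmetic easy to follow.
    print(serverless_monthly_cost(rpus=8, active_hours=100, price_per_rpu_hour=0.5))
    print(provisioned_monthly_cost(nodes=2, price_per_node_hour=1.0))
    print(break_even_active_hours(8, 0.5, 2, 1.0))
```

With these placeholder rates, serverless stays cheaper as long as the warehouse is busy for fewer hours than the break-even figure. Reserved pricing, storage, and concurrency scaling charges are outside this simple model.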

Operational workflows for cluster management

Amazon Redshift offers streamlined workflows for managing cluster operations, ensuring consistent performance, and minimizing the impact of maintenance tasks on business-critical functions.

- Lifecycle control: Clusters can be launched, resized, paused, or deleted using the AWS Console, CLI, or API. Organizations can scale up or down as needed without losing data or configuration.

- Maintenance schedule: Software patches and system updates are applied during customizable maintenance windows to avoid operational disruption.

- Backup and Restore: Automated, incremental backups provide continuous data protection with configurable retention periods. Manual snapshots can be triggered for specific restore points before schema changes or major updates.

- Monitoring and diagnostics: Native integration with Amazon CloudWatch enables visibility into query patterns, compute usage, and performance bottlenecks. Custom dashboards help identify resource constraints early.

Resource optimization within compute nodes

Efficient resource utilization is crucial for maintaining a balance between cost and performance, particularly as data volumes expand and the number of concurrent users increases.

- Compute and storage configuration: Organizations can choose from node types, including RA3 instances that decouple compute from storage. This allows independent scaling based on workload needs.

- Workload management policies: Amazon Redshift supports queue-based workload management, which assigns priority and resource caps to different users or jobs. This ensures that lower-priority operations do not delay time-sensitive queries.

- Storage compression: Data is stored in columnar format with automatic compression, significantly reducing storage costs while maintaining performance.

- Query tuning automation: Amazon Redshift recommends materialized views, caches common queries, and continuously adjusts query plans to reduce compute time, enabling businesses to achieve faster results with lower operational effort.
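
Queue-based workload management is configured through the `wlm_json_configuration` cluster parameter. The sketch below assembles a manual WLM definition with one queue for ETL users, one for BI users, and a default queue. The group names and percentages are hypothetical, and the JSON keys reflect the documented manual WLM properties as best understood here, so verify them against the current WLM reference before applying.

```python
import json


def wlm_configuration(etl_group: str = "etl_users", bi_group: str = "bi_users") -> str:
    """Return a JSON string describing three manual WLM queues (the last one is the default)."""
    queues = [
        {"user_group": [etl_group], "query_concurrency": 3, "memory_percent_to_use": 30},
        {"user_group": [bi_group], "query_concurrency": 10, "memory_percent_to_use": 50,
         "concurrency_scaling": "auto"},  # let BI queries spill to concurrency scaling
        {"query_concurrency": 5, "memory_percent_to_use": 20},  # default queue
    ]
    total_memory = sum(queue["memory_percent_to_use"] for queue in queues)
    if total_memory > 100:
        raise ValueError(f"queues claim {total_memory}% of memory")
    return json.dumps(queues)


def parameter_update(wlm_json: str) -> list:
    """Shape the value for the Parameters argument of modify_cluster_parameter_group."""
    return [{"ParameterName": "wlm_json_configuration", "ParameterValue": wlm_json}]


# Example use (requires boto3 and AWS credentials; the group name is a placeholder):
#   import boto3
#   boto3.client("redshift").modify_cluster_parameter_group(
#       ParameterGroupName="analytics-params",
#       Parameters=parameter_update(wlm_configuration()),
#   )
```

Automatic WLM removes the need to set concurrency and memory by hand, so a manual layout like this is mostly relevant when strict, fixed resource caps are required.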

While Amazon Redshift delivers strong performance and flexibility, many SMBs require expert help to handle implementation complexity, align the platform with business goals, and ensure compliant, growth-oriented outcomes.

How Cloudtech accelerates Amazon Redshift implementation

Cloudtech is a specialized AWS consulting partner dedicated to helping businesses address the complexities of cloud adoption and modernization with practical, secure, and scalable solutions.

Many businesses face challenges in implementing enterprise-grade data warehousing due to limited resources and evolving analytical demands. Cloudtech fills this gap by providing expert guidance and hands-on support, ensuring businesses can confidently deploy Amazon Redshift while maintaining control and compliance.

Cloudtech's team of former AWS employees delivers comprehensive data modernization services that minimize risk and ensure cloud analytics support business objectives:

- Data modernization: Upgrading data infrastructures for improved performance and analytics, helping businesses unlock more value from their information assets through Amazon Redshift implementation.

- Application modernization: Revamping legacy applications to become cloud-native and scalable, ensuring seamless integration with modern data warehouse architectures.

- Infrastructure and resiliency: Building secure, resilient cloud infrastructures that support business continuity and reduce vulnerability to disruptions through proper Amazon Redshift deployment and optimization.

- Generative artificial intelligence: Implementing AI-driven solutions that leverage Amazon Redshift's analytical capabilities to automate and optimize business processes.

Conclusion

Amazon Redshift provides businesses with a secure and scalable foundation for high-performance analytics, eliminating the need to manage infrastructure. With automated optimization, advanced security, and flexible pricing, it enables data-driven decisions across teams while keeping costs under control.

For small and mid-sized organizations, partnering with Cloudtech streamlines the implementation process. Our AWS-certified team helps you plan, deploy, and optimize Amazon Redshift to meet your specific performance and compliance goals. Get in touch with us to get started today!

FAQs

1. What is the use of Amazon Redshift?

Amazon Redshift is used to run high-speed analytics on large volumes of structured and semi-structured data. It helps businesses generate insights, power dashboards, and handle reporting without managing traditional database infrastructure.

2. Is Amazon Redshift an ETL tool?

No, Amazon Redshift is not an ETL tool. It’s a data warehouse that works with ETL services like AWS Glue to store and analyze transformed data efficiently for business intelligence and operational reporting.

3. What is the primary purpose of Amazon Redshift?

Amazon Redshift’s core purpose is to deliver fast, scalable analytics by running complex SQL queries across massive datasets. It supports use cases like customer insights, operational analysis, and financial forecasting across departments.

4. What is the best explanation of Amazon Redshift?

Amazon Redshift is a managed cloud data warehouse built for analytics. It separates compute and storage, supports standard SQL, and enables businesses to scale performance without overbuilding infrastructure or adding operational overhead.

5. What is Amazon Redshift best for?

Amazon Redshift is best for high-performance analytical workloads, powering dashboards, trend reports, and data models at speed. It’s particularly useful for SMBs handling growing data volumes across marketing, finance, and operations.

The latest guide to AWS disaster recovery in 2025

No business plans for a disaster, but every business needs a plan to recover from one. In 2025, downtime has become not just inconvenient but also expensive, disruptive, and often public. Whether it’s a ransomware attack, a regional outage, or a simple configuration error, SMBs can’t afford long recovery times or data loss.

That’s where AWS disaster recovery comes in. With cloud-native tools like AWS Elastic Disaster Recovery, even smaller teams can access the kind of resilience once reserved for large enterprises. The goal is to keep the business running, no matter what happens.

This guide breaks down the latest AWS disaster recovery strategies, from simple backups to multi-site architectures, so you can choose the right balance of speed, cost, and protection for your organization.

Key takeaways:

- AWS disaster recovery helps SMBs reduce downtime, protect data, and recover quickly with cloud-native automation and resilience.

- AWS DRS, S3, and multi-region replication enable cost-effective, scalable DR strategies tailored to business RTO and RPO goals.

- Automation with Amazon EventBridge, AWS Lambda, and Infrastructure as Code ensures faster, less error-prone recovery during outages or disasters.

- Cloudtech helps SMBs design secure, compliant, and tested DR plans using AWS tools for continuous backup, replication, and failover.

- Regular DR testing, cost optimization, and multi-region planning make AWS disaster recovery practical and reliable for SMBs in 2025.

Why is disaster recovery mission-critical in 2025?

Disaster recovery (DR) is a core part of business continuity. The digital space has become a high-stakes environment where every minute of downtime can lead to lost revenue, damaged trust, and compliance risks.

For SMBs, these impacts are amplified by smaller teams, tighter budgets, and increasingly complex hybrid environments.

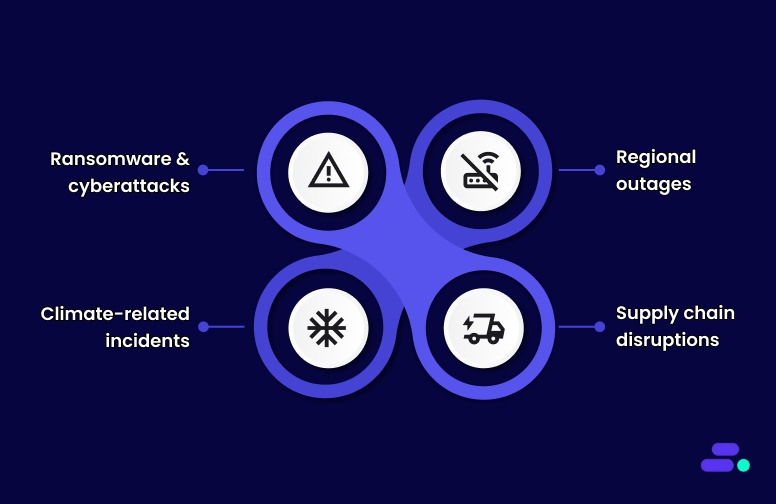

Today’s threats go far beyond hardware failures or accidental data loss. SMBs face:

- Ransomware and cyberattacks that can encrypt or destroy critical systems overnight.

- Regional outages caused by power failures or connectivity disruptions.

- Climate-related incidents, such as floods or wildfires, that can take entire data centers offline.

- Supply chain disruptions that affect access to infrastructure and recovery resources.

Even short outages can trigger cascading effects, from delayed healthcare operations to missed financial transactions. The question for businesses is no longer if a disruption will happen, but how quickly they can recover when it does.

The shift toward automation and cloud-native resilience: Traditional DR methods like manual failovers, physical backups, and secondary data centers can’t meet modern recovery expectations. Businesses now need:

- Automation to eliminate human delays during failover.

- Scalability to expand recovery capacity instantly.

- Affordability to avoid idle infrastructure costs.

That’s where AWS transforms the game. With AWS Elastic Disaster Recovery (AWS DRS), organizations can continuously replicate data, recover from specific points in time, and spin up recovery instances in minutes, all using a cost-efficient, pay-as-you-go model.

Why it matters for SMBs: For smaller businesses, this evolution is an equalizer. AWS makes enterprise-level resilience achievable without massive capital investment.

By combining automation, scalability, and strong security under a single framework, AWS empowers SMBs to stay operational no matter what 2025 brings.

A closer look at AWS’s modern disaster recovery stack

Disaster recovery on AWS has matured into a full resilience ecosystem, not just a backup strategy. It has turned what was once a complex, costly process into a flexible, automated, and affordable framework that SMBs can confidently rely on.

Businesses need continuity for entire workloads, including compute, databases, storage, and networking, with automation that responds instantly to failure events. AWS meets this challenge through an integrated DR ecosystem that combines scalable compute, low-cost storage, and intelligent automation under one cloud-native architecture.

Below is a closer look at the core AWS services powering disaster recovery in 2025:

1. AWS Elastic Disaster Recovery (AWS DRS): The core of modern DR

AWS DRS has become the cornerstone of AWS-based disaster recovery. It continuously replicates on-premises or cloud-based servers into a low-cost staging area within AWS.

- Instant recovery: In case of a disaster, AWS DRS can launch recovery instances in minutes using the latest state or a specific point-in-time snapshot.

- Cross-region replication: Data can be replicated across AWS Regions for compliance or geographic redundancy.

- Scalability and automation: Combined with Lambda or CloudFormation, recovery environments can be automatically scaled to meet post-failover demand.

For SMBs, this service eliminates the need for expensive standby infrastructure while delivering near-enterprise-grade recovery times.

2. Amazon S3, Amazon S3 Glacier, and Amazon S3 Glacier Deep Archive: Tiered, cost-efficient backup storage

Reliable storage remains the foundation of any disaster recovery plan. AWS provides multiple layers of durability and cost optimization through:

- Amazon S3: Ideal for frequently accessed backups and versioned data, offering 99.999999999% durability.

- Amazon S3 Glacier: Designed for infrequent access, with recovery in minutes to hours at a fraction of the cost.

- Amazon S3 Glacier Deep Archive: For long-term retention or compliance data, with recovery times measured in hours but at the lowest possible cost.

These options give SMBs fine-grained control over storage economics, protecting data affordably without sacrificing accessibility.
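The tiering described above is often expressed as an S3 lifecycle configuration. The following is a minimal Python sketch that only builds the configuration document; the prefix, rule ID, and day counts are illustrative assumptions, not recommendations from this guide.

```python
import json


def build_backup_lifecycle(prefix: str, glacier_after_days: int,
                           deep_archive_after_days: int) -> dict:
    """Build an S3 lifecycle configuration that moves backups to colder tiers.

    The rule ID and thresholds are hypothetical; tune them to your own
    retention policy.
    """
    if not 0 < glacier_after_days < deep_archive_after_days:
        raise ValueError("transitions must move to colder tiers in increasing day order")
    return {
        "Rules": [
            {
                "ID": "tier-backups",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    # S3 Glacier Flexible Retrieval after the first threshold
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                    # S3 Glacier Deep Archive after the second threshold
                    {"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


lifecycle = build_backup_lifecycle("backups/", 30, 180)
print(json.dumps(lifecycle, indent=2))
```

A document of this shape can be passed as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`; the sketch stops short of calling AWS so it can be reviewed offline first.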

3. Amazon EBS snapshots and EC2 machine images: Fast restoration for compute and data volumes

Snapshots form the operational backbone of infrastructure recovery.

- Amazon EBS snapshots capture incremental backups of volumes, enabling point-in-time restoration.

- Amazon Machine Images (AMIs), which are backed by EBS snapshots, allow entire EC2 instances to be redeployed in another Region or Availability Zone.

With automation through AWS Backup or Lambda, these snapshots can be scheduled, monitored, and replicated for quick recovery from corruption or regional failure.

4. AWS CloudFormation and AWS CDK: Infrastructure as Code for recovery at scale

Manual recovery is no longer viable. AWS’s Infrastructure as Code (IaC) tools such as CloudFormation and the Cloud Development Kit (CDK) make it possible to rebuild entire production environments automatically.

- Versioned blueprints: Define compute, storage, and networking configurations once and redeploy anywhere.

- Consistency: Ensure recovery environments are identical to production, avoiding configuration drift.

- Speed: Launch full-stack environments in minutes instead of days.

IaC has become essential for SMBs seeking consistent, repeatable recovery processes without maintaining redundant infrastructure.

5. AWS Lambda and Amazon EventBridge: Automation and event-driven recovery

Recovery is no longer a manual checklist. Using AWS Lambda and Amazon EventBridge, DR processes can be fully automated:

- AWS Lambda runs recovery scripts, initiates failover, or provisions resources the moment a trigger event occurs.

- Amazon EventBridge detects failures, health changes, or compliance events and automatically executes recovery workflows.

This automation ensures that recovery isn’t delayed by human intervention, a critical factor when every second counts.
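As a rough illustration of this event-driven pattern, the Python sketch below pairs an EventBridge event pattern for EC2 state changes with a Lambda-style handler that decides whether recovery should start. The instance ID, the `CRITICAL_INSTANCES` set, and the returned action names are hypothetical; a real handler would call the relevant AWS APIs where the comment indicates.

```python
# EventBridge event pattern: match EC2 instances that stop or terminate.
EVENT_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped", "terminated"]},
}

# Hypothetical list of instances whose failure should trigger recovery.
CRITICAL_INSTANCES = {"i-0123456789abcdef0"}


def lambda_handler(event, context=None):
    """Decide what to do with an EC2 state-change event."""
    detail = event.get("detail", {})
    instance_id = detail.get("instance-id")
    state = detail.get("state")

    if instance_id in CRITICAL_INSTANCES and state in ("stopped", "terminated"):
        # A real handler would start the recovery workflow here
        # (for example, launching a replacement from a recent image).
        return {"action": "start_recovery", "instance_id": instance_id}

    # Anything else is logged and ignored in this sketch.
    return {"action": "ignore", "instance_id": instance_id}
```

Keeping the decision logic free of AWS calls makes it easy to unit test with sample events before wiring it to a rule.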

6. Amazon Route 53: Intelligent failover and traffic routing

Even the best recovery setup fails without smart routing. Amazon Route 53 handles global DNS management and automated failover by:

- Redirecting traffic from failed Regions to healthy ones.

- Supporting active-active or active-passive architectures.

- Monitoring application health continuously for instant redirection.

This ensures users always connect to the most available endpoint, even during major disruptions.
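An active-passive setup of this kind is usually expressed as a pair of failover records. The Python sketch below builds such a change batch; the domain name, IP addresses (taken from documentation ranges), and health check ID are placeholders, not values from this guide.

```python
def failover_record(name, ip_address, role, health_check_id=None, ttl=60):
    """Build one Route 53 failover record change (PRIMARY or SECONDARY)."""
    if role not in ("PRIMARY", "SECONDARY"):
        raise ValueError("role must be PRIMARY or SECONDARY")
    record = {
        "Name": name,
        "Type": "A",
        "SetIdentifier": f"{role.lower()}-endpoint",
        "Failover": role,
        "TTL": ttl,  # a short TTL lets clients pick up a failover sooner
        "ResourceRecords": [{"Value": ip_address}],
    }
    if health_check_id:
        # Route 53 uses the health check to decide when to fail over.
        record["HealthCheckId"] = health_check_id
    return {"Action": "UPSERT", "ResourceRecordSet": record}


change_batch = {
    "Comment": "Active-passive failover (illustrative values)",
    "Changes": [
        failover_record("app.example.com.", "192.0.2.10", "PRIMARY",
                        health_check_id="hypothetical-health-check-id"),
        failover_record("app.example.com.", "198.51.100.10", "SECONDARY"),
    ],
}
```

A batch like this corresponds to the `ChangeBatch` argument of boto3's `change_resource_record_sets`. The primary record carries the health check, and the secondary only receives traffic when that check fails.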

7. Amazon DynamoDB Global Tables and Aurora Global Database: Always-on data replication

Data availability is at the heart of resilience. AWS provides globally distributed data replication options for mission-critical workloads:

- Amazon DynamoDB Global Tables replicate changes across Regions, typically within a second, so applications can read and write in any Region, with cross-Region reads eventually consistent by default.

- Amazon Aurora Global Database replicates data with sub-second latency, allowing immediate failover with minimal data loss.

These services make multi-region architectures practical even for SMBs, enabling near-zero RPO and RTO without complex replication management.

Each service complements the others, helping SMBs build DR strategies that are both technically sophisticated and financially realistic.

Whether recovering from a cyberattack, system outage, or natural disaster, AWS gives organizations the agility to resume operations quickly, without the traditional complexity or capital expense of disaster recovery infrastructure. In short, it’s resilience reimagined for the cloud-first era.

Also Read: An AWS cybersecurity guide for SMBs in 2025

Effective strategies for disaster recovery in AWS

When selecting a disaster recovery (DR) strategy within AWS, it’s essential to evaluate both the Recovery Time Objective (RTO) and the Recovery Point Objective (RPO). Each AWS DR strategy offers different levels of complexity, cost, and operational resilience. Below are the most commonly used strategies, along with detailed technical considerations and the associated AWS services.

1. Backup and restore

The Backup and restore strategy involves regularly backing up your data and configurations. In the event of a disaster, these backups can be used to restore your systems and data. This approach is affordable but may require several hours for recovery, depending on the volume of data.

Key technical steps:

- AWS Backup: Automates backups for AWS services, such as EC2, RDS, DynamoDB, and EFS. It supports cross-region backups, ideal for regional disaster recovery.

- Amazon S3 versioning: Enable versioning on S3 buckets to store multiple versions of objects, which can help recover from accidental deletions or data corruption.

- Infrastructure as code (IaC): Use AWS CloudFormation or AWS CDK to define infrastructure templates. These tools automate the redeployment of applications, configurations, and code, reducing recovery time.

- Point-in-time recovery: Use Amazon RDS snapshots, Amazon EBS snapshots, and Amazon DynamoDB backups for point-in-time recovery, ensuring that you meet stringent RPOs.

AWS Services:

- Amazon RDS for database snapshots

- Amazon EBS for block-level backups

- Amazon S3 Cross-region replication for continuous replication to a DR region
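To make the backup-and-restore steps more concrete, here is a small Python sketch that builds an AWS Backup plan with a daily rule and a cross-region copy. The plan name, vault names, account ID, schedule, and retention period are all assumptions chosen for illustration.

```python
def build_backup_plan(primary_vault: str, dr_vault_arn: str,
                      retention_days: int = 35) -> dict:
    """Build a backup plan document with a daily rule and a DR-region copy."""
    return {
        "BackupPlanName": "daily-with-dr-copy",  # hypothetical plan name
        "Rules": [
            {
                "RuleName": "daily",
                "TargetBackupVaultName": primary_vault,
                # Run every day at 05:00 UTC.
                "ScheduleExpression": "cron(0 5 ? * * *)",
                "Lifecycle": {"DeleteAfterDays": retention_days},
                # Copy each recovery point to a vault in the DR Region.
                "CopyActions": [
                    {
                        "DestinationBackupVaultArn": dr_vault_arn,
                        "Lifecycle": {"DeleteAfterDays": retention_days},
                    }
                ],
            }
        ],
    }


plan = build_backup_plan(
    "primary-vault",
    # Placeholder ARN: example account ID and a hypothetical DR vault.
    "arn:aws:backup:us-west-2:111122223333:backup-vault:dr-vault",
)
```

The resulting dictionary matches the shape boto3's `create_backup_plan` expects for its `BackupPlan` argument. Resources still need to be assigned to the plan separately.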

2. Pilot light

In the pilot light approach, minimal core infrastructure is maintained in the disaster recovery region. Resources such as databases remain active, while application servers stay dormant until a failover occurs, at which point they are scaled up rapidly.

Key technical steps:

- Continuous data replication: Use Amazon RDS read replicas, Amazon Aurora global databases, and DynamoDB global tables for continuous, cross-region asynchronous data replication, ensuring low RPO.

- Infrastructure management: Deploy core infrastructure using AWS CloudFormation templates across primary and DR regions, keeping application configurations dormant to reduce costs.

- Traffic management: Use Amazon Route 53 for DNS failover and AWS Global Accelerator for more efficient traffic management during failover, ensuring traffic is directed to the healthiest region.

AWS Services:

- Amazon RDS read replicas

- Amazon DynamoDB global tables for distributed data

- Amazon S3 Cross-Region Replication for asynchronous object replication to the DR region

3. Warm standby

Warm standby involves running a scaled-down version of your production environment in a secondary AWS Region. The secondary Region can handle a small share of traffic immediately and scale out during failover to meet production demand.

Key technical steps:

- Amazon EC2 Auto Scaling: Use Amazon EC2 Auto Scaling to scale resources automatically based on traffic demands, minimizing manual intervention and accelerating recovery times.

- Amazon Aurora global databases: These offer continuous cross-region replication, reducing failover latency and allowing a secondary region to take over writes during a disaster.

- Infrastructure as code (IaC): Use AWS CloudFormation to ensure both primary and DR regions are deployed consistently, making scaling and recovery easier.

AWS services:

- Amazon EC2 Auto Scaling to handle demand

- Amazon Aurora global databases for fast failover

- AWS Lambda for automating backup and restore operations

4. Multi-site active/active

The multi-site active/active strategy runs your application in multiple AWS Regions simultaneously, with both regions handling traffic. This provides redundancy and can keep downtime near zero, making it the most resilient, and usually the most expensive, disaster recovery option.

Key technical steps:

- Global load balancing: Utilize AWS Global Accelerator and Amazon Route 53 to manage traffic distribution across regions, ensuring that traffic is routed to the healthiest region in real-time.

- Asynchronous data replication: Implement Amazon Aurora global databases with multi-region replication for low-latency data availability across regions.

- Real-time monitoring and failover: Use Amazon CloudWatch and Amazon Application Recovery Controller (ARC) to monitor application health and shift traffic to the healthiest region.

AWS services:

- AWS Global Accelerator for low-latency global routing

- Amazon Aurora global databases for near-instantaneous replication

- Amazon Route 53 for failover and traffic management

Also Read: Hidden costs of cloud migration and how SMBs can avoid them

Advanced considerations for AWS disaster recovery in 2025

While AWS offers several core disaster recovery strategies, from backup and restore to multi-site active/active, modern resilience planning requires going a step further. SMBs can strengthen their DR posture by adopting advanced practices around architecture design, automation, governance, and cost optimization.

Here are some important considerations:

1. Choosing the right architecture: single-region vs. multi-region

Not every workload needs a multi-region setup, but every business needs redundancy. AWS offers multiple architectural layers to meet varying RTO/RPO goals:

- Multi-AZ redundancy for regional resilience: Replicating workloads across multiple Availability Zones (AZs) within a single AWS Region protects against localized data center outages. It’s ideal for applications that require high uptime but have data residency or regulatory constraints.

- Cross-region backups for disaster-level protection: Backing up to another AWS Region adds a safeguard against large-scale events such as natural disasters or regional power failures. Tools like AWS Backup and Amazon S3 Cross-Region Replication make this seamless and automated.

- Multi-region deployments for maximum availability: For mission-critical workloads, running active systems in multiple AWS Regions provides near-zero downtime. Services like Amazon Aurora Global Database and DynamoDB Global Tables ensure your data stays synchronized worldwide.

Tip: Choose your redundancy level based on your recovery time objective (RTO) and recovery point objective (RPO). Not every system needs multi-region replication; sometimes a hybrid of local resilience and selective replication is more cost-effective.
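The tip above can be turned into a simple first-pass decision helper. The Python sketch below maps RTO and RPO targets to one of the four strategies; the thresholds and the choice to use the tighter of the two targets are illustrative assumptions, not AWS guidance, and should be adjusted to your own cost and risk tolerance.

```python
from datetime import timedelta


def suggest_dr_strategy(rto: timedelta, rpo: timedelta) -> str:
    """Suggest a DR strategy from recovery targets (illustrative thresholds)."""
    # Assumption: the tighter of the two targets drives the choice.
    tightest = min(rto, rpo)
    if tightest >= timedelta(hours=4):
        return "backup and restore"
    if tightest >= timedelta(minutes=30):
        return "pilot light"
    if tightest >= timedelta(minutes=1):
        return "warm standby"
    return "multi-site active/active"


# Example: a reporting system that can be down for a day.
print(suggest_dr_strategy(timedelta(hours=24), timedelta(hours=12)))
# Example: an order system that must be back within minutes.
print(suggest_dr_strategy(timedelta(minutes=10), timedelta(minutes=5)))
```

A helper like this is only a starting point for discussion; cost, data residency, and team capacity usually shift the final choice.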

2. Automating recovery and testing

Automation is the backbone of successful disaster recovery. Manual steps increase both error risk and downtime, especially under pressure.

- Event-driven recovery: Use Amazon EventBridge and AWS Lambda to automate failover workflows, detect system anomalies, and trigger predefined recovery actions without manual intervention.

- Automated testing: Leverage AWS Resilience Hub and AWS Elastic Disaster Recovery (AWS DRS) to perform non-disruptive recovery tests. Regular “game days” and simulated failovers help validate that your systems can actually meet RTO and RPO targets when it counts.

- Continuous documentation updates: After each test, refine your DR runbooks, IAM roles, and escalation workflows. Resilience isn’t static—it evolves as your architecture changes.
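One lightweight way to validate a drill against its targets is to record three timestamps and compare the results with the RTO and RPO. The Python sketch below assumes those timestamps are collected manually or from logs; the `DrillResult` class and field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrillResult:
    failure_at: datetime           # when the simulated failure began
    last_replicated_at: datetime   # newest data available in the DR copy
    service_restored_at: datetime  # when the service answered requests again


def evaluate_drill(result: DrillResult, rto_target: timedelta,
                   rpo_target: timedelta) -> dict:
    """Compare what a drill achieved with the stated recovery targets."""
    achieved_rto = result.service_restored_at - result.failure_at
    achieved_rpo = result.failure_at - result.last_replicated_at
    return {
        "achieved_rto": achieved_rto,
        "achieved_rpo": achieved_rpo,
        "rto_met": achieved_rto <= rto_target,
        "rpo_met": achieved_rpo <= rpo_target,
    }


drill = DrillResult(
    failure_at=datetime(2025, 1, 15, 10, 0, 0),
    last_replicated_at=datetime(2025, 1, 15, 9, 59, 30),
    service_restored_at=datetime(2025, 1, 15, 10, 12, 0),
)
report = evaluate_drill(drill, timedelta(minutes=15), timedelta(minutes=1))
```

Storing a report like this after every game day gives the runbook review a concrete trend to work from.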

3. Governance, compliance, and security best practices

A DR plan is only as strong as its security controls. Ensuring that recovery operations comply with data protection and industry regulations is critical.

- Secure access and encryption: Use AWS Identity and Access Management (IAM) for least-privilege access and AWS Key Management Service (KMS) for encryption key control.

- Compliance-ready backups: AWS services can help meet standards such as HIPAA, FINRA, and SOC 2 through auditable, tamper-proof data storage.

- Credential isolation: Keep backup and recovery credentials separate from production access to reduce the risk of simultaneous compromise during an incident.

4. Cost optimization for SMBs

Resilience shouldn’t break your budget. AWS enables cost-effective recovery through flexible storage tiers and on-demand infrastructure.

- Use tiered storage: Store frequently accessed backups in Amazon S3, long-term archives in Amazon S3 Glacier, and deep archives in Amazon S3 Glacier Deep Archive for significant cost savings.

- Automate lifecycle policies: Configure automatic transitions between storage tiers to minimize manual oversight and wasted spend.

- Selective replication: Not all data needs to be replicated across regions—focus on critical workloads first.

- Pay-as-you-go recovery: Services like AWS Elastic Disaster Recovery (DRS) allow you to maintain minimal compute during normal operations and scale up only when needed, avoiding the cost of a full secondary site.

Example: Many SMBs start with a pilot light configuration for essential systems and expand to warm standby as their business and recovery needs grow, achieving resilience in phases without large upfront investments.

By integrating automation, security, and cost awareness into your AWS disaster recovery plan, SMBs can achieve enterprise-grade resilience without enterprise-level complexity. The key isn’t just recovering quickly; it’s building a DR strategy that evolves with your business.

Challenges of automating AWS disaster recovery for SMBs (and how to solve them)

AWS gives SMBs multiple strategies and services for automating disaster recovery. To benefit fully, however, SMBs must manage setup complexity, control ongoing costs, and maintain continuous monitoring.

Here are some common challenges and how to solve them:

1. Complex multi-region orchestration: Coordinating automated failover across multiple AWS Regions can be intricate, risking data inconsistency and downtime.

Solution: Use AWS Elastic Disaster Recovery (AWS DRS) and AWS CloudFormation/CDK to define reproducible, automated multi-region failover processes, reducing human error and synchronization issues.

2. Cost management under strict RTO/RPO targets: Low RTOs and RPOs often require high resource usage, which can escalate costs quickly.

Solution: Implement tiered storage with Amazon S3, Amazon S3 Glacier, and Amazon S3 Glacier Deep Archive, and leverage on-demand DR environments rather than always-on secondary systems to optimize costs.

3. Replication latency and data lag: Cross-region replication can introduce delays, risking data inconsistency within recovery windows.

Solution: Use DynamoDB Global Tables or Aurora Global Database for near real-time multi-region replication and configure RPO tolerances according to workload criticality.

4. Maintaining compliance and security: Automated DR workflows must meet regulatory standards (HIPAA, SOC 2), requiring continuous monitoring and audit-ready reporting.

Solution: Employ AWS Backup Audit Manager, IAM roles with least-privilege access, and AWS KMS for encryption, ensuring compliance without adding manual overhead.

5. Operational overhead of testing and validation: Regular failover drills and recovery testing are resource-intensive, especially for small IT teams.

Solution: Use AWS Resilience Hub and AWS DRS non-disruptive testing to automate simulation drills, validate RTO/RPO targets, and continuously refine DR plans.

Despite these challenges, AWS remains the leading choice for SMB disaster recovery due to its extensive global infrastructure, comprehensive native services, and flexible pay-as-you-go pricing.

Also Read: A complete guide to Amazon S3 Glacier for long-term data storage

How does Cloudtech help SMBs implement AWS disaster recovery strategies?

For SMBs, building a resilient disaster recovery (DR) framework is no longer optional; it’s essential for minimizing downtime, protecting data, and ensuring business continuity. Cloudtech simplifies this process with an AWS-native, SMB-first approach that emphasizes automation, compliance, and measurable recovery outcomes.

Here’s how Cloudtech enables reliable AWS disaster recovery for SMBs:

- Cloud foundation and governance: Cloudtech sets up secure, multi-account AWS environments using AWS Control Tower, AWS Organizations, and AWS IAM. This ensures strong governance, access management, and cost visibility for DR operations from day one.

- Workload recovery and resiliency: Using AWS Elastic Disaster Recovery (AWS DRS), AWS Backup, and Amazon Route 53, Cloudtech implements structured DR plans with automated failover. This reduces disruption and maintains high availability for critical workloads during outages.

- Application recovery and modernization: Cloudtech adapts legacy applications into scalable, cloud-native architectures using AWS Lambda, Amazon ECS, and Amazon EventBridge. This enables faster recovery, efficient resource usage, and automation-driven failover for production workloads.

- Data protection and integration: Through Amazon S3, AWS Backup, and Multi-AZ configurations, Cloudtech ensures continuous data replication, backup retention, and regional redundancy, providing SMBs with secure and reliable access to their critical data.

- Automation, testing, and monitoring: Cloudtech uses EventBridge and AWS DRS automation, along with regular DR drills and validation exercises, to continuously test recovery procedures, maintain compliance, and optimize RTO/RPO targets.

Cloudtech’s proven AWS disaster recovery methodology ensures SMBs don’t just recover from outages; they modernize, automate, and scale their DR strategy securely. The result is a cloud-native, cost-efficient DR environment that protects business operations and enables growth in 2025 and beyond.

Also Read: 10 common challenges SMBs face when migrating to the cloud

Wrapping up

Effective disaster recovery is critical for SMBs to safeguard operations, data, and customer trust in an unpredictable environment. AWS provides a powerful, flexible platform offering diverse strategies, from backup and restore to multi-site active-active setups, that help SMBs balance recovery speed, cost, and complexity.

Cloudtech simplifies the complexity of disaster recovery, enabling SMBs to focus on growth while maintaining strong operational resilience. To strengthen your disaster recovery plan with AWS expertise, visit Cloudtech and explore how Cloudtech can support your business continuity goals.

FAQs

1. How does AWS Elastic Disaster Recovery improve SMB recovery plans?

AWS Elastic Disaster Recovery continuously replicates workloads, reducing downtime and data loss. It automates failover and failback, allowing SMBs to restore applications quickly without complex manual intervention, improving recovery speed and reliability.

2. What are the cost implications of using AWS for disaster recovery?

AWS DR costs vary based on data volume and recovery strategy. Pay-as-you-go pricing helps SMBs avoid upfront investments, but monitoring storage, data transfer, and failover expenses is essential to optimize overall costs.

3. Can SMBs use AWS disaster recovery without a dedicated IT team?

Yes, AWS offers managed services and automation tools that simplify DR setup and management. However, SMBs may benefit from expert support to design and maintain effective recovery plans tailored to their business needs.

4. How often should SMBs test their AWS disaster recovery plans?

Regular testing, at least twice a year, is recommended to ensure plans work as intended. Automated testing tools on AWS can help SMBs perform failover drills efficiently, reducing operational risks and improving readiness.

Guide to creating an AWS Cloud Security policy

Every business that moves its operations to the cloud faces a harsh reality: one misconfigured permission can expose sensitive data or disrupt critical services. For businesses, AWS security is not simply a consideration but a fundamental element that underpins operational integrity, customer confidence, and regulatory compliance. With the growing complexity of cloud environments, even a single gap in access control or policy structure can open the door to costly breaches and regulatory penalties.

A well-designed AWS Cloud Security policy brings order and clarity to access management. It defines who can do what, where, and under which conditions, reducing risk and supporting compliance requirements. By establishing clear standards and reusable templates, businesses can scale securely, respond quickly to audits, and avoid the pitfalls of ad-hoc permissions.

Key Takeaways

- Enforce Least Privilege: Define granular IAM roles and permissions; require multi-factor authentication and restrict root account use.

- Mandate Encryption Everywhere: Encrypt all S3, EBS, and RDS data at rest and enforce TLS 1.2+ for data in transit.

- Automate Monitoring & Compliance: Enable CloudTrail and AWS Config in all regions; centralize logs and set up CloudWatch alerts for suspicious activity.

- Isolate & Protect Networks: Design VPCs for workload isolation, use strict security groups, and avoid open “0.0.0.0/0” rules.

- Regularly Review & Remediate: Schedule policy audits, automate misconfiguration fixes, and update controls after major AWS changes.

What is an AWS Cloud Security policy?

An AWS Cloud Security policy is a set of explicit rules and permissions that define who can access specific AWS resources, what actions they can perform, and under what conditions these actions can be performed. These policies are written in JSON and are applied to users, groups, or roles within AWS Identity and Access Management (IAM).

They control access at a granular level, specifying details such as which Amazon S3 buckets can be read, which Amazon EC2 instances can be started or stopped, and which API calls are permitted or denied. This fine-grained control is fundamental to maintaining strict security boundaries and preventing unauthorized actions within an AWS account.

Beyond access control, these policies can also enforce compliance requirements, such as PCI DSS, HIPAA, and GDPR, by mandating encryption for stored data and restricting network access to specific IP ranges, including trusted corporate or VPN addresses and AWS’s published service IP ranges.

AWS Cloud Security policies are integral to automated security monitoring, as they can trigger alerts or block activities that violate organizational standards. By defining and enforcing these rules, organizations can systematically reduce risk and maintain consistent security practices across all AWS resources.
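To show what such a policy can look like, the Python sketch below assembles an identity-based IAM policy and prints it as JSON. The bucket name and IP range are placeholders, and the three statements are one possible combination of network, transport, and encryption conditions rather than a recommended baseline.

```python
import json

BUCKET = "example-reports-bucket"  # hypothetical bucket name
TRUSTED_RANGE = "203.0.113.0/24"   # documentation range standing in for a VPN CIDR

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Allow reads only from the trusted network range.
            "Sid": "AllowReadFromTrustedNetwork",
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            "Condition": {"IpAddress": {"aws:SourceIp": TRUSTED_RANGE}},
        },
        {
            # Block any request that does not use TLS.
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        },
        {
            # Block uploads that do not request SSE-KMS encryption.
            "Sid": "DenyUnencryptedUploads",
            "Effect": "Deny",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            "Condition": {
                "StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}
            },
        },
    ],
}

print(json.dumps(policy, indent=2))
```

Explicit `Deny` statements take precedence over any `Allow`, which is why the transport and encryption rules are written as denies.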

Key elements of a strong AWS Cloud Security policy

A strong AWS Cloud Security policy starts with precise permissions, enforced conditions, and clear boundaries to protect business resources.

- Precise permission boundaries based on the principle of least privilege:

Limiting user, role, and service permissions to only what is necessary helps prevent both accidental and intentional misuse of resources.

- Grant only necessary actions for users, roles, or services.

- Explicitly specify allowed and denied actions, resource Amazon Resource Names (ARNs), and relevant conditions (such as IP restrictions or encryption requirements).

- Carefully scoped permissions reduce the risk of unwanted access.

- Use of policy conditions and multi-factor authentication enforcement:

Requiring extra security checks for sensitive actions and setting global controls across accounts strengthens protection for critical operations.

- Allow sensitive actions (such as deleting resources or accessing critical data) only under specific circumstances, like approved networks or multi-factor authentication presence.

- Apply service control policies at the AWS Organization level to set global limits on actions across accounts.

- Layered governance supports compliance and operational needs without overexposing resources.

Clear, enforceable policies lay the groundwork for secure access and resource management in AWS. Once these principles are established, organizations can move forward with a policy template that fits their specific requirements.
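As a hedged illustration of the two layers described above, the Python sketch below builds an IAM statement that denies sensitive actions when MFA is absent, plus a service control policy that stops member accounts from disabling CloudTrail. The selected actions are examples only; choose the ones that matter in your environment.

```python
import json


def deny_without_mfa(actions):
    """Build an IAM policy that denies the given actions unless MFA was used."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenySensitiveActionsWithoutMFA",
                "Effect": "Deny",
                "Action": list(actions),
                "Resource": "*",
                # BoolIfExists also denies requests where the MFA key is missing.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


mfa_guard = deny_without_mfa(["ec2:TerminateInstances", "s3:DeleteBucket"])

# Service control policy (attached through AWS Organizations) that prevents
# member accounts from turning off audit logging.
protect_audit_trail_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ProtectCloudTrail",
            "Effect": "Deny",
            "Action": ["cloudtrail:StopLogging", "cloudtrail:DeleteTrail"],
            "Resource": "*",
        }
    ],
}

print(json.dumps(mfa_guard, indent=2))
```

The IAM policy applies to the identities it is attached to, while the SCP sets an upper bound for every principal in the affected accounts, including administrators.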

How to create an AWS Cloud Security policy?

A comprehensive AWS Cloud Security policy establishes the framework for protecting a business's cloud infrastructure, data, and operations. The steps below cover the requirements and considerations specific to AWS environments while keeping implementation guidance practical.

Step 1: Establish the foundation and scope

Define the purpose and scope of the AWS Cloud Security policy. Clearly outline the environments (private, public, hybrid) covered by the policy, and specify which departments, systems, data types, and users are included.

This ensures the policy is focused, relevant, and aligned with the business's goals and compliance requirements.

Step 2: Conduct a comprehensive risk assessment

Conduct a comprehensive risk assessment to identify, assess, and prioritize potential threats. Begin by inventorying all cloud-hosted assets, data, applications, and infrastructure, and assessing their vulnerabilities.

Categorize risks by severity and determine appropriate mitigation strategies, considering both technical risks (data breaches, unauthorized access) and business risks (compliance violations, service disruptions). Regular assessments should be performed periodically and after major changes.
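One common way to categorize risks by severity is a likelihood-times-impact score. The Python sketch below uses a 1 to 5 scale for both factors; the scale, the cut-offs, and the sample risks are assumptions for illustration only.

```python
def severity(likelihood: int, impact: int) -> str:
    """Classify a risk from 1-5 likelihood and impact ratings."""
    for value in (likelihood, impact):
        if not 1 <= value <= 5:
            raise ValueError("ratings must be between 1 and 5")
    score = likelihood * impact
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Hypothetical entries: (description, likelihood, impact)
risks = [
    ("Unpatched internal test server", 2, 2),
    ("Public S3 bucket holding customer data", 3, 5),
    ("Root account without MFA", 2, 5),
]

# Highest score first, so mitigation work starts with the worst risks.
ranked = sorted(risks, key=lambda r: r[1] * r[2], reverse=True)
for description, likelihood, impact in ranked:
    print(f"{severity(likelihood, impact):6} {description}")
```

The ranking is only as good as the ratings, so it helps to agree on what each number means before scoring.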

Step 3: Define security requirements and frameworks

Establish clear security requirements in line with industry standards and frameworks such as ISO/IEC 27001, NIST SP 800-53, and relevant regulations (GDPR, HIPAA, PCI-DSS).

Specify compliance with these standards and design the security controls (access management, encryption, MFA, firewalls) that will govern the cloud environment. This framework should address both technical and administrative controls for protecting assets.

Step 4: Develop detailed security guidelines

Create actionable security guidelines to implement across the business's cloud environment. These should cover key areas:

- Identity and Access Management (IAM): Implement role-based access controls (RBAC) and enforce the principle of least privilege. Use multi-factor authentication (MFA) for all cloud accounts, especially administrative accounts.

- Data protection: Define encryption requirements for data at rest and in transit, establish data classification standards, and implement backup strategies.

- Network security: Use network segmentation, firewalls, and secure communication protocols to limit exposure and protect the business's cloud infrastructure.

The guidelines should be clear and provide specific, actionable instructions for all stakeholders.
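A network guideline such as "no ingress open to the whole internet" can be checked automatically. The Python sketch below scans security group data shaped like the output of the EC2 `describe_security_groups` API; the sample groups and IDs are made up for the example.

```python
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def find_open_ingress(security_groups):
    """Return (group_id, from_port, to_port) for rules open to any address."""
    findings = []
    for group in security_groups:
        for perm in group.get("IpPermissions", []):
            cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
            if any(cidr in OPEN_CIDRS for cidr in cidrs):
                findings.append(
                    (group["GroupId"], perm.get("FromPort"), perm.get("ToPort"))
                )
    return findings


# Hypothetical sample data in the describe_security_groups response shape.
sample_groups = [
    {
        "GroupId": "sg-0aaa1111bbbb2222c",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
        ],
    },
    {
        "GroupId": "sg-0ddd3333eeee4444f",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
             "IpRanges": [{"CidrIp": "203.0.113.0/24"}], "Ipv6Ranges": []},
        ],
    },
]

print(find_open_ingress(sample_groups))
```

Some rules, such as HTTPS on a public load balancer, are intentionally open, so findings should be reviewed rather than removed blindly.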

Step 5: Establish a governance and compliance framework

Design a governance structure that assigns specific roles and responsibilities for AWS Cloud Security management. Ensure compliance with industry regulations and establish continuous monitoring processes.

Implement regular audits to validate the effectiveness of the business's security controls, and develop change management procedures for policy updates and security operations.

Step 6: Implement incident response procedures

Develop a detailed incident response plan with five key components: preparation, detection, containment, eradication, and recovery. Define roles and responsibilities for the incident response team and document escalation procedures. Use AWS Security Hub or Amazon Detective for real-time correlation and investigation.

Automate playbooks for common incidents and train the response team regularly so that responses stay consistent and effective. Store the plan in secure, highly available storage, and review it regularly to keep it up to date.

Step 7: Deploy enforcement and monitoring mechanisms

Implement tools and processes to enforce compliance with the business's AWS Cloud Security policies. Use automated policy enforcement, such as AWS Config rules and AWS Organizations service control policies, to ensure consistency across cloud resources.

Deploy continuous monitoring solutions, including SIEM systems, to analyze security logs and provide real-time visibility. Set up key performance indicators (KPIs) to assess the effectiveness of security controls and policy compliance.

Step 8: Provide training and awareness programs

Develop comprehensive training programs for all employees, from basic security awareness for general users to advanced AWS Cloud Security training for IT staff. Focus on educating personnel about recognizing threats, following security protocols, and responding to incidents.

Regularly update training content to reflect emerging threats and technological advancements. Encourage certifications, such as AWS Certified Security - Specialty, to validate expertise.

Step 9: Establish review and maintenance processes

Create a process for regularly reviewing and updating the business's AWS Cloud Security policy. Schedule periodic reviews to ensure alignment with evolving organizational needs, technologies, and regulatory changes.

Implement a feedback loop to gather input from stakeholders, perform internal and external audits, and address any identified gaps. Use audit results to update and improve the business's security posture, maintaining version control for all policy documents.

Creating a clear and enforceable security policy is the foundation for controlling access and protecting the AWS environment. Understanding why these policies matter helps prioritize their design and ongoing management within the business.

Why is an AWS Cloud Security policy important?

AWS Cloud Security policies serve as the authoritative reference for how an organization protects its data, workloads, and operations in cloud environments. Their importance stems from several concrete factors:

- Ensures regulatory compliance and audit readiness

AWS Cloud Security policies provide the documentation and controls required to comply with regulations like GDPR, HIPAA, and PCI DSS.

During audits or investigations, this policy serves as the authoritative reference that demonstrates your cloud infrastructure adheres to legal and industry security standards, thereby reducing the risk of fines, data breaches, or legal penalties.

- Standardizes security across the cloud environment

A clear policy enforces consistent configuration, access management, and encryption practices across all AWS services. This minimizes human error and misconfigurations—two of the most common causes of cloud data breaches—and ensures security isn't siloed or left to chance across departments or teams.

- Defines roles, responsibilities, and accountability

The AWS shared responsibility model splits security duties between AWS and the customer. A well-written policy clarifies who is responsible for what, from identity and access control to incident response, ensuring no task falls through the cracks and that all security functions are owned and maintained.

- Strengthens risk management and incident response

By requiring regular risk assessments, the policy enables organizations to prioritize protection for high-value assets. It also lays out structured incident response playbooks for detection, containment, and recovery—helping teams act quickly and consistently in the event of a breach.

- Guides secure employee and vendor behavior

Security policies establish clear expectations regarding password hygiene, data sharing, the use of personal devices, and controls over third-party vendors. They help prevent insider threats, enforce accountability, and ensure that external partners don’t compromise your security posture.

A strong AWS Cloud Security policy matters because it defines how security and compliance responsibilities are divided between the customer and AWS, making the shared responsibility model clear and actionable for your organization.

What is the AWS shared responsibility model?

The AWS shared responsibility model is the foundation of any AWS security policy. AWS is responsible for the security of the cloud, which covers the physical infrastructure, hardware, software, networking, and facilities running AWS services. Organizations are responsible for security in the cloud, which includes managing data, user access, and security controls for their applications and services.

1. Establishing identity and access management foundations

Building strong identity and access management in AWS starts with clear policies and practical security habits. The following points outline how organizations can create, structure, and maintain effective access controls.

Creating AWS Identity and Access Management policies

Organizations can create customer-managed policies in three ways:

- JSON editor method: Paste and customize example policies. The editor validates syntax, and AWS IAM Access Analyzer provides policy checks and recommendations.

- Visual editor method: Build policies without writing JSON by selecting services, actions, and resources in a guided interface.

- Import method: Import and tailor existing managed policies from your account.

Policy structure and best practices

Effective AWS Identity and Access Management policies rely on a clear structure and strict permission boundaries to keep access secure and manageable. The following points highlight the key elements and recommended practices:

- Policies are JSON documents made up of statements, each specifying an effect (Allow or Deny), actions, resources, and optional conditions.

- Always apply the principle of least privilege: grant only the permissions needed for each role or task.

- Use policy validation to ensure effective, least-privilege policies.
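To make the statement structure concrete, here is a minimal sketch of a least-privilege policy built as a Python dictionary, together with a small check for wildcard grants. The bucket name, prefix, and the find_wildcards helper are hypothetical and only illustrate the idea; they are not a substitute for IAM Access Analyzer's policy validation.

```python
import json

# Hypothetical least-privilege policy: read-only access to one bucket prefix.
# The bucket name and prefix are placeholders, not values from this guide.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadReportsOnly",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": ["arn:aws:s3:::example-reports-bucket/reports/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "true"}},
        }
    ],
}


def find_wildcards(policy_doc):
    """Return Sids of Allow statements that grant '*' or 'service:*' actions,
    or that apply to every resource ('*')."""
    flagged = []
    for stmt in policy_doc["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # IAM accepts a single string or a list for both fields.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        broad_resource = any(r == "*" for r in resources)
        if broad_action or broad_resource:
            flagged.append(stmt.get("Sid", "<no Sid>"))
    return flagged


print(json.dumps(policy, indent=2))
print(find_wildcards(policy))
```

A check like this could run in a review pipeline before a policy is attached, so overly broad grants are caught early.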

Identity and Access Management security best practices

Maintaining strong access controls in AWS requires a disciplined approach to user permissions, authentication, and credential hygiene. The following points outline the most effective practices:

- User management: Avoid wildcard permissions and attaching policies directly to users. Use groups for permissions. Rotate access keys every ninety days or less. Do not use root user access keys.

- Multi-factor authentication: Require multi-factor authentication for all users with console passwords and set up hardware multi-factor authentication for the root user. Enforce strong password policies.

- Credential management: Regularly remove unused credentials and monitor for inactive accounts.
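The ninety-day rotation rule above can be checked with a few lines of code. The sketch below assumes key metadata has already been fetched into dictionaries that use the same field names as IAM's ListAccessKeys response (AccessKeyId, Status, CreateDate); the sample key IDs and dates are made up.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)


def keys_needing_rotation(keys, now=None):
    """Return the IDs of active access keys older than MAX_KEY_AGE."""
    now = now or datetime.now(timezone.utc)
    return [
        k["AccessKeyId"]
        for k in keys
        if k["Status"] == "Active" and now - k["CreateDate"] > MAX_KEY_AGE
    ]


# Made-up metadata for illustration only.
sample_keys = [
    {"AccessKeyId": "AKIAEXAMPLEOLD", "Status": "Active",
     "CreateDate": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAEXAMPLENEW", "Status": "Active",
     "CreateDate": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAEXAMPLEOFF", "Status": "Inactive",
     "CreateDate": datetime(2023, 1, 1, tzinfo=timezone.utc)},
]
check_time = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(keys_needing_rotation(sample_keys, now=check_time))
```

Inactive keys are skipped here because they cannot be used to sign requests, though they are still candidates for removal under the credential management practice.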

2. Network security implementation

Effective network security in AWS relies on configuring security groups as virtual firewalls and following Virtual Private Cloud best practices for availability and monitoring. The following points outline how organizations can set up and maintain secure, resilient cloud networks.

Security groups configuration

Amazon Elastic Compute Cloud security groups act as virtual firewalls at the instance level.

- Rule specification: Only allow rules are supported; you cannot write deny rules. A new security group allows no inbound traffic by default, and outbound traffic is allowed unless you restrict it.

- Multi-group association: Resources can belong to multiple security groups; rules are combined.

- Rule management: Changes apply automatically to all associated resources. Use unique rule identifiers for easier management.
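The way rules combine across groups is easier to see in a small model. The sketch below is a simplified simulation, not AWS code: inbound traffic is allowed if any rule in any attached group matches, and denied otherwise. The IngressRule class and the sample groups are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str   # for example "tcp" or "udp"
    from_port: int
    to_port: int
    cidr: str       # allowed source range


def is_inbound_allowed(groups, protocol, port, source_ip):
    """Allow if ANY rule in ANY attached group matches; otherwise deny."""
    src = ipaddress.ip_address(source_ip)
    for rules in groups:
        for rule in rules:
            if (rule.protocol == protocol
                    and rule.from_port <= port <= rule.to_port
                    and src in ipaddress.ip_network(rule.cidr)):
                return True
    return False  # implicit deny: no allow rule matched


# Hypothetical groups attached to the same instance.
web_sg = [IngressRule("tcp", 443, 443, "0.0.0.0/0")]
admin_sg = [IngressRule("tcp", 22, 22, "203.0.113.0/24")]

print(is_inbound_allowed([web_sg, admin_sg], "tcp", 22, "203.0.113.10"))
print(is_inbound_allowed([web_sg, admin_sg], "tcp", 22, "198.51.100.7"))
```

Because there are no deny rules, adding a second group can only widen access, which is one reason to review every group attached to a resource together.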

Virtual Private Cloud security best practices

Securing an AWS Virtual Private Cloud involves deploying resources across multiple zones, controlling network access at different layers, and continuously monitoring network activity. The following points highlight the most effective strategies:

- Multi-availability zone deployment: Use subnets in multiple zones for high availability and fault tolerance.

- Network access control: Use security groups for instance-level control and network access control lists for subnet-level control.

- Monitoring and analysis: Enable Virtual Private Cloud Flow Logs to monitor traffic. Use Network Access Analyzer and AWS Network Firewall for advanced analysis and filtering.
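As an illustration of what flow log monitoring can look like, the sketch below parses records in the default (version 2) Flow Logs format and keeps only rejected traffic. It assumes the default field order; custom log formats would need a different field list. The two sample records are illustrative.

```python
# Field order of the default (version 2) VPC Flow Logs record format.
FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def parse_record(line):
    """Split one space-separated flow log record into a dict."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


def rejected(lines):
    """Return parsed records whose action is REJECT."""
    return [r for r in map(parse_record, lines) if r["action"] == "REJECT"]


sample_lines = [
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-1235b8ca123456789 172.31.9.69 172.31.9.12 "
    "49761 3389 6 20 4249 1418530010 1418530070 REJECT OK",
]

for record in rejected(sample_lines):
    print(record["srcaddr"], "->", record["dstaddr"], "port", record["dstport"])
```

Repeated rejects against ports such as 22 or 3389 are a common signal worth alerting on.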

3. Data protection and encryption

Protecting sensitive information in AWS involves encrypting data both at rest and in transit, tightly controlling access, and applying encryption at the right levels to meet security and compliance needs.

Encryption implementation

Apply the following controls consistently to every service that stores or moves sensitive data:

- Encrypt data at rest and in transit.

- Limit access to confidential data using AWS permissions.

- Apply encryption at the file, partition, volume, or application level as needed.
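One common way to enforce encryption in transit for Amazon S3 is a bucket policy that denies any request not sent over TLS, using the aws:SecureTransport condition key. The sketch below only builds the policy document; the tls_only_policy helper and the bucket name are hypothetical.

```python
import json


def tls_only_policy(bucket_name):
    """Build a bucket policy that denies all S3 requests made without TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# Placeholder bucket name for illustration.
print(json.dumps(tls_only_policy("example-sensitive-bucket"), indent=2))
```

An explicit Deny overrides any Allow, so this statement holds even if another policy grants broader access.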

Amazon Simple Storage Service security

Securing Amazon Simple Storage Service (Amazon S3) involves blocking public access, enabling server-side encryption with managed keys, and activating access logging to monitor data usage and changes.

- Public access controls: Enable Block Public Access at both account and bucket levels.

- Server-side encryption: Enable for all buckets, using AWS-managed or customer-managed keys.

- Access logging: Enable logs for sensitive buckets to track all data access and changes.
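The three controls above lend themselves to a simple audit. In the sketch below, the four flag names match the S3 Block Public Access settings, and AES256 and aws:kms are the algorithm values S3 uses for SSE-S3 and SSE-KMS. The shape of the settings dictionary and the audit_bucket helper are assumptions made for this example.

```python
REQUIRED_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def audit_bucket(settings):
    """Return a list of findings for one bucket's (pre-fetched) settings."""
    findings = []
    public_access = settings.get("PublicAccessBlock", {})
    for flag in REQUIRED_FLAGS:
        if not public_access.get(flag, False):
            findings.append(f"{flag} is not enabled")
    if settings.get("SSEAlgorithm") not in ("AES256", "aws:kms"):
        findings.append("no default server-side encryption")
    if not settings.get("AccessLoggingEnabled", False):
        findings.append("access logging is off")
    return findings


# Hypothetical bucket with one public-access flag and logging missing.
example = {
    "PublicAccessBlock": {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": True,
        "RestrictPublicBuckets": False,
    },
    "SSEAlgorithm": "aws:kms",
    "AccessLoggingEnabled": False,
}
print(audit_bucket(example))
```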

4. Monitoring and logging implementation

Effective monitoring and logging in AWS combine detailed event tracking with real-time analysis to maintain visibility and control over cloud activity.

AWS CloudTrail configuration

Setting up AWS CloudTrail trails ensures a permanent, auditable record of account activity across all regions, with integrity validation to protect log authenticity.

- Trail creation: Set up trails for ongoing event records. Without a trail, only the most recent ninety days of management events are available in the event history.

- Multi-region trails: Capture activity across all regions for complete audit coverage.

- Log file integrity: Enable integrity validation to ensure logs are not altered.
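The idea behind log file integrity validation can be shown with a simplified hash comparison. This is only a sketch of the principle: CloudTrail's actual validation also relies on signed digest files and is normally run with the aws cloudtrail validate-logs command. The log content below is made up.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def is_unaltered(log_bytes: bytes, recorded_hash: str) -> bool:
    """True if the log content still matches the hash recorded at delivery."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sha256_hex(log_bytes), recorded_hash)


# Hypothetical log content; real trails deliver compressed JSON files.
original = b'{"eventName": "ConsoleLogin", "awsRegion": "us-east-1"}'
recorded = sha256_hex(original)  # stored when the log was written

tampered = original.replace(b"us-east-1", b"eu-west-1")
print(is_unaltered(original, recorded))
print(is_unaltered(tampered, recorded))
```

Any change to the file, however small, produces a different hash, which is what makes tampering detectable.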

Centralized monitoring approach

Integrating AWS CloudTrail with Amazon CloudWatch, Amazon GuardDuty, and AWS Security Hub enables automated threat detection, real-time alerts, and unified compliance monitoring.

- Amazon CloudWatch integration: Integrate AWS CloudTrail with Amazon CloudWatch Logs for real-time monitoring and alerting.

- Amazon GuardDuty utilization: Use Amazon GuardDuty for automated threat detection and prioritization.

- AWS Security Hub implementation: Use AWS Security Hub to centralize security findings and compliance monitoring.
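To give a sense of what real-time alerting looks for, the sketch below scans CloudTrail-style event records for denied API calls and for a short list of sensitive changes. The field names eventName and errorCode follow the CloudTrail record format; the SENSITIVE_EVENTS list, the find_alerts helper, and the sample records are assumptions chosen for illustration. In practice a CloudWatch Logs metric filter or GuardDuty would do this work.

```python
# Event names treated as sensitive in this example (an illustrative list).
SENSITIVE_EVENTS = {
    "AttachUserPolicy", "PutUserPolicy", "CreateAccessKey",
    "DeleteTrail", "StopLogging",
}
DENIED_CODES = ("AccessDenied", "UnauthorizedOperation")


def find_alerts(records):
    """Return (alert_type, event_name) pairs for records worth reviewing."""
    alerts = []
    for record in records:
        name = record.get("eventName", "")
        code = record.get("errorCode", "")
        if any(denied in code for denied in DENIED_CODES):
            alerts.append(("denied-call", name))
        elif name in SENSITIVE_EVENTS:
            alerts.append(("sensitive-change", name))
    return alerts


# Made-up records for illustration.
sample_records = [
    {"eventName": "DescribeInstances"},
    {"eventName": "RunInstances", "errorCode": "Client.UnauthorizedOperation"},
    {"eventName": "StopLogging"},
]
print(find_alerts(sample_records))
```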

Knowing how responsibilities are divided helps create a comprehensive security policy that protects both the cloud infrastructure and your organization’s data and users.

Best practices for creating an AWS Cloud Security policy

Building a strong AWS Cloud Security policy requires more than technical know-how; it demands a clear understanding of business priorities and potential risks. The right approach brings together practical controls and business objectives, creating a policy that supports secure cloud operations without slowing down the team.

- AWS IAM controls: Assign AWS IAM roles with narrowly defined permissions for each service or user. Do not use the root account for daily operations. Enforce MFA on all console logins, especially for administrators. Conduct quarterly reviews to revoke unused permissions.

- Data encryption: Configure S3 buckets to use AES-256 or AWS KMS-managed keys for server-side encryption. Encrypt EBS volumes and RDS databases with KMS keys. Require HTTPS/TLS 1.2+ for all data exchanges between clients and AWS endpoints.

- Logging and monitoring: Enable CloudTrail in all AWS regions to capture all API calls. Use AWS Config to track resource configuration changes. Forward logs to a centralized, access-controlled S3 bucket with lifecycle policies. Set CloudWatch alarms for unauthorized IAM changes or unusual login patterns.

- Network security: Design VPCs to isolate sensitive workloads in private subnets that have no route to an internet gateway. Use security groups to restrict inbound traffic to only necessary ports and IP ranges. Avoid overly permissive “0.0.0.0/0” rules. Use NAT gateways or VPNs for secure outbound traffic.

- Automated compliance enforcement: Deploy AWS Config rules such as “restricted-common-ports” and “s3-bucket-public-read-prohibited.” Use Security Hub to aggregate findings and trigger Lambda functions that remediate violations automatically.

- Incident response: Maintain an incident response runbook specifying steps to isolate compromised EC2 instances, preserve forensic logs, and notify the security team. Conduct biannual tabletop exercises simulating AWS-specific incidents like unauthorized IAM policy changes or data exfiltration from S3.

- Third-party access control: Grant third-party vendors access through IAM roles with time-limited permissions. Require vendors to provide SOC 2 or ISO 27001 certifications. Log and review third-party access activity monthly.

- Data retention and deletion: Configure S3 lifecycle policies to transition data to Glacier after 30 days and delete after 1 year unless retention is legally required. Automate the deletion of unused EBS snapshots older than 90 days.

- Policy review and updates: Schedule formal policy reviews regularly and after significant AWS service changes. Document all revisions and communicate updates promptly to cloud administrators and security teams following approval.
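The data retention practice above maps directly onto an S3 lifecycle configuration. The sketch below builds one in the shape that S3's PutBucketLifecycleConfiguration API accepts, with the 30-day and 1-year values from the list as defaults. The retention_rules helper and the rule ID are hypothetical, and the rule applies to every object in the bucket because the prefix filter is empty.

```python
import json


def retention_rules(transition_days=30, expire_days=365):
    """Build a lifecycle configuration: archive to Glacier, then delete."""
    if expire_days <= transition_days:
        raise ValueError("objects must expire after they are transitioned")
    return {
        "Rules": [
            {
                "ID": "archive-then-delete",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: all objects
                "Transitions": [
                    {"Days": transition_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": expire_days},
            }
        ]
    }


print(json.dumps(retention_rules(), indent=2))
```

Buckets holding data under a legal retention requirement would need a separate rule, or no expiration at all.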

As cloud threats grow more sophisticated, effective protection demands more than ad hoc controls. It requires a consistent, architecture-driven approach. Partners like Cloudtech build AWS security with best practices and the AWS Well-Architected Framework. This ensures that security, compliance, and resilience are baked into every layer of your cloud environment.

How Cloudtech Secures Every AWS Project

Cloudtech's security-first approach enables businesses to adopt AWS with confidence, knowing their environments are aligned with the highest operational and security standards from the outset. Whether you're scaling up, modernizing legacy infrastructure, or exploring AI-powered solutions, Cloudtech brings deep expertise across key areas:

- Data modernization: Upgrading data infrastructures for performance, analytics, and governance.

- Generative AI integration: Deploying intelligent automation that enhances decision-making and operational speed.

- Application modernization: Re-architecting legacy systems into scalable, cloud-native applications.

- Infrastructure resiliency: Designing fault-tolerant architectures that minimize downtime and ensure business continuity.

By embedding security and compliance into the foundation, not as an afterthought, Cloudtech helps businesses scale with confidence and clarity.

Conclusion

With a structured approach to AWS Cloud Security policy, businesses can establish a clear framework for precise access controls, minimize exposure, and maintain compliance across their cloud environment.

This method introduces consistency and clarity to permission management, enabling teams to operate with confidence and agility as AWS usage expands. The practical steps outlined here help organizations avoid common pitfalls and maintain a strong security posture.

Looking to strengthen your AWS security? Connect with Cloudtech for expert solutions and proven strategies that keep your cloud assets protected.

FAQs

1. How can inherited IAM permissions unintentionally increase security risks?

Even when businesses enforce least-privilege IAM roles, users may inherit broader permissions through group memberships or overlapping policies. Regularly reviewing both direct and inherited permissions is essential to prevent privilege escalation risks.
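A simplified model shows how inherited permissions add up. The sketch below treats each policy as a plain set of allowed actions and ignores Deny statements, permission boundaries, and service control policies, so it is only an approximation of IAM's evaluation logic. The user, groups, and actions are made up.

```python
def effective_actions(user, direct, memberships, group_policies):
    """Union of a user's direct actions and those of every group they are in."""
    actions = set(direct.get(user, set()))
    for group in memberships.get(user, []):
        actions |= group_policies.get(group, set())
    return actions


def inherited_only(user, direct, memberships, group_policies):
    """Actions the user holds only because of group membership."""
    everything = effective_actions(user, direct, memberships, group_policies)
    return everything - direct.get(user, set())


# Hypothetical data: alice was granted one action directly.
direct = {"alice": {"s3:GetObject"}}
memberships = {"alice": ["analysts", "ops"]}
group_policies = {
    "analysts": {"s3:GetObject", "athena:StartQueryExecution"},
    "ops": {"iam:PassRole", "ec2:TerminateInstances"},
}

print(sorted(inherited_only("alice", direct, memberships, group_policies)))
```

Here a user who appears to have read-only access to S3 can also pass roles and terminate instances, which is exactly the kind of gap a periodic review is meant to catch.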

2. Is it possible to automate incident response actions in AWS security policies?

Yes. AWS allows businesses to automate incident response by connecting security alerts to Lambda functions or third-party systems, which shortens response times and reduces human error during incidents.
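As a rough sketch of that pattern, the Lambda-style handler below reads Security Hub findings from an incoming event and decides which remediation to apply. It only plans actions and makes no AWS calls. The finding fields (Severity.Label, Resources, Type, Id) follow the AWS Security Finding Format, while the action names, the plan_remediation helper, and the sample event are assumptions for this example.

```python
def plan_remediation(event):
    """Map high-severity findings to (action, resource_id) pairs."""
    actions = []
    for finding in event.get("detail", {}).get("findings", []):
        severity = finding.get("Severity", {}).get("Label", "")
        if severity not in ("HIGH", "CRITICAL"):
            continue  # leave lower severities for manual review
        for resource in finding.get("Resources", []):
            if resource.get("Type") == "AwsEc2Instance":
                actions.append(("isolate-instance", resource["Id"]))
            elif resource.get("Type") == "AwsS3Bucket":
                actions.append(("block-public-access", resource["Id"]))
    return actions


def lambda_handler(event, context):
    """Entry point; a real function would execute the planned actions."""
    return {"planned_actions": plan_remediation(event)}


# Made-up event for illustration.
sample_event = {
    "detail": {
        "findings": [
            {"Severity": {"Label": "HIGH"},
             "Resources": [{"Type": "AwsS3Bucket",
                            "Id": "arn:aws:s3:::example-bucket"}]},
            {"Severity": {"Label": "LOW"},
             "Resources": [{"Type": "AwsEc2Instance",
                            "Id": "i-0123456789abcdef0"}]},
        ]
    }
}
print(lambda_handler(sample_event, None))
```

Keeping the decision logic separate from the code that changes resources makes the response easier to test before it is trusted to act automatically.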

3. How does AWS Config help with continuous compliance?

AWS Config can enforce secure configurations by using rules that automatically check and remediate non-compliant resources, ensuring the environment continuously aligns with organizational policies.

4. What role does AWS Security Hub’s Foundational Security Best Practices (FSBP) standard play in policy enforcement?

AWS Security Hub’s FSBP standard continuously evaluates AWS accounts and workloads against a broad set of controls, alerts teams when resources deviate from best practices, and provides prescriptive remediation guidance.

5. How can businesses ensure log retention and security in a multi-account AWS environment?

Centralizing logs from all accounts into a secure, access-controlled S3 bucket with lifecycle policies helps maintain compliance, supports audits, and protects logs from accidental deletion or unauthorized access.

Amazon RDS in AWS: key features and advantages

Businesses today face constant pressure to keep their data secure, accessible, and responsive, while also managing tight budgets and limited technical resources.

Traditional database management often requires significant time and expertise, pulling teams away from strategic projects and innovation.