Resources

Find the latest news & updates on AWS

Cloudtech Has Earned AWS Advanced Tier Partner Status

We’re honored to announce that Cloudtech has officially secured AWS Advanced Tier Partner status within the Amazon Web Services (AWS) Partner Network! This significant achievement highlights our expertise in AWS cloud modernization and reinforces our commitment to delivering transformative solutions for our clients.

As an AWS Advanced Tier Partner, Cloudtech has been recognized for its exceptional capabilities in cloud data, application, and infrastructure modernization. This milestone underscores our dedication to excellence and our proven ability to leverage AWS technologies for outstanding results.

A Message from Our CEO

“Achieving AWS Advanced Tier Partner status is a pivotal moment for Cloudtech,” said Kamran Adil, CEO. “This recognition not only validates our expertise in delivering advanced cloud solutions but also reflects the hard work and dedication of our team in harnessing the power of AWS services.”

What This Means for Us

To reach Advanced Tier Partner status, Cloudtech demonstrated an in-depth understanding of AWS services and a solid track record of successful, high-quality implementations. This achievement comes with enhanced benefits, including advanced technical support, exclusive training resources, and closer collaboration with AWS sales and marketing teams.

Elevating Our Cloud Offerings

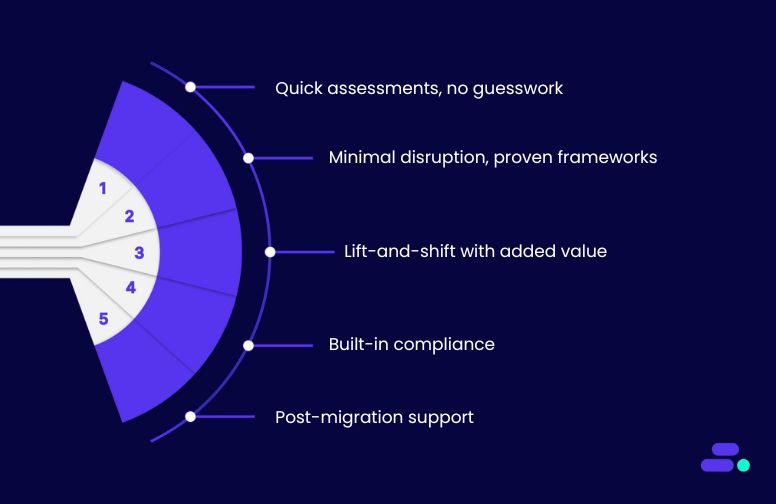

With our new status, Cloudtech is poised to enhance our cloud solutions even further. We provide a range of services, including:

- Data Modernization

- Application Modernization

- Infrastructure and Resiliency Solutions

By utilizing AWS’s cutting-edge tools and services, we equip startups and enterprises with scalable, secure solutions that accelerate digital transformation and optimize operational efficiency.

We're excited to share this news right after the launch of our new website and fresh branding! These updates reflect our commitment to innovation and excellence in the ever-changing cloud landscape. Our new look truly captures our mission: to empower businesses with personalized cloud modernization solutions that drive success. We can't wait for you to explore it all!

Stay tuned as we continue to innovate and drive impactful outcomes for our diverse client portfolio.

Serverless vs. containers: Choosing the right path for application modernization

When it comes to modernizing applications, two approaches come up again and again: serverless and containers. Both promise agility, scalability, and cost savings, but they aren’t interchangeable. Think of the choice as ride-sharing versus owning a car: one offers on-demand convenience with no maintenance to worry about, while the other offers full control and flexibility but requires more management.

For SMB leaders, picking the right approach directly impacts how fast they can innovate, how resilient their systems are, and how much value they get from the cloud. That’s why understanding where serverless and containers shine and where they fall short is critical to making the right modernization decision.

This article explores how SMBs can navigate the choice between serverless and containers, weighing scalability, cost, and agility to find the right fit for their growth journey.

Key takeaways:

- Align workloads: Serverless for event-driven, bursty tasks; containers for persistent, complex, or legacy apps.

- Manage overhead: Serverless minimizes ops; containers provide control and consistency.

- Optimize costs: Serverless suits spiky usage; containers fit continuous, predictable workloads.

- Plan for growth: Serverless boosts agility; containers support hybrid and incremental modernization.

- Utilize expertise: AWS partners like Cloudtech ensure precise, SMB-tailored modernization strategies.

What is the difference between serverless and containers?

Serverless computing, offered through services like AWS Lambda, eliminates the need to manage servers by running small, event-driven functions only when triggered. It scales automatically with demand, and businesses pay only for the requests and execution time they consume. For SMBs, serverless is ideal for lightweight, event-driven workloads such as APIs, chatbots, automation, or data pipelines, enabling lean IT teams to innovate without infrastructure overhead.

Containers, powered by Amazon ECS or Amazon EKS, bundle applications with all dependencies into portable units that run consistently across environments. While they require orchestration, containers offer greater control, flexibility, and compatibility with existing systems. For SMBs, they’re well-suited to modernizing monoliths, migrating legacy workloads, or running long-lived services with custom runtimes or persistent connections, delivering agility without demanding a full application rewrite.

5 key factors to consider when choosing between serverless and containers

Selecting between serverless and containers directly impacts cost, scalability, and long-term agility. Picking the wrong model can lead to wasted resources, higher operational complexity, or stalled innovation. For instance, trying to force a long-running, resource-heavy application into serverless could result in unpredictable costs and performance bottlenecks. Similarly, running simple, event-driven workloads on containers might burden lean IT teams with unnecessary infrastructure management.

In short, the wrong decision can lock SMBs into a path that drains time, budget, and focus, resources that should instead fuel growth and innovation.

These are the five critical factors to weigh before making the decision:

1. Matching workloads to the right model

When deciding between serverless and containers, the nature of the workload plays a critical role. Each model is optimized for different usage patterns and technical requirements, and AWS offers mature services to support both approaches.

Serverless (AWS Lambda, API Gateway, EventBridge, DynamoDB Streams): Serverless is built for event-driven and bursty workloads where execution is short-lived and scales instantly based on demand.

Relevant features:

- Scales automatically in response to triggers such as S3 uploads, API calls, or stream events.

- Pricing is tied directly to execution time and allocated memory, making it cost-effective for spiky or unpredictable traffic.

- Ideal for real-time data transformations, automation scripts, lightweight APIs, and asynchronous jobs.

Limitations: a single Lambda invocation can run for at most 15 minutes, and workloads that need persistent connections, custom networking, or OS-level control are not well suited to it.

Example: An e-commerce SMB handling unpredictable spikes during flash sales can use Lambda + API Gateway to scale checkout and order processing instantly without provisioning servers.
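To make the event-driven model concrete, here is a minimal sketch of a Python Lambda handler reacting to an S3 upload trigger. The event fields follow the S3 notification format; the function name `handler`, the sample bucket and key, and the shape of the returned summary are assumptions chosen for illustration, not part of any specific application.

```python
import urllib.parse


def handler(event, context):
    """Summarize the objects reported in an S3 upload notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        processed.append({"bucket": bucket, "key": key, "bytes": size})
    return {"count": len(processed), "objects": processed}


if __name__ == "__main__":
    # Hypothetical event, trimmed to the fields the handler reads.
    sample_event = {
        "Records": [
            {
                "s3": {
                    "bucket": {"name": "example-orders-bucket"},
                    "object": {"key": "orders/2024/order+1.json", "size": 512},
                }
            }
        ]
    }
    print(handler(sample_event, None))
```

The function holds no state between invocations, which is what lets the platform run as many copies in parallel as incoming events require.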

Containers (Amazon ECS, Amazon EKS, AWS Fargate): Containers shine in scenarios where applications need long-running processes, complex dependencies, or granular infrastructure control.

Relevant features:

- Provide a consistent runtime across environments, ensuring portability for modernized and legacy workloads.

- Support stateful services, persistent connections, and specialized runtimes not possible in Lambda.

- Well-suited for monolithic applications being broken into microservices, API backends requiring consistent performance, or real-time services like chat/messaging apps.

Unlike serverless, containers allow fine-tuning of compute, networking, and scaling policies, giving SMBs more control over performance.

Example: A logistics SMB modernizing its shipment tracking system with continuous real-time updates can use Amazon ECS on Fargate to maintain persistent connections and predictable long-running processes.

2. Meeting growth and performance demands

As SMBs grow, applications must scale reliably to handle more users, data, and transactions without compromising performance. The right choice between serverless and containers depends on whether growth is unpredictable or steady, and AWS offers services that adapt to both scenarios.

Serverless (AWS Lambda, Amazon DynamoDB, Amazon API Gateway): Serverless is optimized for elastic, demand-driven growth, making it ideal for workloads that experience sudden or uneven traffic spikes.

Relevant features:

- Scales automatically to handle thousands of concurrent executions without manual intervention.

- DynamoDB provides millisecond response times at virtually unlimited scale, supporting unpredictable usage patterns.

- No capacity planning required, as usage-based pricing means SMBs only pay for what they consume.

Best suited for flash sales, seasonal campaigns, or viral user activity where demand surges are short-lived but intense.

Example: A ticketing SMB can rely on Lambda + DynamoDB to instantly scale when thousands of users attempt to book during a major event release, avoiding downtime and overprovisioning costs.
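A quick way to reason about a spike like this is to estimate how many function instances must run at once: roughly the request rate multiplied by the average duration of each request. The sketch below applies that rule of thumb; the traffic figures are hypothetical, and the result is only a planning estimate to compare against the account's concurrency quota.

```python
import math


def estimated_concurrency(requests_per_second, avg_duration_ms):
    """Concurrent executions needed ~= arrival rate x average duration in seconds."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


if __name__ == "__main__":
    # Hypothetical ticket release: 5,000 requests per second, 200 ms per booking call.
    print(estimated_concurrency(5000, 200))
```

Shortening the average duration lowers the concurrency needed for the same traffic, so tuning a slow function is often the cheapest way to absorb a larger surge.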

Containers (Amazon ECS, Amazon EKS, AWS Fargate, Amazon Aurora): Containers are better suited for predictable, performance-intensive workloads that need continuous scale and stable throughput.

Relevant features:

- Enable horizontal scaling (adding more containers) or vertical scaling (tuning compute resources per container) based on workload demand.

- Amazon Aurora with ECS/EKS provides high throughput and low latency for relational data workloads.

- Support granular performance tuning for CPU, memory, and network, ensuring consistent user experience.

Ideal for always-on services, large data processing pipelines, or SaaS platforms with predictable growth.

Example: A logistics SMB running a real-time shipment tracking platform can use ECS with Aurora to maintain consistent performance as the user base grows steadily year over year.
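Horizontal scaling for containers is usually driven by a metric target, such as average CPU utilization. The following is a simplified proportional rule in the spirit of target-tracking scaling; it is not the exact algorithm ECS Service Auto Scaling uses, and the function name, default limits, and sample numbers are assumptions for illustration.

```python
import math


def desired_task_count(current_tasks, observed_cpu_pct, target_cpu_pct,
                       min_tasks=1, max_tasks=20):
    """Scale the task count proportionally so average CPU moves toward the target."""
    if current_tasks < 1 or target_cpu_pct <= 0:
        raise ValueError("current_tasks must be >= 1 and target_cpu_pct > 0")
    raw = math.ceil(current_tasks * observed_cpu_pct / target_cpu_pct)
    # Clamp to the configured bounds so scaling never runs away in either direction.
    return max(min_tasks, min(max_tasks, raw))


if __name__ == "__main__":
    # Hypothetical service: 4 tasks averaging 90% CPU against a 60% target.
    print(desired_task_count(4, 90, 60))
```

The minimum and maximum bounds are the part teams still have to choose themselves, even when Fargate manages the underlying servers.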

3. Time and effort to manage infrastructure

One of the most important considerations for SMBs is how much time and expertise they can dedicate to managing infrastructure. The choice between serverless and containers often comes down to how much control an organization wants versus how much operational burden it can handle.

Serverless (AWS Lambda, API Gateway, DynamoDB): Serverless abstracts away most of the infrastructure complexity, allowing lean IT teams to stay focused on building business features rather than maintaining environments.

Relevant features:

- No servers to patch, scale, or monitor, since AWS manages the underlying infrastructure.

- Automatic scaling and high availability are built-in, reducing operational overhead.

- Simplifies DevOps pipelines since deployment often requires just code packaging and configuration.

Best suited for SMBs that want to move fast without heavy infrastructure investment.

Example: A fintech SMB building fraud-detection workflows can use Lambda + DynamoDB Streams to automate real-time checks without dedicating resources to server patching or scaling.
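A workflow like this could be sketched as a Lambda handler that reads DynamoDB Streams records and flags suspicious items. The record layout follows the stream event format, which requires the stream to include new images; the attribute names `amount` and `transaction_id` and the flagging threshold are hypothetical, and a real fraud check would be far more involved.

```python
from decimal import Decimal

FLAG_THRESHOLD = Decimal("10000")  # hypothetical amount above which a transaction is flagged


def handler(event, context):
    """Return the IDs of newly inserted transactions that exceed the threshold."""
    flagged = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # only inspect new transactions, not updates or deletes
        image = record["dynamodb"]["NewImage"]
        # Stream images use DynamoDB's typed attribute format, e.g. {"N": "125.50"}.
        amount = Decimal(image["amount"]["N"])
        if amount > FLAG_THRESHOLD:
            flagged.append(image["transaction_id"]["S"])
    return {"flagged": flagged}


if __name__ == "__main__":
    sample_event = {
        "Records": [
            {
                "eventName": "INSERT",
                "dynamodb": {
                    "NewImage": {
                        "transaction_id": {"S": "txn-001"},
                        "amount": {"N": "25000.00"},
                    }
                },
            }
        ]
    }
    print(handler(sample_event, None))
```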

Containers (Amazon ECS, Amazon EKS, AWS Fargate): Containers, while more powerful, require a higher degree of management, particularly when orchestration, monitoring, and patching come into play.

Relevant features:

- Provide full visibility and control of the application environment, including networking, runtime, and scaling strategies.

- Require container orchestration (via ECS/EKS), CI/CD integration, and monitoring setup (e.g., CloudWatch, Prometheus).

- Fargate reduces some of this overhead by managing servers, but teams still need to design scaling policies and container configurations.

Better for SMBs that want fine-grained control and have or plan to build in-house DevOps expertise.

Example: A SaaS SMB delivering a multi-tenant application can use EKS with Fargate to gain control over scaling policies and runtime environments, while still offloading node management to AWS.

4. Cost efficiency at different scales

The pricing model is often a deciding factor for SMBs choosing between serverless and containers. While both approaches can be cost-effective, their efficiency depends heavily on workload patterns and scale.

Serverless (AWS Lambda, EventBridge, API Gateway): Serverless pricing is usage-based, which means SMBs only pay for what they use, down to milliseconds of execution.

Relevant features:

- No costs when functions are idle, making it ideal for sporadic or unpredictable workloads.

- Pricing is tied directly to execution time, memory, and request count.

- Eliminates the need to provision idle capacity, which helps SMBs control costs when demand is uncertain.

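However, costs can climb quickly for long-running, high-volume applications, because every request and every millisecond of execution is billed, and the 15-minute execution limit forces long jobs to be split across many invocations.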

Example: A marketing SMB running event-driven campaigns with bursts of API traffic can rely on Lambda + API Gateway to handle spikes cost-effectively without ongoing server costs.

Containers (Amazon ECS, Amazon EKS, AWS Fargate): Containers have a different cost profile, often becoming more efficient at steady or large scales.

Relevant features:

- Costs are based on the compute and storage resources allocated, regardless of whether the containers are fully utilized.

- More predictable for workloads with continuous or long-running demand.

- With Reserved Instances on EC2 or Savings Plans that cover EC2 and Fargate usage, SMBs can commit to predictable workloads and reduce long-term costs.

At smaller scales, containers may introduce unnecessary fixed costs compared to serverless.

Example: A media SMB running a video processing pipeline 24/7 can achieve lower costs with ECS on EC2 Reserved Instances, rather than paying for repeated Lambda executions.
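The trade-off between the two pricing models can be checked with simple arithmetic. The sketch below compares a usage-based bill with an allocation-based one; every rate in it is a placeholder for illustration rather than a current AWS price, free tiers and storage are ignored, and it assumes for simplicity that the same two container tasks can absorb either workload.

```python
def lambda_monthly_cost(invocations, avg_duration_ms, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Usage-based cost: billed compute (GB-seconds) plus a per-request charge."""
    gb_seconds = invocations * avg_duration_ms / 1000 * memory_gb
    compute = gb_seconds * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def container_monthly_cost(task_count, price_per_task_hour, hours_per_month=730):
    """Allocation-based cost: tasks are billed while running, busy or idle."""
    return task_count * price_per_task_hour * hours_per_month


if __name__ == "__main__":
    # Placeholder rates for illustration only; check current AWS pricing before deciding.
    rates = dict(price_per_gb_second=0.0000167, price_per_million_requests=0.20)
    container = container_monthly_cost(task_count=2, price_per_task_hour=0.05)
    for label, invocations in [("sporadic", 200_000), ("steady", 200_000_000)]:
        serverless = lambda_monthly_cost(invocations, avg_duration_ms=500,
                                         memory_gb=1.0, **rates)
        print(f"{label}: serverless ${serverless:,.2f} vs containers ${container:,.2f}")
```

Running the comparison with an SMB's own traffic numbers and current rates shows where the break-even point sits for a given workload.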

5. Balancing current complexity with future plans

The right choice between serverless and containers often depends on how an SMB balances current application complexity with future modernization goals. Both models support growth, but the starting point and trajectory matter.

Serverless (AWS Lambda, Step Functions, DynamoDB, EventBridge): Serverless is best suited for greenfield projects or modular applications that can be designed around events and AWS-managed services.

Relevant features:

- Enables faster time-to-market with minimal infrastructure overhead.

- Best for building new digital products, APIs, or automation workflows.

- Naturally aligns with event-driven architectures, making scaling and integration simpler.

Example: A fintech SMB launching a new mobile payments feature can adopt serverless to iterate quickly, integrate with third-party APIs, and scale on-demand without investing in new infrastructure.

Containers (Amazon ECS, Amazon EKS, AWS Fargate): Containers are a stronger fit for SMBs dealing with existing, complex, or legacy applications where a full rewrite to serverless isn’t practical.

Relevant features:

- Allow modernization at a controlled pace, by containerizing monolithic apps and gradually moving toward microservices.

- Provide flexibility for hybrid cloud or multi-cloud strategies.

- Offer portability for future migrations without binding entirely to serverless abstractions.

Example: A healthcare SMB with a legacy patient management system can containerize the existing application using ECS on Fargate, enabling modernization in stages while planning long-term cloud-native adoption.

Choosing between serverless and containers isn’t just a technical decision; it’s a strategic one that shapes scalability, costs, and future innovation. That is why working with an AWS expert is essential to avoid costly missteps.

How does Cloudtech help SMBs modernize applications with precision?

Cloudtech helps SMBs modernize applications with strategies tailored to each workload and business goal. Its team of former AWS professionals draws on deep cloud-native expertise to transform legacy systems into scalable, resilient, and cost-efficient architectures.

From breaking monoliths into microservices to adopting serverless or container-based designs, Cloudtech ensures modernization aligns with growth, performance, and operational efficiency, delivering future-ready applications without unnecessary complexity or spend.

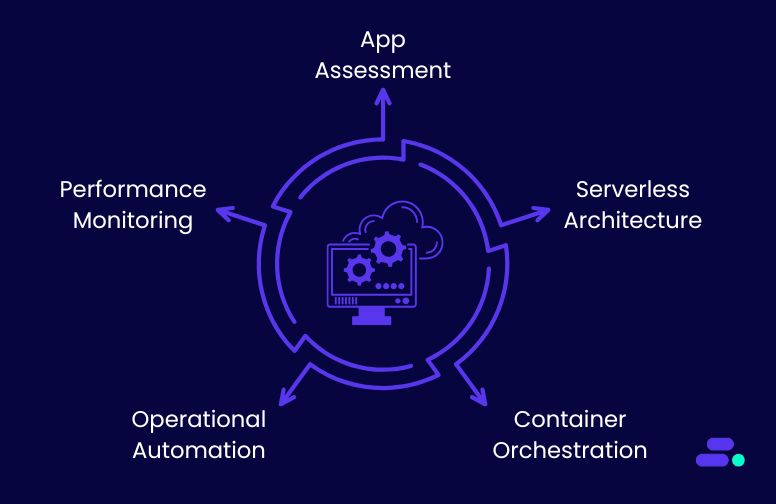

Key Cloudtech services for application modernization:

- Application assessment and modernization strategy: Cloudtech evaluates legacy applications to identify performance bottlenecks, scalability gaps, and integration challenges, then recommends modernization paths aligned with SMB business goals.

- Serverless architecture implementation: Using AWS Lambda, API Gateway, and Step Functions, Cloudtech transforms suitable workloads into event-driven functions that scale automatically, reduce operational overhead, and improve cost efficiency.

- Containerization and orchestration: Using Amazon ECS, EKS, and Fargate, Cloudtech helps SMBs migrate workloads into containerized environments, enabling microservices adoption, consistent runtime across environments, and support for long-running or stateful processes.

- Operational automation and CI/CD: Cloudtech builds automated pipelines with AWS CodePipeline, CodeBuild, and CodeDeploy, accelerating release cycles, minimizing errors, and ensuring applications are deployed consistently and reliably.

- Performance optimization and monitoring: Cloudtech continuously tunes compute, storage, and database configurations, and implements monitoring with Amazon CloudWatch, X-Ray, and Application Insights, ensuring applications run efficiently, cost-effectively, and remain highly available.

Through these capabilities, SMBs gain an AWS-architected, SMB-tailored modernization strategy. Cloudtech ensures applications are optimized for performance, scalability, and cost efficiency, while automating operational workflows, giving SMBs the confidence to innovate and grow without infrastructure bottlenecks.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Half-measures in modernization can leave SMB applications slow, costly, or hard to scale, undermining business agility. Choosing the right modernization path and following AWS best practices is no longer optional; it’s essential for resilient, future-ready operations.

With Cloudtech, SMBs can modernize applications with precision. Its team designs SMB-focused modernization strategies, optimizes workloads for performance and cost, automates operational workflows, and ensures applications scale seamlessly. The result is a cloud environment that drives innovation, supports growth, and eliminates infrastructure bottlenecks.

Connect with Cloudtech today to transform your applications into agile, scalable, and efficient assets that grow with your business.

FAQs

1. Can SMBs mix serverless and containerized architectures in a single application?

Yes. Many SMBs adopt a hybrid approach, using serverless for event-driven components (like API triggers or data processing) and containers for persistent or legacy services. AWS supports this integration: for example, a Lambda function can start ECS tasks, and both sides can share data through Amazon S3 and DynamoDB, enabling the best of both worlds.

2. How do deployment and scaling differ between serverless and containers?

Serverless automatically scales functions in response to triggers, requiring minimal management, while containers rely on orchestration tools like Amazon ECS or EKS to scale based on predefined metrics. SMBs need to evaluate how much operational overhead they can manage versus the flexibility and control containers provide.

3. Are there specific cost considerations SMBs should be aware of?

Serverless is cost-efficient for spiky or unpredictable workloads since billing is per execution, but it can become expensive for long-running or high-throughput tasks. Containers may have higher baseline costs due to always-on compute, but they offer predictable, optimized pricing for continuous workloads. Choosing the wrong model can inflate costs unnecessarily.

4. How does modernization affect existing legacy applications?

Serverless often requires refactoring or breaking down applications into microservices, which may not suit tightly coupled legacy systems. Containers allow SMBs to modernize incrementally, running legacy workloads with minimal changes while gradually adopting cloud-native practices for new components.

5. What tools can SMBs use to monitor and optimize their modernized applications?

AWS provides CloudWatch, X-Ray, and Application Insights for observability across both serverless and containerized workloads. These tools help SMBs track performance, detect bottlenecks, and optimize costs while ensuring applications meet uptime and scalability requirements.

9 AWS Cloud security best practices every SMB should know

Many businesses implementing cloud assume that the security of their hosted data and applications rests with the service provider. They forget that cloud security is a shared responsibility. Without actively implementing cloud security best practices, gaps widen, leaving critical data and applications vulnerable despite the inbuilt security controls.

For SMBs implementing AWS Cloud, the real power comes from combining its built-in protections with cloud security best practices. SMBs that embrace this shared responsibility model don’t just survive, they thrive.

This article breaks down 9 AWS cloud security best practices SMBs should implement to protect workloads, strengthen compliance, and build a resilient cloud environment.

Key takeaways:

- Cloud security requires best practices, not shortcuts: SMBs must go beyond reactive defenses with layered, automated, and scalable controls.

- Shared responsibility is key: AWS secures the cloud infrastructure, but SMBs must protect workloads, identities, and data.

- Automation reduces risk and cost: Automating compliance, monitoring, and response ensures consistent security without added overhead.

- Enterprise-grade security is within reach: With AWS-native tools, SMBs can achieve advanced protection without enterprise-level budgets.

- Cloudtech makes it airtight: As an AWS Advanced Tier Partner, Cloudtech tailors and enforces best-practice security frameworks that scale with SMB growth.

What happens if SMBs don’t follow cloud security best practices?

Ignoring cloud security best practices can expose SMBs to a range of operational, financial, and reputational risks. Unlike large enterprises with dedicated security teams, SMBs often lack the resources to detect and respond to threats quickly.

Without proactive measures, even workloads running on secure cloud infrastructure can become vulnerable to attacks, misconfigurations, and compliance failures.

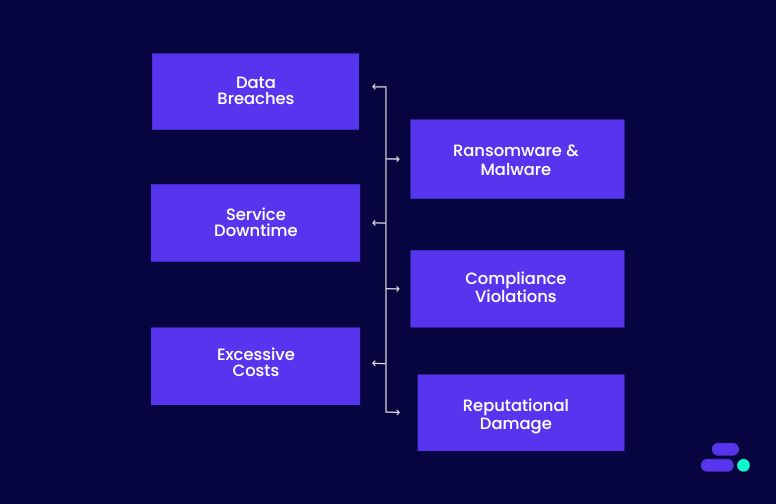

Key risks and consequences include:

- Data breaches and exfiltration: Poorly configured access controls, unsecured Amazon S3 buckets, or mismanaged IAM roles can allow attackers to steal sensitive customer, financial, or intellectual property data. Breaches can result in regulatory fines, legal liabilities, and loss of customer trust.

- Ransomware and malware attacks: Weak network segmentation, missing patch management, and lack of runtime monitoring increase the likelihood of ransomware infection or malware propagation across cloud resources, potentially locking critical workloads.

- Service disruptions and downtime: Failure to implement redundancy, automated backups, or disaster recovery can turn hardware failures, application misconfigurations, or cyberattacks into extended downtime, impacting revenue and operations.

- Compliance violations: SMBs in regulated industries like healthcare, finance, or e-commerce risk fines and penalties if logging, auditing, and encryption standards aren’t followed. Mismanaged data residency or retention policies can trigger non-compliance.

- Excessive costs and inefficiencies: Insecure or poorly optimized cloud deployments can result in runaway resource usage, repeated recovery efforts, and inefficiencies in monitoring and incident response, driving up operational expenses.

- Reputational damage: Customers and partners expect secure handling of data. A single breach or repeated security incidents can erode trust, making it harder to acquire new clients and retain existing ones.

Failing to adopt cloud security best practices multiplies risk across infrastructure, applications, and business operations. Security must be proactive, automated, and continuous, integrating identity management, network protection, monitoring, and compliance to ensure workloads remain resilient and secure in the cloud.

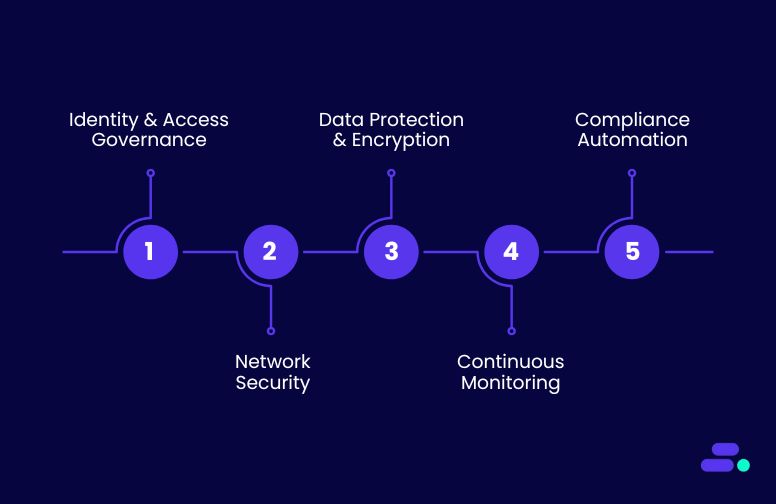

9 cloud security best practices SMBs should follow to secure their data and applications

For SMBs, adopting AWS cloud security best practices means creating a resilient, scalable framework that protects data, applications, and business operations across every layer of the cloud environment.

AWS provides a rich ecosystem of services that make these best practices achievable even for lean IT teams. Continuous monitoring, threat detection, and compliance are built into the AWS platform. AWS tools like GuardDuty, Security Hub, Inspector, and Config allow SMBs to detect anomalies, enforce policies, and maintain regulatory alignment without needing large security teams.

Integrating security early in development pipelines (“shift-left” practices) with CodePipeline and automated vulnerability scans ensures that workloads are safe before deployment. Lifecycle management, logging, and cost monitoring tools like Amazon CloudWatch and Cost Explorer help SMBs optimize operations while maintaining security.

Following these 9 best practices helps SMBs implement automated, resilient, and cost-efficient security that evolves alongside their business:

1. Strong identity and access management

Effective identity and access management (IAM) is the cornerstone of cloud-native security. For SMBs, properly managing who can access what resources ensures sensitive data, applications, and workloads remain protected, even as teams grow or workloads scale. Strong IAM practices minimize risk, support compliance, and enable secure collaboration across the organization.

How to implement using AWS:

- Define roles and permission sets in AWS IAM and IAM Identity Center, assigning access based on job functions or workloads.

- Enable multi-factor authentication (MFA) for all human users and utilize temporary credentials for applications or automation tasks.

- Regularly audit and review permissions to detect and correct privilege creep.

- Integrate logging and monitoring via AWS CloudTrail to track all access and changes.

- Use Service Control Policies (SCPs) within AWS Organizations to enforce consistent access policies across multiple accounts.

Why it matters: Strong IAM reduces the attack surface by ensuring users and applications only have the permissions they need. It helps SMBs meet regulatory requirements such as HIPAA, GDPR, and PCI DSS, and prevents operational errors caused by misconfigurations or unauthorized access. With proper IAM, even lean IT teams can maintain a secure, auditable, and resilient cloud environment.
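As an illustration of least privilege combined with MFA, the sketch below builds an IAM policy document that grants read-only access to a single S3 bucket and only when the caller authenticated with MFA. The bucket name, statement ID, and helper function are hypothetical; the policy elements and the `aws:MultiFactorAuthPresent` condition key are standard IAM policy syntax.

```python
import json


def read_only_bucket_policy(bucket_name):
    """Build a least-privilege policy: read-only S3 access, allowed only with MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadReportsWithMfa",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # Requests without an MFA-authenticated session do not match this statement.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-reports-bucket" is a placeholder name.
    print(json.dumps(read_only_bucket_policy("example-reports-bucket"), indent=2))
```

Generating policies from code like this makes them easy to review and version, which supports the regular permission audits described above.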

2. Network segmentation and protection

A secure network foundation is critical for safeguarding SMB applications and data in the cloud. Poorly designed networks can expose sensitive workloads to unnecessary risk. By segmenting networks, restricting traffic, and layering defenses, SMBs can limit the blast radius of an attack and ensure each part of their infrastructure is only accessible where necessary.

How to implement using AWS:

- Create Amazon VPCs with isolated public and private subnets to separate internet-facing services from internal workloads.

- Apply Security Groups for instance-level controls and Network ACLs (NACLs) for subnet-level filtering.

- Protect web-facing applications using AWS WAF for application-layer threats and AWS Shield for DDoS mitigation.

- Use VPC Endpoints to connect securely to AWS services without traversing the public internet.

- Implement AWS Transit Gateway for centralized and secure multi-VPC or hybrid network connectivity.

- Monitor network traffic using VPC Flow Logs and integrate with Amazon GuardDuty for anomaly detection.

Why it matters: Network segmentation reduces the attack surface by ensuring workloads are only exposed where necessary. It prevents lateral movement in case of compromise and safeguards customer-facing applications against web exploits and denial-of-service attacks.

For SMBs, a strong network architecture provides enterprise-grade protection while keeping costs predictable and management simple.
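One practical check that follows from these principles is auditing Security Group rules for ports exposed to the entire internet. The sketch below works on rule dictionaries shaped like the `IpPermissions` entries the EC2 API returns; the allow-list of public ports is an assumption, only IPv4 ranges are inspected, and a real audit would also cover IPv6 ranges and ICMP rules.

```python
import ipaddress

ALLOWED_PUBLIC_PORTS = {80, 443}  # assumption: only web ports may face the internet


def open_to_world(rule):
    """True if any IPv4 range in the rule covers the whole internet."""
    return any(
        ipaddress.ip_network(r["CidrIp"]).prefixlen == 0
        for r in rule.get("IpRanges", [])
    )


def risky_rules(ip_permissions):
    """List rules that expose non-web ports to 0.0.0.0/0."""
    findings = []
    for rule in ip_permissions:
        if not open_to_world(rule):
            continue
        from_port, to_port = rule.get("FromPort"), rule.get("ToPort")
        if from_port is None or to_port is None:
            # Rules covering all protocols carry no port range.
            findings.append("all ports open to 0.0.0.0/0")
            continue
        exposed = set(range(from_port, to_port + 1)) - ALLOWED_PUBLIC_PORTS
        if exposed:
            findings.append(f"ports {from_port}-{to_port} open to 0.0.0.0/0")
    return findings


if __name__ == "__main__":
    sample = [
        {"FromPort": 443, "ToPort": 443, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"FromPort": 22, "ToPort": 22, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"FromPort": 5432, "ToPort": 5432, "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
    ]
    for finding in risky_rules(sample):
        print(finding)
```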

3. Encryption for data in transit and at rest

Data is the most valuable asset for any SMB, and protecting it is non-negotiable. Whether at rest in storage or moving between applications, unencrypted data is an easy target for attackers. Robust encryption ensures that even if systems are breached or files intercepted, the data remains unreadable and protected.

How to implement using AWS:

- Use AWS Key Management Service (KMS) to enable encryption across Amazon S3, EBS, RDS, DynamoDB, and other storage services.

- For higher security, configure customer managed keys in AWS KMS with rotation policies for sensitive workloads.

- Enforce TLS (SSL) for all data transfers between applications, databases, and APIs.

- Encrypt backups and logs automatically using AWS services like AWS Backup and CloudTrail with KMS.

- Use AWS Certificate Manager (ACM) to manage SSL/TLS certificates without the operational overhead.

- Monitor key usage and access through AWS CloudTrail and set up alerts for unusual activity.

Why it matters: Encryption ensures that sensitive customer data, financial records, and intellectual property remain secure even if infrastructure is compromised. For SMBs, it’s a cost-effective way to meet compliance requirements (HIPAA, PCI DSS, GDPR) while maintaining customer trust. Strong encryption practices reduce the risk of data leaks, safeguard against insider threats, and demonstrate a proactive commitment to security.
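Encryption in transit can be enforced rather than merely recommended. A common pattern, sketched below, is an S3 bucket policy that denies any request not made over TLS by testing the `aws:SecureTransport` condition key. The bucket name and helper function are hypothetical, and the policy would still need to be attached to the bucket through the console, CLI, or infrastructure-as-code tooling.

```python
import json


def deny_insecure_transport_policy(bucket_name):
    """Build a bucket policy that rejects requests sent without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # aws:SecureTransport is "false" when the request did not use TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-customer-data" is a placeholder name.
    print(json.dumps(deny_insecure_transport_policy("example-customer-data"), indent=2))
```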

4. Automated security monitoring and threat detection

Cyber threats are constantly evolving, and manual monitoring can’t keep up. SMBs need real-time visibility into suspicious activity to respond before small issues escalate into major breaches. Automated security monitoring enables lean IT teams to detect anomalies, privilege escalations, and unauthorized access without heavy operational overhead.

How to implement using AWS:

- Enable Amazon GuardDuty to detect anomalous behavior such as unusual API calls, unauthorized access attempts, or data exfiltration.

- Use AWS Security Hub to centralize findings across multiple AWS accounts and services, providing a single view of compliance and threats.

- Integrate Amazon Detective to investigate suspicious activity with visualized relationships between users, resources, and IP addresses.

- Automate response workflows with AWS Lambda or AWS Systems Manager to contain threats quickly.

- Feed logs from AWS CloudTrail, VPC Flow Logs, and CloudWatch into monitoring tools for comprehensive coverage.

Why it matters: For SMBs with limited security staff, automation levels the playing field against sophisticated attackers. Instead of relying on reactive, manual reviews, AWS-native monitoring tools provide continuous coverage and actionable insights. This reduces the time to detect and respond, minimizes potential damage, and ensures that businesses can stay compliant and resilient without building a large security operations center.
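An automated response workflow often starts with a small triage function that receives a GuardDuty finding through EventBridge and decides what to do next. In the sketch below, the `detail` fields follow the GuardDuty finding event format, while the severity cut-offs and the action names (`isolate`, `notify`, `log`) are assumptions; the actual containment step, such as changing a Security Group, is deliberately left out.

```python
def triage(event):
    """Map a GuardDuty finding event to a coarse response action."""
    detail = event.get("detail", {})
    severity = float(detail.get("severity", 0))
    if severity >= 7.0:
        action = "isolate"   # high severity: contain the affected resource
    elif severity >= 4.0:
        action = "notify"    # medium severity: alert the on-call engineer
    else:
        action = "log"       # low severity: record for later review
    return {
        "finding_id": detail.get("id"),
        "type": detail.get("type"),
        "action": action,
    }


if __name__ == "__main__":
    # Hypothetical finding, trimmed to the fields the function reads.
    sample_event = {
        "source": "aws.guardduty",
        "detail-type": "GuardDuty Finding",
        "detail": {
            "id": "example-finding-id",
            "type": "UnauthorizedAccess:EC2/SSHBruteForce",
            "severity": 8.0,
        },
    }
    print(triage(sample_event))
```

Keeping the decision logic separate from the containment action makes it easy to test the thresholds before wiring in anything that changes production resources.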

5. Shift-left security in development pipelines

Modern SMBs increasingly rely on rapid software releases to stay competitive. But with speed comes the risk of pushing vulnerable code into production. Shift-left security embeds checks early in the development lifecycle within CI/CD pipelines, so vulnerabilities are caught before workloads are deployed.

This proactive approach reduces the cost and impact of fixing issues later while improving overall application security.

How to implement using AWS:

- Use AWS CodePipeline to automate build, test, and deployment stages while embedding security checks.

- Integrate Amazon Inspector to scan for vulnerabilities in EC2 instances, containers, and Lambda functions.

- Add container image scanning through Amazon ECR (Elastic Container Registry) to detect known vulnerabilities before pushing images to production.

- Apply policy-as-code with AWS Config and AWS IAM Access Analyzer to enforce compliance and prevent misconfigurations.

- Automate testing with third-party integrations (e.g., Snyk, Checkmarx) directly within CodeBuild or CodePipeline for comprehensive coverage.

Why it matters: For SMBs, catching security issues in production can lead to costly downtime, reputational damage, and compliance failures. By shifting security left, businesses create a culture of “secure by design” while accelerating safe releases. This ensures developers focus on innovation without sacrificing resilience, helping SMBs build customer trust and reduce long-term security costs.
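
To make the shift-left idea concrete, here is a minimal sketch of a pipeline gate that fails a build stage when an image scan reports blocking findings. It assumes the scan result has already been reduced to a severity-to-count mapping (similar in shape to the severity counts that ECR image scans report); the blocking policy and function names are hypothetical.

```python
BLOCKING_SEVERITIES = ("CRITICAL", "HIGH")  # assumed policy; adjust per team


def blocking_findings(severity_counts: dict, blocking=BLOCKING_SEVERITIES) -> dict:
    """Return only the severities that should stop a release, with their counts."""
    return {sev: n for sev, n in severity_counts.items() if sev in blocking and n > 0}


def gate(severity_counts: dict) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 fails the stage."""
    blocked = blocking_findings(severity_counts)
    if blocked:
        print(f"Build blocked by scan findings: {blocked}")
        return 1
    print("No blocking findings; build may proceed.")
    return 0
```

In a build step, the return value of `gate` could be passed to `sys.exit` so that a non-zero code stops the deployment.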

6. Compliance and governance by design

Meeting compliance requirements isn’t just about passing audits. It’s about embedding trust into every layer of the cloud environment. For SMBs in regulated industries, failing to address compliance can quickly lead to fines, legal risk, and lost credibility. Governance by design ensures that compliance is continuously enforced through automation rather than treated as an afterthought or one-time checklist.

How to implement using AWS:

- Use AWS Config to continuously monitor configurations against compliance rules and automatically remediate violations.

- Leverage AWS Control Tower to establish a secure, multi-account landing zone with guardrails aligned to compliance frameworks.

- Automate evidence collection and reporting with AWS Audit Manager, simplifying audits for standards like HIPAA, GDPR, and PCI DSS.

- Enable AWS CloudTrail and AWS Security Hub for centralized logging, monitoring, and compliance visibility.

- Integrate compliance findings with dashboards and alerts to ensure real-time visibility for stakeholders.

Why it matters: For SMBs, manual compliance processes are often slow, error-prone, and expensive. Automating governance not only reduces human error but also strengthens trust with customers, partners, and regulators. By making compliance part of the cloud architecture itself, SMBs can scale with confidence, avoid regulatory pitfalls, and focus on growth without being slowed down by audit fatigue.
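
For the dashboard and alerting item above, a small sketch of how rule evaluations might be rolled up into a single compliance figure. It assumes each evaluation is a dict carrying a `ComplianceType` value such as `COMPLIANT` or `NON_COMPLIANT` (the values AWS Config uses); the summary format itself is an invention for illustration.

```python
from collections import Counter


def compliance_summary(evaluations: list[dict]) -> dict:
    """Count evaluations by compliance type and compute a percentage.

    Only COMPLIANT and NON_COMPLIANT results are scored; other types
    (for example NOT_APPLICABLE) are counted but left out of the percentage.
    """
    counts = Counter(e["ComplianceType"] for e in evaluations)
    scored = counts["COMPLIANT"] + counts["NON_COMPLIANT"]
    percent = round(100 * counts["COMPLIANT"] / scored, 1) if scored else None
    return {"counts": dict(counts), "percent_compliant": percent}
```

A stakeholder alert could then be triggered whenever `percent_compliant` drops below an agreed threshold.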

7. Backup and disaster recovery planning

Outages, ransomware, and accidental deletions can strike without warning. For SMBs with limited IT resources, a single disruption can lead to significant downtime, lost revenue, and damaged trust.

A well-designed backup and disaster recovery (DR) plan ensures business continuity, giving businesses the confidence that their data and applications can survive unexpected events.

How to implement using AWS:

- Use AWS Backup to centrally manage, automate, and monitor backups across AWS services and on-premises workloads.

- Design workloads with multi-AZ replication and consider cross-region backups for stronger disaster recovery.

- Define Recovery Point Objectives (RPOs) and Recovery Time Objectives (RTOs) based on application criticality.

- Regularly test restores using AWS Elastic Disaster Recovery (DRS) to validate recovery readiness.

- Apply lifecycle policies to optimize storage costs while maintaining compliance requirements.

Why it matters: Without a tested backup and DR strategy, downtime can escalate from hours to days, eroding customer confidence and creating financial setbacks. By planning ahead with AWS-native tools, SMBs can recover quickly, minimize disruption, and ensure their business stays resilient no matter what happens.
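
The RPO guidance above can be checked with simple arithmetic: if backups run every N hours, the worst-case data loss is roughly N hours. The sketch below flags workloads whose backup interval exceeds their RPO. The workload names and numbers are made up, and the check ignores backup job duration, which in practice adds to the gap.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    rpo_hours: float               # maximum tolerable data loss
    backup_interval_hours: float   # time between scheduled backups


def rpo_gaps(workloads: list[Workload]) -> list[str]:
    """Return the names of workloads whose schedule cannot meet their RPO."""
    return [w.name for w in workloads if w.backup_interval_hours > w.rpo_hours]


inventory = [
    Workload("billing-db", rpo_hours=1, backup_interval_hours=24),
    Workload("file-share", rpo_hours=24, backup_interval_hours=24),
]
print(rpo_gaps(inventory))
```

Here the hypothetical `billing-db` would be flagged, since a daily backup cannot satisfy a one-hour RPO.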

8. Continuous optimization and cost-aware security

Security and cost optimization often go hand in hand. SMBs need to ensure that security controls are always enforced without overspending on unused or misconfigured resources. By continuously reviewing usage patterns and aligning them with security best practices, businesses can protect their environment while staying within budget.

How to implement using AWS:

- Use AWS Cost Explorer to track spending and correlate costs with specific projects through resource tagging.

- Set up Amazon CloudWatch alarms for unusual spikes in activity that may signal security incidents or misconfigurations.

- Apply AWS Budgets and Trusted Advisor recommendations to identify unused or underutilized resources.

- Regularly audit S3 lifecycle rules, permissions, and logging to prevent unnecessary exposure and storage costs.

- Automate cleanup of unused keys, roles, and security groups to reduce the attack surface and optimize costs.

Why it matters: SMBs often operate under tight budgets, making it critical to balance robust security with cost efficiency. Continuous optimization ensures that resources remain secure, lean, and right-sized, preventing both wasted spend and security blind spots. This approach helps SMBs stay secure without sacrificing financial agility.
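
As one example of the cleanup automation described above, this sketch picks out access keys that have been idle longer than a chosen window. The record fields (`key_id`, `created`, `last_used`) and the 90-day default are assumptions for illustration; a real job would first gather this metadata from IAM and would likely deactivate keys before deleting them.

```python
from datetime import datetime, timedelta


def stale_keys(keys: list[dict], now: datetime, max_idle_days: int = 90) -> list[str]:
    """Return IDs of keys not used (or, if never used, not created) within the window."""
    cutoff = now - timedelta(days=max_idle_days)
    stale = []
    for key in keys:
        # A key that was never used is judged by its creation date instead.
        reference = key.get("last_used") or key["created"]
        if reference < cutoff:
            stale.append(key["key_id"])
    return stale
```

The same pattern applies to unused roles or security groups: collect last-activity data, compare it to a cutoff, and review the candidates before removal.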

9. Security awareness and culture building

Even the most advanced AWS security tools can’t protect an organization if employees unknowingly open the door to threats. Phishing, weak passwords, and accidental data exposure are among the most common causes of breaches for SMBs. Building a strong security culture ensures that people, and not just technology, play an active role in protecting cloud workloads.

How to implement using AWS:

- Use AWS IAM Access Analyzer to detect unintended public or cross-account access to resources and educate teams on resolving issues.

- Enforce MFA across all user accounts using IAM Identity Center, reinforcing the habit of secure logins.

- Enable AWS CloudTrail and Amazon GuardDuty alerts, and share findings with staff during regular security training sessions.

- Provide least-privilege access tied to roles, ensuring employees only interact with the resources they actually need.

Why it matters: Technology is only half of the equation. Human behavior often decides whether a breach happens or not. By instilling security awareness and good practices in daily operations, SMBs drastically reduce risks from accidental misconfigurations, phishing attempts, or insider mistakes. A culture-first approach makes every employee a security partner, not just a bystander.
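
To support the least-privilege point above, here is a minimal sketch that flags IAM policy statements granting every action on every resource. It works on a policy document already loaded as a Python dict and only catches the bare `*` wildcard; narrower patterns such as `s3:*` are deliberately out of scope for this illustration.

```python
def _as_list(value) -> list:
    """IAM policy fields may hold a single item or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy: dict) -> list[dict]:
    """Return Allow statements whose Action and Resource both include '*'."""
    flagged = []
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        if "*" in _as_list(stmt.get("Action")) and "*" in _as_list(stmt.get("Resource")):
            flagged.append(stmt)
    return flagged
```

Findings from a check like this make useful material for team training, since they show exactly which grants exceed what a role needs.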

Implementing these practices helps SMBs move beyond basic cloud protections to a proactive, automated, and resilient security posture, safeguarding data, applications, and business operations in the AWS cloud.

Why risk gaps in security? For SMBs, even small missteps can expose critical data. That’s where AWS experts like Cloudtech can ensure best practices are implemented correctly, compliance is automated, and cloud security scales seamlessly with the business.

How does Cloudtech help SMBs strengthen their cloud security?

Most AWS partners focus on security compliance checklists. Cloudtech goes further, designing SMB-first security architectures that balance cost, usability, and resilience. Its team of former AWS professionals combines deep technical expertise with real-world SMB challenges, ensuring security isn’t just implemented, but continuously optimized to evolve with the business.

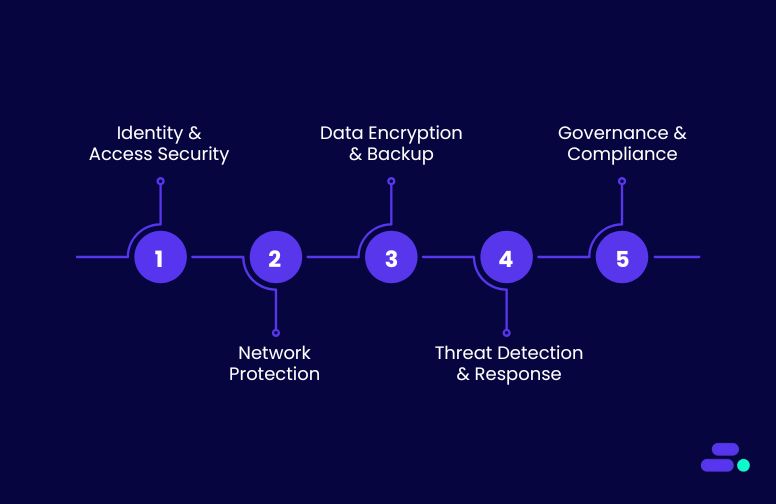

Key Cloudtech services for strengthening cloud security:

- Identity and access security: Cloudtech sets up centralized identity with AWS IAM and IAM Identity Center, enforces MFA, manages privileged accounts, and applies least-privilege policies to safeguard user and service access.

- Network segmentation and protection: Using Amazon VPC, security groups, network ACLs, AWS WAF, and AWS Shield, Cloudtech isolates workloads, blocks malicious traffic, and reduces exposure to external threats.

- Data encryption and backup security: Cloudtech secures sensitive data with AWS KMS, encrypts backups and logs, and enables automated replication across multiple Availability Zones for data integrity and resilience.

- Threat detection and incident response: With Amazon GuardDuty, CloudWatch, and AWS Security Hub, Cloudtech provides continuous threat detection, centralized alerting, and automated remediation to quickly contain risks.

- Governance, compliance, and auditing: Leveraging AWS Config, Control Tower, and Audit Manager, Cloudtech enforces policies, monitors compliance in real time, and produces audit-ready reports for regulations like HIPAA, GDPR, and PCI DSS.

Through these capabilities, SMBs don’t just “check the security box.” They gain an AWS-architected, SMB-tailored security model. Cloudtech ensures controls are not only compliant but also cost-aware, automated, and practical for lean IT teams, giving SMBs the confidence to stay secure while scaling.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Security done halfway is a hidden risk: misconfigured controls, unmonitored activity, or weak compliance can expose critical data and stall growth. Adopting AWS best practices isn’t optional anymore. It’s the foundation for resilient, scalable operations in a threat-heavy digital landscape.

With the help of an AWS expert like Cloudtech, SMBs can implement these best practices with precision, building airtight security frameworks, enforcing least-privilege access, automating compliance, and continuously monitoring workloads. The result is a proactive, cost-aware security posture that lets leaders focus on growth while knowing their cloud is secured against evolving threats.

Connect with Cloudtech today to design a security strategy that keeps your data safe while fueling innovation.

FAQs

1. Why is cloud security different from traditional on-premise security?

Cloud environments are dynamic, elastic, and operate on a shared responsibility model. Unlike on-premise systems where IT owns everything end-to-end, in the cloud, AWS secures the infrastructure while SMBs are responsible for securing their workloads, identities, and data. This requires continuous monitoring, automated controls, and zero-trust principles to stay protected.

2. What mistakes do SMBs commonly make when setting up cloud security?

Common pitfalls include granting broad IAM permissions (like full admin access), storing sensitive data without encryption, treating cloud as if it were on-prem (leading to outdated defense models), and skipping automated logging or monitoring. These gaps often go unnoticed until an incident occurs, making proactive best practices critical.

3. How does automation improve SMB cloud security?

Manual processes are error-prone and can’t keep pace with evolving threats. By automating compliance checks, access reviews, vulnerability patching, and anomaly detection, SMBs ensure consistent, real-time enforcement of security rules. Services like AWS Config, Amazon GuardDuty, and AWS Security Hub help reduce human error while lowering operational workload.

4. Can SMBs achieve enterprise-grade security without enterprise budgets?

Yes. Cloud-native security is inherently scalable and pay-as-you-go. Tools like AWS WAF, Shield, and CloudTrail give SMBs access to enterprise-grade capabilities at manageable costs. With proper architecture and governance, SMBs can deploy multi-layered defenses affordably, getting protection once reserved for large enterprises.

5. How does Cloudtech ensure security remains effective as SMBs grow?

Cloudtech builds adaptive security frameworks aligned to AWS best practices. That means identity controls, monitoring pipelines, and compliance checks are designed to scale automatically as workloads expand. By combining automation with ongoing advisory support, Cloudtech ensures SMBs maintain a proactive, compliant, and resilient security posture at every stage of growth.

Protecting business data: The SMB's guide to AWS cloud backup

Nearly 40% of companies lose critical data in a cyberattack. This is a staggering number when considering what “critical” really means. It’s not just spreadsheets and documents at risk. It could be customer records, financial transactions, intellectual property, or operational systems that keep the business running day to day. For an SMB, such a loss can stall operations, erode customer trust, invite regulatory penalties, and in many cases, threaten the very survival of the business.

This is where AWS Cloud Backup becomes essential. By securely storing data in the cloud with built-in redundancy, encryption, and automated recovery options, SMBs can ensure that even if their systems are compromised, their data isn’t gone forever.

This article explores why SMBs, particularly in sensitive sectors like healthcare and financial services, can’t afford the risks of on-premises backups, and how AWS Cloud Backup delivers the efficiency, security, and resilience needed to safeguard growth.

Key takeaways:

- AWS Backup simplifies protection by centralizing policies across workloads, eliminating the complexity of managing multiple backup tools.

- SMBs gain enterprise-grade resilience with features like automated scheduling, lifecycle management, and multi-AZ redundancy.

- Costs stay optimized through intelligent tiering, ensuring businesses don’t overpay for long-term backup storage.

- Compliance is built-in with encryption, audit reports, and retention controls that align with industry standards.

- Cloudtech adds the SMB edge by tailoring AWS Backup setups to lean budgets, automating management, and providing ongoing support for growth.

Business data is in danger: The case for AWS Backup

Cybercriminals don’t just chase big enterprises anymore. They increasingly target smaller businesses, many of which assume they’re “too small to be noticed.” Attackers know otherwise: SMBs often lack dedicated security teams, rely on outdated backup methods, and can’t afford prolonged downtime. That makes their data a soft target.

Ransomware gangs, for example, don’t need to break into a global bank when an unpatched SMB server can yield thousands of sensitive records and a quick payout. Add in the risk of accidental deletions, hardware failures, or natural disasters, and it’s clear that every SMB, no matter how secure it believes itself to be, sits on fragile ground.

Without modern cloud-based backup and recovery, a single breach or failure can mean lost customer trust, regulatory fines, or even closure.

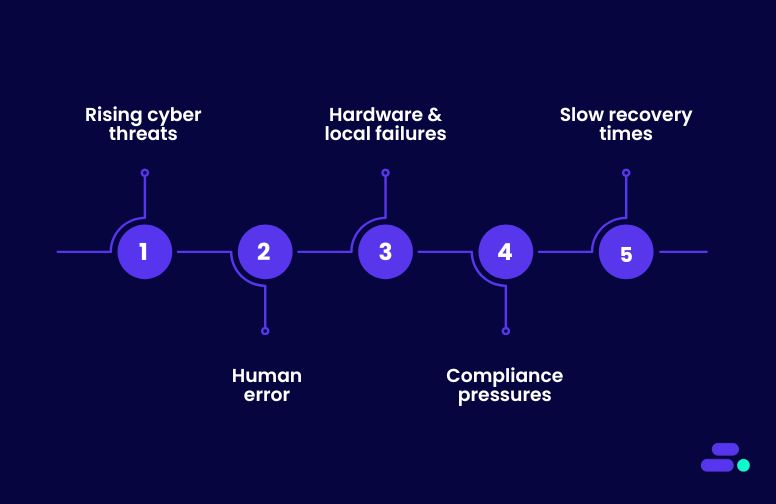

The challenges of protecting business data include:

- Rising cyber threats: Nearly 40% of companies lose critical data in a cyberattack, and SMBs are prime targets due to smaller security budgets.

- Human error: Simple mistakes like misconfigured systems or accidental deletions can wipe out essential files, often with no easy rollback.

- Hardware & local failures: On-premises servers, disks, and tape backups remain single points of failure vulnerable to wear, outages, or disasters.

- Compliance pressures: Regulations in healthcare, finance, and other industries demand strict data retention and auditability.

- Slow recovery times: Traditional backup methods can take hours or even days to restore operations, leading to costly downtime and lost productivity.

In short, relying on outdated backup strategies exposes SMBs to unacceptable risks. AWS Backup provides a modern, cloud-native alternative that is automated, scalable, and secure, so businesses can safeguard their most valuable asset: their data.

How can SMBs set up AWS Backup to protect their data?

Traditional, on-premises backup systems weigh SMBs down with costly hardware, manual upkeep, and limited scalability. They require constant patching, monitoring, and recovery testing, efforts that strain lean IT teams while still leaving gaps in resilience. When disaster strikes, recovery is often slow, complex, and unreliable.

AWS Backup eliminates these barriers by delivering a fully managed, cloud-native solution. Backups are automated, centrally managed, and seamlessly integrated across AWS services like EC2, RDS, DynamoDB, and EFS. With built-in encryption, isolated backup vaults, and lifecycle policies that tier data to cost-efficient storage, SMBs gain enterprise-grade protection without the burden of infrastructure management.

Most importantly, AWS Backup makes recovery fast, predictable, and scalable. Restores can be launched directly from the console, minimizing downtime and disruption. Instead of wrestling with hardware limits or complex restores, SMBs can focus on growth, knowing their data is secure, compliant, and always recoverable.

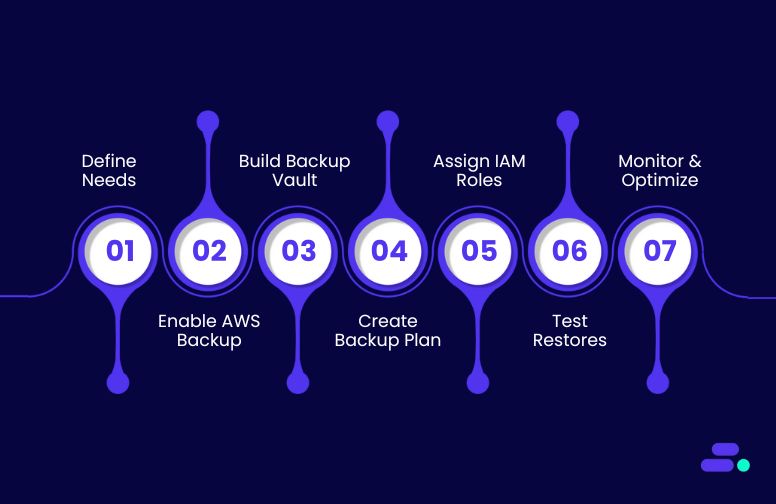

Here’s a step-by-step guide for SMBs to set up AWS Backup in a way that’s practical:

Step 1: Define business & compliance needs

Before setting up backups, SMBs need to clarify exactly what they are protecting and why. Backup strategies are only effective when aligned with business goals, customer expectations, and compliance mandates. Skipping this step often results in overpaying for storage, gaps in protection, or backups that fail when disaster strikes.

Why this step matters:

- Prevents blind spots: By identifying all critical workloads (databases, applications, file systems, VMs), businesses ensure nothing essential slips through the cracks.

- Aligns with risk tolerance: Defining RPO and RTO helps avoid both over-engineering (wasting money) and under-protecting (risking downtime).

- Meets compliance upfront: Regulations like HIPAA, GDPR, and FINRA often require specific data retention, encryption, or recovery policies.

Key AWS tools that help with this step:

- AWS Backup: Centralizes backups for EC2, RDS, DynamoDB, EFS, and even on-premises servers via Backup Gateway.

- AWS Backup Plans: Enables SMBs to set schedules, retention rules, and lifecycle policies that map directly to recovery point (RPO) and recovery time (RTO) objectives.

- AWS Backup Audit Manager: Continuously checks backups against compliance standards such as HIPAA, GDPR, or FINRA.

- AWS Organizations & Control Tower: Extend governance across multiple accounts, ensuring consistent enforcement of backup policies.

Together, these tools ensure the backup strategy is not just technical, but also aligned with regulatory requirements and long-term business goals.
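
One lightweight way to look for the blind spots mentioned above is to check an inventory of resources for a backup tag. The sketch below assumes a hypothetical tagging convention (`backup-plan`) and a simple list of resource records; both are illustrative and not something AWS Backup requires.

```python
def unprotected(resources: list[dict], tag_key: str = "backup-plan") -> list[str]:
    """Return ARNs of resources that carry no value for the backup tag."""
    return [r["arn"] for r in resources if not r.get("tags", {}).get(tag_key)]


inventory = [
    {"arn": "arn:aws:rds:us-east-1:111122223333:db:orders", "tags": {"backup-plan": "daily"}},
    {"arn": "arn:aws:ec2:us-east-1:111122223333:volume/vol-0example", "tags": {}},
]
print(unprotected(inventory))
```

Running a check like this before building backup plans helps confirm that every critical workload has an owner-assigned protection level.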

Step 2: Enable AWS Backup in the console

Once the backup requirements are defined, the next step is to activate AWS Backup so businesses can begin protecting workloads across their environment. This step essentially “switches on” the service, giving them access to centralized policies, automation, and visibility into backups.

Why this step matters:

- Centralized control: AWS Backup unifies backup management across services like EC2, RDS, DynamoDB, EFS, and even on-prem workloads.

- Multi-region flexibility: Enabling it in all operating regions ensures business continuity, even if one region experiences disruptions.

- Foundation for automation: Without enabling the service, SMBs can’t create backup plans, enforce retention rules, or automate policies.

Steps in the AWS Management Console:

- Log in → Open the AWS Management Console and search for AWS Backup.

- Select regions → Choose the regions where critical workloads (e.g., EC2, RDS, DynamoDB, EFS) are hosted.

- Enable the service → Turn on AWS Backup in those regions to allow local policy creation and backup management.

Once enabled, AWS Backup is ready to connect with workloads and apply the backup policies that will be defined in the following steps.

Step 3: Build a backup vault

With backup enabled, the next step is to set up a backup vault, a secure container where all backup copies will be stored. Think of it as the digital equivalent of a locked safe: every backup a business creates will be organized, encrypted, and isolated here, ensuring data integrity and security.

Why this step matters:

- Secure storage: Backup vaults are always encrypted, protecting sensitive business data against unauthorized access.

- Logical separation: Different vaults can be created for production, testing, or compliance workloads, reducing the risk of accidental mix-ups.

- Compliance-ready: Vault encryption keys can be AWS-managed or customer-managed (KMS), giving SMBs flexibility to meet industry regulations like HIPAA or GDPR.

Steps in the AWS Backup Console:

- Navigate to Backup Vaults → From the AWS Backup console, select Create Backup Vault.

- Name the vault → Enter a clear, descriptive name (e.g., smb-prod-backups) for easy identification.

- Set encryption → Use the AWS-managed KMS key for simplicity, or opt for a customer-managed key if compliance frameworks (HIPAA, FINRA, GDPR, etc.) require stricter control.

- Create vault → Once finalized, this vault becomes the secure destination where all backup data will be stored.

With the vault in place, SMBs can confidently move to defining backup plans that connect workloads to this secure repository.

Step 4: Create a backup plan

Once the vault is ready, the next step is to build a backup plan. A backup plan defines the rules for when, how often, and how long the data is backed up, removing manual work and ensuring consistency. This step turns backup from a one-off task into a repeatable, automated process.

Why this step matters:

- Automation reduces risk: Eliminates human error by ensuring backups always run on schedule.

- Cost optimization: Lifecycle rules automatically move older backups to low-cost storage or expire them.

- Business continuity: Critical workloads (databases, applications, file systems) are always protected without daily manual effort.

Steps in the AWS Backup Console:

- Navigate to Backup Plans → Select Create Backup Plan.

- Choose a starting point → Use a pre-built AWS template for common workloads, or build a custom plan for specific business needs.

- Set schedules & windows → Define backup frequency (e.g., daily at midnight) and choose backup windows during off-peak hours.

- Apply lifecycle policies → Example: transition to cold storage after 30 days, delete after 1 year.

- Assign resources → Attach workloads such as EC2, RDS, DynamoDB, EFS, or S3.

Once created, AWS Backup executes the plan automatically, helping SMBs reduce manual IT tasks, maintain compliance, and ensure reliable data protection.
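
The schedule and lifecycle example above (daily at midnight, cold storage after 30 days, delete after 1 year) might be expressed as a plan document like the one in this sketch. The field names follow the AWS Backup plan structure as commonly documented; the rule name, window lengths, and the 90-day minimum stay in cold storage enforced by the check are assumptions to confirm against AWS documentation.

```python
MIN_COLD_STORAGE_DAYS = 90  # assumed minimum time a backup must remain in cold storage


def daily_plan(plan_name: str, vault_name: str,
               cold_after_days: int = 30, delete_after_days: int = 365) -> dict:
    """Build a backup plan with one daily rule and a cold-storage lifecycle."""
    if delete_after_days < cold_after_days + MIN_COLD_STORAGE_DAYS:
        raise ValueError("Deletion must come at least 90 days after the cold-storage move")
    return {
        "BackupPlanName": plan_name,
        "Rules": [{
            "RuleName": "daily-midnight-utc",
            "TargetBackupVaultName": vault_name,
            "ScheduleExpression": "cron(0 0 * * ? *)",  # every day at 00:00 UTC
            "StartWindowMinutes": 60,        # job must start within an hour
            "CompletionWindowMinutes": 180,  # and finish within three hours
            "Lifecycle": {
                "MoveToColdStorageAfterDays": cold_after_days,
                "DeleteAfterDays": delete_after_days,
            },
        }],
    }
```

Validating the lifecycle up front avoids discovering an invalid retention combination only when the plan is submitted.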

Step 5: Assign IAM roles & policies

Even the best backup plan is only as strong as the access controls protecting it. By configuring IAM roles and policies, SMBs ensure AWS Backup has the right permissions to perform its job while minimizing risks of misuse or unauthorized access.

Why this step matters:

- Security first: Backups often contain sensitive customer and business data, making them a prime target.

- Controlled access: Only authorized users and services should be able to view, modify, or restore backups.

- Risk reduction: Enforcing least-privilege access reduces insider threats and accidental mishandling of data.

Steps in the AWS Console:

- Create a service role → Go to IAM → Roles and add a role that AWS Backup can assume, attaching the managed policy AWSBackupServiceRolePolicyForBackup so it can perform backups and AWSBackupServiceRolePolicyForRestores so it can perform restores.

- Define user policies → Decide which team members can initiate restores, view vaults, or modify backup plans.

- Apply least privilege → Grant only the specific permissions needed for each role to reduce security risks.

- Enable logging for compliance → Use AWS CloudTrail to record every action taken on backups for auditing and regulatory reporting.

With IAM roles and CloudTrail logging in place, SMBs can maintain strong access control while ensuring backup activities remain auditable and compliant.
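
For reference, the service role described above needs a trust policy that lets AWS Backup assume it. The sketch below generates that document and lists the two AWS managed policies typically attached for backup and restore permissions. The ARNs reflect the commonly documented names and should be verified in the IAM console before use.

```python
import json

# AWS managed policies for backup and restore; confirm the ARNs in your account.
BACKUP_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForBackup",
    "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForRestores",
]


def backup_trust_policy() -> str:
    """Return a JSON trust policy allowing the AWS Backup service to assume the role."""
    document = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "backup.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    return json.dumps(document, indent=2)


print(backup_trust_policy())
```

Human users who start restores or edit plans should get separate, narrower policies rather than sharing this service role.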

Step 6: Test restores (don’t skip this!)

Too many SMBs assume backups will “just work” when disaster strikes, but that assumption can be costly. The real measure of a backup strategy isn’t how much data it stores; it’s how quickly and reliably SMBs can restore that data when needed. Testing restores ensures their safety net is strong and ready.

Why this step matters:

- Backups only matter if they can be restored. Regular test restores confirm that data is intact, recovery processes work as expected, and the backup system can be trusted during a real incident.

- Successful restores ensure that critical applications and datasets can come back online within defined Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs). This reduces downtime risk and keeps day-to-day operations running with minimal disruption.

- Documented restore tests provide concrete evidence for compliance audits, regulatory reviews, and internal governance. This not only satisfies industry mandates (HIPAA, FINRA, GDPR, etc.) but also demonstrates proactive risk management to stakeholders.

Steps in the AWS Backup console:

- Select a backup job → Open the Backup console, navigate to the backup job, and choose Restore.

- Restore to a new resource → Always direct restores to a new resource (e.g., a fresh EC2 volume or test RDS instance) instead of overwriting production.

- Validate the recovery → Confirm that data integrity holds and applications dependent on the restored resource run smoothly.

- Document the process → Record steps, outcomes, and any issues. This helps train teams and provides evidence for compliance audits.

By practicing restores regularly, SMBs not only build confidence in recovery but also strengthen both resilience and compliance posture.
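
Documenting a restore drill can be as simple as recording when it started, when it finished, and whether the data checked out, then comparing the duration to the RTO. The record structure below is a hypothetical sketch, not an AWS Backup feature.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RestoreDrill:
    resource: str
    started: datetime
    finished: datetime
    data_verified: bool   # outcome of the manual or scripted integrity check
    rto_minutes: int      # target agreed for this workload

    @property
    def duration_minutes(self) -> float:
        return (self.finished - self.started).total_seconds() / 60

    def passed(self) -> bool:
        """A drill passes only if data was verified and the RTO was met."""
        return self.data_verified and self.duration_minutes <= self.rto_minutes

    def report(self) -> dict:
        """Summarize the drill in a form that can be filed as audit evidence."""
        return {
            "resource": self.resource,
            "duration_minutes": self.duration_minutes,
            "rto_minutes": self.rto_minutes,
            "passed": self.passed(),
        }
```

Storing these reports over time gives auditors a trail of evidence and shows whether recovery times are trending in the right direction.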

Step 7: Monitor & optimize

A backup strategy is never “set it and forget it.” For SMBs, ongoing monitoring and optimization ensure that backups remain compliant, cost-effective, and reliable as business needs evolve. Treat this step as continuous maintenance. Small adjustments now can prevent major headaches later.

Why this step matters:

- Compliance assurance: Proves to regulators and auditors that data retention rules are being followed.

- Cost efficiency: Prevents overspending by optimizing storage classes and retention schedules.

- Operational resilience: Catches failures early, reducing downtime risk.

Key steps in AWS:

- Enable AWS Backup Audit Manager → Continuously track adherence to defined backup policies (e.g., frequency, retention) and generate audit-ready reports for regulators or stakeholders.

- Monitor costs with AWS Cost Explorer → Analyze spending patterns to identify optimization opportunities, such as moving older backups to the AWS Backup cold storage tier (for resource types that support it) or adjusting retention periods.

- Set real-time alerts with Amazon CloudWatch → Receive notifications for failed, delayed, or missed backup jobs, allowing quick remediation before data exposure risks arise.

- Review lifecycle rules regularly → Revisit backup frequency, retention, and storage class transitions to maintain the right balance between availability, compliance, and cost control.

Combining compliance automation, proactive monitoring, and smart cost management allows SMBs to ensure backups remain secure, efficient, and audit-proof over time.
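
One possible way to drive alerts for failed jobs is an event rule that matches AWS Backup job state changes. This is an alternative to alarming on metrics, and the event fields used here (`source`, `detail-type`, `detail.state`) and the set of failure states are assumptions based on the commonly documented event format. The sketch defines such a pattern and a local matcher for testing it.

```python
FAILURE_STATES = {"FAILED", "ABORTED", "EXPIRED"}  # assumed states worth alerting on

# Pattern in the shape an event rule would use to select failed backup jobs.
EVENT_PATTERN = {
    "source": ["aws.backup"],
    "detail-type": ["Backup Job State Change"],
    "detail": {"state": sorted(FAILURE_STATES)},
}


def needs_attention(event: dict) -> bool:
    """Local equivalent of the pattern above, useful for unit-testing alert logic."""
    return (
        event.get("source") == "aws.backup"
        and event.get("detail-type") == "Backup Job State Change"
        and event.get("detail", {}).get("state") in FAILURE_STATES
    )
```

Matching events could then be routed to a notification topic or ticketing system so failures are handled before the next backup window.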

Final Outcome: SMBs gain a centralized, automated, and compliant backup system on AWS that cuts down on manual IT work, reduces downtime risk, and protects against data loss.

Data backup isn’t enough. SMBs also need resilience, compliance, and cost control. As an AWS Advanced Tier Partner, Cloudtech helps SMBs unlock the full potential of AWS Backup, combining certified expertise with SMB-focused strategies to ensure workloads stay protected, recoverable, and audit-ready.

How does Cloudtech help SMBs set up and maintain AWS Backup?

Protecting business-critical data in the cloud isn’t just about turning on backups. It’s about designing a resilient, compliant, and cost-efficient protection strategy. Cloudtech, as an AWS Advanced Tier Partner, helps SMBs implement AWS Backup with proven blueprints, automated policies, and ongoing monitoring that align with business goals. This ensures backups don’t just exist, but actually safeguard operations while meeting budget and compliance needs.

Key Cloudtech services for AWS Backup setup and management:

- Backup vault and plan design: Cloudtech configures vaults, lifecycle policies, and schedules that balance cost control with data retention and recovery needs.

- Role-based access and compliance: Using AWS IAM and CloudTrail, Cloudtech sets least-privilege access and audit trails, ensuring backups are secure and industry regulations are met.

- Automated monitoring and reporting: With AWS Backup Audit Manager and CloudWatch alerts, Cloudtech ensures issues are detected early and compliance reports are always audit-ready.

- Recovery testing and validation: Regular restore drills are carried out to verify backup integrity, train teams, and strengthen business continuity.

- Cost optimization: Cloudtech helps SMBs minimize storage costs by applying lifecycle policies, using cold storage for long-term data, and using AWS Cost Explorer for ongoing savings insights.

Through these services, SMBs gain not just data backups, but a resilient and compliant backup strategy that scales with their business, reduces risk, and ensures they can recover quickly when it matters most.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Partnering with an AWS expert like Cloudtech ensures this isn’t just a basic backup setup, but a fully managed, compliant, and cost-optimized strategy. Cloudtech designs backup environments tailored to SMB needs, automates policies, validates recovery, and provides continuous oversight so data is always protected and always recoverable.

With Cloudtech, SMBs can move beyond fragile, expensive backup systems and embrace a cloud foundation that strengthens resilience, ensures compliance, and frees teams to focus on growth.

Connect with Cloudtech today to build a backup strategy that’s as dynamic as your business.

FAQs

1. Can AWS Backup support both cloud-native and hybrid workloads?

Yes. AWS Backup natively protects AWS resources, but it can also extend to on-premises workloads through AWS Storage Gateway, giving SMBs a unified backup strategy across hybrid environments.

2. How does AWS Backup handle data encryption?

All backups are encrypted at rest using AWS Key Management Service (KMS). This ensures that sensitive business data remains secure and compliant with industry standards.

3. What’s the difference between AWS Backup and manual snapshotting?

Snapshots require manual setup and tracking, while AWS Backup automates scheduling, retention, and lifecycle policies. This reduces human error, improves consistency, and saves operational time.

4. How can SMBs avoid paying for unnecessary backup storage?

By setting lifecycle policies, older backups can automatically transition to the lower-cost cold storage tier in AWS Backup (for supported resource types) and expire once retention requirements are met, optimizing spend without sacrificing compliance.

5. What kind of monitoring and reporting does AWS Backup provide?

Through AWS Backup Audit Manager, businesses get compliance dashboards, audit-ready reports, and detailed logs to demonstrate adherence to regulations and internal policies.

Countering new cyber threats with cloud-native security

Ransomware isn’t slowing down. In 2024 alone, global ransomware incidents surged by 37%, making up 44% of all data breaches, according to the Verizon 2025 Data Breach Investigations Report. These numbers reveal a hard truth: traditional, perimeter-based defenses are no longer enough.

To stay resilient, businesses must adopt security models designed for modern, distributed environments. This is where cloud-native security comes in, an approach that embeds protection directly into applications, workloads, and infrastructure, ensuring threats are contained, compliance is maintained, and sensitive data is safeguarded in real time.

This article explains how cloud-native security provides the agility, automation, and resilience needed to stay ahead of modern cyber threats.

Key takeaways:

- Cloud-native security is critical for SMBs: Containers, serverless, and microservices require adaptive, automated protection beyond traditional perimeter security.

- AWS offers scalable, integrated tools: Amazon GuardDuty, AWS Security Hub, AWS IAM, and AWS Audit Manager enable real-time monitoring, threat detection, and automated response.

- Automation minimizes risk and overhead: Continuous compliance checks, vulnerability scanning, and policy enforcement reduce manual effort.

- Security supports agile development: Cloud-native security integrates with CI/CD pipelines, keeping development fast and safe.

- Cloudtech adds expert value: SMBs gain a managed, scalable, and compliant security framework that protects workloads while enabling growth.

What is cloud-native security? A detailed overview

Cloud-native security is the practice of embedding security directly into applications and infrastructure that are built and operated in cloud environments. It ensures that protection is integrated across every stage of the application lifecycle, from development and deployment to runtime operations.

It addresses the dynamic, distributed, and API-driven nature of modern workloads, so that security is scalable, automated, and resilient.

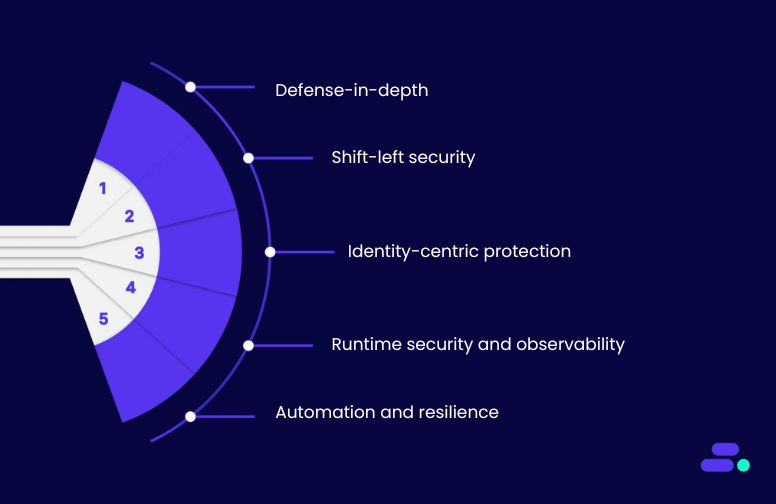

Core principles of cloud-native security:

- Defense-in-depth: Security is layered across compute, storage, networking, data, and APIs. Encryption, workload isolation, and continuous monitoring are standard practices to prevent unauthorized access and data breaches.

- Shift-left security: Security is implemented early in the software development lifecycle. Vulnerability scanning, policy validation, and automated checks are built into CI/CD pipelines to catch risks before deployment.

- Identity-centric protection: Identity and access management (IAM) becomes the new security perimeter. Short-lived credentials, role-based access control, and multi-factor authentication replace static credentials, aligning with Zero Trust.

- Runtime security and observability: Applications are continuously monitored for anomalous behavior, privilege escalation attempts, and suspicious traffic. Service meshes, intrusion detection, and real-time logging enhance visibility at runtime.

- Automation and resilience: Cloud-native environments rely on automation for patching, scaling, and recovery. Security policies are codified and enforced automatically, while integrated backup and disaster recovery solutions ensure resilience.

How cloud-native security differs from legacy models: Traditional security emphasizes fixed perimeters and manual auditing. Cloud-native security, on the other hand, is adaptive and built around distributed workloads such as containers, serverless functions, and microservices.

It prioritizes continuous monitoring, automated policy enforcement, and the ability to respond dynamically to threats in real time.

Why cloud-native security matters for SMBs: It provides scalability, cost efficiency through pay-as-you-go security services, built-in compliance automation for regulated industries, and resilience through tight integration with cloud backup and disaster recovery strategies.

How does AWS help SMBs establish cloud-native security for their infrastructure?

AWS embeds protection at the core of its infrastructure. Security controls span compute, storage, networking, and databases, ensuring every workload inherits a consistent baseline of protection.

Under the AWS Shared Responsibility Model, SMBs know which areas AWS secures (the global infrastructure, data centers, and managed service layers) and which remain in their control (applications, data, identities, and resource configuration). This clarity helps lean teams avoid misconfigurations while focusing on business-critical needs.

But what truly sets AWS apart is the breadth and depth of its cloud-native security services. From identity and access management (IAM) to continuous threat detection (GuardDuty, Detective) to compliance automation (Config, Audit Manager), AWS offers enterprise-grade security without requiring complex third-party integrations.

Combined with automatic scalability, global compliance certifications, and seamless integration with DevOps pipelines, AWS enables SMBs to adopt “security as code” while staying cost-efficient. For growing businesses, this means faster innovation backed by security that evolves as they do.

The key features of AWS’s cloud-native security include:

1. Strong identity and access controls

Identity and access management forms the backbone of cloud-native security. In AWS, services like IAM and IAM Identity Center provide granular, least-privilege access to ensure that users and applications only get the permissions they need, and nothing more.

By combining this with features like multi-factor authentication (MFA), temporary credentials, and federated identity integration, SMBs can eliminate the risks of static credentials while maintaining agility as they scale.

How it secures modern applications:

- Minimizes attack surface by enforcing least-privilege policies across all users and workloads.

- Strengthens authentication through MFA and short-lived credentials, making account compromise far more difficult.

- Supports hybrid and multi-cloud use cases with federated identity, ensuring consistent, secure access across environments.

Use case: A financial services SMB runs a customer-facing loan processing platform on AWS. Instead of using long-term static keys, the development team authenticates via IAM Identity Center with MFA enabled.

Each developer gets time-limited credentials tied to specific roles, meaning they can only access approved resources for the duration of their work session. If a session’s credentials are ever exposed, they expire quickly and carry only that role’s permissions, which limits how far an attacker can escalate privileges or persist in the system and protects both customer data and compliance posture.
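To make least privilege concrete, here is a minimal sketch of a scoped IAM policy document built in Python, together with a small check that flags overly broad statements before a policy is attached. The bucket name, prefix, and `Sid` are hypothetical. MFA enforcement and session duration would be configured in IAM Identity Center and are not shown here.

```python
import json


def build_scoped_policy(bucket_name, prefix):
    """Allow read-only access to a single S3 prefix and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadLoanDocuments",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/{prefix}/*",
            }
        ],
    }


def find_wildcards(policy):
    """Return the Sids of Allow statements that use a bare '*' action or resource."""
    flagged = []
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # IAM accepts either a single string or a list for both fields.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        if "*" in actions or "*" in resources:
            flagged.append(stmt.get("Sid", "<no Sid>"))
    return flagged


scoped = build_scoped_policy("example-loan-docs", "applications")
too_broad = {
    "Version": "2012-10-17",
    "Statement": [{"Sid": "AdminAll", "Effect": "Allow", "Action": "*", "Resource": "*"}],
}

print(json.dumps(scoped, indent=2))
print(find_wildcards(scoped))     # no statements flagged
print(find_wildcards(too_broad))  # flags the "AdminAll" statement
```

A check like `find_wildcards` is deliberately simple. It only catches bare `*` values, so it complements a fuller review tool such as IAM Access Analyzer rather than replacing it.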

2. Secure network architecture

A secure network foundation is critical for protecting cloud-native applications. AWS enables SMBs to build Virtual Private Clouds (VPCs) with isolated subnets, controlled routing, and fine-grained security groups, ensuring workloads are segmented and protected from unauthorized access.

Integrated services like AWS WAF and AWS Shield defend against external threats, including DDoS attacks, while allowing the network perimeter to adapt dynamically as workloads scale.

How it secures modern applications:

- Limits exposure by isolating sensitive workloads in private subnets and enforcing strict traffic rules.

- Protects against external threats using WAF for application-layer filtering and Shield for DDoS mitigation.

- Supports scalable, resilient architectures with centralized gateways, NAT, and routing that automatically adapt to changing workloads.

Use case: A healthcare SMB hosts patient records and appointment scheduling applications in AWS. Each application runs in a separate VPC with private subnets for sensitive databases and public subnets for web interfaces.

AWS WAF blocks malicious requests, while Shield automatically mitigates potential DDoS attacks. The team confidently scales their services, knowing sensitive data remains isolated and protected from both internal and external threats.
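The segmentation described above can be expressed as a simple rule: public-facing tiers may accept traffic from anywhere, while private tiers should accept traffic only from other security groups. The sketch below models ingress rules with hypothetical names (`sg-web`, port 5432 for a database) and flags any rule that would open a private tier to the whole internet. It does not call AWS. It is a local audit over data that one might export from security group definitions.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Optional


@dataclass(frozen=True)
class IngressRule:
    port: int
    cidr: Optional[str] = None          # e.g. "0.0.0.0/0"
    source_group: Optional[str] = None  # e.g. "sg-web" (hypothetical group id)


def is_open_to_internet(rule):
    """True when the rule admits traffic from every IPv4 or IPv6 address."""
    return rule.cidr is not None and ip_network(rule.cidr).prefixlen == 0


def audit_private_tier(rules):
    """Return the rules that would expose a private-subnet workload."""
    return [r for r in rules if is_open_to_internet(r)]


# Web tier: HTTPS from anywhere is expected for a public interface.
web_tier = [IngressRule(port=443, cidr="0.0.0.0/0")]
# Database tier: reachable only from the web tier's security group.
db_tier = [IngressRule(port=5432, source_group="sg-web")]
# A misconfiguration someone might add while debugging.
misconfigured_db = db_tier + [IngressRule(port=5432, cidr="0.0.0.0/0")]

print(audit_private_tier(db_tier))           # nothing to report
print(audit_private_tier(misconfigured_db))  # reports the open 5432 rule
```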

3. Data protection by default

Protecting data at all times is a cornerstone of cloud-native security. AWS services such as Amazon S3, Amazon RDS, and Amazon DynamoDB provide built-in encryption at rest using AWS Key Management Service (KMS) and encryption in transit using TLS.

SMBs can use AWS-managed keys for simplicity or opt for customer-managed keys to meet strict regulatory requirements, ensuring data remains secure without complicating operations.

How it secures modern applications:

- Prevents unauthorized access by encrypting data automatically, reducing the risk of breaches.

- Supports regulatory compliance through customizable key management and auditing capabilities.

- Protects data in motion and at rest across storage, databases, and backups, ensuring end-to-end security.

Use case: A fintech SMB stores sensitive transaction records in Amazon RDS and customer documents in S3. Using AWS KMS with customer-managed keys, the team encrypts all data at rest, while TLS ensures encryption in transit.

Even when accessing data for reporting or analytics, encryption remains active, giving the SMB confidence that customer information is secure and compliant with PCI-DSS standards.
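For the S3 side of this use case, the two controls can be sketched as plain configuration documents: a default-encryption setting that points at a customer-managed KMS key, and a bucket policy that denies any request not made over TLS. The bucket name and key ARN below are placeholders. The documents follow the shapes used by the S3 bucket-encryption and bucket-policy APIs, but this sketch only builds them and does not apply them.

```python
import json

# Placeholder ARN for a customer-managed key; substitute a real one.
KMS_KEY_ARN = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"


def default_encryption_config(kms_key_arn):
    """Encrypt new objects with the given KMS key unless a request says otherwise."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,  # reduces the number of KMS requests
            }
        ]
    }


def deny_insecure_transport_policy(bucket_name):
    """Deny every S3 action on the bucket when the request is not sent over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


encryption = default_encryption_config(KMS_KEY_ARN)
policy = deny_insecure_transport_policy("example-customer-docs")
print(json.dumps(encryption, indent=2))
print(json.dumps(policy, indent=2))
```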

4. Always-on monitoring and threat detection

Continuous monitoring is essential for cloud-native security. AWS provides services like Amazon GuardDuty, AWS Security Hub, and Amazon Detective that give SMBs real-time visibility into their security posture.

These tools detect unusual behavior, surface actionable insights, and enable rapid response, without the need for large, dedicated security teams.

How it secures modern applications:

- Detects threats proactively by analyzing logs, network activity, and API calls to identify suspicious patterns.

- Provides centralized visibility across accounts and workloads, helping SMBs maintain a holistic security posture.

- Enables rapid response with automated alerts and integration with remediation tools, reducing potential damage from incidents.

Use case: A healthcare SMB runs patient management and telehealth applications on AWS. GuardDuty continuously monitors for anomalous API calls, while Security Hub aggregates alerts and Detective traces potential breaches.

When a suspicious login attempt is detected, the team is immediately notified and can remediate before any sensitive patient data is compromised, maintaining compliance with HIPAA standards.
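One common way to wire up this kind of notification is an Amazon EventBridge rule that matches GuardDuty findings above a chosen severity and forwards them to an alerting target. The sketch below shows such an event pattern as a Python dictionary, plus a local stand-in function that applies the same filter to sample events for unit testing. The threshold of 7.0 is an assumption about team policy, and the sample events are made up.

```python
import json

# Assumed team policy: alert immediately on findings with severity 7.0 or higher.
ALERT_THRESHOLD = 7.0

# EventBridge event pattern that matches GuardDuty findings at or above the threshold.
EVENT_PATTERN = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
    "detail": {"severity": [{"numeric": [">=", ALERT_THRESHOLD]}]},
}


def should_alert(event, threshold=ALERT_THRESHOLD):
    """Local stand-in for the pattern above, handy for testing alert logic offline."""
    return (
        event.get("source") == "aws.guardduty"
        and event.get("detail-type") == "GuardDuty Finding"
        and float(event.get("detail", {}).get("severity", 0)) >= threshold
    )


# Made-up events for illustration only.
high = {"source": "aws.guardduty", "detail-type": "GuardDuty Finding", "detail": {"severity": 8}}
low = {"source": "aws.guardduty", "detail-type": "GuardDuty Finding", "detail": {"severity": 2}}
other = {"source": "aws.ec2", "detail-type": "EC2 Instance State-change Notification", "detail": {}}

print(json.dumps(EVENT_PATTERN, indent=2))
print([should_alert(e) for e in (high, low, other)])
```

Filtering by severity at the rule level keeps low-priority findings in Security Hub for later review while only the urgent ones reach the on-call channel.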

5. Built-in compliance and governance

Compliance is a continuous process, not a one-time checklist. AWS provides services such as AWS Config, AWS Audit Manager, and AWS Control Tower to help SMBs maintain alignment with industry standards like HIPAA, GDPR, and PCI-DSS.

These tools automate policy enforcement, continuously monitor configurations, and generate audit-ready reports, reducing manual overhead and human error.

How it secures modern applications:

- Ensures ongoing compliance by automatically checking configurations against regulatory frameworks.

- Simplifies audits with pre-built reporting and documentation, saving SMBs time and resources.

- Integrates governance into operations so security and compliance are enforced continuously, not just during periodic reviews.

Use case: A fintech SMB operates multiple AWS accounts across development, testing, and production environments. Using AWS Control Tower, the team enforces consistent policies across all accounts.

Config continuously monitors changes in resource configurations, while Audit Manager produces compliance reports for PCI-DSS and GDPR. This enables the SMB to confidently run applications and handle sensitive customer data without worrying about non-compliance or manual audit burdens.
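Continuous configuration checks of this kind boil down to a function that looks at a resource's recorded configuration and returns a compliance verdict. The sketch below shows that evaluation step for a hypothetical tagging policy, using a simplified configuration item and the evaluation fields that AWS Config expects from a custom rule. The required tag names and the sample resources are assumptions. AWS Config also offers a managed required-tags rule, so this is only meant to illustrate the logic.

```python
# Hypothetical policy: every recorded resource must carry these tag keys.
REQUIRED_TAGS = {"environment", "data-classification"}


def evaluate_required_tags(configuration_item, required=REQUIRED_TAGS):
    """Return an evaluation record for one simplified configuration item."""
    tags = configuration_item.get("tags") or {}
    missing = sorted(set(required) - set(tags))
    return {
        "ComplianceResourceType": configuration_item["resourceType"],
        "ComplianceResourceId": configuration_item["resourceId"],
        "ComplianceType": "NON_COMPLIANT" if missing else "COMPLIANT",
        "Annotation": (
            "Missing tags: " + ", ".join(missing) if missing else "All required tags present"
        ),
        "OrderingTimestamp": configuration_item["configurationItemCaptureTime"],
    }


# Simplified, made-up configuration items.
tagged_bucket = {
    "resourceType": "AWS::S3::Bucket",
    "resourceId": "example-prod-statements",
    "configurationItemCaptureTime": "2025-01-01T00:00:00Z",
    "tags": {"environment": "production", "data-classification": "confidential"},
}
untagged_bucket = {
    "resourceType": "AWS::S3::Bucket",
    "resourceId": "example-dev-scratch",
    "configurationItemCaptureTime": "2025-01-01T00:00:00Z",
    "tags": {"environment": "development"},
}

for item in (tagged_bucket, untagged_bucket):
    result = evaluate_required_tags(item)
    print(result["ComplianceResourceId"], result["ComplianceType"], "-", result["Annotation"])
```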

6. Workload and application protection

Modern applications often use containers, serverless functions, and microservices, which require specialized security approaches. AWS provides tools like Amazon Inspector, AWS Fargate security features, and Amazon EKS integrations to automatically detect vulnerabilities, enforce best practices, and protect workloads without disrupting development workflows.

How it secures modern applications:

- Detects vulnerabilities proactively in containers, serverless functions, and orchestrated workloads.

- Integrates seamlessly with DevOps pipelines, ensuring security checks happen continuously without slowing releases.

- Enforces runtime protection to safeguard applications while they operate, reducing the risk of exploitation.

Use case: An SMB developing a SaaS analytics platform deploys microservices on Amazon EKS and serverless APIs with AWS Lambda. Amazon Inspector scans container images and Lambda functions for known vulnerabilities before deployment. Where pods run on AWS Fargate, each one gets its own isolated compute environment at runtime.

This allows the development team to iterate rapidly while ensuring that each service remains secure, compliant, and resilient against attacks.
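A pre-deployment scan usually ends in a pipeline gate: the build continues only if no finding exceeds an agreed severity. The sketch below shows one way such a gate might look, using a simplified finding format with hypothetical ids and resource names. The blocking severities are an assumed team policy, and a real pipeline would read findings exported from the scanner instead of the inline sample.

```python
# Assumed team policy: these severities stop a release.
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def blocking_findings(findings, blocking=BLOCKING_SEVERITIES):
    """Return only the findings severe enough to block deployment."""
    return [f for f in findings if f.get("severity", "").upper() in blocking]


def gate(findings):
    """Return a process exit code: 0 lets the pipeline continue, 1 stops it."""
    blockers = blocking_findings(findings)
    for f in blockers:
        print(f"BLOCKED: {f['id']} ({f['severity']}) in {f['resource']}")
    return 1 if blockers else 0


# Made-up findings in a simplified shape; ids and resources are placeholders.
sample_findings = [
    {"id": "EXAMPLE-001", "severity": "LOW", "resource": "analytics-api:1.4.2"},
    {"id": "EXAMPLE-002", "severity": "CRITICAL", "resource": "report-worker:2.0.0"},
    {"id": "EXAMPLE-003", "severity": "MEDIUM", "resource": "ingest-lambda"},
]

exit_code = gate(sample_findings)
print("exit code:", exit_code)
```

In a CI job, the script would finish by passing `exit_code` to `sys.exit`, so that a non-zero result fails the stage and the image never reaches the cluster.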

By combining these capabilities, AWS enables SMBs to move beyond perimeter-based models and adopt security as code: automated, adaptive, and deeply integrated into every workload.

Partnering with an AWS expert like Cloudtech can accelerate SMBs’ cloud-native security journey by combining technical know-how with practical experience. An AWS partner helps design robust architectures, implement best-practice controls, and navigate compliance requirements, reducing misconfigurations, operational overhead, and risk.

How does Cloudtech help SMBs implement and maintain cloud-native security?

Securing modern applications in the cloud isn’t just about enabling individual controls. It’s about building a resilient, automated, and compliant security posture across all workloads. Cloudtech, as an AWS Advanced Tier Partner, helps SMBs implement cloud-native security using AWS best practices, tools, and automation, ensuring applications remain protected while supporting business growth.

Key Cloudtech services for cloud-native security:

- Identity and access governance: Cloudtech configures AWS IAM, IAM Identity Center, and federated identities to enforce least-privilege access, MFA, and break-glass procedures, minimizing internal and external risks.