Resources

Find the latest news & updates on AWS

Cloudtech Has Earned AWS Advanced Tier Partner Status

We’re honored to announce that Cloudtech has officially secured AWS Advanced Tier Partner status within the Amazon Web Services (AWS) Partner Network! This significant achievement highlights our expertise in AWS cloud modernization and reinforces our commitment to delivering transformative solutions for our clients.

As an AWS Advanced Tier Partner, Cloudtech has been recognized for its exceptional capabilities in cloud data, application, and infrastructure modernization. This milestone underscores our dedication to excellence and our proven ability to leverage AWS technologies for outstanding results.

A Message from Our CEO

“Achieving AWS Advanced Tier Partner status is a pivotal moment for Cloudtech,” said Kamran Adil, CEO. “This recognition not only validates our expertise in delivering advanced cloud solutions but also reflects the hard work and dedication of our team in harnessing the power of AWS services.”

What This Means for Us

To reach Advanced Tier Partner status, Cloudtech demonstrated an in-depth understanding of AWS services and a solid track record of successful, high-quality implementations. This achievement comes with enhanced benefits, including advanced technical support, exclusive training resources, and closer collaboration with AWS sales and marketing teams.

Elevating Our Cloud Offerings

With our new status, Cloudtech is poised to enhance our cloud solutions even further. We provide a range of services, including:

- Data Modernization

- Application Modernization

- Infrastructure and Resiliency Solutions

By utilizing AWS’s cutting-edge tools and services, we equip startups and enterprises with scalable, secure solutions that accelerate digital transformation and optimize operational efficiency.

We're excited to share this news right after the launch of our new website and fresh branding! These updates reflect our commitment to innovation and excellence in the ever-changing cloud landscape. Our new look truly captures our mission: to empower businesses with personalized cloud modernization solutions that drive success. We can't wait for you to explore it all!

Stay tuned as we continue to innovate and drive impactful outcomes for our diverse client portfolio.

Harnessing cloud native application development for faster innovation

Why do traditional applications struggle to keep up with modern business demands? A main reason is scaling: adding capacity means manually provisioning more servers or hardware, which makes innovation costly and time-consuming. Cloud native application development changes this by using the cloud’s flexibility, automation, and distributed architecture.

Consider a small fintech startup managing a legacy payment platform. Deploying new features takes weeks, and scaling to handle spikes in transactions risks outages or performance issues. With cloud native development, applications are built as modular, containerized services that scale automatically, update seamlessly, and integrate with advanced tools like AI or analytics. This allows teams to deliver new features faster, maintain high reliability, and respond to market changes without heavy IT overhead.

This article explores why cloud native application development is essential for SMBs seeking speed, agility, and scalable innovation in today’s digital-first world.

Key takeaways:

- Cloud-native applications help SMBs innovate faster with built-in scalability, security, and automation.

- Modern app development enables seamless integration with AI, analytics, and legacy systems.

- Pay-as-you-go cloud models reduce upfront investment and align costs with actual usage.

- With cloud apps, SMBs can focus on business growth instead of infrastructure management.

- Partnering with an AWS expert like Cloudtech ensures compliant, resilient, and future-ready applications.

From monoliths to microservices: Understanding cloud application development

Traditional applications are typically built as monoliths, where all components are bundled together in a single codebase. While this structure may have worked in the past, it creates several technical bottlenecks.

Deploying a small change often requires rebuilding and redeploying the entire application, scaling a single component (like payment processing or patient record retrieval) means scaling the whole system, and a failure in one module can bring down the entire app. For SMBs, this translates to longer release cycles, higher maintenance costs, and greater downtime risk.

Cloud native development breaks applications into microservices: independently deployable modules, each responsible for a specific business function. These microservices communicate via lightweight APIs and can be containerized using tools like Docker and orchestrated with Kubernetes or Amazon ECS/EKS.

The benefits include:

- Independent scaling: Each service can scale based on demand without affecting others, saving costs and improving performance.

- Faster updates and deployment: Teams can release, test, and roll back individual services independently, reducing downtime and speeding up innovation.

- Resilience: If one microservice fails, the rest of the application continues to function, improving reliability and uptime.

- Tech stack flexibility: Different services can use the most suitable programming languages or frameworks, letting SMBs experiment and adopt new technologies faster.

Transitioning from a monolithic to a microservices architecture allows SMBs to achieve operational agility, cost efficiency, and faster time-to-market.

How can SMBs develop cloud native applications using AWS?

Cloud native application development lets SMBs build applications specifically for the cloud using modular, containerized services that scale independently, deploy rapidly, and integrate effortlessly. This approach reduces downtime, simplifies updates, and enables automation, resiliency, and observability. These are benefits that apply to any application, from healthcare to fintech.

Take the example of developing a fintech app designed to handle digital payments, manage customer accounts, and provide real-time financial insights. This platform would enable users to transfer funds, track balances, generate transaction reports, and receive personalized analytics, all while ensuring security, compliance, and high availability.

Step 1: Define application requirements and goals

Before building the fintech app, the SMB must clearly define its functional and non-functional requirements, performance expectations, and compliance obligations.

Key considerations include:

- Core functionality: The platform should enable secure online payments, account management, transaction history tracking, and reporting dashboards for both users and administrators.

- Regulatory compliance: The application must adhere to PCI DSS for payment data, GDPR for personal data protection, and any relevant local financial regulations.

- Scalability targets: The system should support an initial user base of 10,000+, with seamless scaling during peak usage or rapid growth.

- Availability and resilience: Uptime targets (e.g., 99.9% SLA) and disaster recovery requirements, including multi-AZ deployment, should be established.

- Security and monitoring: Logging, auditing, and threat detection protocols should be defined to protect sensitive financial data.

AWS tools to use:

- AWS Well-Architected Tool: Evaluates cloud architecture against the six pillars of the AWS Well-Architected Framework: operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability. It highlights risks, suggests improvements, and helps SMBs align applications with best practices for scalable and maintainable solutions.

- AWS Cloud Adoption Framework (CAF): Guides organizations through cloud adoption by mapping business and technical capabilities to six perspectives: business, people, governance, platform, security, and operations. It identifies skill gaps, governance needs, and compliance requirements for a holistic migration and development strategy.

- AWS Trusted Advisor: Provides real-time recommendations on cost optimization, performance, security, fault tolerance, and service limits. SMBs can use it to fix misconfigurations, reduce overspending, improve resiliency, and optimize workloads before deployment or scaling.

Defining these requirements allows the SMB to establish a strong foundation, minimizing risks and ensuring a scalable, compliant, and efficient cloud-native application.
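The uptime target mentioned above can be turned into a concrete downtime budget. The sketch below is plain arithmetic, not an AWS API: the function names are made up for illustration, and the multi-zone estimate assumes zone failures are independent, which is an idealization.

```python
# Downtime budget implied by an availability target, plus a simplified
# estimate of combined availability across independent zones.

MINUTES_PER_DAY = 24 * 60


def downtime_budget_minutes(availability_pct: float, days: int) -> float:
    """Minutes of downtime allowed over `days` at the given availability."""
    return (1 - availability_pct / 100) * days * MINUTES_PER_DAY


def combined_availability(per_zone_pct: float, zones: int) -> float:
    """Availability if the service is up whenever at least one zone is up.

    Assumes zone failures are independent (an idealization).
    """
    all_zones_down = (1 - per_zone_pct / 100) ** zones
    return (1 - all_zones_down) * 100


if __name__ == "__main__":
    print(round(downtime_budget_minutes(99.9, 30), 1))   # 43.2 minutes per 30-day month
    print(round(downtime_budget_minutes(99.9, 365), 1))  # 525.6 minutes per year
    print(round(combined_availability(99.0, 2), 4))      # 99.99
```

A 99.9% target therefore leaves roughly 43 minutes of downtime per month, which is a useful number to have in hand when deciding how much redundancy the design needs.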

Step 2: Architect the app as microservices

Once requirements are defined, the SMB designs the application using a microservices architecture, breaking the platform into modular, independently deployable components. Each service focuses on a specific business capability, which improves scalability, maintainability, and fault isolation.

Core microservices might include:

- Payment processing service: Handles all transactions securely, integrates with payment gateways, and manages transaction validation.

- Account management service: Maintains user profiles, authentication, and authorization workflows.

- Analytics service: Collects and analyzes usage patterns, detects potential fraud, and provides actionable insights for decision-making.

AWS tools to implement microservices:

- Amazon ECS/Amazon EKS: Run containerized microservices in a fully managed, scalable environment. ECS provides simple container orchestration, while EKS leverages Kubernetes for advanced orchestration, enabling SMBs to deploy, scale, and manage services efficiently.

- AWS Lambda: Executes serverless functions for lightweight, event-driven tasks such as real-time fraud detection, notifications, or data transformations. It eliminates the need to manage servers and scales automatically with demand.

- Amazon API Gateway: Offers secure, fully managed APIs for communication between microservices and external clients. It supports request throttling, authentication, and monitoring, ensuring reliable and controlled access.

- Amazon SQS/SNS/EventBridge: Provide asynchronous messaging and event-driven communication. SQS queues messages for processing, SNS broadcasts notifications, and EventBridge routes events across services, decoupling components and enhancing reliability.

Decomposing the fintech platform into microservices and using AWS services enables SMBs to update, scale, and deploy features independently, reducing downtime and accelerating innovation.
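As a rough illustration of the event-driven pattern, here is what a Lambda function consuming payment events from an SQS queue might look like. The `Records`, `body`, and `messageId` fields and the `batchItemFailures` response follow the SQS event format for Lambda. The review threshold, the payload fields (`payment_id`, `amount`), and the helper names are assumptions made up for this sketch. Partial batch responses only take effect when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json

# Hypothetical rule for illustration; a real service would call a model
# or a rules engine instead of a fixed threshold.
REVIEW_THRESHOLD = 10_000


def process_batch(records):
    """Split a batch of SQS records into flagged payment IDs and failures."""
    flagged, failures = [], []
    for record in records:
        try:
            payment = json.loads(record["body"])
            if payment["amount"] > REVIEW_THRESHOLD:
                flagged.append(payment["payment_id"])
        except (KeyError, TypeError, ValueError):
            # Mark only this message as failed so the rest of the batch
            # is not retried.
            failures.append({"itemIdentifier": record["messageId"]})
    return flagged, failures


def handler(event, context):
    """Lambda entry point for an SQS-triggered function."""
    flagged, failures = process_batch(event.get("Records", []))
    if flagged:
        print(json.dumps({"flagged_for_review": flagged}))
    return {"batchItemFailures": failures}
```

Because the queue sits between the payment service and this function, a slow or failing fraud check delays only its own messages rather than blocking payment processing.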

Step 3: Build and containerize services

After architecting the fintech platform, each microservice is developed and packaged independently to enable agile development and seamless deployment. This ensures that updates to one service do not disrupt others, while maintaining consistent performance and reliability.

Examples include:

- Payment processing service: Packaged in a Docker container to ensure portability and consistent runtime across environments.

- Analytics service: Encapsulates Python code and ML models within a container for automated data processing and fraud detection.

- Testing pipelines: Each microservice has its own testing workflow, ensuring quality and isolating issues before deployment.

AWS tools for containerization and CI/CD:

- AWS CodeBuild: Provides fully managed build services to compile source code, run tests, and produce container images for each microservice, ensuring fast, repeatable, and isolated builds.

- AWS CodeArtifact: Acts as a secure artifact repository that stores, versions, and shares dependencies across teams, preventing conflicts and ensuring compliance with governance policies.

- AWS CodePipeline: Automates the end-to-end CI/CD workflow, integrating with CodeBuild, testing stages, and deployment targets so each microservice can be released independently and reliably.

Containerizing services and establishing CI/CD pipelines with AWS tools allows SMBs to release updates faster, reduce operational risk, and maintain high availability for their fintech platform.
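To make the idea of per-service testing concrete, the sketch below shows the kind of small, isolated check a build stage could run for the payment service before producing an image. The validation rules, the supported currencies, and the function names are assumptions for illustration, not the platform's actual logic.

```python
from decimal import Decimal, InvalidOperation

SUPPORTED_CURRENCIES = {"USD", "EUR", "GBP"}  # assumption for illustration


def validate_payment(amount: str, currency: str) -> list[str]:
    """Return a list of validation errors; an empty list means valid."""
    errors = []
    try:
        value = Decimal(amount)
        if not value.is_finite() or value <= 0:
            errors.append("amount must be positive")
        elif value.as_tuple().exponent < -2:
            errors.append("amount has more than two decimal places")
    except InvalidOperation:
        errors.append("amount is not a number")
    if currency not in SUPPORTED_CURRENCIES:
        errors.append("unsupported currency")
    return errors


def run_checks() -> None:
    """Checks a per-service build stage could run before packaging."""
    assert validate_payment("19.99", "USD") == []
    assert validate_payment("-5", "USD") == ["amount must be positive"]
    assert validate_payment("abc", "USD") == ["amount is not a number"]
    assert validate_payment("1.999", "JPY") == [
        "amount has more than two decimal places",
        "unsupported currency",
    ]


if __name__ == "__main__":
    run_checks()
    print("payment validation checks passed")
```

Keeping checks like these inside each service's own repository lets a failing test block only that service's release.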

Step 4: Set up CI/CD and automation

To ensure updates are deployed reliably and safely, the fintech startup implements automated CI/CD pipelines and deployment strategies. This allows the team to test and release new features without impacting live services, maintaining high availability for end users.

Examples include:

- Isolated testing: Payment features and other critical updates are tested independently before deployment, reducing the risk of bugs affecting the platform.

- Blue/Green deployments: Critical services like payment processing leverage blue/green strategies to switch traffic seamlessly between environments, minimizing downtime and operational risk.

AWS tools for CI/CD and automation:

- AWS CodePipeline + CodeDeploy: Automates build, test, and deployment workflows, using blue/green or canary strategies to update microservices with minimal downtime and controlled rollouts.

- AWS CloudFormation/AWS CDK: Enables infrastructure as code, allowing teams to define, version, and consistently provision AWS resources across environments with repeatability and governance.

- AWS X-Ray: Provides distributed tracing to track requests through microservices, helping pinpoint performance bottlenecks, errors, and latency issues for faster debugging and root cause analysis.

Combining automated CI/CD pipelines with AWS’s deployment and tracing tools allows SMBs to safely roll out updates, scale confidently, and maintain uninterrupted service for their fintech platform.
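The logic behind a canary rollout can be sketched in a few lines. This is a simplified model, not the CodeDeploy API: `run_canary`, the traffic steps, and the `error_rate_at` probe are hypothetical names, and a managed deployment service would handle the actual traffic shifting and alarm checks.

```python
from typing import Callable, Sequence


def run_canary(
    steps: Sequence[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> tuple[str, int]:
    """Shift traffic to the new version step by step.

    steps: ascending percentages of traffic sent to the new version.
    error_rate_at: hypothetical probe returning the new version's
        observed error rate at a given traffic percentage.
    Returns the outcome and the share of traffic left on the new version.
    """
    live_percent = 0
    for percent in steps:
        live_percent = percent
        if error_rate_at(percent) > max_error_rate:
            # Send all traffic back to the previous version.
            return ("rolled_back", 0)
    return ("promoted", live_percent)


if __name__ == "__main__":
    healthy = lambda pct: 0.002
    degrades_under_load = lambda pct: 0.002 if pct < 50 else 0.05

    print(run_canary([10, 50, 100], healthy))              # ('promoted', 100)
    print(run_canary([10, 50, 100], degrades_under_load))  # ('rolled_back', 0)
```

The second run shows why gradual shifting matters: the fault only appears at 50% traffic, so half the users never see the bad version.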

Step 5: Implement observability and monitoring

For a fintech platform, maintaining real-time visibility into operations is critical. SMBs must detect and respond to errors, latency issues, or suspicious activity immediately to protect both users and business reputation.

Key practices include:

- Transaction and service monitoring: Track payment processing errors, service response times, and API failures to ensure smooth operations.

- Alerts and notifications: Configure alerts for failed jobs, unusual transaction patterns, or potential fraud, enabling rapid response.

AWS services for observability and monitoring:

- Amazon CloudWatch: Collects and monitors metrics, logs, and events across microservices, enabling real-time visibility, custom dashboards, and automated alarms.

- AWS X-Ray: Provides distributed tracing to visualize request flows, identify latency hotspots, and diagnose errors across interconnected services.

- Amazon SNS: Delivers instant notifications or alerts to operations teams when thresholds or anomalies are detected, ensuring rapid incident response.

Implementing comprehensive observability with AWS tools enables SMBs to maintain high reliability, detect problems early, and ensure a secure, seamless fintech experience for users.
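Alerting rules are easier to reason about with a small model. The sketch below approximates an "M out of N datapoints" alarm, the evaluation style CloudWatch alarms offer. It is a simplified stand-in rather than CloudWatch's exact behavior, and the function name, default values, and latency figures are assumptions.

```python
def alarm_state(
    datapoints: list[float],
    threshold: float,
    evaluation_periods: int = 5,
    datapoints_to_alarm: int = 3,
) -> str:
    """Evaluate the most recent periods against a threshold.

    Returns "ALARM" when at least `datapoints_to_alarm` of the last
    `evaluation_periods` values exceed the threshold.
    """
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


if __name__ == "__main__":
    # Hypothetical p95 payment latency per minute, in milliseconds.
    latency_ms = [180, 210, 950, 190, 870, 910, 200]
    print(alarm_state(latency_ms, threshold=800))      # ALARM
    print(alarm_state(latency_ms[:4], threshold=800))  # INSUFFICIENT_DATA
```

Requiring several breaching datapoints rather than one keeps a single slow request from paging the operations team.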

Step 6: Ensure security and compliance

Safeguarding sensitive financial data is critical. Security isn’t optional; it is foundational to trust and regulatory compliance.

Key practices include:

- Data protection: Encrypt all user data both at rest and in transit to prevent unauthorized access.

- Access control: Apply least privilege policies so that users and services can only access what they absolutely need.

- Continuous auditing: Regularly audit configurations and monitor compliance with financial regulations like PCI DSS and GDPR.

AWS tools for security and compliance:

- AWS Identity and Access Management (IAM): By enforcing the principle of least privilege, IAM ensures each user or service has only the access needed to perform its tasks, reducing the risk of unauthorized access.

- AWS Key Management Service (KMS): KMS creates and controls the encryption keys used to protect sensitive data at rest across databases, S3 buckets, and microservices. Combined with TLS for data in transit, this supports regulatory compliance and data confidentiality.

- AWS Shield & AWS WAF: AWS Shield provides managed protection against DDoS attacks, while AWS WAF allows SMBs to define custom rules to block malicious traffic at the application layer. Together, they safeguard fintech applications from external threats without impacting performance.

- AWS Config & Security Hub: AWS Config tracks configuration changes, while Security Hub aggregates alerts and provides actionable insights, helping SMBs maintain security posture and meet audit requirements efficiently.

These tools ensure that the cloud-native fintech applications are secure, compliant, and resilient without excessive manual overhead.
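Least privilege is easiest to see in a policy document. The sketch below builds an IAM policy that lets a payment service send messages to a single queue, then runs a simple check for wildcard grants. The policy structure follows the IAM JSON policy format, but the ARN (account ID, region, queue name) is a placeholder and `overly_broad_statements` is a hypothetical helper, not an AWS tool.

```python
import json

# Placeholder ARN: the account ID, region, and queue name are made up.
PAYMENT_QUEUE_ARN = "arn:aws:sqs:us-east-1:111122223333:payment-events"

payment_service_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublishPaymentEvents",
            "Effect": "Allow",
            "Action": ["sqs:SendMessage"],
            "Resource": PAYMENT_QUEUE_ARN,
        }
    ],
}


def overly_broad_statements(policy: dict) -> list[str]:
    """Return Sids of Allow statements with wildcard actions or resources."""
    findings = []
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action or "*" in resources:
            findings.append(stmt.get("Sid", "<no Sid>"))
    return findings


if __name__ == "__main__":
    print(json.dumps(payment_service_policy, indent=2))
    print(overly_broad_statements(payment_service_policy))  # []
```

A check like this could run in the build pipeline so that a policy granting `sqs:*` or `Resource: "*"` is caught before it reaches production.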

Step 7: Scale and optimize

After deploying the fintech application, SMBs need to ensure it can handle growth, spikes in traffic, and changing workloads efficiently.

Scaling and optimization involve both performance management and cost control:

- Dynamic scaling: Automatically adjust compute resources for microservices like payment processing or analytics based on real-time demand. This ensures the app remains responsive even during peak transaction periods.

- Cost optimization: Mix on-demand and spot instances for non-critical workloads, such as analytics or batch processing, to reduce operational costs without impacting performance.

- Resource efficiency: Continuously monitor usage patterns and optimize infrastructure to avoid overprovisioning.

AWS services for scaling and optimization:

- Auto Scaling Groups: Dynamically adjust Amazon EC2 capacity based on demand, ensuring applications remain performant while minimizing costs.

- ECS/EKS Service Auto Scaling: Scales containerized workloads automatically using service-level metrics, maintaining reliability during traffic spikes or drops.

- AWS Lambda: Executes event-driven functions that scale seamlessly with workload volume, eliminating the need for manual provisioning.

- AWS Cost Explorer & Trusted Advisor: Provide visibility into usage patterns, cost forecasting, and actionable recommendations to optimize performance and reduce unnecessary spend.

Implementing these AWS tools allows SMBs to maintain high availability, ensure consistent user experience, and control costs while growing their cloud-native fintech application efficiently.
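The arithmetic behind metric-based scaling can be sketched with a simple proportional rule. This has the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler. AWS target tracking policies are not guaranteed to follow it exactly, so treat it as a mental model; the function name, capacity bounds, and CPU figures are assumptions.

```python
import math


def desired_capacity(
    current: int,
    metric_value: float,
    target_value: float,
    min_capacity: int,
    max_capacity: int,
) -> int:
    """Scale capacity in proportion to how far the metric is from target."""
    raw = math.ceil(current * metric_value / target_value)
    return max(min_capacity, min(max_capacity, raw))


if __name__ == "__main__":
    # Four payment-service tasks at 90% CPU against a 60% target.
    print(desired_capacity(4, 90, 60, min_capacity=2, max_capacity=20))  # 6
    # Quiet period: 15% CPU, but never drop below the minimum of 2.
    print(desired_capacity(4, 15, 60, min_capacity=2, max_capacity=20))  # 2
```

The minimum and maximum bounds matter as much as the target: the floor protects availability during quiet periods, and the ceiling caps spend during unexpected spikes.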

Step 8: Continuous improvement

Building a cloud-native fintech application is not a one-time effort. Continuous improvement ensures the platform evolves with customer needs, regulatory changes, and technological advances. SMBs can adopt iterative enhancements while keeping the app reliable and secure.

Key practices for continuous improvement:

- AI and machine learning: Integrate predictive features like fraud detection or credit risk scoring using Amazon SageMaker, enabling smarter, automated decision-making.

- Workflow automation: Streamline repetitive tasks such as payment reconciliation, notifications, or report generation with AWS Step Functions to reduce manual errors and operational overhead.

- Data-driven insights: Build dynamic dashboards and analytics reports with Amazon QuickSight to visualize user behavior, transaction trends, and operational KPIs, guiding strategic decisions.

These AWS cloud-native services allow SMBs to continuously enhance their cloud application, stay competitive, and deliver a better customer experience without the friction of traditional software update cycles.
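A workflow such as nightly payment reconciliation can be described declaratively. The sketch below builds a two-step state machine definition in Amazon States Language (the JSON format Step Functions uses), with retries on the first task. The state names and Lambda ARNs are placeholders invented for this example, and `missing_targets` is a hypothetical sanity check rather than an AWS tool.

```python
import json

# Placeholder ARNs: account ID, region, and function names are made up.
RECONCILE_FN = "arn:aws:lambda:us-east-1:111122223333:function:reconcile-payments"
REPORT_FN = "arn:aws:lambda:us-east-1:111122223333:function:send-report"

state_machine = {
    "Comment": "Nightly payment reconciliation (illustrative)",
    "StartAt": "ReconcilePayments",
    "States": {
        "ReconcilePayments": {
            "Type": "Task",
            "Resource": RECONCILE_FN,
            # Retry transient failures with exponential backoff.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 5,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            "Next": "SendReport",
        },
        "SendReport": {"Type": "Task", "Resource": REPORT_FN, "End": True},
    },
}


def missing_targets(definition: dict) -> list[str]:
    """Return state names that are referenced but not defined."""
    states = definition["States"]
    referenced = {definition["StartAt"]}
    referenced.update(s["Next"] for s in states.values() if "Next" in s)
    return sorted(referenced - set(states))


if __name__ == "__main__":
    assert missing_targets(state_machine) == []
    print(json.dumps(state_machine, indent=2))
```

Declaring retries in the workflow definition keeps error handling out of the individual functions, which is part of what reduces manual intervention.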

Outcomes for the SMB:

- New payment features are deployed weekly instead of monthly.

- Transactions are processed reliably even during spikes.

- Data security and compliance are built in from day one.

- Operational costs are optimized, and infrastructure scales automatically.

Reaching such outcomes is far more achievable for SMBs in regulated sectors with the guidance of a specialized AWS partner like Cloudtech. Beyond technical know-how, a partner helps ensure that security and compliance are integrated from the start, architectures scale predictably with demand, and operational costs stay optimized.

How does Cloudtech help SMBs build and scale cloud-native applications?

Developing cloud applications is about designing systems that are scalable, secure, and built to evolve with business needs. This ensures faster releases, reduced operational overhead, and applications that perform reliably at scale.

Cloudtech’s strength lies in its SMB-first approach. It helps teams design apps that balance lean budgets with high performance, automates deployment pipelines to accelerate time-to-market, and embeds compliance and resilience into the application lifecycle.

Beyond launch, Cloudtech provides ongoing support so SMBs can continuously innovate and compete effectively with larger players.

Key Cloudtech services for cloud application development:

- Application assessment & strategy: Cloudtech reviews existing applications and identifies opportunities to modernize with AWS-native services, ensuring architectures align with business goals.

- Serverless & microservices design: Using AWS Lambda, ECS/EKS, and event-driven patterns, Cloudtech builds applications that scale seamlessly while reducing infrastructure overhead.

- CI/CD automation: With tools like AWS CodePipeline and CodeBuild, Cloudtech sets up automated pipelines for faster, more reliable deployments.

- Integrated security & compliance: From IAM best practices to data encryption, Cloudtech embeds security controls and regulatory compliance directly into applications.

- Resilience & performance optimization: Applications are architected with multi-AZ redundancy, monitoring, and auto-scaling to ensure high availability and smooth user experiences.

Through this approach, SMBs gain the ability to launch new features quickly, scale confidently, and deliver secure, high-performing applications, all while keeping costs under control and staying focused on growth.

Wrapping up

With the right architecture, automation, and security, applications become more than tools; they become drivers of growth, resilience, and customer satisfaction. Partnering with an AWS expert like Cloudtech helps SMBs bypass the steep learning curve, avoid costly missteps, and accelerate their journey from idea to production-ready applications.

By combining AWS best practices with an SMB-first mindset, Cloudtech ensures that every application is designed to scale, adapt, and deliver lasting value.

Now is the time to modernize your applications and future-proof your business, and Cloudtech can help you get there.

FAQs

1. How long does it typically take to build a cloud-native application for an SMB?

Timelines vary depending on complexity, but many SMBs see a minimum viable product (MVP) within weeks. Cloud-native services and serverless components accelerate delivery compared to traditional development.

2. Can cloud applications integrate with existing legacy systems?

Yes. Cloud-native apps can be designed with APIs and event-driven architectures that connect seamlessly to on-prem or older systems, enabling gradual modernization instead of a disruptive overhaul.

3. What security measures are built into cloud application development?

Cloud apps are designed with encryption, identity and access management (IAM), compliance controls, and continuous monitoring baked in from the start, ensuring protection of sensitive customer and financial data.

4. How do SMBs control costs when developing on the cloud?

Using a pay-as-you-go model, SMBs only pay for the resources they use. Cost optimization tools like AWS Trusted Advisor and Cost Explorer help keep budgets on track while avoiding over-provisioning.

5. Do SMBs need in-house cloud expertise to maintain cloud applications?

Not necessarily. With managed services, automated scaling, and the support of an AWS partner like Cloudtech, SMBs can run and evolve their applications without needing large in-house cloud teams.

Is cloud analytics a smarter investment than legacy BI?

For decades, business intelligence (BI) meant heavy servers, long data refresh cycles, and reports that were outdated by the time they reached decision-makers. But today, cloud analytics is changing the game.

Unlike legacy BI locked to hardware limits and delayed refreshes, cloud analytics delivers real-time insights with elastic computing power. It integrates data from CRMs, ERPs, and even IoT devices into one view without costly servers or complex maintenance. SMBs pay only for what they use while gaining faster decisions, smarter forecasting, and enterprise-grade security.

Simply put, cloud analytics replaces static reports with live intelligence that drives agility and growth. This blog explores how it differs from legacy BI, the key benefits it brings to SMBs, and why it’s emerging as the smarter investment for long-term ROI.

Key takeaways:

- Faster insights: Cloud analytics delivers real-time dashboards and automated pipelines, enabling SMBs to act on data instantly.

- Lower total cost of ownership: Pay-as-you-go pricing reduces capital expenses and IT overhead compared to legacy BI.

- Elastic scalability: Cloud analytics adapts seamlessly to growing datasets, users, and workloads without costly infrastructure upgrades.

- AI-driven intelligence: Integrated machine learning and predictive analytics help SMBs anticipate trends, detect anomalies, and optimize decisions.

- Enhanced collaboration: Centralized, secure dashboards allow distributed teams to access the same live data, ensuring consistent, data-driven decision-making.

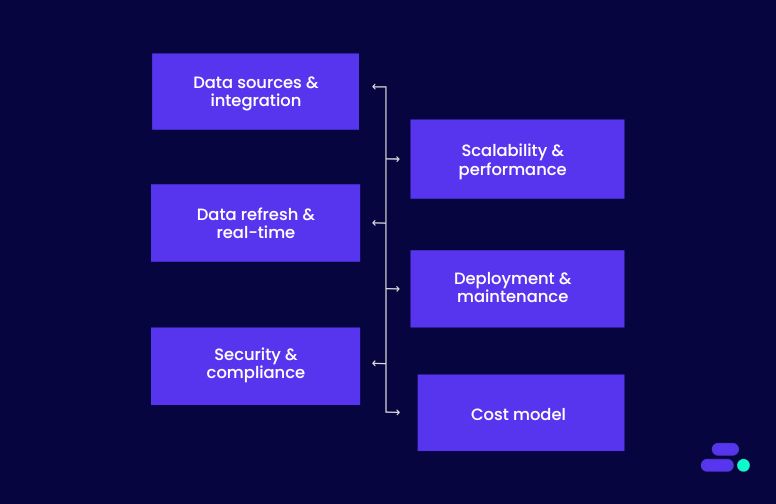

How is cloud analytics different from legacy BI?

Traditional business intelligence (BI) was designed for a world where data lived primarily on-premises. Reports were generated through batch processes, relying on structured data pulled from relational databases.

While this approach worked in the past, it now struggles under the weight of modern requirements, including massive data volumes, real-time decision-making, and seamless integration with diverse data sources. Cloud analytics reshapes this landscape by re-architecting how data is collected, stored, processed, and visualized.

When comparing legacy BI with cloud analytics, the differences become clear across integration, scalability, performance, and cost.

Cloud analytics fundamentally redefines BI by making it scalable, real-time, and cost-efficient, while legacy BI remains rigid, hardware-bound, and slow to adapt.

In what ways does cloud analytics outperform traditional BI?

Cloud analytics empowers SMBs to compete at enterprise scale by combining real-time data pipelines, serverless processing, and elastic infrastructure. Instead of waiting on static reports, decision-makers can query live data, run advanced analytics, and visualize results instantly.

On AWS, services like Amazon Redshift, Athena, Glue, and QuickSight work together to streamline ingestion, transformation, and reporting, removing the bottlenecks that once limited smaller organizations. The result is faster insights, lower costs, and the ability to pivot strategies based on what the data says in the moment.

There are multiple ways in which cloud analytics creates measurable advantages over traditional BI for SMBs:

1. Lower total cost of ownership

Every dollar spent on technology needs to justify itself in business outcomes. Traditional BI tools come with a hidden cost burden, including expensive servers, perpetual licenses, and high IT overhead. Cloud analytics shifts this model to a lean, predictable framework, making advanced insights accessible without draining budgets.

How cloud analytics reduces TCO compared to legacy BI:

- No upfront CapEx: Legacy BI often requires six-figure investments in physical servers, data storage systems, and proprietary software. Cloud analytics removes this capital expense entirely, replacing it with subscription or usage-based pricing.

- Reduced IT labor costs: On-prem BI environments require teams for system monitoring, upgrades, and troubleshooting. With cloud analytics, infrastructure maintenance is handled by providers like AWS, allowing SMB IT teams to focus on strategy, not upkeep.

- Pay for what is used: Traditional BI locks organizations into fixed hardware and licensing costs, regardless of usage. Cloud analytics services charge based on actual consumption, ensuring costs scale proportionally with business activity.

Transitioning from traditional BI to lower-TCO cloud analytics on AWS:

- Replace hardware with cloud warehousing: Move from costly, license-heavy databases to Amazon Redshift, which delivers fast analytics with no physical infrastructure.

- Cut ETL tooling costs: Retire expensive, on-prem data prep tools by adopting AWS Glue, which runs ETL jobs serverlessly and only incurs cost while jobs are running.

- Avoid dashboard licensing fees: Replace per-user BI tools with Amazon QuickSight, which charges per session, not per seat, reducing costs for teams with variable usage.

Modernizing BI on AWS gives access to enterprise-grade analytics without the financial baggage of legacy infrastructure, providing true cost efficiency that legacy BI simply cannot match.
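The cost argument comes down to comparing a fixed monthly cost with a usage-based one. The sketch below works through that comparison. Every figure in it (hardware cost, amortization period, operating cost, unit price) is a made-up assumption for illustration, not AWS pricing.

```python
def monthly_cost_fixed(capex: float, amortization_months: int, monthly_opex: float) -> float:
    """Monthly cost of owned infrastructure: amortized hardware plus upkeep."""
    return capex / amortization_months + monthly_opex


def monthly_cost_usage(units: float, price_per_unit: float) -> float:
    """Monthly cost under usage-based pricing."""
    return units * price_per_unit


def break_even_units(capex: float, amortization_months: int,
                     monthly_opex: float, price_per_unit: float) -> float:
    """Usage level at which both models cost the same per month."""
    return monthly_cost_fixed(capex, amortization_months, monthly_opex) / price_per_unit


if __name__ == "__main__":
    # Hypothetical: $108,000 of hardware over 36 months, $2,000/month upkeep,
    # versus $2.50 per unit of analytics usage.
    print(monthly_cost_fixed(108_000, 36, 2_000))      # 5000.0
    print(monthly_cost_usage(800, 2.50))               # 2000.0
    print(break_even_units(108_000, 36, 2_000, 2.50))  # 2000.0
```

Under these assumptions, usage-based pricing is cheaper for any month below 2,000 units, and the fixed cost is paid whether or not the capacity is used.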

2. Faster time to insights

In fast-moving markets, decisions delayed are opportunities lost. Legacy BI systems require months of setup, manual data integration, and batch-based reporting that leaves leaders working with stale insights.

Cloud analytics flips this model by enabling rapid deployment, real-time dashboards, and automated pipelines, giving SMBs intelligence at the speed of business.

How cloud analytics accelerates time to insights compared to legacy BI:

- Rapid deployment: Traditional BI requires lengthy hardware provisioning and software configuration. Cloud analytics launches in days or weeks, with services ready to scale instantly.

- Real-time processing: Legacy BI refreshes data in overnight or weekly cycles. Cloud analytics ingests and analyzes streaming data continuously, powering live dashboards.

- Automated workflows: Manual ETL processes in legacy BI slow reporting cycles. With cloud analytics, automation replaces human intervention, reducing delays and errors.

Transitioning from traditional BI to faster insights on AWS:

- Enable real-time ingestion: Replace batch data transfers with Amazon Kinesis for streaming data pipelines that process events as they happen.

- Automate data prep: Use AWS Glue to schedule or trigger ETL jobs that run serverlessly, eliminating bottlenecks from manual processing.

- Deliver instant dashboards: Deploy Amazon QuickSight for interactive dashboards that auto-refresh with live data sources, ensuring decision-makers always see the latest view.

Cloud analytics allows SMBs to shorten the gap between data collection and decision-making, moving from hindsight to real-time foresight that legacy BI simply cannot deliver.
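The difference between batch and streaming processing shows up in when totals become available. The sketch below keeps per-minute totals that update as each event arrives, instead of waiting for a nightly job. It is an in-memory stand-in for what a streaming pipeline would do; the class name, window size, and sample events are assumptions.

```python
from collections import defaultdict


class WindowedTotals:
    """Running totals per fixed (tumbling) time window."""

    def __init__(self, window_seconds: int = 60):
        self.window_seconds = window_seconds
        self.totals = defaultdict(float)

    def add(self, timestamp: float, amount: float) -> tuple[int, float]:
        """Record one event; return its window start and the updated total."""
        start = int(timestamp // self.window_seconds) * self.window_seconds
        self.totals[start] += amount
        return start, self.totals[start]


if __name__ == "__main__":
    sales = WindowedTotals(window_seconds=60)
    # (seconds since start, order amount); values are illustrative.
    for ts, amount in [(0, 10.0), (59, 5.0), (60, 7.5), (125, 2.5)]:
        window, running_total = sales.add(ts, amount)
        print(window, running_total)
    print(dict(sales.totals))  # {0: 15.0, 60: 7.5, 120: 2.5}
```

A dashboard reading these totals sees the current minute's figure as soon as each order lands, which is the behavior the live dashboards described above depend on.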

3. Scalability without limits

As SMBs grow, their data and user demands can surge unpredictably. Traditional BI platforms struggle under these spikes, requiring costly hardware upgrades or capacity planning that often leads to wasted resources.

Cloud analytics eliminates these constraints by offering elastic scaling, allowing organizations to handle increasing data volumes, users, and workloads seamlessly.

How cloud analytics delivers scalable performance compared to legacy BI:

- Elastic compute and storage: Legacy BI needs new servers or storage to scale. Cloud analytics automatically expands capacity to meet demand without manual intervention.

- Support for more users and workloads: Adding new teams or applications to legacy BI often slows the system. Cloud analytics can add capacity for additional users and concurrent queries, keeping performance impact minimal.

- Instant onboarding of data sources: Legacy BI integrations are slow and resource-intensive. Cloud analytics can quickly ingest and process new structured or unstructured data from multiple sources.

Transitioning from traditional BI to scalable cloud analytics on AWS:

- Adopt Amazon Redshift: Use a fully managed data warehouse. With Amazon Redshift Serverless, compute capacity adjusts automatically to query volume, while managed storage grows with the dataset.

- Utilize Amazon Athena: Query large datasets in Amazon S3 without provisioning infrastructure, paying only for the data each query scans.

- Integrate with AWS Glue: Seamlessly prepare and catalog new data sources, allowing pipelines to scale without downtime or complex reconfiguration.
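
To make the Athena step more concrete, here is a small sketch of submitting a SQL query over data in Amazon S3 with boto3. The database name `sales_db`, the `orders` table, and the results bucket are hypothetical placeholders, and the submit step assumes boto3 and AWS credentials are configured.

```python
def build_athena_query(database: str, sql: str, output_location: str) -> dict:
    """Assemble the keyword arguments for Athena's start_query_execution call."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_query(params: dict) -> str:
    """Submit the query and return its execution ID (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without the SDK

    athena = boto3.client("athena")
    response = athena.start_query_execution(**params)
    return response["QueryExecutionId"]


# Hypothetical database, table, and results bucket, for illustration only.
params = build_athena_query(
    database="sales_db",
    sql="SELECT region, SUM(amount) AS revenue FROM orders GROUP BY region",
    output_location="s3://example-athena-results/queries/",
)
```

Athena runs queries asynchronously, so a real pipeline would poll the returned execution ID until the query finishes before reading results.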

AWS cloud analytics gives the flexibility to grow without worrying about infrastructure limits, ensuring insights remain fast and reliable regardless of business scale.

4. Smarter, AI-powered capabilities

Traditional BI focuses on descriptive reporting, such as what happened in the past. Cloud analytics, however, integrates AI and machine learning to deliver predictive insights, detect anomalies, and surface trends automatically. This enables SMBs to move from reactive decision-making to proactive strategy, uncovering opportunities and risks before they escalate.

How cloud analytics leverages AI better than legacy BI:

- Predictive insights: Legacy BI relies on historical reports; cloud analytics uses services like Amazon SageMaker to forecast sales, demand, or operational trends.

- Anomaly detection: Cloud platforms automatically flag outliers or unusual patterns in real time, reducing the risk of overlooked issues.

- Automated recommendations: AI models embedded in analytics pipelines can suggest actions, such as optimizing inventory or marketing campaigns, without manual analysis.

Transitioning from traditional BI to AI-powered cloud analytics on AWS:

- Train and deploy models with Amazon SageMaker: Use historical and live data to build machine learning models that enhance reporting with predictions.

- Integrate AI into dashboards: Connect Amazon SageMaker insights to Amazon QuickSight dashboards, providing visualized, actionable intelligence.

- Automate alerts and workflows: Combine predictions with AWS Lambda and AWS Step Functions to trigger automated responses or notifications for anomalies.
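
The alerting step can be sketched as a small AWS Lambda-style handler. In this illustration a simple z-score check stands in for a trained Amazon SageMaker model, and the event shape (`metric`, `history`, `latest`) is an assumption made for the example rather than any AWS-defined format.

```python
from statistics import mean, stdev


def is_anomaly(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag the latest value if it is more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]  # any change from a constant series is unusual
    return abs(latest - mean(history)) / spread > threshold


def lambda_handler(event: dict, context=None) -> dict:
    """Lambda-style entry point: reports whether an alert should be raised."""
    flagged = is_anomaly(event["history"], event["latest"])
    return {"metric": event["metric"], "alert": flagged}


# Hypothetical input: five normal days followed by a sudden spike.
result = lambda_handler(
    {"metric": "daily_orders", "history": [100, 102, 98, 101, 99], "latest": 160}
)
```

In a fuller workflow, a positive `alert` could start an AWS Step Functions execution or send a notification, as the list above describes.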

Adopting AI-enhanced cloud analytics allows SMBs to anticipate trends, act on insights faster, and gain a competitive edge that legacy BI systems cannot deliver.

5. Stronger collaboration and accessibility

In today’s distributed work environment, decision-making requires real-time access to the same data across teams and locations. Traditional BI often delivers static reports that are shared manually, creating delays and inconsistencies. Cloud analytics ensures that all stakeholders, from finance to operations, work from a single source of truth, improving transparency and enabling coordinated, data-driven decisions.

How cloud analytics enhances collaboration compared to legacy BI:

- Shared, live dashboards: Legacy BI reports are static and emailed, causing version control issues. Cloud analytics platforms like Amazon QuickSight provide live, interactive dashboards accessible to all authorized users.

- Role-based access: Teams can see relevant data without compromising security. AWS tools such as IAM and Lake Formation ensure proper permissions while maintaining centralized governance.

- Seamless cross-department access: Cloud analytics allows multiple teams to query, visualize, and act on the same datasets simultaneously, eliminating silos and improving consistency.

Transitioning from traditional BI to collaborative cloud analytics on AWS:

- Centralize datasets in Amazon S3 and Amazon Redshift: Create a single source of truth that multiple teams can access in real time.

- Use Amazon QuickSight for interactive dashboards: Deploy dashboards that update automatically and support multi-user collaboration across locations.

- Enforce governance and security: Apply IAM policies and AWS Lake Formation rules to control access, ensuring compliance while enabling collaboration.
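
As one example of what role-based access can look like in practice, the sketch below builds an IAM policy document that gives a team read-only access to its own folder in a shared S3 data lake. The bucket name `example-analytics-lake` and the `finance` prefix are hypothetical, and a real deployment would likely pair a policy like this with AWS Lake Formation permissions.

```python
import json


def read_only_dataset_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document granting read-only access to one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListDatasetPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Restrict listing to the team's own prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadDatasetObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


# Hypothetical bucket and team prefix, for illustration only.
policy = read_only_dataset_policy("example-analytics-lake", "finance")
policy_json = json.dumps(policy, indent=2)  # ready to attach to a role or group
```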

Moving to cloud analytics empowers distributed teams to make consistent, transparent, and faster data-driven decisions, something legacy BI struggles to achieve.

Cloud infrastructure offers SMBs the scalability, resilience, and agility that on-premises systems can’t, but success requires strategy. Cloudtech, an AWS Advanced Tier Partner, pairs certified expertise with SMB-focused solutions to deliver secure, cost-efficient, and high-performing cloud analytics.

How does Cloudtech help SMBs realize the benefits of cloud analytics?

Adopting cloud analytics isn’t just about moving dashboards online. It’s about transforming how SMBs collect, process, and act on data. Partnering with an AWS expert like Cloudtech provides certified guidance, proven frameworks, and cost-optimized strategies that align analytics infrastructure with business goals, ensuring faster insights, lower TCO, and scalable performance.

Cloudtech’s SMB-first approach focuses on solutions that fit lean budgets while enabling growth. It automates pipelines, integrates AI-powered analytics, and embeds security and compliance from the start, enabling businesses to turn data into actionable intelligence efficiently.

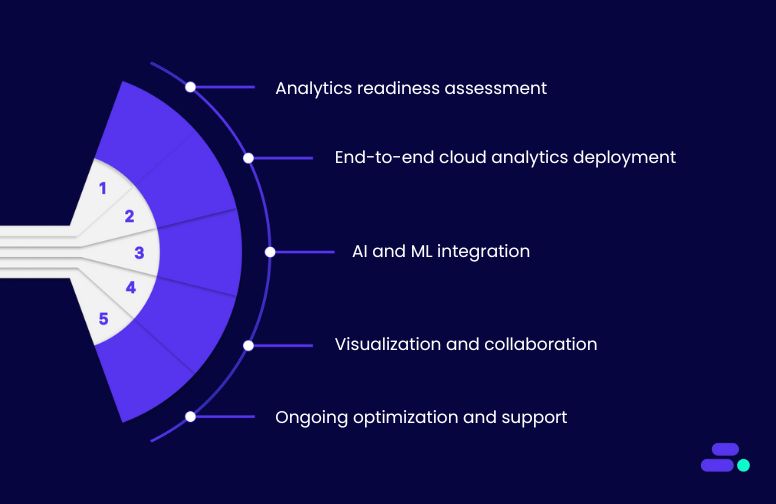

Key Cloudtech services for cloud analytics:

- Analytics readiness assessment: Cloudtech evaluates current BI systems, identifies bottlenecks, outdated workflows, and data silos, and recommends optimized cloud-based architectures.

- End-to-end cloud analytics deployment: Using AWS tools such as Amazon Redshift, Amazon Athena, and AWS Glue, Cloudtech sets up scalable, high-performance data pipelines and storage.

- AI and ML integration: SMBs can utilize Amazon SageMaker for predictive analytics, anomaly detection, and trend forecasting, delivering insights beyond traditional reporting.

- Visualization and collaboration: Interactive dashboards built on Amazon QuickSight provide real-time insights accessible to multiple teams, enabling data-driven decision-making across the organization.

- Ongoing optimization and support: Cloudtech continuously monitors pipelines, refines AI models, and ensures costs and performance remain optimized, allowing SMBs to focus on growth rather than infrastructure.

With these services, SMBs gain a cloud analytics environment that is scalable, intelligent, and cost-efficient.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Modern SMBs need insights that are fast, accurate, and accessible across teams, which are capabilities legacy BI struggles to provide. In contrast, cloud analytics delivers real-time intelligence, scalable infrastructure, and AI-powered tools that drive smarter decision-making and lower total cost of ownership.

Partnering with an AWS expert like Cloudtech ensures analytics adoption is strategic, not just a migration. Cloudtech helps SMBs implement cloud-native data pipelines, predictive models, and interactive dashboards that are secure, compliant, and tailored to business goals. The result is a cloud analytics environment that turns data into actionable insights, accelerates decisions, and provides a competitive edge.

Connect with Cloudtech today and achieve smarter, faster, and more cost-efficient analytics!

FAQs

1. What types of SMB data can be analyzed with cloud analytics?

Cloud analytics can process structured data from ERP and CRM systems or CSV exports, semi-structured data from JSON files, and unstructured data like emails, social media feeds, and IoT device streams. This versatility allows SMBs to generate comprehensive insights from all relevant sources.

2. How does cloud analytics support real-time decision-making?

Unlike traditional BI, cloud analytics can ingest streaming data from multiple sources and update dashboards continuously. SMBs can monitor sales, inventory, or operational metrics in near real time, enabling faster, data-driven responses.

3. Can SMBs start small with cloud analytics and scale later?

Yes. Cloud analytics platforms on AWS allow SMBs to pay only for the storage and compute they use. Teams can start with core datasets and dashboards, then scale to more users, workloads, or AI-driven analytics as business needs grow.

4. How secure is cloud analytics for sensitive SMB data?

Cloud analytics leverages encryption at rest and in transit, identity-based access controls (IAM), and audit logging. With AWS services like Lake Formation and CloudTrail, SMBs can maintain compliance with industry regulations while keeping data secure.

5. How does AI enhance cloud analytics compared to legacy BI?

AI and machine learning enable predictive modeling, anomaly detection, and automated recommendations. SMBs can anticipate trends, identify risks, and uncover growth opportunities without relying solely on historical reporting.

Cloud technology for business: Why SMBs can’t afford to stay on-prem

Running IT infrastructure on-premises is costly and unreliable, especially in highly regulated sectors like healthcare, where providers must keep patient data secure, compliant, and always accessible. By shifting to the cloud, SMBs gain scalability, resilience, and built-in compliance that outdated servers can’t provide.

Consider a small clinic relying on on-prem servers for electronic health records. When systems crash, staff can’t access files, appointments are delayed, and compliance risks grow. But with the cloud, records remain secure and available anytime, downtime is minimized, and regulatory controls are streamlined, freeing staff to focus on patient care instead of server maintenance.

This article explores why SMBs, especially in critical sectors like healthcare, can’t afford to stay on-prem and how cloud technology enables efficiency, security, and growth.

Key takeaways:

- On-premises is costly and rigid: High capital expenses and slow scalability limit SMB growth.

- Cloud unlocks flexibility: AWS delivers pay-as-you-go scalability that matches evolving business needs.

- Security and compliance are stronger in the cloud: Continuous monitoring and built-in governance reduce risk.

- Resilience is built-in: Multi-AZ architecture and automated backups ensure uptime and fast recovery.

- Cloudtech makes the shift seamless: With AWS expertise and an SMB-first approach, Cloudtech ensures adoption is efficient, secure, and ROI-driven.

Why is on-prem no longer suitable for SMBs?

On-premises infrastructure may have once provided SMBs with control and predictability, but in 2025, it is increasingly becoming a liability. Maintaining physical servers requires capital-intensive investments, constant upkeep, and dedicated expertise.

These are resources that most SMBs simply don’t have. Beyond the cost, the inflexibility of on-prem systems leaves smaller businesses struggling to keep pace with digital-first competitors.

The challenges of staying on-prem:

- High operational costs: Hardware procurement, data center power, cooling, and maintenance quickly eat into tight budgets, with no pay-as-you-go flexibility.

- Limited scalability: Expanding capacity often takes weeks of procurement and configuration, while underutilized resources still drain expenses.

- Security gaps: With threats evolving rapidly, small IT teams often can’t keep up with regular patching, monitoring, and compliance requirements.

- Downtime risks: Hardware failures, outages, or local disasters can halt operations, and recovery from on-prem backups may take days.

- Slow innovation: Reliance on legacy infrastructure makes it harder to adopt modern tools like AI, analytics, or automation that competitors are leveraging.

In short, on-prem environments are no longer a competitive advantage for SMBs, but a bottleneck. The businesses that continue to rely solely on them risk higher costs, weaker security, and slower growth in an increasingly cloud-driven world.

5 ways the cloud outperforms on-prem for modern SMBs

The debate between cloud and on-prem is no longer about ‘if’ but ‘when.’ Traditional infrastructure locks businesses into rigid, costly, and resource-heavy models that can’t keep up with modern demands. Cloud technology, by contrast, gives SMBs the ability to operate with enterprise-grade resilience, security, and agility, without the overhead of managing physical servers.

Adopting the cloud isn’t just a technical upgrade; it’s a shift in how businesses build, scale, and compete. With on-demand resources, automated security, and seamless integrations, SMBs can focus on growth instead of maintenance. Cloud adoption also opens the door to advanced capabilities like AI, analytics, and automation that are impractical on legacy systems.

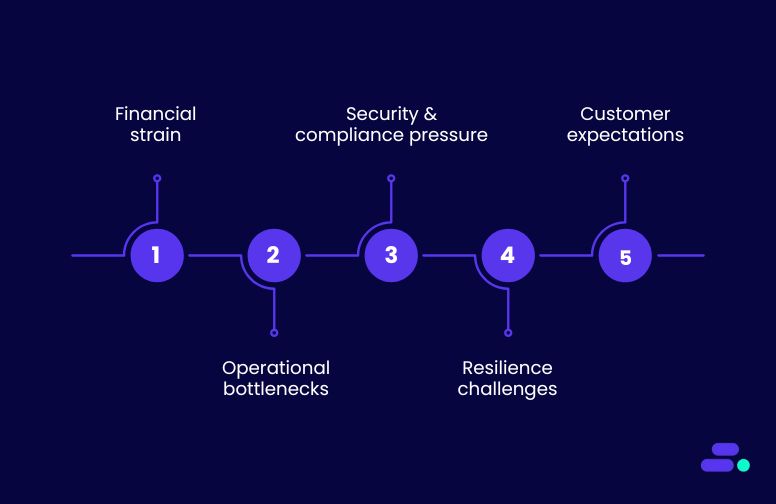

Beyond these general benefits, five specific pressures are pushing SMBs to move from on-prem infrastructure to the cloud:

1. Financial strain: “Owning” IT hardware can become a cost trap

For SMBs, on-prem infrastructure often feels like a financial anchor. Beyond the initial purchase of servers and hardware, maintaining an on-prem environment demands continuous capital outlay. Equipment refresh cycles, licensing renewals, and energy costs stack up, making it difficult for smaller businesses to predict and control IT expenses. What starts as a “one-time investment” quickly turns into an ongoing financial liability.

How the financial strain manifests:

- Capital-heavy spending: Hardware, storage arrays, backup systems, and cooling facilities require upfront investment and frequent refresh cycles.

- Unpredictable maintenance costs: Repairs, vendor support contracts, and emergency replacements consume budgets unexpectedly.

- Low ROI on idle capacity: Businesses must overprovision to prepare for peak loads, but much of that infrastructure sits underutilized during normal operations.

How AWS helps overcome this strain: AWS replaces unpredictable capital expenditures with pay-as-you-go pricing, enabling SMBs to pay only for the compute, storage, or networking resources they actually use.

Services like Amazon EC2 allow businesses to scale capacity up or down in minutes without overprovisioning. Amazon S3 eliminates expensive storage arrays by providing secure, virtually unlimited cloud storage, while AWS Cost Explorer helps track and optimize usage, ensuring spend directly aligns with business demand.

Use case example: A regional logistics SMB struggles with rising costs of maintaining on-prem servers for its shipment tracking platform. The company spends heavily on storage upgrades, cooling, and downtime recovery.

After migrating to Amazon EC2, RDS, and S3, it shifts from large upfront purchases to predictable monthly operating expenses. Idle capacity is eliminated, uptime improves, and the IT budget is freed up to fund digital initiatives like real-time tracking and customer experience improvements.

2. Operational bottlenecks: On-prem can slow SMB agility and flexibility

On-premises systems often act as roadblocks to agility. Unlike in the cloud, scaling on-prem resources is tied to physical processes: ordering, shipping, installing, and configuring hardware. These delays introduce downtime, stall projects, and limit an SMB’s ability to respond quickly to market demands. In industries where speed translates directly to competitiveness, the operational drag of on-prem infrastructure becomes a serious liability.

How the bottlenecks manifest:

- Slow scaling cycles: Expanding capacity requires hardware procurement, which can take weeks or months, leading to stalled projects.

- Unavoidable downtime: System upgrades, storage expansions, and migrations often require maintenance windows that disrupt business operations.

- Innovation delays: Developers and business teams must wait for infrastructure availability, preventing quick experimentation or deployment of new solutions.

How AWS removes these bottlenecks: With AWS, scaling is elastic and near-instant. Amazon EC2 Auto Scaling provisions new compute capacity automatically as workloads grow, while Amazon S3 provides virtually unlimited storage without manual intervention.

AWS Lambda allows teams to run code serverlessly without worrying about infrastructure provisioning at all. This means SMBs can launch pilots, expand workloads, and pivot strategies in days, not months.
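
To show what elastic scaling looks like as configuration rather than hardware procurement, here is a minimal sketch of a target-tracking policy for Amazon EC2 Auto Scaling, built for boto3's `put_scaling_policy` call. The group name `web-tier-asg` and the 50% CPU target are assumptions chosen for illustration, not recommendations.

```python
def target_tracking_policy(group_name: str, target_cpu_percent: float) -> dict:
    """Arguments for put_scaling_policy: keep average CPU near the target value."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": target_cpu_percent,
        },
    }


def apply_policy(params: dict) -> dict:
    """Attach the policy to an existing Auto Scaling group (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without the SDK

    autoscaling = boto3.client("autoscaling")
    return autoscaling.put_scaling_policy(**params)


# Hypothetical group name and target, for illustration only.
policy = target_tracking_policy("web-tier-asg", 50.0)
```

With a policy like this in place, the group adds instances when average CPU rises above the target and removes them when load falls, without anyone ordering or installing hardware.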

Use case example: A healthcare SMB running an on-prem patient management system faces constant delays when expanding storage for patient records and analytics. Each upgrade involves procurement cycles and scheduled downtime, frustrating clinicians and IT alike.

After migrating to AWS using Amazon S3 for storage and EC2 Auto Scaling for compute, the company can instantly expand capacity as records grow. Downtime is eliminated, developers roll out new features faster, and the organization keeps pace with patient demands without IT bottlenecks.

3. Security and compliance pressure: On-prem can leave SMBs exposed to threats

SMBs today operate in a regulatory environment that is becoming more complex by the year: HIPAA for healthcare, PCI DSS for payments, GDPR for data protection, and other industry-specific mandates. On-premises systems often struggle to keep up because every patch, update, and compliance control has to be handled manually.

For SMBs without large security teams, this means increased risk exposure, audit failures, and potential breaches that could damage both finances and trust.

How the pressure manifests:

- Patch management gaps: Security patches for servers, databases, and firewalls often lag due to limited staff capacity, leaving vulnerabilities open.

- Audit complexity: Proving compliance requires extensive logs and reports, which on-prem systems rarely generate automatically.

- Limited expertise: SMB IT teams often lack specialized compliance and cybersecurity skills, creating blind spots that attackers can exploit.

How AWS strengthens security and compliance: AWS provides built-in security and compliance support, covering more than 100 security standards and compliance certifications, including programs relevant to HIPAA, SOC, and GDPR. Tools like AWS Config and AWS Audit Manager automate compliance tracking, while Amazon GuardDuty and AWS Security Hub provide continuous monitoring and threat detection.

Managed services like AWS Backup and IAM (Identity and Access Management) enforce policies without requiring SMBs to hire large teams of security specialists.

Use case example: A regional financial services SMB relying on on-prem servers faces constant stress preparing for audits. Their small IT team struggles to track user access logs and manually patch systems, often missing deadlines.

After migrating to AWS, they use AWS Config for continuous compliance monitoring and CloudTrail for automated audit logs. Security is no longer reactive but proactive, auditors get real-time evidence, and the firm meets regulatory requirements without expanding its IT headcount.

4. Resilience challenges: On-prem leaves SMBs vulnerable to hardware failures

Downtime is no longer just an inconvenience. It directly impacts revenue, customer trust, and business continuity. For SMBs running on-prem systems, resilience depends on physical servers, local data centers, and manual backup routines.

A single hardware failure, power outage, or natural disaster can disrupt operations for days. The cost of building redundant infrastructure across multiple sites is prohibitive, leaving many SMBs exposed to risks they can’t afford.

How the challenges manifest:

- Single point of failure: One failed server or storage device can bring operations to a standstill.

- Costly redundancy: Setting up a secondary on-prem site for disaster recovery requires major capital investment.

- Slow recovery: Manual backups and recovery processes delay restoration, risking data loss and extended downtime.

How AWS enables resilience: AWS offers multi-AZ (Availability Zone) and multi-region replication, ensuring workloads remain available even if one site fails. Services like Amazon S3 with cross-region replication and AWS Backup provide secure, automated recovery options.

With AWS Elastic Disaster Recovery (AWS DRS), SMBs can fail over in minutes without building secondary data centers, making enterprise-grade resilience accessible at SMB budgets.
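
For a sense of how little setup cross-region replication needs compared with a second data center, the sketch below builds a replication configuration for boto3's `put_bucket_replication` call. The bucket names and the IAM role ARN are hypothetical placeholders, and versioning must already be enabled on both the source and destination buckets for replication to work.

```python
def replication_config(role_arn: str, destination_bucket: str) -> dict:
    """Replication configuration that copies every new object to another bucket."""
    return {
        "Role": role_arn,  # IAM role S3 assumes to replicate objects
        "Rules": [
            {
                "ID": "replicate-everything",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix matches all objects
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


def enable_replication(source_bucket: str, config: dict) -> dict:
    """Apply the configuration to the source bucket (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without the SDK

    s3 = boto3.client("s3")
    return s3.put_bucket_replication(
        Bucket=source_bucket, ReplicationConfiguration=config
    )


# Hypothetical role ARN and destination bucket, for illustration only.
config = replication_config(
    "arn:aws:iam::123456789012:role/example-replication-role",
    "example-records-backup-us-west-2",
)
```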

Use case example: A regional healthcare provider relies on on-prem servers to store patient records. When a storm knocks out their data center, operations freeze for two days, affecting appointments and compliance obligations.

After migrating to AWS, they use Amazon S3 with automated backups and AWS Elastic Disaster Recovery to replicate workloads across regions. When another outage occurs, failover happens in minutes, ensuring uninterrupted access to patient data and continuity of care.

5. Customer expectations: On-prem can’t deliver modern experiences to users

Today’s customers, clients, and partners expect businesses to be always on, digitally connected, and instantly responsive. Whether it’s checking order status, accessing financial dashboards, or booking appointments, digital experiences have become the default benchmark.

For SMBs relying on on-prem systems, meeting these expectations is increasingly difficult. Legacy infrastructure struggles to integrate with modern applications, lacks real-time data capabilities, and often results in inconsistent user experiences.

How the challenges manifest:

- Limited integrations: On-prem software stacks are often siloed, making it hard to connect with CRMs, e-commerce platforms, or third-party APIs.

- Delayed insights: Without real-time analytics, SMBs can’t provide customers with up-to-the-minute updates or personalized interactions.

- Downtime frustration: Scheduled maintenance or outages mean customers face delays, hurting trust and satisfaction.

How AWS bridges the gap: AWS enables SMBs to deliver real-time, seamless customer experiences with services like Amazon API Gateway (for smooth integrations), Amazon Kinesis (for real-time streaming data), and Amazon RDS/Aurora (for high-performance, always-available databases).

Amazon CloudFront ensures fast, global content delivery, while AWS Lambda supports event-driven responses to customer interactions without lag. Together, these cloud-native services allow SMBs to provide enterprise-level customer experiences without enterprise-level budgets.

Use case example: A mid-sized logistics SMB runs its shipment tracking platform on on-prem servers. Customers often face delays in getting real-time delivery updates due to slow batch data processing.

After moving to AWS, the company leverages Amazon Kinesis for streaming data and CloudFront for rapid delivery, enabling customers to track shipments in real time. The improved digital experience boosts customer satisfaction and strengthens the company’s competitive position.

Cloud infrastructure gives SMBs the scalability, resilience, and agility on-prem systems lack, but success depends on more than tools. Cloudtech, as an AWS Advanced Tier Partner, combines certified expertise with SMB-focused solutions to balance performance, security, and cost, enabling businesses to overcome on-prem limitations.

How does Cloudtech help SMBs move from on-prem to the cloud?

Migrating to the cloud isn’t just about shifting servers, but about rethinking how technology supports growth. Partnering with an AWS expert like Cloudtech gives SMBs access to proven migration frameworks, cost-optimized architectures, and strategies aligned with business goals. This ensures a move that minimizes disruption while unlocking scalability, security, and resilience.

Cloudtech’s edge lies in its SMB-first focus. It builds cloud environments that match lean budgets and dynamic growth needs, automates infrastructure to reduce overhead, and embeds compliance and resiliency from day one. Beyond migration, Cloudtech provides ongoing support so SMBs can innovate faster and stay ahead of larger competitors.

Key Cloudtech services for cloud migration and modernization:

- Infrastructure and workload assessment: Cloudtech evaluates current on-prem setups to pinpoint cost drains, bottlenecks, and risks, then maps out the most efficient migration paths to AWS.

- Seamless migration frameworks: Using AWS tools like Migration Hub and Application Migration Service, Cloudtech ensures workloads move securely and with minimal downtime, whether it’s databases, applications, or storage.

- Cloud-native integration: Once migrated, systems are connected with modern services such as analytics, AI, and automation, enabling SMBs to unlock value beyond simple “lift-and-shift.”

- Resilience and compliance by design: Cloudtech architects environments with multi-AZ redundancy, automated backups, and compliance controls, giving SMBs enterprise-grade reliability without enterprise costs.

Through these services, SMBs gain scalable infrastructure, reduced overhead, and the agility to innovate faster, all while staying focused on business growth.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Modern business demands speed, scalability, and resilience. These are qualities on-prem infrastructure struggles to deliver. By moving to the cloud, SMBs can cut costs, scale instantly, and unlock advanced tools for security, analytics, and innovation.

Working with an AWS partner like Cloudtech ensures the transition isn’t just a lift-and-shift, but a strategic modernization. Cloudtech helps SMBs design lean, cloud-native architectures that are secure, compliant, and built to grow with the business.

With Cloudtech, SMBs gain the freedom to move beyond costly, rigid infrastructure and embrace a cloud foundation that fuels efficiency and competitiveness. Connect with Cloudtech today!

FAQs

1. How quickly can an SMB see benefits after moving to the cloud?

Many SMBs begin to see cost savings and performance improvements within weeks of migration, especially when workloads are re-architected for scalability and efficiency instead of simply “lifting and shifting.”

2. Is cloud migration disruptive to daily operations?

With the right strategy, migration can be phased to minimize downtime. AWS and partners like Cloudtech use automation, replication, and cutover planning to ensure business continuity throughout the process.

3. How does the cloud support future growth that SMBs can’t predict today?

Cloud platforms are elastic by design. SMBs can start small and scale resources up or down instantly, without the need for upfront hardware investment or long procurement cycles.

4. What about industries with strict compliance needs like healthcare or finance?

AWS offers services aligned with frameworks such as HIPAA, GDPR, and FINRA. Cloudtech builds compliance requirements into the architecture so SMBs can innovate confidently with less regulatory risk.

5. Can cloud adoption help SMBs attract and retain talent?

Yes. Modern cloud environments allow developers and IT teams to work with cutting-edge tools, automate routine tasks, and focus on innovation. This makes SMBs more appealing to skilled professionals compared to legacy-bound competitors.

Eliminating manual errors with intelligent document processing for SMBs

Handling documents manually is slow, frustrating, and full of mistakes, especially for businesses dealing with invoices, contracts, and forms from multiple sources. That’s where intelligent document processing (IDP) comes in. By using AI to automatically read, classify, and validate documents, IDP turns messy data into accurate, ready-to-use information.

Take a small accounting firm processing hundreds of client invoices every day. Staff spend hours typing in amounts, dates, and client details, and mistakes inevitably happen. Payments get delayed, clients get frustrated, and valuable time is wasted. With IDP, AI-powered optical character recognition (OCR) and automated checks capture and organize invoice data instantly, cutting errors and freeing the team to focus on meaningful work.

This article looks at why reducing manual errors is so important for SMBs and explores practical IDP strategies that make document workflows faster, smoother, and more reliable.

Key takeaways:

- Intelligent document processing automates extraction, classification, and routing, drastically reducing manual errors and saving time.

- AI-powered OCR and validation ensure accurate data capture from diverse document formats, including handwritten or scanned files.

- Integration with ERP, CRM, and accounting systems eliminates duplicate entry and maintains consistent workflows across the business.

- Continuous learning models improve accuracy over time, adapting to new document types and evolving business needs.

- Cloudtech helps SMBs implement IDP efficiently, providing hands-on setup, workflow orchestration, and governance to make data reliable and actionable.

Why is reducing manual errors important for SMBs?

Manual errors in document handling are more than just minor inconveniences. They can ripple across business operations, impacting finances, compliance, and customer trust.

For SMBs, which often operate with lean teams and tight margins, the consequences of mistakes are magnified. One misentered invoice, mislabeled contract, or incorrect client record can lead to payment delays, regulatory penalties, or reputational damage.

The stakes for SMBs:

- Financial impact: Inaccurate data in invoices, purchase orders, or expense reports can result in underpayments, overpayments, or missed revenue. These errors directly affect cash flow and profitability.

- Operational inefficiency: Staff spend extra hours correcting mistakes, manually cross-checking documents, or re-entering data, reducing time available for strategic tasks.

- Compliance and audit risk: Industries like finance, healthcare, and logistics require accurate documentation for audits and regulatory reporting. Errors in records can trigger penalties or compliance breaches.

- Customer and partner trust: Repeated errors in contracts, shipments, or billing can erode confidence, leading to lost clients or strained supplier relationships.

Reducing manual errors through IDP enables SMBs not only to prevent costly mistakes but also to streamline workflows, improve decision-making, and free employees to focus on higher-value activities.

Intelligent document processing strategies for reducing manual errors

As volumes of invoices, contracts, forms, and customer records increase, the risk of mistakes multiplies, slowing operations and creating costly downstream errors. IDP provides a structured approach to automate these repetitive tasks, ensuring that data is captured accurately, organized consistently, and ready for analytics or business workflows.

Following IDP strategies isn’t just about technology; it’s about embedding reliability into daily operations. By standardizing how documents are processed, validating information automatically, and integrating systems end-to-end, SMBs can scale without proportionally increasing labor or error rates. This creates a foundation for faster decision-making, higher customer trust, and more efficient growth.

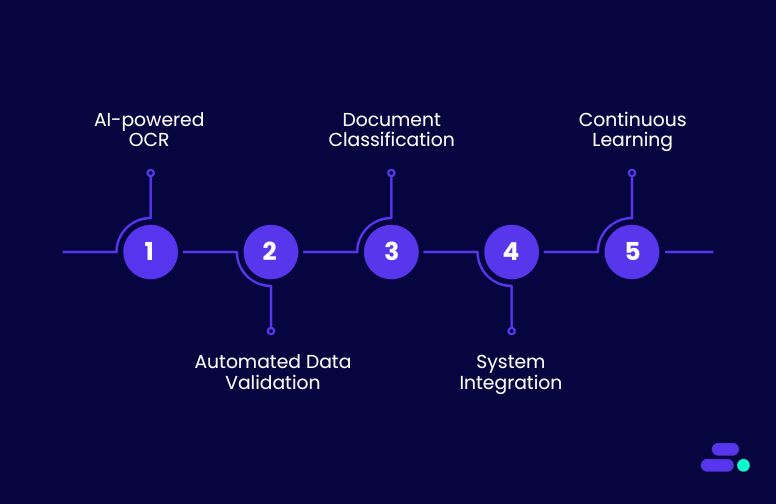

1. AI-powered optical character recognition (OCR)

AI-driven OCR leverages machine learning to accurately extract text from scanned documents, PDFs, and images, going beyond the limitations of traditional rule-based OCR. Modern AI models can handle handwriting, complex layouts, and multi-language documents, making it ideal for SMBs processing diverse document types at scale.

By converting unstructured content into structured, machine-readable data, organizations can immediately feed information into downstream systems without manual re-entry.

How this reduces mistakes:

- Eliminates manual transcription errors: By automatically reading and digitizing text, human typos or misinterpretations are avoided, especially for critical fields like invoice numbers, account IDs, or dates.

- Preserves contextual accuracy: AI models recognize tables, forms, and headers, ensuring that numbers, labels, and metadata remain correctly associated and reducing misalignment errors.

- Handles variability in documents: Machine learning adapts to different formats, fonts, and handwriting styles, preventing the common errors that occur when manually interpreting diverse document layouts.

Application: Tools like Amazon Textract enable SMBs to automatically detect and extract structured elements from invoices, purchase orders, contracts, or handwritten forms. This structured output can then be routed into ERP, accounting, or CRM systems, eliminating the need for repetitive manual data entry and reducing error propagation across business processes.
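As a rough illustration of the extraction step, the sketch below pairs form keys with their values from a Textract AnalyzeDocument response. The helper names (`extract_key_values`, `analyze_file`) are hypothetical, and `analyze_file` assumes boto3 is installed and AWS credentials are configured. The parsing itself runs on any list of response-shaped blocks.

```python
from typing import Dict, List


def _text_of(block: dict, by_id: Dict[str, dict]) -> str:
    """Join the WORD children of a block into one string."""
    words: List[str] = []
    for rel in block.get("Relationships", []):
        if rel["Type"] == "CHILD":
            for child_id in rel["Ids"]:
                child = by_id[child_id]
                if child["BlockType"] == "WORD":
                    words.append(child["Text"])
    return " ".join(words)


def extract_key_values(blocks: List[dict]) -> Dict[str, str]:
    """Pair each KEY block with the text of its linked VALUE block(s)."""
    by_id = {b["Id"]: b for b in blocks}
    fields: Dict[str, str] = {}
    for block in blocks:
        is_key = (
            block["BlockType"] == "KEY_VALUE_SET"
            and "KEY" in block.get("EntityTypes", [])
        )
        if not is_key:
            continue
        key = _text_of(block, by_id)
        value_parts: List[str] = []
        for rel in block.get("Relationships", []):
            if rel["Type"] == "VALUE":
                for value_id in rel["Ids"]:
                    value_parts.append(_text_of(by_id[value_id], by_id))
        fields[key] = " ".join(part for part in value_parts if part)
    return fields


def analyze_file(path: str) -> Dict[str, str]:
    """Send a local file to Textract (hypothetical wrapper; needs AWS access)."""
    import boto3  # imported lazily so the parser above also works offline

    client = boto3.client("textract")
    with open(path, "rb") as handle:
        response = client.analyze_document(
            Document={"Bytes": handle.read()},
            FeatureTypes=["FORMS"],
        )
    return extract_key_values(response["Blocks"])
```

The resulting dictionary of field names and values is the kind of structured output that can then be validated and passed to downstream systems.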

2. Automated data validation and verification

Automated validation ensures that extracted data is checked in real time against predefined rules, business logic, or trusted external datasets. This step is critical for SMBs processing high volumes of invoices, forms, or customer records, where even small errors can cascade into compliance issues, accounting discrepancies, or operational inefficiencies.

By embedding verification into the workflow, businesses can catch mistakes immediately before they affect downstream systems.

How this reduces mistakes:

- Prevents propagation of incorrect data: Validation rules immediately flag entries that don’t meet expected formats or ranges, stopping errors from entering ERP, CRM, or reporting systems.

- Cross-checks against authoritative sources: Integrating with external databases or internal master records ensures fields like tax IDs, account numbers, or pricing match trusted references.

- Reduces human intervention errors: Automating verification removes the need for repetitive manual checks, lowering the risk of oversight or fatigue-related mistakes.

Application: AWS services like AWS Lambda can run lightweight validation functions in real time, while Amazon DynamoDB can serve as a high-speed reference store for master data.

For example, when processing invoices, Lambda functions can validate vendor tax IDs, product codes, and total amounts against DynamoDB or external APIs, automatically flagging discrepancies for review. This ensures that only accurate, verified data flows into financial and operational systems, saving time and minimizing costly errors.
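The validation step described above could be sketched roughly as follows. This is a minimal illustration, not a production rule set: the field names, the EIN-style tax ID pattern, and the `known_vendors` entry in the event are all assumptions. In a real deployment, `lookup_vendor` would more likely wrap a DynamoDB `get_item` call against a master-data table.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Callable, Dict, List, Optional

# Assumed format: US EIN-style tax ID such as 12-3456789.
TAX_ID_PATTERN = re.compile(r"^\d{2}-\d{7}$")


def validate_invoice(
    invoice: Dict[str, object],
    lookup_vendor: Callable[[str], Optional[dict]],
) -> List[str]:
    """Return a list of problems; an empty list means the invoice passed."""
    problems: List[str] = []

    # Rule 1: the tax ID must be well formed and present in master data.
    tax_id = str(invoice.get("vendor_tax_id", ""))
    if not TAX_ID_PATTERN.match(tax_id):
        problems.append(f"tax ID has unexpected format: {tax_id!r}")
    elif lookup_vendor(tax_id) is None:
        problems.append(f"tax ID not found in vendor master data: {tax_id}")

    # Rule 2: line items must add up to the stated total.
    try:
        line_sum = sum(Decimal(str(a)) for a in invoice.get("line_amounts", []))
        total = Decimal(str(invoice.get("total", "")))
        if line_sum != total:
            problems.append(f"line items sum to {line_sum}, but total is {total}")
    except InvalidOperation:
        problems.append("an amount could not be parsed as a number")

    return problems


def lambda_handler(event, context):
    """Lambda entry point; `event` is assumed to carry the extracted invoice."""
    vendors = event.get("known_vendors", {})  # stand-in for a DynamoDB lookup
    problems = validate_invoice(event["invoice"], vendors.get)
    return {"status": "needs_review" if problems else "ok", "problems": problems}
```

Using `Decimal` rather than floating-point numbers keeps the total check exact, so an invoice is not flagged because of rounding noise.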

3. Document classification and intelligent routing

Intelligent document classification organizes incoming documents such as invoices, contracts, or customer forms into categories automatically and routes them to the appropriate team or workflow.

For SMBs handling diverse document types, this reduces bottlenecks, prevents misplacement, and ensures operational efficiency. By using AI to interpret content, businesses eliminate reliance on manual judgment and accelerate document processing.

How this reduces mistakes:

- Prevents misrouting: Automatically assigns documents to the correct team or workflow based on content, avoiding delays and errors from human sorting.

- Maintains consistency: Standardized classification ensures similar documents are treated uniformly, reducing variance in processing outcomes.

- Minimizes human oversight: Eliminates errors caused by fatigue, misreading, or inconsistent categorization by staff.

Application: AWS services such as Amazon Comprehend can perform natural language understanding to detect document types, keywords, and context, while AWS Step Functions orchestrates workflow automation to route each document to the correct processing queue.

For example, incoming vendor invoices are classified and automatically routed to accounts payable, ensuring timely approval and accurate financial records, without requiring staff to manually review and sort hundreds of documents daily.
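A minimal sketch of the routing decision is shown below. It assumes the classifier returns a list of `Name` and `Score` entries, as an Amazon Comprehend custom classifier does. The label names, queue names, and the 0.8 confidence threshold are hypothetical. Low-confidence or unknown documents fall back to manual review rather than being guessed at.

```python
from typing import Dict, List

# Hypothetical mapping from classifier labels to team queues.
ROUTES: Dict[str, str] = {
    "INVOICE": "accounts-payable",
    "CONTRACT": "legal-review",
    "CUSTOMER_FORM": "customer-support",
}
FALLBACK_QUEUE = "manual-review"


def route_document(classes: List[dict], min_score: float = 0.8) -> str:
    """Pick a queue from classifier output, falling back when unsure."""
    if not classes:
        return FALLBACK_QUEUE
    # Take the label the classifier is most confident about.
    best = max(classes, key=lambda c: c["Score"])
    if best["Score"] < min_score:
        return FALLBACK_QUEUE
    # Unknown labels also go to a person instead of a wrong team.
    return ROUTES.get(best["Name"], FALLBACK_QUEUE)
```

In a Step Functions workflow, the same decision could be expressed as a Choice state; the function form is used here only to keep the logic easy to read.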

4. Integration with business systems

Connecting intelligent document processing pipelines directly to ERP, CRM, or accounting systems ensures that extracted and validated data flows seamlessly into the tools SMBs rely on daily. This prevents repetitive manual data entry, reduces reconciliation work, and ensures information remains consistent across all systems.

By embedding integration into the IDP workflow, businesses achieve faster, more reliable operations and minimize errors caused by human handling.

How this reduces mistakes:

- Eliminates duplicate entry: Automated transfer of validated data prevents repeated typing or copy-paste errors.

- Ensures consistency across systems: Integrated pipelines maintain uniform data formats and values in ERP, CRM, or accounting software.

- Reduces reconciliation efforts: Fewer mismatches between systems mean less manual intervention and correction, freeing teams for higher-value tasks.

Application: AWS tools such as Amazon AppFlow enable low-code integration to sync processed documents directly with platforms like Salesforce, QuickBooks, or SAP. Alternatively, custom APIs can be developed to push validated data into legacy or specialized systems.

For example, once an invoice is processed via Amazon Textract and validated with Lambda functions, AppFlow automatically updates the corresponding record in the accounting system, ensuring accurate financial reporting and eliminating hours of manual data entry.
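Where a custom API is used instead of AppFlow, one possible shape for the hand-off is sketched below. The field names, the US-style date format, and the USD currency are assumptions for illustration. The idea is to normalize values once and attach a deterministic key, so that a retried push does not create a duplicate record in the target system.

```python
import hashlib
import json
from datetime import datetime
from decimal import Decimal
from typing import Dict


def to_accounting_payload(fields: Dict[str, str]) -> Dict[str, str]:
    """Normalize extracted invoice fields into one consistent shape."""
    # Assumed input date format: MM/DD/YYYY.
    issued = datetime.strptime(fields["invoice_date"], "%m/%d/%Y").date()
    # Strip currency symbols and thousands separators before parsing.
    amount = Decimal(fields["total"].replace("$", "").replace(",", ""))

    payload = {
        "invoice_number": fields["invoice_number"].strip().upper(),
        "issued_on": issued.isoformat(),
        "amount": f"{amount:.2f}",
        "currency": "USD",  # assumed; not taken from the document
    }
    # Same normalized content always yields the same key, so the
    # receiving system can detect and ignore repeated submissions.
    digest_source = json.dumps(payload, sort_keys=True).encode("utf-8")
    payload["idempotency_key"] = hashlib.sha256(digest_source).hexdigest()
    return payload
```

The receiving system would need to honor the key for deduplication to work; that behavior is assumed here rather than guaranteed by any particular platform.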

5. Continuous learning and feedback loops

Intelligent document processing systems become more accurate when they learn from past errors and user corrections. By incorporating feedback loops, AI models can adapt to new document formats, handwriting styles, or exceptions, reducing repeated mistakes over time.

This approach ensures SMBs’ IDP pipelines remain effective even as business needs and document types evolve.

How this reduces mistakes:

- Adapts to new formats: Models update automatically to handle new invoice layouts, forms, or content types.

- Minimizes repeated errors: Corrections made by users feed back into the system, preventing the same mistakes from recurring.

- Enhances classification and extraction accuracy: Continuous retraining improves the precision of text extraction, data validation, and document routing.

Application: Amazon SageMaker allows SMBs to retrain custom machine learning models on corrected datasets. For instance, if an extracted invoice field is flagged as incorrect, the corrected data can be fed back into SageMaker to refine the extraction and classification models. Over time, the system requires less human intervention, delivers higher data accuracy, and accelerates document processing workflows.
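The feedback loop can be pictured with a small sketch that turns reviewer corrections into training rows and reports where the model is most often wrong. The record fields (`text`, `predicted_label`, `corrected_label`) are hypothetical, and the two-column `label,text` layout is an assumption about what the retraining job expects.

```python
import csv
import io
from collections import Counter
from typing import Dict, List, Tuple


def corrections_to_training_csv(corrections: List[Dict[str, str]]) -> str:
    """Write reviewer-confirmed labels as `label,text` rows without a header."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for item in corrections:
        writer.writerow([item["corrected_label"], item["text"]])
    return buffer.getvalue()


def most_confused_labels(
    corrections: List[Dict[str, str]],
) -> List[Tuple[Tuple[str, str], int]]:
    """Count (predicted, corrected) pairs where the model was wrong."""
    pairs = Counter(
        (c["predicted_label"], c["corrected_label"])
        for c in corrections
        if c["predicted_label"] != c["corrected_label"]
    )
    return pairs.most_common()
```

The confusion counts give a simple way to decide which document types need more training examples before the next retraining run.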

AWS tools provide the foundation for accurate and efficient document processing, but real-world success requires experience and strategy. Cloudtech, as an AWS Advanced Tier Partner, combines certified expertise with SMB-focused solutions to ensure IDP pipelines extract, validate, and deliver data reliably.

How does Cloudtech help SMBs implement intelligent document processing?

Working with an AWS partner brings more than access to cloud tools. It provides certified expertise, proven frameworks, and guidance tailored to business needs. For SMBs implementing intelligent document processing, this means faster deployment, fewer errors, and smoother integration with existing systems.

Cloudtech stands out by combining deep AWS knowledge with an SMB-first approach. It designs lean, scalable IDP solutions that fit tight budgets and evolving workflows, ensures every pipeline is secure and compliant, and provides ongoing support to refine AI models over time.

Key Cloudtech services for IDP:

- IDP workflow assessment: Reviews document-heavy workflows to identify where manual errors occur, what documents need automation, and the most impactful areas to target for AI-driven extraction and validation.

- Document extraction and classification pipelines: Uses Amazon Textract for OCR, Amazon Comprehend for content understanding, and AWS Step Functions for orchestrating extraction, validation, and routing, ensuring documents are processed accurately and consistently.

- Integration with business systems: Connects automated pipelines to ERP, CRM, and accounting platforms via Amazon AppFlow or custom APIs, eliminating duplicate entry and ensuring validated data flows seamlessly into business applications.

- AI model training and continuous improvement: Prepares datasets and retrains AI models in Amazon SageMaker, allowing the system to learn from corrections, adapt to new formats, and continually improve extraction and classification accuracy.

With these services, Cloudtech enables SMBs to minimize manual errors, improve operational efficiency, and generate accurate, actionable insights from their documents, all without requiring large IT teams or enterprise-grade overhead.

See how other SMBs have modernized, scaled, and thrived with Cloudtech’s support →

Wrapping up

Intelligent document processing is all about creating reliable, error-free workflows that let SMBs handle critical documents at scale. By combining AI-driven extraction, smart validation, and seamless system integration, businesses can ensure that data is accurate, actionable, and ready for analytics or decision-making.

Partnering with an AWS expert like Cloudtech adds real value. Cloudtech helps SMBs implement IDP solutions that are tailored, scalable, and easy to manage, ensuring pipelines stay efficient and compliant while adapting to evolving business needs.

With Cloudtech, SMBs gain a trusted framework for transforming document-heavy operations into precise, automated processes. Connect with Cloudtech today!

FAQs

1. What types of documents can SMBs process with intelligent document processing?

IDP can handle a wide variety of document formats including invoices, receipts, contracts, forms, and handwritten notes. By automating extraction and classification, SMBs can process documents consistently regardless of source or structure.

2. How does intelligent document processing adapt to evolving business needs?

Modern IDP systems use machine learning models that improve over time, learning from corrections and exceptions. This enables SMBs to handle new document templates, formats, or content types without reconfiguring workflows.

3. Can small teams manage IDP without dedicated IT staff?

Yes. With prebuilt AWS services like Textract, Comprehend, and Step Functions, SMBs can implement IDP pipelines with minimal coding. Cloudtech further simplifies deployment by providing hands-on setup, monitoring, and training tailored for lean teams.

4. Does intelligent document processing support compliance and auditing?

IDP can be configured to track document changes, capture processing logs, and maintain audit trails. This helps SMBs meet regulatory requirements, ensure traceability, and reduce risk in industries like finance, healthcare, and legal services.

5. How does IDP improve collaboration across departments?

By automatically classifying and routing documents to the right teams, IDP ensures the right stakeholders get access to accurate information quickly. This reduces miscommunication, eliminates manual handoffs, and accelerates workflows.

From raw data to reliable insights: Ensuring data quality at every stage

In any industry, decisions are only as strong as the data behind them. When information is incomplete, inconsistent, or outdated, the consequences can range from lost revenue and compliance issues to reputational damage. For some sectors, however, the stakes are far higher.

In healthcare, the cost of poor data quality isn’t just financial; it can directly affect patient safety. Imagine a clinic relying on electronic health records (EHR) where patient allergy information is outdated or inconsistent across systems. A doctor prescribing medication based on incomplete data could trigger a severe allergic reaction, leading to emergency intervention.

This article explores why data quality matters, the risks of ignoring it, and how to build a framework that maintains accuracy from raw ingestion to AI-ready insights.

Key takeaways:

- Data quality directly impacts business insights, compliance, and operational efficiency.

- Poor-quality data in legacy or fragmented systems can lead to costly errors post-migration.

- AWS offers powerful tools to clean, govern, and monitor data at scale.

- Embedding quality checks and security into pipelines ensures long-term trust in analytics.

- Cloudtech brings AWS-certified expertise and an SMB-focused approach to deliver clean, reliable, and future-ready data.

Why is clean data important for SMB growth?

Growth often hinges on agility: making quick, confident decisions and executing them effectively. But agility without accuracy is a gamble. Clean, reliable data ensures that every strategy, campaign, and operational move is based on facts, not assumptions.

When data is riddled with errors, duplicates, or outdated entries, it not only skews decision-making but also wastes valuable resources. From missed sales opportunities to flawed forecasts, the ripple effect can slow growth and erode customer trust.

Key reasons clean data fuels SMB growth:

- Better decision-making: Accurate data allows leaders to spot trends, forecast demand, and allocate budgets with confidence.

- Improved customer relationships: Clean CRM data means personalized, relevant communication that strengthens loyalty.

- Operational efficiency: Fewer errors reduce time spent on manual corrections, freeing teams to focus on growth activities.

- Regulatory compliance: Clean, well-governed data helps SMBs meet industry compliance standards without last-minute scrambles.

- Stronger AI and analytics outcomes: For SMBs using predictive models or automation, clean data supports more reliable, less biased insights.