Consider a healthcare provider developing a predictive patient care tool. Its appointment data lives in one system, lab results in another, and patient history in a third. Because these systems are disconnected, the AI model receives only part of the patient’s story: it may know when the patient last visited but not the latest lab results or underlying conditions.

With this fragmented view, the model misses critical correlations between symptoms, test results, and medical history. Those gaps lead to less accurate predictions, forcing care teams to manually cross-check multiple records. The result is slower decision-making, higher workloads, and, ultimately, a risk to patient outcomes.

This article explains why data integration is the backbone of successful AI initiatives for SMBs, the key challenges to overcome, and how AWS-powered integration strategies can turn fragmented data into a high-value, innovation-ready asset.

Key takeaways:

- Unified data is the foundation of reliable AI: Consolidating historical, transactional, and real-time datasets ensures models see the full picture.

- Standardization improves accuracy: Consistent formats, schemas, and definitions reduce errors and speed up AI training.

- Automation accelerates insights: Automated ingestion, transformation, and synchronization save time and maintain data quality.

- Integrated data drives smarter decisions: Blending live and historical datasets enables timely, actionable business insights.

- SMB-focused solutions scale safely: Cloudtech’s AWS-powered pipelines and GenAI services help SMBs build AI-ready data ecosystems without complexity.

The role of data integration in building more reliable AI models

AI models thrive on diversity, volume, and quality of data, three elements that are often compromised when datasets are scattered. Siloed information not only reduces the range of features available for training, it also introduces inconsistencies in formats, timestamps, and naming conventions. These inconsistencies force the model to learn from a distorted version of reality.

Data integration addresses this at multiple layers:

- Schema alignment: Matching field names, units, and data types so the AI sees “age” or “revenue” in the same way across all sources.

- Entity resolution: Reconciling duplicates or mismatches (e.g., “Robert Smith” in one system, “Rob Smith” in another) to create a unified record.

- Temporal synchronization: Ensuring time-series data from different systems aligns to the same reference points, preventing false correlations.

- Quality control: Applying cleaning, validation, and enrichment steps before data enters the training pipeline.

For example, if a healthcare AI model integrates appointment logs, lab results, and historical diagnoses, schema alignment ensures “blood glucose” readings are in the same units, entity resolution ensures each patient’s record is complete, and temporal synchronization ensures lab results match the right appointment date. Without these steps, the model may misinterpret data or fail to link cause and effect, producing unreliable predictions.
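
The three alignment steps in this example can be sketched in a few lines of Python. This is an illustrative sketch only, not a production matcher: the record fields (`name`, `dob`), the 0.8 similarity threshold, and the rule of linking a lab result to the latest appointment on or before its date are all assumptions made for the example.

```python
from datetime import date
from difflib import SequenceMatcher

# Glucose: 1 mmol/L is approximately 18.016 mg/dL.
MGDL_PER_MMOLL = 18.016


def glucose_to_mgdl(value, unit):
    """Schema alignment: express every glucose reading in mg/dL."""
    if unit == "mg/dL":
        return value
    if unit == "mmol/L":
        return round(value * MGDL_PER_MMOLL, 1)
    raise ValueError(f"unknown unit: {unit}")


def same_patient(a, b, threshold=0.8):
    """Entity resolution: same birth date and sufficiently similar names.

    The field names and threshold are hypothetical choices for this sketch.
    """
    if a["dob"] != b["dob"]:
        return False
    score = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    return score >= threshold


def appointment_for(lab_date, appointment_dates):
    """Temporal synchronization: latest appointment on or before the lab date."""
    earlier = [d for d in appointment_dates if d <= lab_date]
    return max(earlier) if earlier else None
```

Requiring a matching birth date before comparing names is a deliberate choice here: name similarity alone can merge two different people, which is worse for a patient record than leaving a duplicate unresolved.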

Why data integration matters for AI reliability:

- Complete input for training: Integrated data ensures models receive all relevant attributes, increasing their ability to capture complex relationships.

- Improved accuracy: When no key variables are missing, the AI can make predictions that reflect real-world conditions.

- Reduced bias: Data gaps often skew results toward incomplete or non-representative patterns. Integration helps mitigate this risk.

- Operational efficiency: Teams spend less time reconciling mismatched datasets and more time analyzing insights.

- Stronger compliance: A single source of truth helps maintain regulatory accuracy, especially in sensitive industries like finance or healthcare.

In short, data integration isn’t just about combining sources. It’s about making sure every piece of information fits together in a way that AI can truly understand. That’s the difference between a model that “sort of works” and one that consistently delivers actionable insights.

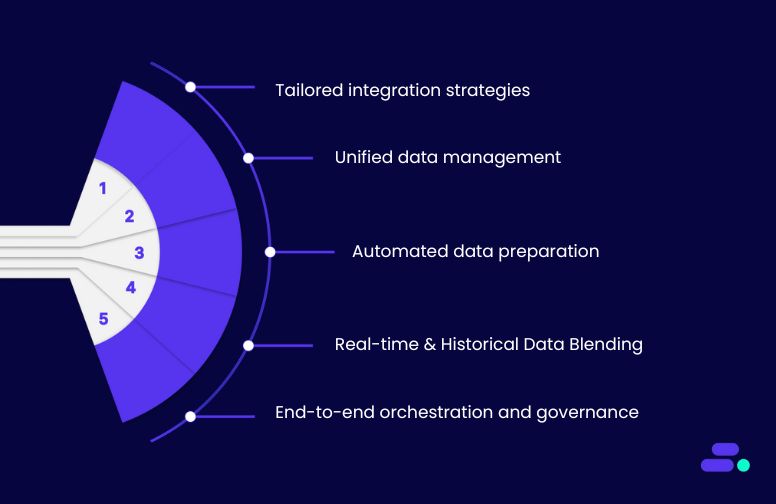

Five data integration strategies to supercharge AI initiatives

In the race to adopt AI, many organizations jump straight to model development, only to hit a wall when results fall short of expectations. The culprit is often not the algorithm, but the data feeding it. AI models are only as strong as the information they learn from, and when that information is scattered, inconsistent, or incomplete, no amount of tuning can fix the foundation.

That’s why the smartest AI journeys start before a single line of model code is written, with a robust data integration strategy. By unifying data from multiple systems, standardizing formats, and ensuring quality at the source, organizations give their AI models the complete and accurate inputs they need to detect patterns, uncover correlations, and generate reliable predictions.

1. Centralize data with a unified repository

AI models thrive on complete, coherent datasets, yet operational data is often spread across separate systems. A centralized data repository (such as a cloud-based data lake or data warehouse) consolidates these disparate datasets into one governed, accessible source of truth. This ensures that every training cycle begins with a consistent and comprehensive dataset, reducing the “garbage in, garbage out” problem in AI.

How it strengthens AI models:

- Eliminates blind spots by bringing together all relevant datasets, whether structured, semi-structured, or unstructured.

- Enforces consistent formats and schema alignment, reducing preprocessing effort and minimizing the risk of feature mismatches.

- Enables faster experimentation by making new data sources immediately available to data scientists without long integration cycles.

How to do it with AWS:

- Amazon S3: Use as the foundation for a scalable, secure data lake that stores raw and processed data from multiple sources.

- AWS Glue: Automate ETL (extract, transform, load) workflows to clean, standardize, and catalog datasets before loading them into the central repository.

- Amazon Redshift: Store and query structured data at scale for analytics and AI feature engineering.

- AWS Lake Formation: Manage fine-grained access controls, enforce governance, and simplify data sharing across teams.

- Amazon Data Firehose (formerly Amazon Kinesis Data Firehose): Stream real-time operational data into S3 or Redshift for near-instant availability.

Use case example: A retail company builds a demand forecasting AI without centralizing its data: sales transactions remain in the POS system, inventory lives in the ERP, and marketing spend is trapped in spreadsheets. The AI model fails to detect that a recent ad campaign caused regional stockouts because marketing data never intersects with inventory trends.

After implementing an AWS-powered centralized repository, data is continuously ingested from the POS, ERP, and marketing systems via Amazon Kinesis, stored in Amazon S3, transformed and cataloged with AWS Glue, and queried directly from Amazon Redshift for AI model training. All datasets are aligned, time-synced, and accessible in one place. The same model is retrained and now detects promotion-driven demand spikes, improving forecast accuracy and enabling proactive stock replenishment before shortages occur.
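
The retail scenario comes down to putting sales, inventory, and marketing records on a shared key. Below is a minimal Python sketch of that join, assuming each source can be reduced to records keyed by region and week. The field names (`units`, `stock_on_hand`, `spend`) are hypothetical, and in a pipeline like the one described this step would more likely run as a Glue job or a Redshift query than as in-memory Python.

```python
from collections import defaultdict


def join_by_region_week(sales, inventory, marketing):
    """Merge three record lists on (region, week) into one row per key."""
    rows = defaultdict(
        lambda: {"units_sold": 0, "stock_on_hand": None, "ad_spend": 0.0}
    )
    for r in sales:
        # Several transactions can share a key, so units are summed.
        rows[(r["region"], r["week"])]["units_sold"] += r["units"]
    for r in inventory:
        # One inventory snapshot per key is assumed; a later one overwrites.
        rows[(r["region"], r["week"])]["stock_on_hand"] = r["stock_on_hand"]
    for r in marketing:
        rows[(r["region"], r["week"])]["ad_spend"] += r["spend"]
    return dict(rows)
```

Once the three sources share a row, a week with high `ad_spend` and zero `stock_on_hand` becomes visible to the model as a single training example instead of two unrelated facts.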

2. Standardize data formats and definitions

Inconsistent data formats and ambiguous definitions are silent AI killers. If one system logs dates as “MM/DD/YYYY” and another as “YYYY-MM-DD,” or if “customer” means different things across departments, the model may misinterpret or ignore key features. Standardizing formats, data types, and business definitions ensures that AI models interpret every field correctly, preserving data integrity and model accuracy.
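
As a small illustration of the date example, the sketch below normalizes both formats to ISO 8601. It assumes the only formats in play are the two named in the paragraph; `KNOWN_FORMATS` and `to_iso_date` are names invented for this example.

```python
from datetime import datetime

# Assumed set of source formats: ISO (YYYY-MM-DD) and US-style (MM/DD/YYYY).
KNOWN_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def to_iso_date(raw):
    """Return the date as a YYYY-MM-DD string, or raise if the format is unknown."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # Not this format; try the next one.
    raise ValueError(f"unrecognized date format: {raw!r}")
```

Rejecting unknown formats rather than guessing is intentional: a string such as "03/07/2024" cannot be told apart from a DD/MM/YYYY date by inspection, so each source system needs to declare which convention it uses.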

How it strengthens AI models:

- Prevents feature mismatch errors by enforcing consistent schema across all datasets.

- Improves model interpretability by ensuring fields mean the same thing in every dataset.

- Reduces time spent on cleansing and reconciliation, allowing data scientists to focus on modeling and optimization.

How to do it with AWS:

- AWS Glue Data Catalog: Maintain a central metadata catalog of table schemas, and use Glue crawlers to detect schema changes as new data arrives.

- AWS Glue DataBrew: Perform low-code, visual data preparation to enforce format consistency before ingestion.

- Amazon Redshift Spectrum: Query standardized datasets in S3 without copying data, ensuring consistency across analytical workloads.

- AWS Lake Formation: Apply uniform governance and metadata tagging so every dataset is understood in the same context.

Use case example: A global e-commerce company trains a product recommendation AI where customer purchase dates, stored differently across regional databases, cause time-based features to be calculated incorrectly. The model misaligns buying patterns, recommending seasonal products out of sync with local trends.

After implementing an AWS-based standardization pipeline, all incoming sales, inventory, and marketing datasets are normalized using AWS Glue DataBrew, registered in the AWS Glue Data Catalog, and governed with AWS Lake Formation policies. Dates, currencies, and product codes follow a single global standard. When the same model is retrained, seasonal recommendations align with local demand cycles, increasing click-through rates and boosting regional sales during peak periods.

3. Automate data ingestion and synchronization

Manual data ingestion is slow, error-prone, and a bottleneck for AI readiness. AI models perform best when training datasets are consistently refreshed with accurate, up-to-date information. Automating ingestion and synchronization ensures that data from multiple sources flows into your central repository in near real time, eliminating stale insights and keeping models relevant.

How it strengthens AI models:

- Reduces model drift by ensuring fresh, synchronized data is always available for training and retraining.

- Minimizes human error and delays that occur in manual data collection and uploads.

- Enables near real-time analytics, empowering AI systems to adapt to changing business conditions faster.

How to do it with AWS:

- Amazon Kinesis Data Streams: Capture and process real-time data from applications, devices, and systems.

- Amazon Kinesis Data Firehose: Automatically deliver streaming data into Amazon S3, Redshift, or OpenSearch without manual intervention.

- AWS Glue: Orchestrate and automate ETL pipelines to prepare new data for immediate use.

- AWS Database Migration Service (AWS DMS): Continuously replicate data from on-premises or other cloud databases into AWS.

- AWS Step Functions: Coordinate ingestion workflows, error handling, and retries with serverless orchestration.

Use case example: A ride-hailing company builds a dynamic pricing AI model but uploads driver location data and trip histories only once per day. By the time the model runs, traffic patterns and demand surges have already shifted, leading to inaccurate fare recommendations.

After deploying an AWS-driven ingestion and sync solution, GPS pings from drivers are streamed via Amazon Kinesis Data Streams into Amazon S3, transformed in real time with AWS Glue, and immediately accessible to the AI engine. Historical trip data is kept continuously synchronized using AWS DMS. The retrained model now responds to live traffic and demand spikes within minutes, improving fare accuracy and boosting driver earnings and rider satisfaction.
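
One common pattern behind this kind of continuous synchronization is an incremental sync driven by a high-water mark: each run copies only the rows changed since the previous run. The sketch below illustrates the idea in plain Python. It is not how AWS DMS is implemented, and the `id` and `updated_at` fields are assumptions for the example.

```python
def sync_new_records(source_rows, target, watermark):
    """Copy rows updated after `watermark` into `target`.

    `target` is a dict keyed by record id. Returns the new watermark,
    which the caller stores and passes to the next run.
    """
    newest = watermark
    for row in source_rows:
        if row["updated_at"] > watermark:
            # Upsert by id, so re-running the sync never creates duplicates.
            target[row["id"]] = row
            newest = max(newest, row["updated_at"])
    return newest
```

`updated_at` can be any comparable value, such as an integer sequence number or an ISO 8601 timestamp string. Running the function again with the returned watermark and no new source changes leaves `target` untouched, which is what makes the job safe to schedule frequently.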

4. Implement strong data quality checks

Even the most advanced AI models will fail if trained on flawed data. Inconsistent, incomplete, or inaccurate datasets introduce biases and errors that reduce model accuracy and reliability. Embedding automated, ongoing data quality checks into your pipelines ensures that only clean, trustworthy data is used for AI training and inference.

How it strengthens AI models:

- Prevents “garbage in, garbage out” by filtering out inaccurate, duplicate, or incomplete records before they reach the model.

- Improves model accuracy and generalization by ensuring features are reliable and consistent.

- Reduces bias and unintended drift by keeping training and inference datasets accurate, current, and representative of real-world conditions.

How to do it with AWS:

- AWS Glue DataBrew: Profile, clean, and validate datasets using no-code, rule-based transformations.

- AWS Glue: Build ETL jobs that incorporate validation rules (e.g., null checks, schema matching, outlier detection) before loading data into the repository.

- Amazon Deequ: Use this open-source library (built on Apache Spark) for automated, scalable data quality verification and anomaly detection.

- Amazon CloudWatch: Monitor data pipelines for failures, delays, or anomalies in incoming datasets.

- AWS Lambda: Trigger automated remediation workflows when data fails quality checks.

Use case example: A healthcare startup develops a patient risk prediction AI model, but occasional CSV imports from partner clinics contain corrupted date fields and missing patient IDs. The model begins producing unreliable predictions, and clinicians lose trust in its recommendations.

After implementing AWS-based quality controls, incoming clinic data is first validated in AWS Glue DataBrew for completeness and schema accuracy. Amazon Deequ automatically flags anomalies like missing IDs or invalid dates, while AWS Lambda routes flagged datasets to a quarantine bucket for review. Clean, validated records are then loaded into the central data lake in Amazon S3. The retrained model shows a boost in predictive accuracy, restoring trust among healthcare providers.
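
The validate-then-quarantine flow in this use case can be sketched as follows. This is a simplified stand-in written in plain Python, not the DataBrew or Deequ API. The required fields, the `YYYY-MM-DD` date format, and the function names are assumptions for illustration.

```python
from datetime import datetime

# Hypothetical schema: fields every clinic record must carry.
REQUIRED_FIELDS = ("patient_id", "visit_date")


def check_record(record):
    """Return a list of problems found in one record (empty means clean)."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing {field}")
    visit_date = record.get("visit_date")
    if visit_date:
        try:
            datetime.strptime(visit_date, "%Y-%m-%d")
        except ValueError:
            problems.append("invalid visit_date")
    return problems


def split_clean_and_quarantine(records):
    """Route each record to the clean list or, with its problems, to quarantine."""
    clean, quarantine = [], []
    for record in records:
        problems = check_record(record)
        if problems:
            quarantine.append({"record": record, "problems": problems})
        else:
            clean.append(record)
    return clean, quarantine
```

Keeping the list of problems alongside each quarantined record gives reviewers the reason for rejection, so recurring issues can be traced back to the clinic or export that produced them.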

5. Integrate historical and real-time data streams

AI models achieve peak performance when they can learn from the past while adapting to the present. Historical data provides context and patterns, while real-time data ensures predictions reflect the latest conditions. Integrating both streams creates dynamic, context-aware models that can respond immediately to new information without losing sight of long-term trends.

How it strengthens AI models:

- Enables continuous learning by combining long-term trends with up-to-the-moment events.

- Improves prediction accuracy for time-sensitive scenarios like demand forecasting, fraud detection, or predictive maintenance.

- Allows real-time AI inference while retaining the ability to retrain models with updated datasets.

How to do it with AWS:

- Amazon S3: Store and manage large volumes of historical datasets in a cost-effective, durable repository.

- Amazon Kinesis Data Streams/Kinesis Data Firehose: Capture and deliver real-time event data (transactions, IoT signals, clickstreams) directly into storage or analytics platforms.

- Amazon Redshift: Combine and query historical and streaming data for advanced analytics and AI feature engineering.

- AWS Glue: Automate the transformation and joining of historical and live data into AI-ready datasets.

- Amazon SageMaker Feature Store: Maintain and serve consistent features built from both real-time and historical inputs for training and inference.

Use case example: A regional power utility’s outage prediction AI is trained only on historical maintenance logs and weather patterns. It fails to anticipate sudden failures caused by unexpected equipment surges during heatwaves. This leads to unplanned downtime and costly emergency repairs.

By integrating decades of maintenance history stored in Amazon S3 with real-time sensor readings from substations streamed via Amazon Kinesis, the utility gains a live operational view. AWS Glue merges ongoing IoT telemetry with past failure patterns, and Amazon SageMaker Feature Store delivers enriched features to the prediction model.

The updated AI now detects anomalies minutes after they begin, allowing maintenance teams to take preventive action before outages occur, reducing downtime incidents and cutting emergency repair costs.
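
To make the idea of combining the two streams concrete, the sketch below scores a live sensor reading against a baseline computed from historical readings. It is a deliberately simple z-score check, not the utility’s model or the SageMaker Feature Store API. The function names and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def build_features(history, live_reading):
    """Combine a historical baseline with the latest reading.

    `history` needs at least two values so a standard deviation exists.
    """
    baseline = mean(history)
    spread = stdev(history)
    # Guard against a flat history, where the spread would be zero.
    z_score = (live_reading - baseline) / spread if spread > 0 else 0.0
    return {"live": live_reading, "baseline": baseline, "z_score": z_score}


def is_anomalous(features, threshold=3.0):
    """Flag readings far from the historical baseline (threshold is an assumption)."""
    return abs(features["z_score"]) > threshold
```

Neither input is useful alone: the history has no knowledge of the current reading, and the live value has no sense of what is normal. The feature only carries signal once both are available in the same place.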

So, effective AI isn’t just about choosing the right algorithms. It’s about making sure the data behind them is connected, consistent, and complete. With the help of Cloudtech and its AWS-powered data integration approach, SMBs can break down silos and unify every relevant dataset into a single, governed ecosystem.

How does Cloudtech help SMBs turn disconnected data into a unified AI-ready asset?

Disconnected data limits AI potential. Historical, transactional, and real-time datasets must flow seamlessly into a unified, governed environment for models to deliver accurate predictions. Cloudtech, an AWS Advanced Tier Services Partner focused exclusively on SMBs, solves this by combining robust data integration with practical GenAI solutions.

- GenAI Proof of Concept (POC): Cloudtech rapidly tests AI use cases with integrated datasets, delivering a working model in four weeks. SMBs gain actionable insights quickly without large upfront investments.

- Intelligent Document Processing (IDP): Data from forms, invoices, and contracts is automatically extracted, classified, and merged into ERP or document management systems (DMS). This reduces manual effort, cuts errors, and accelerates document-heavy workflows.

- AI Insights with Amazon Q: By using integrated datasets, Cloudtech enables natural-language queries, conversational dashboards, and executive-ready reports. SMBs can make faster, data-driven decisions without needing specialized analytics teams.

- GenAI Strategy Workshop: Cloudtech helps SMBs identify high-impact AI use cases and design reference architectures using unified data. This ensures AI initiatives are grounded in complete, accurate, and accessible datasets.

- GenAI Data Preparation: Clean, structured, and harmonized data is delivered for AI applications, improving model accuracy, speeding up training, and reducing errors caused by inconsistent or incomplete information.

By combining AWS-powered data integration with these GenAI services, Cloudtech turns fragmented datasets into a strategic asset, enabling SMBs to build reliable AI models, accelerate innovation, and extract tangible business value from their data.

Wrapping up

Data integration is the foundation for AI, analytics, and smarter decision-making in SMBs. When data is unified, standardized, and continuously synchronized, businesses gain clarity, improve efficiency, and unlock actionable insights from every dataset.

Cloudtech, with its AWS-certified expertise and SMB-focused approach, helps organizations build robust integration pipelines that are automated, scalable, and reliable. It ensures AI models and analytics tools work with complete, accurate information.

With Cloudtech’s AWS-powered data integration solutions, SMBs can transform scattered, siloed data into a strategic asset, fueling smarter predictions, faster decisions, and sustainable growth. Explore how Cloudtech can help your business unify its data and power the next generation of AI-driven insights. Connect with the Cloudtech team today.

FAQs

1. How can SMBs measure the ROI of a data integration initiative?

Cloudtech advises SMBs to track both direct and indirect benefits, such as reduced manual reconciliation time, faster AI model training, improved forecast accuracy, and enhanced decision-making speed. By setting measurable KPIs during the integration planning phase, SMBs can quantify cost savings, productivity gains, and revenue impact over time.

2. Can data integration help SMBs comply with industry regulations?

Yes. Integrating data into a governed, centralized repository allows SMBs to enforce access controls, maintain audit trails, and ensure data lineage. Cloudtech leverages AWS tools like Lake Formation and Glue to help businesses meet requirements under regulations such as HIPAA and GDPR, or FINRA rules, while supporting analytics and AI initiatives.

3. How do SMBs prioritize which datasets to integrate first?

Cloudtech recommends starting with high-value data that drives immediate business impact, such as customer, sales, or operational datasets, while considering dependencies and integration complexity. Prioritizing in this way ensures early wins, faster ROI, and a solid foundation for scaling integration efforts to more complex or less structured data.

4. What role does metadata play in effective data integration?

Metadata provides context about the datasets, including origin, structure, and usage patterns. Cloudtech uses tools like AWS Glue Data Catalog to manage metadata, making it easier for SMBs to track data quality, enforce governance, and enable AI models to consume data accurately and efficiently.

5. Can integrated data improve collaboration between teams in SMBs?

Absolutely. When datasets are centralized and accessible, different teams like sales, marketing, finance, and operations can work from the same trusted source. Cloudtech ensures data is not only integrated but discoverable, empowering cross-functional teams to make consistent, data-driven decisions and reducing silos that slow growth.