

Data is the backbone of business decisions, especially when organizations operate with tight margins and limited resources. For small and medium-sized businesses (SMBs), data scattered across spreadsheets, apps, and cloud folders is slow to find, hard to trust, and difficult to analyze.

According to Gartner, poor data quality costs organizations an average of $12.9 million a year. SMBs have even less room to absorb that kind of waste. This is where an Amazon data lake proves invaluable.

A data lake provides centralized, scalable storage. Businesses can keep all their structured and unstructured data in one secure, searchable location, which also makes that data easier to analyze. This guide covers 10 practical best practices for building an AWS data lake that aligns with a business's specific goals.

What is an Amazon data lake, and why is it important for SMBs?

An Amazon data lake is a centralized storage system built on Amazon S3. It is designed to hold all types of data, whether that data comes from CRM systems, accounting software, IoT devices, or customer support logs. Businesses do not need to convert or structure the data before storing it, which saves time and development resources. This makes data lakes particularly suitable for SMBs that gather data from many sources but lack large IT teams.

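Traditional databases and data warehouses are more rigid. They require a schema to be defined before data is loaded (schema-on-write). They also often bundle storage with compute, so costs grow with compute capacity and not just with data volume. A data lake flips that model. Raw data is stored as-is, and a structure is applied only when the data is read (schema-on-read). Storage and query engines also scale and are billed separately. This gives businesses more control, scales with growth, and supports advanced analytics without the high overhead typically associated with traditional systems.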

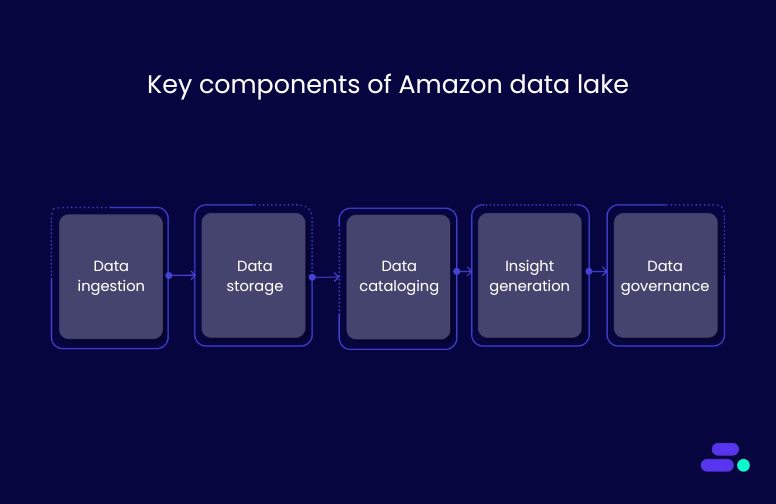

To understand how an Amazon data lake works, it helps to know the five key components that support data processing at scale:

- Data ingestion: Businesses can bring in data from both cloud-based and on-premises systems. Services such as AWS DataSync, Amazon Data Firehose, or AWS Database Migration Service (DMS) move that data into Amazon S3 efficiently.

- Data storage: All data is stored in Amazon S3, a highly durable and scalable object storage service.

- Data cataloging: AWS Glue crawlers can scan data in Amazon S3, infer its schema, and register it as tables in the AWS Glue Data Catalog. This makes it easier for businesses to search, filter, and prepare data for analysis.

- Data analysis and visualization: Data lakes can be connected to tools like Amazon Athena or Amazon QuickSight. Businesses can then query, visualize, and uncover insights directly, without moving data elsewhere.

- Data governance: Built-in controls such as access permissions, encryption, and logging help businesses keep data secure and accountable. Amazon S3 server access logs and AWS CloudTrail record who made which requests. Permissions can be enforced using AWS IAM roles or AWS Lake Formation.

Why an Amazon data lake matters for business

- Centralized access: Businesses can store all their data, from product inventory to customer feedback, in one place that teams across departments can access.

- Flexibility for all data types: Businesses can keep JSON files, CSV exports, videos, PDFs, and more without needing to transform them first.

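- Lower costs at scale: With Amazon S3, businesses pay for the storage they actually use (plus requests and data transfer) rather than for pre-provisioned capacity. Amazon S3 Intelligent-Tiering can reduce costs further by automatically moving objects to cheaper tiers as they are accessed less often.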

- Access to advanced analytics: Businesses can run SQL queries with Amazon Athena, train machine learning models with Amazon SageMaker, or build dashboards with Amazon QuickSight directly on their Amazon data lake, without moving the data.

With the rise of generative AI (GenAI), businesses can unlock even greater value from their Amazon data lake.

Amazon Bedrock enables SMBs to build and scale generative AI applications without managing the underlying infrastructure. Businesses can connect Bedrock to a data lake; for example, Amazon Bedrock Knowledge Bases can use documents stored in Amazon S3 as a data source. Pre-trained foundation models can then generate insights, summarize data automatically, and support decision-making, while businesses keep control over data security and compliance.

10 best practices to build a smart, scalable data lake on AWS

A successful Amazon data lake is more than just a storage bucket. It’s a living, evolving system that supports growth, analysis, and security at every stage. These 10 best practices will help businesses avoid costly mistakes and build a data lake that delivers real, measurable results.

1. Design a tiered storage architecture

Start by separating data into three functional zones:

- Raw zone: This is the original data, untouched and unfiltered. Think IoT sensor feeds, app logs, or CRM exports.

- Staging zone: Store cleaned or transformed versions here. It’s used by data engineers for processing and QA.

- Curated zone: Only high-quality, production-ready datasets go here, which are used by business teams for reporting and analytics.

This setup ensures data flows cleanly through the pipeline, reduces errors, and keeps teams from working on outdated or duplicate files.
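A common way to implement the three zones is to use top-level prefixes in a single Amazon S3 bucket. The Python sketch below assumes that layout and a hypothetical `<zone>/<source>/<dataset>/<file>` key convention. It only computes object keys and enforces the one-way raw → staging → curated flow. The actual cleaning and copying is left to whatever pipeline a business uses.

```python
# Assumed layout: one S3 bucket, one top-level prefix per zone (hypothetical convention).
ZONES = ("raw", "staging", "curated")


def zone_key(zone: str, source: str, dataset: str, filename: str) -> str:
    """Build an object key such as 'raw/crm/contacts/2024-05-01.json'."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone!r}")
    return f"{zone}/{source}/{dataset}/{filename}"


def promote(key: str) -> str:
    """Return the key the same dataset file would use in the next zone.

    Data only moves forward (raw -> staging -> curated); curated is final.
    """
    zone, rest = key.split("/", 1)
    position = ZONES.index(zone)  # raises ValueError for an unknown zone
    if position == len(ZONES) - 1:
        raise ValueError("curated data has no next zone")
    return f"{ZONES[position + 1]}/{rest}"


if __name__ == "__main__":
    key = zone_key("raw", "crm", "contacts", "2024-05-01.json")
    print(key)
    print(promote(key))
```

Keeping the source and dataset segments identical across zones makes it easy to trace a curated file back to the raw export it came from.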

2. Use open, compressed data formats

Amazon S3 supports many file types, but not all formats perform the same. For analytical workloads, use columnar formats like Parquet or ORC.

- They compress better than CSV or JSON, saving you storage costs.

- Tools like Amazon Athena, Amazon Redshift Spectrum, and AWS Glue process them much faster.

- You only scan the columns you need, which speeds up queries and reduces compute charges.

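Example: Amazon Athena bills by the amount of data each query scans, so converting JSON logs to compressed Parquet can substantially cut the cost of regularly scheduled reports. The actual savings depend on how many columns each report reads and how well the data compresses.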
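The cost arithmetic can be sketched in Python to show why columnar formats matter. The sketch assumes a price of $5.00 per TB scanned, so check current Amazon Athena pricing for your Region. It also uses Athena's billing rule of rounding each query up to the nearest megabyte, with a 10 MB minimum. The 100 GiB table, the 3-of-20 columns, and the 25% compression ratio are illustrative assumptions, not measurements. The estimate also treats every column as the same size.

```python
import math

MB = 1024 ** 2
TB = 1024 ** 4

# Assumption: $5.00 per TB scanned; confirm current Athena pricing for your Region.
PRICE_PER_TB = 5.00


def athena_query_cost(bytes_scanned: int, price_per_tb: float = PRICE_PER_TB) -> float:
    """Cost of one query: bytes rounded up to whole MB, with a 10 MB minimum."""
    billed_mb = max(10, math.ceil(bytes_scanned / MB))
    return billed_mb * MB / TB * price_per_tb


def columnar_scan_bytes(raw_bytes: int, compression_ratio: float,
                        columns_read: int, total_columns: int) -> int:
    """Rough bytes scanned when a columnar file is read for only some columns.

    Assumes every column is the same size and that compression shrinks the
    data to `compression_ratio` of its original size.
    """
    return int(raw_bytes * compression_ratio * columns_read / total_columns)


if __name__ == "__main__":
    raw = 100 * 1024 ** 3  # hypothetical 100 GiB of JSON logs with 20 fields
    json_cost = athena_query_cost(raw)  # row-oriented JSON: every byte is scanned
    parquet_cost = athena_query_cost(columnar_scan_bytes(raw, 0.25, 3, 20))
    print(f"JSON:    ${json_cost:.4f} per query")
    print(f"Parquet: ${parquet_cost:.4f} per query")
```

Under these assumptions the script prints $0.4883 for JSON and $0.0183 for Parquet per query. Real savings will differ, because columns vary in size and compression ratios depend on the data.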

3. Apply fine-grained access controls

SMBs might have fewer users than large enterprises, but data access still needs control. Broad admin roles or shared credentials should be avoided.

- Define roles and permissions with AWS IAM. For finer-grained governance, AWS Lake Formation can restrict access at the table, column, or row level. For example, analysts might see employee IDs but not salaries.

- When AWS Lake Formation governs a dataset, keep the IAM policies for data consumers narrow. Avoid giving them direct Amazon S3 read access to the underlying data locations, because a principal that can read the S3 objects directly can bypass Lake Formation's column- and row-level filters.

- Enable audit trails so you can track who accessed what and when.

- Use AWS CloudTrail for continuous monitoring of access and changes, and Amazon Macie to automatically discover and classify sensitive data, helping maintain security and compliance.

This protects sensitive data, helps you stay compliant (HIPAA, GDPR, etc.), and reduces internal risk.
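Some teams read Amazon S3 directly instead of going through Lake Formation-governed tables. For them, least privilege can be written as an IAM policy scoped to a single zone. The Python sketch below builds such a policy document. The bucket name `example-data-lake` and the `curated/` prefix are hypothetical. If Lake Formation governs the same data, grant access through Lake Formation instead.

```python
import json


def curated_read_only_policy(bucket: str, prefix: str = "curated/") -> dict:
    """IAM policy that lets a role list and read only one zone of the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket ARN; the condition limits
                # listing to keys under the zone prefix.
                "Sid": "ListZonePrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # GetObject applies to object ARNs under the zone prefix only.
                "Sid": "ReadZoneObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(curated_read_only_policy("example-data-lake"), indent=2))
```

The policy grants no write or delete actions. That keeps business users from altering curated datasets by accident.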

4. Tag data for lifecycle and access management

Tags are more than just labels; they are powerful tools for automation, organization, and cost tracking. By assigning metadata tags, businesses can:

- Automatically manage the lifecycle of data, ensuring that old data is archived or deleted at the right time.

- Apply granular access controls, ensuring that only the right teams or individuals have access to sensitive information.

- Track usage and generate reports based on team, project, or department.

- Break down storage and processing costs by project or department by activating resource tags, such as S3 bucket tags, as cost allocation tags. (Cost allocation works on resource tags, not on individual object tags.)

For SMBs with lean IT teams, tagging streamlines data management and reduces the need for constant manual intervention, helping to keep the data lake organized and cost-efficient.
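Object tags and tag-filtered lifecycle rules are plain data structures in the Amazon S3 API. The Python sketch below builds them in the shapes that boto3's `put_object_tagging` (`Tagging=`) and `put_bucket_lifecycle_configuration` (`LifecycleConfiguration=`) accept. The tag keys, bucket, object key, and 90-day retention are hypothetical examples. The boto3 calls are left as comments so the sketch runs without AWS credentials.

```python
MAX_OBJECT_TAGS = 10  # S3 allows at most 10 tags per object


def to_tag_set(tags: dict) -> dict:
    """Convert {'team': 'finance'} into S3's {'TagSet': [...]} shape."""
    if len(tags) > MAX_OBJECT_TAGS:
        raise ValueError("S3 objects can carry at most 10 tags")
    return {"TagSet": [{"Key": k, "Value": v} for k, v in sorted(tags.items())]}


def expire_by_tag_rule(rule_id: str, key: str, value: str, days: int) -> dict:
    """Lifecycle rule deleting objects with one tag, `days` after creation."""
    return {
        "ID": rule_id,
        "Filter": {"Tag": {"Key": key, "Value": value}},
        "Status": "Enabled",
        "Expiration": {"Days": days},
    }


if __name__ == "__main__":
    tagging = to_tag_set({"team": "marketing", "retention": "90d"})
    lifecycle = {"Rules": [expire_by_tag_rule("expire-90d", "retention", "90d", 90)]}
    # With boto3 and credentials configured (hypothetical bucket and key):
    # s3 = boto3.client("s3")
    # s3.put_object_tagging(Bucket="example-data-lake",
    #                       Key="raw/ads/clicks.json", Tagging=tagging)
    # s3.put_bucket_lifecycle_configuration(Bucket="example-data-lake",
    #                                       LifecycleConfiguration=lifecycle)
    print(tagging)
    print(lifecycle)
```

`put_bucket_lifecycle_configuration` replaces the bucket's entire lifecycle configuration. Any existing rules therefore need to be included in the same `Rules` list.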

5. Use Amazon S3 storage classes to control costs

Storage adds up, especially when you're keeping logs, backups, and historical data. Here's how to keep costs in check:

- Use Amazon S3 Standard for active data.

- Switch to Amazon S3 Intelligent-Tiering for unpredictable access.

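- Use the Amazon S3 Glacier storage classes for rarely accessed data. S3 Glacier Instant Retrieval suits data that must still be readable in milliseconds. S3 Glacier Flexible Retrieval suits data where minutes to hours is acceptable. S3 Glacier Deep Archive is for very long-term archival at the lowest price point.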

- Consider Amazon S3 One Zone-Infrequent Access (One Zone-IA) for infrequently accessed data that doesn't need multi-AZ resilience, such as data that can be re-created. It costs less than S3 Standard-IA, but it stores data in a single Availability Zone, so the data can be lost if that zone is destroyed.

- Set up Amazon S3 Lifecycle policies to automate transitions between S3 Standard, S3 Intelligent-Tiering, S3 Glacier, and S3 Glacier Deep Archive, and to delete data that is no longer needed.

Lifecycle rules act on an object's age (days since creation), not on how often it is read. For access-based movement, rely on S3 Intelligent-Tiering. Used together, the two help businesses avoid unnecessary costs and manage old data properly without manual intervention.
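An age-based lifecycle rule for one zone can be sketched as follows. The Python helper builds a rule in the shape the Amazon S3 lifecycle API accepts, for example through boto3's `put_bucket_lifecycle_configuration`. The `raw/` prefix, the 30/90/365-day schedule, and the seven-year expiration are hypothetical; each business should set these from its own access and retention needs. The helper also enforces one S3 requirement: objects must be at least 30 days old before a lifecycle rule can move them to Standard-IA or One Zone-IA.

```python
from typing import List, Optional, Tuple

# Lifecycle transition targets; "GLACIER" is S3 Glacier Flexible Retrieval.
VALID_CLASSES = {"STANDARD_IA", "ONEZONE_IA", "INTELLIGENT_TIERING",
                 "GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}
IA_CLASSES = {"STANDARD_IA", "ONEZONE_IA"}


def tiering_rule(prefix: str, transitions: List[Tuple[int, str]],
                 expire_after_days: Optional[int] = None) -> dict:
    """Lifecycle rule moving objects under `prefix` to cheaper classes by age."""
    steps = sorted(transitions)  # order by days since object creation
    for days, storage_class in steps:
        if storage_class not in VALID_CLASSES:
            raise ValueError(f"unsupported storage class: {storage_class}")
        if storage_class in IA_CLASSES and days < 30:
            raise ValueError(f"{storage_class} transitions need at least 30 days")
    rule = {
        "ID": f"tiering-{prefix.strip('/')}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": d, "StorageClass": c} for d, c in steps],
    }
    if expire_after_days is not None:
        # Sanity check: expiring before the last transition would make it pointless.
        if steps and expire_after_days <= steps[-1][0]:
            raise ValueError("expiration must come after the last transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


if __name__ == "__main__":
    lifecycle = {"Rules": [tiering_rule(
        "raw/", [(30, "STANDARD_IA"), (90, "GLACIER"), (365, "DEEP_ARCHIVE")],
        expire_after_days=2555)]}  # about seven years; hypothetical retention
    print(lifecycle)
```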

6. Catalog everything with AWS Glue

A data lake without a catalog is like a warehouse without a map. Businesses may store vast amounts of data, but without proper organization, finding specific information becomes a challenge. For SMBs, quick access to trusted data is essential.

Businesses should use the AWS Glue Data Catalog to:

- Register and track all datasets stored in Amazon S3.

- Maintain schema history for evolving data structures.

- Enable SQL-based querying with Amazon Athena or Amazon Redshift Spectrum.

- Simplify governance by organizing data into searchable tables and databases.
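Tables in the AWS Glue Data Catalog can be created in several ways: by a Glue crawler, through the Glue API, or by running DDL in Amazon Athena, which stores the table definition in the Data Catalog. The Python sketch below only generates an Athena `CREATE EXTERNAL TABLE` statement for Parquet data with Hive-style partitions. The database, table, columns, and S3 location in the example are hypothetical.

```python
from typing import List, Tuple

Column = Tuple[str, str]  # (column name, Athena/Hive type)


def create_table_ddl(database: str, table: str, columns: List[Column],
                     partitions: List[Column], location: str) -> str:
    """Athena DDL for an external Parquet table registered in the Glue Data Catalog."""
    if not location.startswith("s3://") or not location.endswith("/"):
        raise ValueError("location should be an s3:// prefix ending in '/'")
    cols = ",\n  ".join(f"`{name}` {col_type}" for name, col_type in columns)
    ddl = f"CREATE EXTERNAL TABLE IF NOT EXISTS `{database}`.`{table}` (\n  {cols}\n)\n"
    if partitions:
        parts = ", ".join(f"`{name}` {col_type}" for name, col_type in partitions)
        ddl += f"PARTITIONED BY ({parts})\n"
    ddl += f"STORED AS PARQUET\nLOCATION '{location}'"
    return ddl


if __name__ == "__main__":
    print(create_table_ddl(
        "sales", "orders",
        columns=[("order_id", "string"), ("customer_id", "string"), ("amount", "double")],
        partitions=[("year", "string"), ("month", "string")],
        location="s3://example-data-lake/curated/orders/",
    ))
```

Creating a partitioned table does not load its existing partitions. One option is to run `MSCK REPAIR TABLE` for Hive-style (`year=2024/...`) folders. Another is to let a Glue crawler update the catalog.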

7. Automate ingestion and processing

Manual uploads and data preparation do not scale. If businesses spend time moving files, they aren't focusing on analyzing them. Automating this step keeps the data lake up to date and the team focused on deriving insights.

Here’s how businesses can streamline data ingestion and processing:

- Trigger workflows using Amazon S3 event notifications when new files arrive.

- Use AWS Lambda to validate, clean, or transform data as it arrives.

- A Lambda function can run for at most 15 minutes per invocation. For larger workloads, consider AWS Glue jobs for batch processing or Amazon Kinesis for real-time streaming.

- Schedule recurring ETL jobs with AWS Glue for batch data processing.

- Reduce operational overhead and ensure data freshness without daily oversight.

- Utilize infrastructure as code tools like AWS CloudFormation or Terraform to automate data lake infrastructure provisioning, ensuring repeatability and easy updates.
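Here is a minimal sketch of the first two steps: a Python AWS Lambda handler that reads an Amazon S3 event notification. It decodes each object key, since S3 URL-encodes keys in event payloads, and sorts new files into accepted and rejected lists. The accepted file types are a simplifying assumption. So is the choice to only report results rather than start a transform. A real pipeline might start an AWS Glue job or move rejected files to a quarantine prefix at that point.

```python
from urllib.parse import unquote_plus

# Hypothetical rule: only these file types are accepted into the raw zone.
ACCEPTED_SUFFIXES = (".csv", ".json", ".parquet")


def lambda_handler(event, context):
    """Triage the objects named in an S3 event notification."""
    accepted, rejected = [], []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes keys in notifications (a space arrives as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        uri = f"s3://{bucket}/{key}"
        if key.lower().endswith(ACCEPTED_SUFFIXES) and size > 0:
            accepted.append(uri)
        else:
            rejected.append(uri)  # empty file or unexpected type
    # A production pipeline might start a Glue job for accepted files here.
    return {"accepted": accepted, "rejected": rejected}


if __name__ == "__main__":
    sample = {"Records": [
        {"s3": {"bucket": {"name": "example-data-lake"},
                "object": {"key": "raw/crm/May+export.csv", "size": 2048}}},
        {"s3": {"bucket": {"name": "example-data-lake"},
                "object": {"key": "raw/crm/notes.txt", "size": 12}}},
    ]}
    print(lambda_handler(sample, None))
```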

8. Partition the data strategically

As data grows, so do the costs and time required to scan it. Partitioning helps businesses limit what queries need to touch, which improves performance and reduces costs.

To partition effectively:

- Organize data by logical keys like year/month/day, customer ID, or region

- Ensure each partition folder follows a consistent naming convention

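- Query tools like Amazon Athena or Amazon Redshift Spectrum then scan only the partitions a query needs, provided the query filters on the partition columns (for example, WHERE year = '2024' AND month = '05')

- For example, querying one month of data instead of an entire year saves time and compute cost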

- Use AWS Glue Data Catalog partitions to optimize query performance, and address the small files problem by periodically compacting data files to speed up Amazon Athena and Redshift Spectrum queries.
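Hive-style folder names such as `year=2024/month=05/day=07/` are a widely used convention, because Athena and Glue can map them to partition columns. The Python sketch below builds such prefixes and lists which ones a date-filtered query would need to read, which shows the month-versus-year difference concretely. The `curated/orders` base path is hypothetical. In practice, the query engine does the pruning, not this code.

```python
from datetime import date, timedelta
from typing import List


def partition_prefix(base: str, day: date) -> str:
    """Hive-style prefix such as 'curated/orders/year=2024/month=05/day=07/'."""
    return (f"{base.rstrip('/')}/year={day.year:04d}"
            f"/month={day.month:02d}/day={day.day:02d}/")


def prefixes_to_scan(base: str, start: date, end: date) -> List[str]:
    """Daily partitions a query filtered to [start, end] (inclusive) would touch."""
    if end < start:
        raise ValueError("end must not be before start")
    return [partition_prefix(base, start + timedelta(days=n))
            for n in range((end - start).days + 1)]


if __name__ == "__main__":
    may = prefixes_to_scan("curated/orders", date(2024, 5, 1), date(2024, 5, 31))
    year = prefixes_to_scan("curated/orders", date(2024, 1, 1), date(2024, 12, 31))
    print(len(may), "partitions for May vs", len(year), "for all of 2024")
    print(may[0])
```

Zero-padded month and day values keep the folder names sorted and consistent, which supports the naming-convention point above.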

9. Encrypt data at rest and in transit

Whether businesses are storing customer records or financial reports, security is non-negotiable. Encryption serves as the first line of defense, both in storage and during transit.

Protect your Amazon data lake with:

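- S3 server-side encryption to secure data at rest (Amazon S3 applies SSE-S3 encryption to new objects by default; use SSE-KMS when you need control over the keys)

- HTTPS enforcement, for example through a bucket policy that denies requests where aws:SecureTransport is false, to prevent data from being exposed during transfer

- AWS Key Management Service (KMS) for managing, rotating, and auditing encryption keys

These controls help meet the encryption requirements of standards like HIPAA, SOC 2, and PCI DSS without adding heavy complexity.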
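Both controls can be expressed as configuration. The Python sketch below builds two documents. The first is a bucket policy that denies any request made without TLS, using the `aws:SecureTransport` condition. The second is a default-encryption configuration that uses SSE-KMS with an S3 Bucket Key, which reduces the number of requests S3 makes to KMS. The bucket name and key ARN are hypothetical placeholders. The boto3 calls that would apply the documents appear as comments.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy rejecting any request that does not use HTTPS/TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }],
    }


def kms_default_encryption(kms_key_arn: str) -> dict:
    """Default SSE-KMS encryption for new objects, with an S3 Bucket Key."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            "BucketKeyEnabled": True,
        }]
    }


if __name__ == "__main__":
    bucket = "example-data-lake"  # hypothetical
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE"  # placeholder
    # s3 = boto3.client("s3")
    # s3.put_bucket_policy(Bucket=bucket,
    #                      Policy=json.dumps(deny_insecure_transport_policy(bucket)))
    # s3.put_bucket_encryption(Bucket=bucket,
    #     ServerSideEncryptionConfiguration=kms_default_encryption(key_arn))
    print(json.dumps(deny_insecure_transport_policy(bucket), indent=2))
    print(json.dumps(kms_default_encryption(key_arn), indent=2))
```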

10. Monitor and audit the data lake

Businesses cannot fix what they cannot see. Monitoring and logging provide insights into data access, usage patterns, and potential issues before they impact teams or customers.

To keep their Amazon data lake accountable, businesses can use:

- AWS CloudTrail to log API calls and access attempts. Management events are recorded by default. Object-level S3 activity (data events such as GetObject and PutObject) must be enabled explicitly and is billed separately.

- Amazon CloudWatch to monitor usage patterns and performance and to trigger alerts.

- AWS Config to track AWS resource configurations over time, which is useful for audits.

- Dashboards and logs that help businesses prove compliance and optimize operations.

- Visibility that supports continuous improvement and risk management.
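CloudTrail delivers log files to Amazon S3 as gzipped JSON documents with a top-level `Records` list. The Python sketch below assumes S3 data events are enabled on the trail and turns those records into a simple who-did-what-when list. It reads only a few standard record fields: `eventTime`, `eventSource`, `eventName`, `userIdentity.arn`, and `requestParameters`. Treat it as a starting point for ad hoc review, not a replacement for querying CloudTrail logs with Amazon Athena or CloudTrail Lake.

```python
import gzip
import json
from typing import List, Tuple

Entry = Tuple[str, str, str, str]  # (when, who, action, object)


def load_records(path: str) -> List[dict]:
    """Read one CloudTrail log file (.json.gz) downloaded from S3."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        return json.load(f)["Records"]


def s3_access_trail(records: List[dict]) -> List[Entry]:
    """Chronological (eventTime, principal ARN, eventName, bucket/key) for S3 events."""
    trail = []
    for record in records:
        if record.get("eventSource") != "s3.amazonaws.com":
            continue  # skip non-S3 activity in the same log file
        params = record.get("requestParameters") or {}
        trail.append((
            record.get("eventTime", ""),
            record.get("userIdentity", {}).get("arn", "unknown"),
            record.get("eventName", ""),
            f"{params.get('bucketName', '?')}/{params.get('key', '')}",
        ))
    # CloudTrail uses one ISO 8601 UTC format, so string order is time order.
    return sorted(trail)
```

For example, `s3_access_trail(load_records("trail-log.json.gz"))` would list every S3 request in that file, oldest first. The file name here is hypothetical.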

Common mistakes SMBs make (and how to avoid them)

Building a smart, scalable Amazon data lake requires more than just uploading data. SMBs often make critical mistakes that impact performance, costs, and security. Here’s what to avoid:

1. Dumping all data with no structure

Throwing data into a lake without organization is one of the quickest ways to create chaos. Without structure, your data becomes hard to navigate and prone to errors. This leads to wasted time, incorrect insights, and potential security risks.

How to avoid it:

- Implement a tiered architecture (raw, staging, curated) to keep data clean and organized.

- Use metadata tagging for easy tracking, access, and management.

- Set up partitioning strategies so you can quickly query the relevant data.

2. Ignoring cost control features

Without proper oversight, a data lake’s costs can spiral out of control. Amazon S3 storage, data transfer, and analytics services can add up quickly if businesses don’t set boundaries.

How to avoid it:

- Use Amazon S3 Intelligent-Tiering for unpredictable access patterns, and the Amazon S3 Glacier storage classes for infrequently accessed or archival data.

- Set up lifecycle policies to automatically archive or delete old data.

- Regularly audit storage and analytics usage to ensure costs are kept under control.

3. Lacking role-based access

Without role-based access control (RBAC), a data lake can become a security risk. Granting blanket access to all users increases the likelihood of accidental data exposure or malicious activity.

How to avoid it:

- Use AWS IAM roles to define who can access what data.

- Implement AWS Lake Formation to manage permissions at the table, column, or row level.

- Regularly audit who has access to sensitive data and ensure permissions are up to date.

4. Overcomplicating the tech stack

It’s tempting to integrate every cool tool and service, but complexity doesn’t equal value; it often leads to confusion and poor performance. For SMBs, simplicity and efficiency are key.

How to avoid it:

- Start with basic services (like Amazon S3, AWS Glue, and Athena) before adding layers.

- Keep integrations minimal, and make sure each service adds clear value to your data pipeline.

- Prioritize usability and scalability, and avoid over-engineering.

- If the business already uses Amazon Redshift, or needs SQL-based querying over larger Amazon S3 datasets, Amazon Redshift Spectrum is worth considering.

These common mistakes are easy for businesses to fall into, but once they are understood, they are simple to avoid. By staying focused on simplicity, cost control, and security, businesses can ensure that their Amazon data lake serves their needs effectively.

Checklist for keeping an Amazon data lake healthy

Use this checklist to quickly evaluate the health of an Amazon data lake. Regularly checking these points ensures the data lake is efficient, secure, and cost-effective.

Zones created?

- Has data been organized into raw, staging, and curated zones?

- Are data types and access needs clearly defined for each zone?

Access policies in place?

- Are AWS IAM roles properly defined for users with specific access needs?

- Has AWS Lake Formation been set up for fine-grained permissions?

Data formats optimized?

- Are columnar formats like Parquet or ORC being used for performance and cost efficiency?

- Have large files been compressed to reduce storage costs?

Costs tracked?

- Are Amazon S3 Intelligent-Tiering and the Amazon S3 Glacier storage classes being used to minimize storage expenses?

- Is there a regular review of Amazon S3 storage usage and lifecycle policies?

Query performance healthy?

- Has partitioning been implemented for faster and cheaper queries?

- Are queries running efficiently with services like Amazon Athena or Amazon Redshift Spectrum?

By using this checklist regularly, businesses will be able to keep their Amazon data lake running smoothly and cost-effectively, while ensuring security and performance remain top priorities.

Conclusion

Implementing best practices for an Amazon data lake offers clear benefits. By structuring data into organized zones, automating processes, and using cost-efficient storage, businesses gain control over their data pipeline. Encryption and fine-grained access policies ensure security and compliance, while optimized queries and cost management turn the data lake into an asset that drives growth, rather than a burden.

Cloud modernization is within reach for SMBs, and it doesn't have to be a complex, resource-draining project. With the right guidance and tools, businesses can build a scalable and secure data lake that grows alongside their needs. Cloudtech specializes in helping SMBs modernize AWS environments through secure, scalable, and optimized data lake strategies, without requiring full platform migrations.

SMBs interested in improving their AWS data infrastructure can consult Cloudtech for tailored guidance on modernization, security, and cost optimization.

FAQs

1. How do businesses migrate existing on-premises data to their Amazon data lake?

Migrating data to an Amazon data lake can be done using tools like AWS DataSync for efficient transfer from on-premises to Amazon S3, or AWS Storage Gateway for hybrid cloud storage. For large-scale data, AWS Snowball offers a physical device for transferring large datasets when bandwidth is limited.

2. What are the best practices for data ingestion into an Amazon data lake?

For smooth data ingestion, businesses can use Amazon Data Firehose (formerly Amazon Kinesis Data Firehose) for real-time streaming, AWS Glue for ETL processing, and AWS Database Migration Service (DMS) to migrate existing databases into their data lake. These tools automate and streamline the process, keeping data up to date and ready for analysis.

3. How can businesses ensure data security and compliance in their data lake?

For robust security and compliance, businesses should use AWS IAM to define user permissions, AWS Lake Formation to enforce data access policies, and Amazon S3 server-side encryption with AWS KMS to protect data at rest. Enabling AWS CloudTrail also lets businesses monitor access and track changes, producing the audit trail that compliance reviews typically ask for.

4. What are the cost implications of building and maintaining a data lake?

Amazon S3 is cost-effective, but managing costs still takes deliberate choices. Businesses should use Amazon S3 Intelligent-Tiering for unpredictable access patterns and the Amazon S3 Glacier storage classes for infrequently accessed data. Automating transitions with lifecycle policies and watching data transfer costs, especially across Regions, helps keep expenses under control.

5. How do businesses integrate machine learning and analytics with their data lake?

Integrating Amazon Athena for SQL queries, Amazon SageMaker for machine learning, and Amazon QuickSight for visual analytics will help businesses unlock the full value of their data. These AWS services enable seamless querying, model training, and data visualization directly from their Amazon data lake.

Get started on your cloud modernization journey today!

Let Cloudtech build a modern AWS infrastructure that’s right for your business.