Resources

Find the latest news & updates on AWS

Cloudtech Has Earned AWS Advanced Tier Partner Status

We’re honored to announce that Cloudtech has officially secured AWS Advanced Tier Partner status within the Amazon Web Services (AWS) Partner Network! This significant achievement highlights our expertise in AWS cloud modernization and reinforces our commitment to delivering transformative solutions for our clients.

As an AWS Advanced Tier Partner, Cloudtech has been recognized for its exceptional capabilities in cloud data, application, and infrastructure modernization. This milestone underscores our dedication to excellence and our proven ability to leverage AWS technologies for outstanding results.

A Message from Our CEO

“Achieving AWS Advanced Tier Partner status is a pivotal moment for Cloudtech,” said Kamran Adil, CEO. “This recognition not only validates our expertise in delivering advanced cloud solutions but also reflects the hard work and dedication of our team in harnessing the power of AWS services.”

What This Means for Us

To reach Advanced Tier Partner status, Cloudtech demonstrated an in-depth understanding of AWS services and a solid track record of successful, high-quality implementations. This achievement comes with enhanced benefits, including advanced technical support, exclusive training resources, and closer collaboration with AWS sales and marketing teams.

Elevating Our Cloud Offerings

With our new status, Cloudtech is poised to enhance our cloud solutions even further. We provide a range of services, including:

- Data Modernization

- Application Modernization

- Infrastructure and Resiliency Solutions

By utilizing AWS’s cutting-edge tools and services, we equip startups and enterprises with scalable, secure solutions that accelerate digital transformation and optimize operational efficiency.

We're excited to share this news right after the launch of our new website and fresh branding! These updates reflect our commitment to innovation and excellence in the ever-changing cloud landscape. Our new look truly captures our mission: to empower businesses with personalized cloud modernization solutions that drive success. We can't wait for you to explore it all!

Stay tuned as we continue to innovate and drive impactful outcomes for our diverse client portfolio.

10 Best practices for building a scalable and secure AWS data lake for SMBs

Data is the backbone of all business decisions, especially when organizations operate with tight margins and limited resources. For SMBs, having data scattered across spreadsheets, apps, and cloud folders can hinder efficiency.

According to Gartner, poor data quality costs businesses an average of $12.9 million annually. SMBs cannot afford such inefficiency. This is where an Amazon Data Lake proves invaluable.

It offers a centralized and scalable storage solution, enabling businesses to store all their structured and unstructured data in one secure and searchable location. It also simplifies data analysis. In this guide, businesses will discover 10 practical best practices to help them build an AWS data lake that aligns with their specific goals.

What is an Amazon data lake, and why is it important for SMBs?

An Amazon Data Lake is a centralized storage system built on Amazon S3, designed to hold all types of data, whether it comes from CRM systems, accounting software, IoT devices, or customer support logs. Businesses do not need to convert or structure the data beforehand, which saves time and development resources. This makes data lakes particularly suitable for SMBs that gather data from multiple sources but lack large IT teams.

Traditional databases and data warehouses are more rigid. They require pre-defining data structures and often charge based on compute power, not just storage. A data lake, on the other hand, flips that model. It gives businesses more control, scales with growth, and facilitates advanced analytics, all without the high overhead typically associated with traditional systems.

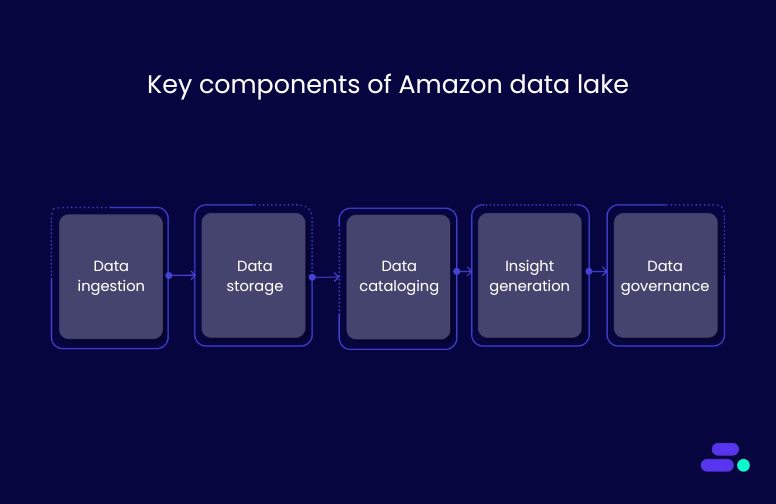

To understand how an Amazon data lake works, it helps to know the five key components that support data processing at scale:

- Data ingestion: Businesses can bring in data from both cloud-based and on-premises systems using tools designed to efficiently move data into Amazon S3.

- Data storage: All data is stored in Amazon S3, a highly durable and scalable object storage service.

- Data cataloging: Services like AWS Glue automatically index and organize data, making it easier for businesses to search, filter, and prepare data for analysis.

- Data analysis and visualization: Data lakes can be connected to tools like Amazon Athena or QuickSight, enabling businesses to query, visualize, and uncover insights directly without needing to move data elsewhere.

- Data governance: Built-in controls such as access permissions, encryption, and logging help businesses manage data quality and security. Amazon S3 access logs can track user actions, and permissions can be enforced using AWS IAM roles or AWS Lake Formation.

Why an Amazon data lake matters for business

- Centralized access: Businesses can store all their data from product inventory to customer feedback in one place, accessible by teams across departments.

- Flexibility for all data types: Businesses can keep JSON files, CSV exports, videos, PDFs, and more without needing to transform them first.

- Lower costs at scale: With Amazon S3, businesses only pay for the storage they use. They can use Amazon S3 Intelligent-Tiering to reduce costs as data becomes less frequently accessed.

- Access to advanced analytics: Businesses can run SQL queries with Amazon Athena, train machine learning models with Amazon SageMaker, or build dashboards with Amazon QuickSight directly on their Amazon data lake, without moving the data.

With the rise of generative AI (GenAI), businesses can unlock even greater value from their Amazon data lake.

Amazon Bedrock enables SMBs to build and scale AI applications without managing underlying infrastructure. By integrating Bedrock with your data lake, you can use pre-trained foundation models to generate insights, automate data summarization, and drive smarter decision-making, all while maintaining control over your data security and compliance.

10 best practices to build a smart, scalable data lake on AWS

A successful Amazon data lake is more than just a storage bucket. It’s a living, evolving system that supports growth, analysis, and security at every stage. These 10 best practices will help businesses avoid costly mistakes and build a data lake that delivers real, measurable results.

1. Design a tiered storage architecture

Start by separating data into three functional zones:

- Raw zone: This is the original data, untouched and unfiltered. Think IoT sensor feeds, app logs, or CRM exports.

- Staging zone: Store cleaned or transformed versions here. It’s used by data engineers for processing and QA.

- Curated zone: Only high-quality, production-ready datasets go here, which are used by business teams for reporting and analytics.

This setup ensures data flows cleanly through the pipeline, reduces errors, and keeps teams from working on outdated or duplicate files.
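One way to make the three zones concrete is to encode them as key prefixes inside a single bucket. The sketch below is a minimal illustration, not a prescribed layout: the prefix names `raw/`, `staging/`, and `curated/`, the dataset name, and both helper functions are assumptions introduced here.

```python
ZONES = ("raw", "staging", "curated")  # zone names taken from the list above


def zone_key(zone: str, dataset: str, filename: str) -> str:
    """Build an S3 object key that places a file under one of the three zones."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone!r}")
    return f"{zone}/{dataset}/{filename}"


def promote(key: str, target_zone: str) -> str:
    """Return the key a file would have after moving to another zone."""
    if target_zone not in ZONES:
        raise ValueError(f"unknown zone: {target_zone!r}")
    _, rest = key.split("/", 1)  # drop the current zone prefix
    return f"{target_zone}/{rest}"


if __name__ == "__main__":
    raw = zone_key("raw", "crm_exports", "2025-01-15.json")
    print(raw)
    print(promote(raw, "staging"))
```

Keeping the zone as the first path segment means access policies and lifecycle rules can target a whole zone with one prefix.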

2. Use open, compressed data formats

Amazon S3 supports many file types, but not all formats perform the same. For analytical workloads, use columnar formats like Parquet or ORC.

- They compress better than CSV or JSON, saving you storage costs.

- Tools like Amazon Athena, Amazon Redshift Spectrum, and AWS Glue process them much faster.

- You only scan the columns you need, which speeds up queries and reduces compute charges.

Example: Converting JSON logs to Parquet can cut query costs by more than 70% when running regular reports.
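The savings come from two effects that multiply: fewer columns read and better compression. The back-of-the-envelope sketch below shows the arithmetic only. Every number in it is hypothetical (dataset size, column counts, compression ratio, and the price per terabyte scanned), and it assumes equally sized columns; check current pricing and measure real files before relying on any estimate.

```python
def scanned_bytes(total_bytes: float, columns_read: int,
                  total_columns: int, compression_ratio: float) -> float:
    """Rough bytes scanned when only some columns of a columnar file are read.

    Assumes every column is the same size, which real data rarely is.
    """
    return total_bytes * (columns_read / total_columns) / compression_ratio


def query_cost(bytes_scanned: float, price_per_tb: float) -> float:
    """Cost of one query for a per-terabyte-scanned pricing model (decimal TB)."""
    return bytes_scanned / 1e12 * price_per_tb


if __name__ == "__main__":
    PRICE_PER_TB = 5.0  # placeholder price, not a quoted rate
    json_cost = query_cost(1e12, PRICE_PER_TB)  # row format: whole 1 TB is read
    parquet_cost = query_cost(scanned_bytes(1e12, 4, 20, 3.0), PRICE_PER_TB)
    print(f"JSON: {json_cost:.2f}  Parquet: {parquet_cost:.2f}")
```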

3. Apply fine-grained access controls

SMBs might have fewer users than large enterprises, but data access still needs control. Broad admin roles or shared credentials should be avoided.

- Roles and permissions should be defined with AWS IAM. Additionally, AWS Lake Formation provides advanced capabilities for data governance, allowing businesses to restrict access at the column or row level. For example, HR may have access to employee IDs but not salaries.

- When using AWS IAM roles within the context of AWS Lake Formation, it is crucial to tailor permissions carefully to restrict access, especially when column/row-level access controls are implemented.

- Enable audit trails so you can track who accessed what and when.

- Use AWS CloudTrail for continuous monitoring of access and changes, and Amazon Macie to automatically discover and classify sensitive data, helping maintain security and compliance.

This protects sensitive data, helps you stay compliant (HIPAA, GDPR, etc.), and reduces internal risk.
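As an illustration of least-privilege access at the storage layer, the sketch below builds an IAM policy document that lets a role list and read only one prefix of a bucket. The bucket name and prefix are hypothetical, and the helper function is introduced here for illustration. Column-level and row-level restrictions are configured in AWS Lake Formation and are not shown.

```python
import json


def read_only_policy(bucket: str, prefix: str) -> dict:
    """IAM policy document granting list and read access to a single prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Restrict listing to keys under the given prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadObjectsInPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-data-lake" and "curated" are placeholder names.
    print(json.dumps(read_only_policy("example-data-lake", "curated"), indent=2))
```

The policy names two specific actions rather than `s3:*`, which is the opposite of the broad admin roles the section warns against.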

4. Tag data for lifecycle and access management

Tags are more than just labels; they are powerful tools for automation, organization, and cost tracking. By assigning metadata tags, businesses can:

- Automatically manage the lifecycle of data, ensuring that old data is archived or deleted at the right time.

- Apply granular access controls, ensuring that only the right teams or individuals have access to sensitive information.

- Track usage and generate reports based on team, project, or department.

- Feed cost allocation reports, enabling granular tracking of storage and processing costs by project or department.

For SMBs with lean IT teams, tagging streamlines data management and reduces the need for constant manual intervention, helping to keep the data lake organized and cost-efficient.
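A small helper can keep tags consistent before they are applied. The sketch below builds the `TagSet` structure that S3 object tagging expects and enforces the limit of 10 tags per object. The tag keys and values are hypothetical, and the helper name is an assumption; with boto3, the result would be passed as the `Tagging` argument of `put_object_tagging`, which is not called here.

```python
MAX_OBJECT_TAGS = 10  # S3 allows at most 10 tags per object


def build_tagging(tags: dict[str, str]) -> dict:
    """Convert a plain dict into the TagSet structure used for S3 object tags."""
    if len(tags) > MAX_OBJECT_TAGS:
        raise ValueError(f"S3 objects accept at most {MAX_OBJECT_TAGS} tags")
    # Sort keys so the same input always produces the same tag order.
    return {"TagSet": [{"Key": k, "Value": v} for k, v in sorted(tags.items())]}


if __name__ == "__main__":
    # Hypothetical tags for team ownership and retention.
    print(build_tagging({"team": "finance", "retention": "7y"}))
```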

5. Use Amazon S3 storage classes to control costs

Storage adds up, especially when you're keeping logs, backups, and historical data. Here's how to keep costs in check:

- Use Amazon S3 Standard for active data.

- Switch to Amazon S3 Intelligent-Tiering for unpredictable access.

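- Use the Amazon S3 Glacier storage classes for archival data that is rarely accessed; Amazon S3 Glacier Deep Archive is specifically designed for very long-term archival at a lower price point. For infrequently accessed data that still needs immediate retrieval, Amazon S3 Standard-IA is the closer fit.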

- Consider using Amazon S3 One Zone-IA (One Zone-Infrequent Access) for data that doesn't require multi-AZ resilience but needs to be accessed infrequently. This storage class offers potential cost savings.

- Set up Amazon S3 Lifecycle policies to automate transitioning data between Standard, Intelligent-Tiering, Glacier, and Deep Archive tiers, balancing cost and access needs efficiently.

Lifecycle rules move files automatically based on object age, while Amazon S3 Intelligent-Tiering responds to changes in access frequency. Used together, they help businesses avoid unnecessary costs and ensure old data is properly managed without manual intervention.
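A lifecycle rule is a small JSON-style document. The sketch below builds one rule that steps objects under a prefix through Intelligent-Tiering, Glacier, and Deep Archive. The prefix, day counts, and helper function are hypothetical choices for illustration; with boto3, the result would go inside `{"Rules": [...]}` as the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`, which is not called here.

```python
from typing import Optional


def lifecycle_rule(prefix: str, tiering_days: int, glacier_days: int,
                   deep_archive_days: int,
                   expire_days: Optional[int] = None) -> dict:
    """Build one S3 Lifecycle rule that tiers objects down as they age."""
    days = [tiering_days, glacier_days, deep_archive_days]
    if days != sorted(set(days)):
        raise ValueError("transition days must be strictly increasing")
    rule = {
        "ID": f"tier-{prefix.strip('/')}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": tiering_days, "StorageClass": "INTELLIGENT_TIERING"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
            {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
        ],
    }
    if expire_days is not None:
        rule["Expiration"] = {"Days": expire_days}  # delete after this age
    return rule


if __name__ == "__main__":
    # Hypothetical schedule: 30, 90, and 365 days, delete after about 7 years.
    config = {"Rules": [lifecycle_rule("raw/", 30, 90, 365, expire_days=2555)]}
    print(config)
```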

6. Catalog everything with AWS Glue

A data lake without a catalog is like a warehouse without a map. Businesses may store vast amounts of data, but without proper organization, finding specific information becomes a challenge. For SMBs, quick access to trusted data is essential.

Businesses should use the AWS Glue Data Catalog to:

- Register and track all datasets stored in Amazon S3.

- Maintain schema history for evolving data structures.

- Enable SQL-based querying with Amazon Athena or Amazon Redshift Spectrum.

- Simplify governance by organizing data into searchable tables and databases.
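Registering a dataset can be as simple as running a `CREATE EXTERNAL TABLE` statement in Amazon Athena, which stores the table definition in the AWS Glue Data Catalog. The sketch below only generates the statement text. The database, table, column names, and S3 location are hypothetical, and the helper is an illustration rather than part of any AWS SDK.

```python
def create_table_ddl(database: str, table: str,
                     columns: list[tuple[str, str]],
                     partitions: list[tuple[str, str]],
                     location: str) -> str:
    """Generate DDL for a partitioned, Parquet-backed external table."""
    cols = ",\n  ".join(f"`{name}` {type_}" for name, type_ in columns)
    parts = ", ".join(f"`{name}` {type_}" for name, type_ in partitions)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (\n  {cols}\n)\n"
        f"PARTITIONED BY ({parts})\n"
        "STORED AS PARQUET\n"
        f"LOCATION '{location}'"
    )


if __name__ == "__main__":
    print(create_table_ddl(
        "sales_lake", "orders",                      # placeholder names
        [("order_id", "string"), ("amount", "double")],
        [("year", "string"), ("month", "string")],
        "s3://example-data-lake/curated/orders/",    # placeholder bucket
    ))
```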

7. Automate ingestion and processing

Manual uploads and data preparation do not scale. If businesses spend time moving files, they aren't focusing on analyzing them. Automating this step keeps the data lake up to date and the team focused on deriving insights.

Here’s how businesses can streamline data ingestion and processing:

- Trigger workflows using Amazon S3 event notifications when new files arrive.

- Use AWS Lambda to validate, clean, or transform data in real time.

- For larger workloads, consider AWS Glue for batch processing or Amazon Kinesis for real-time streaming, since AWS Lambda caps each invocation at 15 minutes and is not suited to long-running, large-scale jobs.

- Schedule recurring ETL jobs with AWS Glue for batch data processing.

- Reduce operational overhead and ensure data freshness without daily oversight.

- Utilize infrastructure as code tools like AWS CloudFormation or Terraform to automate data lake infrastructure provisioning, ensuring repeatability and easy updates.
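The first two steps above can be sketched as an AWS Lambda handler that reacts to an S3 event notification. The code reads the bucket name and object key from each record (keys arrive URL-encoded, so they are decoded first) and applies a placeholder validation rule. The rule that only `raw/` keys are accepted is an assumption for illustration; real validation or transformation logic would replace it.

```python
from urllib.parse import unquote_plus


def new_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys in S3 notifications are URL-encoded.
        objects.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return objects


def handler(event: dict, context: object) -> dict:
    """Lambda entry point: count files that land in the raw zone."""
    objects = new_objects(event)
    accepted = [(b, k) for b, k in objects if k.startswith("raw/")]  # placeholder rule
    return {"accepted": len(accepted), "skipped": len(objects) - len(accepted)}
```

The handler can be exercised locally by passing it a dictionary shaped like a notification, which makes the validation rule easy to unit test before deployment.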

8. Partition the data strategically

As data grows, so do the costs and time required to scan it. Partitioning helps businesses limit what queries need to touch, which improves performance and reduces costs.

To partition effectively:

- Organize data by logical keys like year/month/day, customer ID, or region

- Ensure each partition folder follows a consistent naming convention

- Filter on partition keys so that query tools like Amazon Athena or Amazon Redshift Spectrum scan only what's needed; for example, querying one month of data instead of an entire year saves time and computing cost

- Use AWS Glue Data Catalog partitions to optimize query performance, and address the small files problem by periodically compacting data files to speed up Amazon Athena and Redshift Spectrum queries.
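A consistent naming convention is easiest to keep when one function generates every partition path. The sketch below uses the Hive-style `key=value` folder format, which Athena and AWS Glue can map to partition columns. The dataset path and the optional `region` key are hypothetical examples.

```python
from datetime import date
from typing import Optional


def partition_prefix(dataset: str, day: date, region: Optional[str] = None) -> str:
    """Build a Hive-style partition prefix such as .../year=2025/month=01/day=05/."""
    parts = [dataset]
    if region:
        parts.append(f"region={region}")
    # Zero-padded month and day keep prefixes sortable as plain strings.
    parts += [f"year={day:%Y}", f"month={day:%m}", f"day={day:%d}"]
    return "/".join(parts) + "/"


if __name__ == "__main__":
    print(partition_prefix("curated/orders", date(2025, 1, 5)))
    print(partition_prefix("curated/orders", date(2025, 1, 5), region="eu"))
```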

9. Encrypt data at rest and in transit

Whether businesses are storing customer records or financial reports, security is non-negotiable. Encryption serves as the first line of defense, both in storage and during transit.

Protect your Amazon data lake with:

- S3 server-side encryption to secure data at rest

- HTTPS enforcement to prevent data from being exposed during transfer

- AWS Key Management Service (KMS) for managing, rotating, and auditing encryption keys

- Together, these controls support compliance with standards like HIPAA, SOC 2, and PCI DSS without adding heavy complexity
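The first three items can be expressed as two small configuration documents: a bucket policy that denies any request not sent over HTTPS, and a default-encryption setting that uses a KMS key. The bucket name and key ARN below are placeholders, and the helper functions are illustrations. With boto3, these would be passed to `put_bucket_policy` (as a JSON string) and `put_bucket_encryption`, neither of which is called here.

```python
import json


def https_only_policy(bucket: str) -> dict:
    """Bucket policy that denies every S3 action made without TLS."""
    arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [arn, f"{arn}/*"],  # the bucket and every object in it
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }],
    }


def default_encryption(kms_key_arn: str) -> dict:
    """Server-side encryption configuration using a customer-managed KMS key."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            "BucketKeyEnabled": True,  # reduces the number of KMS requests
        }]
    }


if __name__ == "__main__":
    print(json.dumps(https_only_policy("example-data-lake"), indent=2))
```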

10. Monitor and audit the data lake

Businesses cannot fix what they cannot see. Monitoring and logging provide insights into data access, usage patterns, and potential issues before they impact teams or customers.

To keep their Amazon data lake accountable, businesses can use:

- AWS CloudTrail to log API calls and access attempts, with S3 data events enabled to capture object-level activity.

- Amazon CloudWatch to monitor usage patterns and performance issues and to trigger alerts.

- AWS Config to track AWS resource configurations for auditing purposes.

- Dashboards and logs to prove compliance and optimize operations.

- The resulting visibility to support continuous improvement and risk management.

Common mistakes SMBs make (and how to avoid them)

Building a smart, scalable Amazon data lake requires more than just uploading data. SMBs often make critical mistakes that impact performance, costs, and security. Here’s what to avoid:

1. Dumping all data with no structure

Throwing data into a lake without organization is one of the quickest ways to create chaos. Without structure, your data becomes hard to navigate and prone to errors. This leads to wasted time, incorrect insights, and potential security risks.

How to avoid it:

- Implement a tiered architecture (raw, staging, curated) to keep data clean and organized.

- Use metadata tagging for easy tracking, access, and management.

- Set up partitioning strategies so you can quickly query the relevant data.

2. Ignoring cost control features

Without proper oversight, a data lake’s costs can spiral out of control. Amazon S3 storage, data transfer, and analytics services can add up quickly if businesses don’t set boundaries.

How to avoid it:

- Use Amazon S3 Intelligent-Tiering for unpredictable access patterns, and Amazon S3 Glacier for rarely accessed archival data.

- Set up lifecycle policies to automatically archive or delete old data.

- Regularly audit storage and analytics usage to ensure costs are kept under control.

3. Lacking role-based access

Without role-based access control (RBAC), a data lake can become a security risk. Granting blanket access to all users increases the likelihood of accidental data exposure or malicious activity.

How to avoid it:

- Use AWS IAM roles to define who can access what data.

- Implement AWS Lake Formation to manage permissions at the table, column, or row level.

- Regularly audit who has access to sensitive data and ensure permissions are up to date.

4. Overcomplicating the tech stack

It’s tempting to integrate every cool tool and service, but complexity doesn’t equal value; it often leads to confusion and poor performance. For SMBs, simplicity and efficiency are key.

How to avoid it:

- Start with basic services (like Amazon S3, AWS Glue, and Athena) before adding layers.

- Keep integrations minimal, and make sure each service adds clear value to your data pipeline.

- Prioritize usability and scalability over over-engineering.

- Consider Amazon Redshift Spectrum later if SQL-based querying over larger Amazon S3 datasets becomes a need; it is an optional addition, not a requirement.

These common mistakes are easy for businesses to fall into, but once they are understood, they are simple to avoid. By staying focused on simplicity, cost control, and security, businesses can ensure that their Amazon data lake serves their needs effectively.

Checklist for businesses ensuring the health of an Amazon data lake

Use this checklist to quickly evaluate the health of an Amazon data lake. Regularly checking these points ensures the data lake is efficient, secure, and cost-effective.

Zones created?

- Has data been organized into raw, staging, and curated zones?

- Are data types and access needs clearly defined for each zone?

Access policies in place?

- Are AWS IAM roles properly defined for users with specific access needs?

- Has AWS Lake Formation been set up for fine-grained permissions?

Data formats optimized?

- Is a columnar format like Parquet or ORC being used for performance and cost efficiency?

- Have large files been compressed to reduce storage costs?

Costs tracked?

- Are Amazon S3 Intelligent-Tiering and Amazon S3 Glacier being used to minimize storage expenses?

- Is there a regular review of Amazon S3 storage usage and lifecycle policies?

Query performance healthy?

- Has partitioning been implemented for faster and cheaper queries?

- Are queries running efficiently with services like Amazon Athena or Amazon Redshift Spectrum?

By using this checklist regularly, businesses will be able to keep their Amazon data lake running smoothly and cost-effectively, while ensuring security and performance remain top priorities.

Conclusion

Implementing best practices for an Amazon data lake offers clear benefits. By structuring data into organized zones, automating processes, and using cost-efficient storage, businesses gain control over their data pipeline. Encryption and fine-grained access policies ensure security and compliance, while optimized queries and cost management turn the data lake into an asset that drives growth, rather than a burden.

Cloud modernization is within reach for SMBs, and it doesn’t have to be a complex, resource-draining project. With the right guidance and tools, businesses can build a scalable and secure data lake that grows alongside their needs. Cloudtech specializes in helping SMBs modernize AWS environments through secure, scalable, and optimized data lake strategies—without requiring full platform migrations.

SMBs interested in improving their AWS data infrastructure can consult Cloudtech for tailored guidance on modernization, security, and cost optimization.

FAQs

1. How do businesses migrate existing on-premises data to their Amazon data lake?

Migrating data to an Amazon data lake can be done using tools like AWS DataSync for efficient transfer from on-premises to Amazon S3, or AWS Storage Gateway for hybrid cloud storage. For large-scale data, AWS Snowball offers a physical device for transferring large datasets when bandwidth is limited.

2. What are the best practices for data ingestion into an Amazon data lake?

To ensure seamless data ingestion, businesses can use Amazon Kinesis Data Firehose for real-time streaming, AWS Glue for ETL processing, and AWS Database Migration Service (DMS) to migrate existing databases into their data lake. These tools automate and streamline the process, ensuring that data remains up-to-date and ready for analysis.

3. How can businesses ensure data security and compliance in their data lake?

For robust security and compliance, businesses should use AWS IAM to define user permissions, AWS Lake Formation to enforce data access policies, and ensure data is encrypted with Amazon S3 server-side encryption and AWS KMS. Additionally, enabling AWS CloudTrail will allow businesses to monitor access and track changes for audit purposes, ensuring full compliance.

4. What are the cost implications of building and maintaining a data lake?

While Amazon S3 is cost-effective, managing costs requires businesses to utilize Amazon S3 Intelligent-Tiering for unpredictable access patterns and Amazon S3 Glacier for rarely accessed archival data. Automating data transitions with lifecycle policies and managing data transfer costs, especially across regions, will help keep expenses under control.

5. How do businesses integrate machine learning and analytics with their data lake?

Integrating Amazon Athena for SQL queries, Amazon SageMaker for machine learning, and Amazon QuickSight for visual analytics will help businesses unlock the full value of their data. These AWS services enable seamless querying, model training, and data visualization directly from their Amazon data lake.

Top 5 cloud services for small businesses: A complete guide

Cloud services for small businesses are no longer optional; they are necessary for staying competitive. A recent survey found that 94% of businesses say cloud computing has improved their ability to scale and boost productivity.

For small and medium-sized businesses (SMBs), cloud solutions provide the flexibility to grow without the heavy costs and complexity of traditional IT systems.

By adopting cloud services, SMBs can improve security, enhance collaboration, and scale operations more easily. This guide will explore the different types of cloud services, the key benefits for SMBs, and practical tips for implementation.

What are cloud services?

Cloud services refer to computing resources and software hosted online and accessed over the internet, rather than stored on physical hardware within a business. These services include storage, processing power, and applications that allow businesses to scale quickly and efficiently without investing in expensive infrastructure.

Businesses can manage everything from data storage to software solutions through cloud services instead of maintaining in-house IT systems.

Types of cloud services for small businesses

There are three main types of cloud services: public, private, and hybrid clouds. Each has its own advantages depending on business needs, ranging from cost-effectiveness to security.

1. Public cloud: Sharing platform and cost benefits

The public cloud is a shared platform where third-party providers like AWS, Microsoft Azure, or Google Cloud provide resources over the internet. It is cost-effective because businesses only pay for what they use and don’t need to invest in expensive hardware.

This model is ideal for businesses looking for scalable resources without the overhead. For example, a company might use the public cloud to host websites or store customer data.

2. Private cloud: Control and security compliance

A private cloud provides a dedicated environment for a business, offering greater control over performance and configuration. This solution allows businesses to customize their cloud infrastructure to meet specific needs, whether it's handling sensitive data or optimizing performance for particular tasks.

For example, businesses in industries with unique infrastructure requirements may opt for a private cloud to maintain a more tailored setup for their operations. While it provides greater control, the private cloud is one of many cloud options businesses can consider based on their requirements for flexibility, security, and scalability.

3. Hybrid cloud: Combination of public and private resources

A hybrid cloud combines both public and private cloud services, giving businesses the best of both worlds. They can store sensitive data on a private cloud while using public cloud resources for less critical tasks. This setup provides flexibility, security, and scalability.

For example, an SMB might use a hybrid model to store healthcare or patient data on a private cloud while running customer-facing applications on the public cloud.

Each cloud type offers unique benefits, allowing businesses to select the one that best suits their needs for scalability, security, and cost management.

How do cloud services work?

Cloud services operate through multiple technologies and principles that make them flexible, scalable, and efficient for businesses. Here’s a simplified breakdown:

- Remote servers: Cloud services run on powerful remote servers located in data centers. These servers handle all the heavy lifting: processing, storing, and managing data.

- Internet access: Users connect to these servers via the internet, accessing business applications and files without requiring physical hardware.

- Resource allocation: Cloud providers dynamically allocate resources such as storage and computing power based on user demand.

- Data security and management: Cloud providers manage security, backups, and updates to protect data and maintain system stability, while customers share responsibility for secure usage.

Cloud services come in different models, such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), giving SMBs flexibility in how much they manage.

For example, IaaS like Amazon EC2 provides raw computing resources, PaaS such as AWS Elastic Beanstalk offers managed development environments, and SaaS delivers ready-to-use applications, helping SMBs reduce IT overhead.

AWS operates multiple data centers grouped into regions and availability zones, which ensures fault tolerance and low latency. This geographic distribution helps SMBs maintain business continuity and quickly recover from outages.

Additionally, features like auto-scaling automatically adjust compute capacity in response to real-time demand, effectively balancing cost and performance.

AWS's elasticity also allows businesses to scale resources up or down based on demand, while advanced management and security tools give them comprehensive control over infrastructure. This suits businesses that need more flexibility and specialized services to run complex operations smoothly.

It’s also important for SMBs to understand the shared responsibility model. While AWS secures the physical infrastructure and network, businesses are responsible for managing their data, access controls, and application security. To keep cloud spending under control, AWS offers tools like AWS Cost Explorer, AWS Budgets, and Amazon EC2 Reserved Instances, empowering SMBs to optimize costs as they scale.

Cloudtech, an AWS partner, helps SMBs modernize their IT environments. By helping organizations optimize cloud infrastructure and adopt scalable, secure AWS services, Cloudtech ensures ongoing performance, governance, and efficiency aligned with business growth.

Top 5 cloud storage solutions for small businesses

Choosing the right cloud storage solution is key to keeping data secure, accessible, and organized. Whether a business is growing its team or handling sensitive information, the right platform can simplify operations and help the business scale smoothly. Here are some top-rated options to consider in 2025:

1. Amazon Web Services (AWS)

AWS is a solid choice for flexibility and reliability. With services like Amazon S3 and Amazon Elastic Block Store (EBS), businesses can store everything safely and at scale. Additionally, AWS offers a range of other services, including Amazon EC2, Amazon RDS, AWS Lambda, Amazon CloudFront, Amazon VPC, and AWS IAM.

Cloudtech helps businesses use these AWS solutions to scale operations securely and efficiently.

Key features:

- Pay only for the storage used

- Strong encryption and compliance certifications

- Seamless integration with other AWS tools

- Global infrastructure for fast access and backups

AWS works well for businesses planning to scale and requiring full control over how they manage their data.

2. Microsoft Azure

Azure is Microsoft’s cloud platform, providing businesses with integrated solutions for data storage, analytics, and machine learning. It offers hybrid cloud capabilities and seamless integration with Microsoft’s enterprise tools like Office 365 and Windows Server. Azure is known for its advanced security and compliance features, making it a reliable choice for enterprise-level businesses.

Key features:

- Hybrid cloud capabilities to integrate on-premises systems with cloud

- Advanced security features, including multi-factor authentication and encryption

- Scalable storage with low-latency global data centers

- Extensive analytics and machine learning tools for data-driven insights

Azure suits businesses looking for an enterprise-level solution that integrates seamlessly with Microsoft tools and provides a high degree of flexibility for various workloads.

3. Google Workspace (Google Drive)

Google Workspace offers secure cloud storage with seamless collaboration, perfect for SMBs focused on teamwork and productivity. Its integration with Google Docs, Sheets, and Slides facilitates real-time editing and sharing.

Key features:

- Real-time collaboration and file sharing

- Advanced search powered by AI

- Robust security controls and compliance certifications

- Easy integration with numerous third-party apps

4. Dropbox Business

Dropbox Business is a trusted cloud storage service offering flexible sharing and collaboration tools suited for SMBs. It features smart sync to save local disk space and advanced admin controls for security.

Key features:

- Granular permissions and version history

- Seamless integration with Microsoft 365 and Slack

- Strong encryption and compliance support

- Smart sync for efficient file access

5. IBM Cloud Object Storage

IBM Cloud Object Storage is a scalable and secure cloud storage solution designed to meet the growing needs of SMBs. It offers robust data protection and compliance features, making it suitable for businesses requiring reliable and cost-effective storage.

Key features:

- Flexible scalability with multi-cloud support

- Enterprise-grade encryption and compliance certifications (HIPAA, GDPR, SOC 2)

- Global data center presence for fast, reliable access

- Integration with various backup, analytics, and AI tools for enhanced data management

IBM Cloud Object Storage suits businesses that deal with sensitive data and require a secure, compliant, and user-friendly cloud storage solution.

These cloud services offer real value depending on specific business needs: security, collaboration, media storage, or backup. Businesses should take a moment to think about what matters most and choose the solution that best supports their way of working.

Steps to successfully implement cloud services

Moving to the cloud doesn’t have to be complicated. With the right steps, businesses can shift their systems smoothly, avoid disruptions, and get their teams fully onboard. Here’s how to get started:

Step 1: Identify the needs

Start by reviewing current tools and workflows. Pinpoint areas where time, money, or efficiency are being lost; these are the areas where cloud services can have the most significant impact.

Step 2: Select a cloud provider

Choose a provider that aligns with business goals and technical requirements. Amazon Web Services (AWS) offers scalable, secure, and cost-friendly solutions for small businesses, making it a solid choice for businesses looking to scale efficiently.

Step 3: Set up and migrate

Once a provider is chosen, set up the cloud environment and plan the migration. Files, apps, and systems should be moved gradually to avoid downtime. Testing each part ensures everything works smoothly before the full migration is complete.

Step 4: Train the team

Cloud tools are only as effective as the team's ability to use them. Training should be provided to ensure that everyone knows how to access files, use new apps, and follow security protocols.

Step 5: Keep it smooth and simple

Use checklists, assign responsibilities, and communicate clearly throughout the process. Starting with one department or system first can help ease the transition and build confidence before scaling up.

Considerations for choosing a cloud service

When choosing a cloud service for a business, it’s easy to get caught up in features and pricing. However, what truly matters is how well the service supports business goals, both now and as the business grows. A strong cloud platform should align with five core principles that ensure stability, security, and long-term value. These pillars should guide the decision-making process to find the right fit for a business.

- Operational excellence: A platform should help run day-to-day operations smoothly and adapt quickly when changes occur. Businesses should look for tools that support automation, monitoring, and quick recovery from errors to improve how operations are managed.

- Security: Protecting data is non-negotiable. The right provider will offer strong encryption, access controls, and compliance with standards like HIPAA or GDPR. If a business handles sensitive data, built-in security features should be a top priority.

- Reliability: The cloud service should remain operational even during demand spikes or unexpected issues. Providers with a track record of uptime, automatic backups, and clear guarantees around service availability should be prioritized.

- Performance efficiency: As businesses grow, their technology should keep pace. A cloud platform that offers scalable resources is essential, whether the business is expanding its team, launching new products, or managing increased traffic.

- Cost optimization: A good cloud solution helps businesses control spending. Clear pricing, usage tracking, and the ability to scale up or down without locking into long-term costs should be key considerations. Businesses should only pay for what they use when they use it.

To illustrate the operational excellence pillar, services like AWS CloudFormation enable SMBs to automate the provisioning and management of cloud resources through code, ensuring consistent and repeatable infrastructure deployments. Additionally, AWS Config helps monitor and evaluate resource configurations continuously, alerting teams to deviations from best practices or compliance requirements, which supports proactive governance and operational resilience.
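As a rough illustration of provisioning through code, the sketch below builds a minimal CloudFormation template as a Python dictionary and serializes it to JSON. The logical name `BackupBucket` and the choice of a versioned S3 bucket are assumptions made for this example only.

```python
import json

# Minimal CloudFormation template expressed as a Python dict.
# "BackupBucket" is a hypothetical logical resource name.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative template: one versioned S3 bucket",
    "Resources": {
        "BackupBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"}
            },
        }
    },
}

# Serialize so the template can be saved to a file and deployed.
template_json = json.dumps(template, indent=2)
print(template_json)
```

Because the template is plain data, it can be kept in version control and reviewed like any other code change, which is the repeatability benefit described above.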

Benefits & challenges of cloud services

Here’s a quick overview of the benefits and potential challenges SMBs face when using cloud services:

Conclusion

Cloud services for small businesses offer tools to work smarter, scale faster, and stay secure without the high costs of traditional IT. From storage and collaboration to security and performance, the right solution can streamline operations and support long-term growth.

SMBs that haven't made the shift yet can evaluate their current technology stack to identify areas where AWS services may support more efficient operations and future scalability. Whether the need is better data protection, easier access for remote teams, or room to grow, cloud services can provide a competitive edge.

For businesses looking to modernize their AWS environment, Cloudtech provides expert support in optimizing infrastructure, aligning with AWS best practices, and improving long-term cloud performance.

FAQs

1. Which cloud is better for small businesses?

The best cloud for small businesses depends on their specific needs. Amazon Web Services (AWS) offers scalability and reliability, making it ideal for growing businesses. Microsoft Azure integrates well with existing Microsoft products, while Google Cloud is great for collaboration. If security and simplicity are a priority, Box and Carbonite are also excellent choices for secure file storage and backup solutions.

2. How much do cloud services cost for a small business?

Cloud costs vary by service provider and resources provisioned. On average, small businesses can expect to pay anywhere from $20 to $500 per month, depending on compute, storage, and other services needed. For basic storage and compute services, AWS offers affordable pricing options. It's crucial to choose a plan that fits the usage to avoid unnecessary expenses.

3. Is the cloud good for small businesses?

Yes, cloud services are excellent for small businesses. They offer cost-effective solutions, allowing businesses to scale up or down based on needs, without the heavy costs of traditional IT infrastructure. Cloud services also improve security, enhance collaboration, and provide remote access, making them ideal for modern business needs.

AWS cost optimization strategies and best practices

Managing AWS costs effectively is essential for small and medium-sized businesses (SMBs) seeking to scale their operations without overburdening their budgets. While AWS offers powerful cloud services, the complexity of its pricing models can quickly lead to unexpected expenses.

From storage to compute power, every service has its own pricing structure that can make cost management tricky. By adopting smart strategies, businesses can avoid unnecessary spending and make the most out of their AWS environment.

In this article, we'll explore how businesses can optimize their AWS costs, helping them maintain efficiency while ensuring financial sustainability.

Why is AWS cost optimization important for your business?

Cloud expenses can add up quickly, and managing every expense is crucial for SMBs. AWS charges based on usage, which sounds great in theory, but in practice, it is easy to over-provision resources or forget about services that continue running in the background. These unnoticed costs can quietly pile up over time.

Optimizing AWS costs isn’t just about spending less. It’s about spending smart. When businesses manage their cloud usage efficiently, they can redirect that saved budget toward innovation, hiring, or customer experience. Plus, a well-optimized setup often leads to better system performance, improved security, and more predictable bills each month.

AWS also offers a ton of services, and not all of them are priced the same way. Without a clear plan or understanding of what’s actually being used, teams often end up paying for features they don’t need or using expensive options when cheaper ones could do the job just fine.

In short, cost optimization helps businesses stay lean, focused, and in control, without sacrificing performance or flexibility.

What are the core principles of AWS cost optimization?

When it comes to optimizing AWS costs, it’s essential for businesses to understand the core principles that guide effective cost management. These principles help ensure that businesses don’t just reduce their spending, but also do so in a way that maintains the integrity and efficiency of their cloud operations.

- Right-sizing resources: One of the first steps to avoid overspending is choosing the correct instance types and sizes based on actual workload requirements. Right-sizing involves analyzing current resource usage and adjusting configurations to ensure businesses are not paying for unused or underutilized capacity.

- Reserved Instances and savings plans: Reserved Instances (RIs) and AWS Savings Plans can provide significant savings for businesses with predictable usage patterns. These plans offer discounts in exchange for committing to a certain level of usage over time. This principle helps businesses avoid the unpredictable costs associated with on-demand pricing.

- Auto scaling: Automatically scaling the resources up or down based on real-time demand can prevent overprovisioning and reduce idle costs. Auto scaling ensures that only the necessary resources are in use at any given time, helping optimize costs dynamically.

- Monitor and analyze usage continuously: AWS provides tools like AWS Cost Explorer and AWS Budgets to track spending and usage patterns. Regular monitoring enables businesses to identify cost spikes and areas where inefficiencies exist quickly. Establishing a habit of continuously analyzing cloud usage will help detect optimization opportunities over time.

- Utilize cost-effective services: AWS offers several cost-effective services designed to minimize costs without sacrificing performance. For example, using Amazon S3 for storage, instead of more expensive storage options, or utilizing AWS Lambda for event-driven applications, can significantly reduce operational expenses.

By sticking to these principles, businesses can build a robust framework for managing AWS costs in an ongoing, sustainable way. With consistent application of these practices, organizations can keep their cloud environments lean, agile, and cost-efficient, all while maintaining the flexibility and scalability that AWS offers.
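To make the right-sizing principle concrete, here is a minimal sketch that flags instances whose average and peak CPU both stay low. The instance names, the sample figures, and the 10% / 40% thresholds are all assumptions chosen for illustration; real right-sizing should also weigh memory, network, and disk usage.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    instance_id: str        # hypothetical identifier
    avg_cpu_percent: float  # average CPU over the review period
    max_cpu_percent: float  # peak CPU over the review period


def rightsizing_candidates(usages, avg_threshold=10.0, max_threshold=40.0):
    """Return IDs of instances whose average AND peak CPU sit below the thresholds."""
    return [
        u.instance_id
        for u in usages
        if u.avg_cpu_percent < avg_threshold and u.max_cpu_percent < max_threshold
    ]


fleet = [
    InstanceUsage("web-1", 6.0, 22.0),     # quiet all the time
    InstanceUsage("web-2", 48.0, 91.0),    # busy
    InstanceUsage("batch-1", 8.0, 75.0),   # low average, but bursts
]

print(rightsizing_candidates(fleet))
```

Checking the peak as well as the average avoids downsizing a machine such as `batch-1` that is idle most of the time but needs its full capacity during bursts.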

How to utilize AWS pricing models to optimize cost

AWS provides various pricing models that help businesses manage costs and ensure they only pay for the resources they truly need. Understanding these pricing models and choosing the right one based on usage patterns is key to reducing cloud spending. By selecting the right pricing approach, businesses can realize substantial savings without compromising on performance or flexibility.

1. On-demand pricing

On-demand pricing is the most straightforward model where businesses pay for computing capacity by the hour or second, with no long-term commitments. This model provides flexibility, as businesses only pay for what they use. However, while convenient, on-demand pricing can be expensive for consistent, long-term usage. It is ideal for applications with unpredictable workloads or short-term projects, but it is not the most cost-effective option for businesses with steady, predictable needs.

- Pay-as-you-go pricing with no long-term commitments

- Flexibility to scale up or down based on demand

- Best for short-term or unpredictable workloads

- Higher costs compared to other pricing models for long-term usage

2. Reserved Instances (RIs)

Reserved Instances (RIs) allow businesses to commit to a particular instance type for one or three years, in return for substantial discounts of up to 72% compared to on-demand prices. This model is best for businesses with predictable workloads that require continuous use of Amazon EC2 instances. AWS offers two RI offering classes: Standard RIs (the largest discount, best for steady-state usage) and Convertible RIs (a smaller discount in exchange for the flexibility to change instance types). Scheduled RIs, which reserved capacity for recurring time windows, are no longer available for new purchases.

- Ideal for steady-state or continuous usage of Amazon EC2 instances

- Flexible options with Standard and Convertible RIs

- Requires commitment to specific instance types or usage patterns
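Whether a reservation pays off depends on how many hours the instance actually runs. The sketch below compares the two models under simplifying assumptions: a single hypothetical hourly rate, a flat discount, no upfront payment, and a 730-hour month. None of the numbers are real AWS prices.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def monthly_cost_on_demand(hourly_rate, utilization):
    """On-demand is billed only for the fraction of hours the instance runs."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def monthly_cost_reserved(hourly_rate, discount):
    """A reservation is billed for every hour of the term, used or not."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def break_even_utilization(discount):
    """Utilization above which the reservation becomes the cheaper option."""
    return 1 - discount


rate = 0.10      # hypothetical on-demand price in USD per hour
discount = 0.40  # hypothetical reservation discount

print("Reserved:", round(monthly_cost_reserved(rate, discount), 2))
print("On-demand at 50% utilization:", round(monthly_cost_on_demand(rate, 0.5), 2))
print("Break-even utilization:", break_even_utilization(discount))
```

With a 40% discount the reservation only wins if the instance runs more than roughly 60% of the time, which is why the commitment suits steady-state workloads rather than occasional ones.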

3. Spot instances

Spot instances let businesses purchase unused Amazon EC2 capacity at a much lower price, offering discounts that can reach up to 90% compared to standard on-demand rates. While this can result in massive cost savings, spot instances come with the risk of termination if AWS needs the capacity back. This pricing model is ideal for non-critical or flexible workloads, such as batch processing, scientific computations, and large-scale data analysis.

- Suitable for non-critical or flexible workloads

- Risk of termination if AWS requires the capacity

- Appropriate for tasks like batch processing or data analysis

4. AWS Savings Plans

AWS Savings Plans provide flexible pricing in return for a commitment to a consistent usage level over one or three years. Businesses can save up to 72% compared to on-demand pricing. There are two types of Savings Plans:

- Compute savings plans: These apply to Amazon EC2, AWS Lambda, and AWS Fargate, allowing businesses to save on a wide range of computing services without having to commit to specific instance types or regions.

- Amazon EC2 Instance savings plans: These are specific to Amazon EC2 instances, offering flexibility in instance size, operating system, and tenancy, but requiring a commitment to a particular instance family in a chosen region.

AWS Savings Plans are a good option for businesses with predictable needs that also want some flexibility: Compute savings plans continue to apply when instance types or regions change. For example, AWS Lambda users can benefit from Compute savings plans to reduce costs.

- Save up to 72% compared to on-demand prices

- Two types: Compute Savings Plans and Amazon EC2 Instance Savings Plans

- Flexibility to scale across different compute services, like Amazon EC2, AWS Lambda, and AWS Fargate

- Ideal for businesses with predictable workloads but that need flexibility in services
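A Savings Plan commitment is expressed as a dollar amount per hour. The sketch below models one hour of billing under simplifying assumptions: a single flat discount for all covered usage and hypothetical dollar figures. Actual plans apply different discount rates per service, instance family, and region.

```python
def hourly_charge(on_demand_usage, commitment, discount):
    """
    on_demand_usage: what the hour's usage would cost at on-demand rates (USD)
    commitment:      the hourly Savings Plan commitment (USD), always charged
    discount:        flat discount assumed for covered usage (simplification)
    """
    # On-demand-equivalent usage that the commitment can absorb.
    covered = commitment / (1 - discount)
    # Anything beyond that is billed at normal on-demand rates.
    overflow = max(0.0, on_demand_usage - covered)
    return commitment + overflow


commitment = 0.70  # hypothetical commitment in USD per hour
discount = 0.30    # hypothetical flat discount

for usage in (0.50, 1.00, 1.50):
    print(usage, "->", round(hourly_charge(usage, commitment, discount), 2))
```

The low-usage hour shows the risk of over-committing: the commitment is charged even when usage would have cost less on demand, so the commitment should be sized to the predictable baseline rather than to peaks.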

By carefully selecting and leveraging these AWS pricing models, businesses can reduce their cloud spending while maintaining the performance and scalability of their applications.

Whether using Reserved Instances for predictable workloads or Spot Instances for flexible ones, understanding the right model for each use case ensures maximum savings. Additionally, AWS Savings Plans offer a flexible approach, combining discounts with the ability to scale across different compute services like Amazon EC2 and AWS Lambda.

Consider using Cloudtech's cloud modernization services to optimize the business's AWS costs effectively. With expertise in AWS infrastructure optimization and data management, Cloudtech helps businesses streamline operations and improve cost efficiencies while ensuring scalability and security.

Tools for monitoring and managing AWS costs

Managing AWS costs can be challenging without the right tools. Fortunately, AWS provides several powerful tools to help businesses track, analyze, and optimize their cloud spending. These tools offer visibility into usage patterns, enable businesses to set limits, and provide recommendations for improving efficiency.

1. AWS Cost Explorer

AWS Cost Explorer is a comprehensive tool for visualizing and analyzing AWS costs and usage. It allows businesses to explore their spending across different services, regions, and linked accounts, helping identify areas where cost savings are possible. With AWS Cost Explorer, businesses can break down their costs by service, linked accounts, and usage types, enabling better decision-making for cost optimization. Users can also track and forecast future spending, helping predict budget needs based on historical data.

- Provides detailed cost and usage reports with customizable filters.

- Allows businesses to visualize spending trends and forecast future costs.

- Offers recommendations for cost optimization based on historical data.

- Helps identify underutilized resources that could lead to savings.

2. AWS Budgets

AWS Budgets allows businesses to set custom spending limits and track costs against those limits in real-time. It helps to avoid unexpected charges by setting thresholds for cost and usage, providing alerts when spending is approaching or exceeding the defined limits. AWS Budgets can be set for overall costs, specific services, or individual accounts. This tool is particularly useful for businesses that want to ensure they don’t exceed their cloud budget while still optimizing performance.

- Enables businesses to set custom spending limits and usage thresholds.

- Sends alerts when spending nears or exceeds defined budgets.

- Helps track costs across services, linked accounts, and organizational units.

- Provides visibility into budget performance and forecasts future spending.
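The threshold logic behind budget alerts can be sketched in a few lines. This is a plain-Python illustration of the idea, not a call to the AWS Budgets API; the function name, the 80% and 100% thresholds, and the dollar amounts are assumptions.

```python
def budget_alerts(limit, actual, forecast, thresholds=(0.8, 1.0)):
    """Return alert messages for each threshold reached by actual or forecasted spend."""
    alerts = []
    for fraction in thresholds:
        percent = round(fraction * 100)
        if actual >= limit * fraction:
            alerts.append(f"ACTUAL spend has reached {percent}% of the budget")
        elif forecast >= limit * fraction:
            alerts.append(f"FORECASTED spend will reach {percent}% of the budget")
    return alerts


# Hypothetical month: 500 USD budget, 420 USD spent so far, 560 USD forecast.
for message in budget_alerts(limit=500, actual=420, forecast=560):
    print(message)
```

Alerting on the forecast as well as on actual spend gives a team time to react before the limit is crossed rather than after.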

3. AWS Trusted Advisor

AWS Trusted Advisor offers resource optimization recommendations to help businesses lower costs, improve performance, and enhance security. It performs an ongoing analysis of AWS accounts and provides actionable insights related to areas such as underutilized resources, over-provisioned services, and opportunities for rightsizing instances. AWS Trusted Advisor evaluates AWS resources against AWS best practices and helps identify areas that can be optimized to reduce costs and improve efficiency.

- Provides resource optimization recommendations to reduce over-provisioned or unused resources.

- Identifies opportunities for rightsizing instances and reducing unnecessary costs.

- Offers insights for improving security, performance, and fault tolerance.

- Continuously scans accounts for opportunities to improve resource utilization.

4. Amazon CloudWatch

Amazon CloudWatch is a monitoring and observability service that allows businesses to keep track of their AWS resources and applications in real-time. While Amazon CloudWatch is primarily used for tracking system performance, it also plays a significant role in managing costs. By monitoring resource utilization and setting alarms based on usage thresholds, Amazon CloudWatch can alert businesses when they are approaching high-cost scenarios. This enables proactive cost management by helping identify and address inefficiencies before they result in unexpected charges.

- Monitors AWS resource utilization and performance in real-time.

- Sends alarms based on cost or usage thresholds to prevent overages.

- Helps identify underused resources that could be optimized.

- Provides valuable data for cost optimization through custom metrics and dashboards.
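Alarms typically fire only when a metric breaches its threshold for several recent periods, which avoids reacting to a single spike. The sketch below imitates that "M out of N datapoints" evaluation in plain Python. It is a simplified model with made-up CPU values; in particular, real CloudWatch alarms have configurable handling of missing data that is not reproduced here.

```python
def alarm_state(datapoints, threshold, evaluation_periods, datapoints_to_alarm):
    """Evaluate the most recent datapoints against a threshold."""
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


cpu_sustained = [35, 82, 91, 88]  # hypothetical CPU % readings, oldest first
cpu_spike = [35, 82, 40, 50]

print(alarm_state(cpu_sustained, threshold=80, evaluation_periods=3, datapoints_to_alarm=2))
print(alarm_state(cpu_spike, threshold=80, evaluation_periods=3, datapoints_to_alarm=2))
```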

By utilizing these tools, businesses can effectively monitor their AWS spending and make informed decisions for cost optimization. AWS Cost Explorer helps visualize spending patterns, AWS Budgets sets spending limits, AWS Trusted Advisor offers ongoing resource optimization recommendations, and Amazon CloudWatch enables real-time monitoring.

What are the best practices for AWS cost optimization?

Optimizing AWS costs is a continuous process that requires businesses to adopt several best practices. By identifying inefficiencies, leveraging cost-effective pricing models, and utilizing AWS’s native tools, businesses can significantly reduce their cloud spending without sacrificing performance. Let’s explore some of the best practices that can help optimize AWS costs effectively.

1. Identify and right-size Amazon EC2 Instances

One of the easiest ways to reduce AWS costs is by ensuring that the Amazon EC2 instances being used are appropriately sized for the workload. Right-sizing involves adjusting the instance type, size, or family to meet the actual needs of the application, eliminating the waste that comes with over-provisioning. AWS Compute Optimizer recommends instance types based on usage history, allowing businesses to make data-driven decisions.

- Right-size Amazon EC2 instances to better match workload requirements

- AWS Compute Optimizer can recommend the best instance types for optimal performance and cost

- Monitor performance and scale down unused instances to save costs

- Reevaluate instance size regularly as workload changes

- Tag resources for cost allocation and visibility

Applying consistent tags to AWS resources, such as by project, team, or environment, enables SMBs to track costs accurately and allocate expenses appropriately. Tagging helps identify cost centers, optimize budgets across departments, and provides greater transparency in cloud spending.
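Once resources are tagged, allocating spend becomes a simple grouping exercise. The sketch below assumes a simplified list of cost line items with a `tags` dictionary; the field names, tag keys, and amounts are hypothetical and do not mirror any specific AWS report format.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per value of the given tag; resources without the tag are grouped as 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        tag_value = item.get("tags", {}).get(tag_key, "untagged")
        totals[tag_value] += item["cost"]
    return dict(totals)


# Hypothetical monthly line items.
line_items = [
    {"resource": "ec2-web", "cost": 120.0, "tags": {"team": "web", "env": "prod"}},
    {"resource": "rds-main", "cost": 45.5, "tags": {"team": "data", "env": "prod"}},
    {"resource": "ec2-staging", "cost": 30.0, "tags": {"team": "web", "env": "staging"}},
    {"resource": "old-volume", "cost": 12.25, "tags": {}},
]

print(cost_by_tag(line_items, "team"))
```

The `untagged` bucket is worth watching: spend that lands there cannot be attributed to any team or project and is often where forgotten resources hide.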

2. Use or sell underutilized Reserved Instances

Reserved Instances (RIs) provide up to 72% savings compared to on-demand pricing for customers who commit to using a specific instance type for a one- or three-year term. However, businesses may end up with RIs that are underutilized, which leads to unnecessary spending. In such cases, businesses should explore selling unused Standard RIs in the AWS Reserved Instance Marketplace or adjusting the reservation to better align with actual needs.

- Use AWS’s Reserved Instance Marketplace to sell unused RIs and recover some of the costs

- Regularly evaluate RI usage to ensure that the commitment aligns with actual demand

- Consider switching to Convertible RIs if workloads are likely to change over time

3. Use Amazon EC2 Spot Instances

Amazon EC2 Spot Instances are one of the most cost-effective options for workloads that are flexible in terms of execution time and can tolerate interruptions. Spot Instances allow businesses to run on unused Amazon EC2 capacity at the current Spot price, which can be up to 90% below on-demand prices, without having to bid. They are ideal for batch processing, large-scale data analysis, and machine learning workloads that can be paused and resumed as needed.

- Spot instances can save up to 90% on Amazon EC2 instance costs

- Ideal for non-critical or interruptible workloads like batch jobs or data processing

- Use Amazon EC2 auto scaling with Spot Instances to improve cost efficiency and minimize disruptions

- Combine Spot Instances with On-Demand Instances for high-availability applications while saving costs.
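The saving from mixing Spot and On-Demand capacity can be estimated with simple arithmetic. The sketch below uses a hypothetical hourly rate and a hypothetical 70% Spot discount; actual Spot prices vary by instance type, Availability Zone, and time.

```python
def blended_hourly_cost(on_demand_count, spot_count, on_demand_rate, spot_discount):
    """Hourly cost of a fleet with a fixed On-Demand base and Spot instances on top."""
    spot_rate = on_demand_rate * (1 - spot_discount)
    return on_demand_count * on_demand_rate + spot_count * spot_rate


rate = 0.10           # hypothetical on-demand price in USD per hour
spot_discount = 0.70  # hypothetical average Spot discount

all_on_demand = blended_hourly_cost(10, 0, rate, spot_discount)
mixed = blended_hourly_cost(3, 7, rate, spot_discount)

print("All On-Demand:", round(all_on_demand, 2))
print("3 On-Demand + 7 Spot:", round(mixed, 2))
```

Keeping a small On-Demand base means the application still has guaranteed capacity if the Spot instances are reclaimed.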

4. Utilize Amazon S3 storage classes

Choosing the right storage class in Amazon S3 is another way to reduce costs. AWS offers several storage classes for different use cases, including Amazon S3 Standard, Amazon S3 Intelligent-Tiering, and Amazon S3 Glacier. By regularly reviewing the storage requirements and moving infrequently accessed data to lower-cost storage classes, businesses can achieve substantial savings.

- Amazon S3 Intelligent-Tiering automatically moves data to the most cost-effective access tier based on usage patterns.

- Store archival data in Amazon S3 Glacier or Amazon S3 Glacier Deep Archive for significant cost savings.

- Regularly audit Amazon S3 buckets to identify and remove unused or obsolete data.
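Moving data between storage classes is usually automated with a lifecycle rule. The sketch below shows a rule in the dictionary shape used for S3 lifecycle configurations, plus a small helper that works out where an object would sit at a given age. The rule ID, the `logs/` prefix, and the 30/90/365-day schedule are assumptions for illustration, and the helper is a local simulation rather than an AWS call.

```python
# Hypothetical lifecycle rule: tier down log files as they age, then expire them.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-logs",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_at(age_days, rule):
    """Return the storage class for an object of the given age, or None once it has expired."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
for age in (10, 45, 120, 400):
    print(age, "days ->", storage_class_at(age, rule))
```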

5. Implement auto scaling

Auto Scaling automatically adjusts resource allocation to match actual demand, ensuring that businesses only pay for the capacity they need. By scaling EC2 instances based on traffic patterns and demand, businesses can reduce costs by eliminating underutilized resources. Similar scaling options exist for other AWS services, such as storage autoscaling in Amazon RDS, and Auto Scaling groups work together with Elastic Load Balancing to spread traffic across whatever capacity is currently running.

- Automatically scale Amazon EC2 instances based on demand to reduce costs during low-traffic periods

- Implement auto scaling for databases like Amazon RDS to manage costs based on database load

- Use Elastic Load Balancing in conjunction with Auto Scaling to ensure efficient resource distribution and prevent over-provisioning
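The core idea of scaling toward a target metric is proportional: if instances run hotter than the target, add capacity in the same ratio. The sketch below is a simplified model of that calculation, assuming average CPU as the metric and hypothetical fleet limits; the real service also applies alarms, warm-up periods, and more cautious scale-in behavior.

```python
import math


def desired_capacity(current_capacity, metric_value, target_value, min_size, max_size):
    """Scale capacity in proportion to how far the metric is from its target, within limits."""
    raw = math.ceil(current_capacity * metric_value / target_value)
    return max(min_size, min(max_size, raw))


# Hypothetical fleet of 4 instances with a 50% average CPU target.
print(desired_capacity(4, metric_value=75, target_value=50, min_size=2, max_size=10))
print(desired_capacity(4, metric_value=20, target_value=50, min_size=2, max_size=10))
print(desired_capacity(4, metric_value=200, target_value=50, min_size=2, max_size=10))
```

The `min_size` and `max_size` limits matter for cost control: the maximum caps spending during unexpected spikes, while the minimum keeps enough capacity for availability.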

6. Regularly audit underutilized Amazon EBS volumes

Amazon Elastic Block Store (EBS) is used for persistent storage, but over time, businesses may accumulate unused or underutilized Amazon EBS volumes that still incur charges. Regularly auditing Amazon EBS volumes and deleting unused or orphaned volumes can significantly reduce unnecessary storage costs.

- Identify unused or underutilized Amazon EBS volumes through regular audits

- Delete orphaned Amazon EBS volumes that are no longer attached to any Amazon EC2 instances

- Use Amazon EBS Snapshots for backup instead of maintaining full volumes for inactive data.
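An audit for orphaned volumes boils down to filtering on volume state: a volume in the `available` state is not attached to any instance. The sketch below runs that filter over a hand-written sample shaped like a volume listing; the volume IDs, sizes, and the per-GiB price are hypothetical, and no AWS call is made.

```python
def unattached_volumes(response):
    """Return volumes in the 'available' state, meaning not attached to any instance."""
    return [v for v in response["Volumes"] if v["State"] == "available"]


def monthly_waste(volumes, price_per_gib_month):
    """Estimate what the given volumes cost per month at a flat per-GiB price."""
    return sum(v["Size"] for v in volumes) * price_per_gib_month


# Hypothetical sample data; sizes are in GiB.
sample_response = {
    "Volumes": [
        {"VolumeId": "vol-aaa", "State": "in-use", "Size": 200},
        {"VolumeId": "vol-bbb", "State": "available", "Size": 100},
        {"VolumeId": "vol-ccc", "State": "available", "Size": 50},
    ]
}

orphans = unattached_volumes(sample_response)
print([v["VolumeId"] for v in orphans])
print(round(monthly_waste(orphans, price_per_gib_month=0.08), 2))
```

Before deleting anything flagged this way, it is prudent to take a snapshot, since an unattached volume may still hold data someone expects to recover.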

7. Implement Elastic Load Balancing

Elastic Load Balancing (ELB) distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, in multiple Availability Zones. By using ELB, businesses can ensure their resources are used efficiently, and when it is paired with Auto Scaling, the infrastructure behind the load balancer can grow or shrink with real-time demand. This not only optimizes performance but also helps prevent over-provisioning.

- Elastic Load Balancing (ELB) distributes traffic evenly across Amazon EC2 instances, improving resource utilization

- Automatically scales with traffic spikes and dips, ensuring optimal performance at all times

- Helps prevent over-provisioning by distributing workload efficiently across available resources

- Reduces costs, when combined with Auto Scaling, by making it safe to remove underutilized instances.

Streamline AWS cost management with expert support

Effectively managing AWS costs can be a challenging task for many businesses. With the right expertise, however, businesses can optimize their cloud infrastructure to ensure cost efficiency without sacrificing performance. Cloudtech, an AWS Advanced Tier Partner, specializes in providing solutions that help businesses streamline their AWS environments. By focusing on infrastructure optimization, data management, and application modernization, Cloudtech supports companies in reducing unnecessary cloud spending while enhancing scalability and security.

Key strengths:

- AWS infrastructure optimization for improved cost-efficiency

- Customized solutions for industries like healthcare and fintech

- Expertise in data management and application modernization

- Focus on security, scalability, and high performance

- Helps businesses optimize cloud resources for sustainable growth

Conclusion

In conclusion, optimizing AWS costs is crucial for businesses aiming to maximize their cloud investment while ensuring performance and scalability. By implementing strategies such as right-sizing Amazon EC2 instances, leveraging Spot Instances, and utilizing cost-effective storage options, businesses can significantly reduce their AWS spending. Additionally, tools like AWS Cost Explorer and AWS Trusted Advisor provide valuable insights for ongoing cost management.

For businesses looking for expert guidance in optimizing AWS infrastructure, reach out to Cloudtech to streamline the AWS environment and achieve sustainable cloud growth.

FAQs

- What is the best way for SMBs to reduce AWS costs?

SMBs can reduce AWS costs by right-sizing their Amazon EC2 instances, leveraging Amazon EC2 Spot Instances for flexible workloads, and using AWS Savings Plans for long-term usage commitments. Additionally, regularly auditing underutilized resources and using cost-effective storage classes like Amazon S3 Glacier can lead to substantial savings.

- How can I monitor and control AWS spending?

AWS Cost Explorer and AWS Budgets are essential tools for tracking and managing spending. By setting custom budgets and monitoring usage patterns, businesses can identify areas of overspending and take proactive steps to stay within budget.

- How does Auto Scaling help in cost optimization?

Auto Scaling automatically adjusts the number of Amazon EC2 instances in use based on actual demand. By scaling resources up or down, businesses ensure they are only paying for the compute power they need, which helps avoid over-provisioning and reduces costs.

- How can SMBs benefit from AWS Savings Plans?

AWS Savings Plans offer significant discounts (up to 72%) in exchange for committing to a consistent level of usage. This is beneficial for SMBs with predictable workloads, as it helps them save on Amazon EC2, AWS Lambda, and Fargate costs while maintaining flexibility in resource management.


6 AWS well-architected framework pillars driving SMB success

According to Gartner (2024), 70% of SMBs that engaged in cloud modernization reported measurable improvements in operational efficiency and cost savings within the first year. This significant finding highlights why adopting cloud technology is no longer optional for small and medium businesses (SMBs). It is essential for maintaining competitiveness and enabling growth.

Yet modernizing cloud infrastructure comes with considerable challenges, particularly around security, compliance, and managing costs. Simply migrating to the cloud is not enough. The AWS well-architected framework offers SMBs a clear, proven approach to designing and operating cloud environments that are secure, scalable, and efficient while adhering to industry best practices.

This structured framework guides businesses beyond basic migration, helping them build resilient and compliant cloud solutions that align with their unique needs and industry requirements.

Key takeaways:

- AWS’s six Well-Architected Framework pillars help SMBs build secure, cost-efficient, and scalable cloud environments.

- SMBs must adopt the AWS Well-Architected Framework to balance performance, cost, and security in cloud modernization.

- Each of the six AWS Well-Architected pillars, from operational excellence to sustainability, has its real-world SMB impact.

- Cloudtech helps SMBs apply AWS best practices to modernize, secure, and optimize cloud environments for lasting success.

- Unlock the roadmap to smarter, safer, and leaner cloud operations with the AWS Well-Architected Framework pillars for SMBs.

Why do SMBs need the AWS Well-Architected Framework pillars?

For small and mid-sized businesses, every cloud decision carries weight. Unlike large enterprises with massive budgets and teams, SMBs need cloud systems that are secure, cost-efficient, and resilient by design, without the overhead of constant firefighting or rework. That’s exactly what the AWS Well-Architected Framework (WAF) delivers.

The framework’s six pillars help SMBs strike the right balance between agility and control. It ensures that their cloud investments drive business outcomes, not just infrastructure uptime.

Why it matters for SMBs:

1. Because small missteps have big consequences: For SMBs, one outage or security lapse can cause real financial and reputational damage. The Reliability and Security pillars ensure workloads stay protected, recover quickly, and meet compliance needs, without needing an enterprise-sized ops team.

2. Because cost efficiency is a survival factor: Unlike large organizations, SMBs can’t afford to overspend on idle resources. The Cost Optimization pillar helps right-size workloads, automate scaling, and use pricing models that match actual usage, freeing up budget for innovation.

3. Because scaling too fast can create chaos: Rapid growth is great, but it often exposes weak infrastructure foundations. The Operational Excellence and Performance pillars guide SMBs in building predictable, scalable systems that grow with customer demand instead of breaking under it.

4. Because security can’t be an afterthought: Many SMBs assume cloud security “just comes with AWS.” The truth is, it requires the right IAM policies, encryption standards, and monitoring, which are areas directly addressed by the Security pillar to protect customer data and ensure compliance.

5. Because innovation depends on stability: SMBs can’t modernize applications or experiment with AI if their foundation isn’t stable. By adhering to the WAF pillars, SMBs gain the confidence to innovate faster, adopt new AWS services, and continuously improve without disrupting existing operations.

SMBs don’t need enterprise-scale architectures. They need well-architected ones. The AWS Well-Architected Framework gives growing businesses the structure to operate smarter, safer, and leaner in the cloud.

With the right partner like Cloudtech, these pillars become a roadmap to sustainable modernization, one that balances performance, cost, and long-term growth.

Suggested Read: Effective Cloud Migration Strategies for Small Businesses

The 6 pillars of the AWS well-architected framework

The AWS well-architected framework is built around six core pillars that guide organizations in designing and operating cloud systems effectively. Each pillar addresses a key area critical to building secure, efficient, and resilient cloud environments.

1. Operational excellence

Operational excellence is about effectively running and managing cloud workloads while continuously improving processes to deliver business value. For SMBs, it means building adaptable operations that support growth, compliance, and agility.

Key aspects:

- Automate operations as code to reduce errors and increase consistency

- Make frequent, small, reversible changes to minimize risks

- Refine procedures regularly based on real-world feedback

- Design systems to anticipate and handle failures gracefully

- Learn from operational failures to improve processes

SMBs can use AWS Systems Manager to automate operational tasks and manage infrastructure as code, AWS CloudTrail to log and audit API activity, and Amazon CloudWatch to monitor and alert on operational metrics.
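As a rough illustration of "operations as code," the sketch below builds the parameters for a CloudWatch CPU alarm as plain Python data, so the alarm definition can be reviewed and versioned like any other code. The instance ID, SNS topic ARN, alarm name pattern, and the 80% threshold are all hypothetical placeholders, not recommendations.

```python
def build_cpu_alarm(instance_id: str, sns_topic_arn: str,
                    threshold_percent: float = 80.0) -> dict:
    """Return keyword arguments shaped for CloudWatch's PutMetricAlarm API.

    All default values here are illustrative assumptions.
    """
    if not 0 < threshold_percent <= 100:
        raise ValueError("threshold_percent must be in the range (0, 100]")
    return {
        "AlarmName": f"high-cpu-{instance_id}",   # hypothetical naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,                 # evaluate 5-minute averages
        "EvaluationPeriods": 2,        # two consecutive breaches before alarming
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


if __name__ == "__main__":
    params = build_cpu_alarm(
        "i-0123456789abcdef0",                              # placeholder ID
        "arn:aws:sns:us-east-1:123456789012:ops-alerts",    # placeholder ARN
    )
    print(params)
    # With boto3 installed and credentials configured, the same dict could be
    # applied with: boto3.client("cloudwatch").put_metric_alarm(**params)
```

Keeping the definition separate from the API call makes it easy to test and to apply the same alarm to many instances.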

Best practices: Operations teams should deeply understand business and customer needs to align procedures with desired outcomes. It’s essential to create, refine, and validate processes for handling operational events effectively while collecting metrics to measure success.

Operations should be designed to adapt over time, reflecting changes in business priorities, customer needs, and evolving processes. Lessons learned from past performance and failures should be incorporated continuously to improve efficiency and deliver consistent business value.

Tip: For SMBs looking to improve operational excellence, Cloudtech’s AWS foundations program offers a rapid, hands-on approach to build secure, compliant, and efficient AWS environments.

2. Security

For SMBs in healthcare, fintech, and other regulated sectors, security is a non-negotiable requirement in cloud modernization. The Security pillar ensures cloud environments protect sensitive data while meeting strict compliance standards, without slowing down business operations.

Key aspects:

- Enforce granular access controls with AWS Identity and Access Management (IAM) tailored to SMB team roles

- Implement end-to-end encryption: protect data at rest with keys managed in AWS Key Management Service (KMS), and protect data in transit with TLS

- Continuously monitor environments with AWS Security Hub and AWS CloudTrail for early threat detection

- Automate patch management and vulnerability scanning to reduce exposure

- Develop incident response workflows aligned with regulatory requirements

To strengthen security, SMBs can use AWS IAM for strict access controls, AWS KMS for managing encryption keys, Amazon GuardDuty for proactive threat detection, and AWS Security Hub to consolidate security alerts across their AWS environment.
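To make "strict access controls" concrete, here is a minimal sketch of a least-privilege IAM policy document generated in Python. The bucket name and prefix are hypothetical, and the policy grants read-only access to a single prefix only; a real policy would need to reflect each team's actual roles.

```python
import json


def read_only_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy document allowing read-only access to one S3 prefix.

    The bucket and prefix are placeholders supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Only objects under the given prefix, not the whole bucket
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    # "example-reports" and "finance/" are made-up names for illustration
    print(json.dumps(read_only_bucket_policy("example-reports", "finance/"), indent=2))
```

The point of the sketch is the shape: specific actions on specific resources, with no wildcard actions.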

Best practices: Security practices should focus on controlling access, monitoring for incidents, and protecting the confidentiality, integrity, and availability of data. Organizations should establish and regularly practice well-defined processes for responding to security events.

Automation should be leveraged to enforce security best practices and maintain system-wide visibility. Cloud-native security features should be used to reduce operational overhead, allowing teams to focus on securing workloads effectively and maintaining compliance.

3. Reliability

Reliability ensures that cloud systems can recover quickly from failures and continue operating smoothly. For SMBs, this means designing environments that minimize downtime, support business continuity, and scale with demand.

Key aspects:

- Automatically recover from failures using AWS services like AWS Auto Scaling and Elastic Load Balancing

- Regularly test recovery procedures to verify backup and failover effectiveness

- Scale horizontally to distribute load and avoid single points of failure

- Manage changes through automation tools such as AWS CloudFormation to reduce human errors

- Build resiliency directly into workloads to withstand disruptions without service impact

By using AWS Auto Scaling and Elastic Load Balancing, SMBs can keep their applications available under varying loads, while Amazon Route 53 supports DNS failover to maintain uptime during regional outages.
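The value of avoiding single points of failure can be estimated with simple arithmetic. The sketch below assumes replicas fail independently of each other, which is a simplification (shared dependencies can cause correlated failures), and the availability figures are illustrative rather than measured.

```python
def parallel_availability(single: float, replicas: int) -> float:
    """Availability of N redundant replicas, assuming independent failures.

    The service is down only when every replica is down at the same time.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1.0 - (1.0 - single) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime per 365-day year for a given availability."""
    return (1.0 - availability) * 365 * 24


if __name__ == "__main__":
    for n in (1, 2, 3):
        a = parallel_availability(0.99, n)   # 0.99 is an assumed figure
        print(n, round(a, 6), round(downtime_hours_per_year(a), 2))
```

Under these assumptions, one instance at 99% availability implies roughly 87.6 hours of downtime a year, while two independent instances behind a load balancer bring the estimate under one hour.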

Best practices: Reliable workloads begin with solid foundational requirements, such as sufficient network and compute capacity. Architects should minimize risk through loosely coupled components, graceful degradation, fault isolation, automated failover, and disaster recovery strategies.

Anticipating changes in workload demand and system updates is critical to maintaining consistent performance. Recovery procedures should be regularly tested, and horizontal scaling should be used to ensure high availability and resilience against failures.

4. Performance efficiency

Performance efficiency means building cloud solutions that deliver optimal speed and responsiveness while scaling seamlessly with business growth. For SMBs, it is about using AWS innovations, like serverless computing and global infrastructure, to maximize user experience and agility, without unnecessary cost or complexity.

Key aspects:

- Democratize advanced technologies by using managed AWS services that simplify complex infrastructure

- Expand globally within minutes by deploying applications across multiple AWS Regions

- Adopt serverless architectures like AWS Lambda to reduce infrastructure management and scale automatically

- Experiment frequently with new features and architectures to innovate faster

- Consider mechanical sympathy: design systems that work in harmony with the underlying hardware for optimal performance

- Use data-driven insights to continuously optimize architecture and resource allocation

SMBs can boost performance and reduce costs by adopting serverless architectures with AWS Lambda, containerizing applications using Amazon EKS, and accelerating content delivery via Amazon CloudFront.
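For readers new to serverless, a minimal AWS Lambda handler in Python looks roughly like the sketch below. It assumes the function sits behind an Amazon API Gateway proxy integration, which is one common setup and not the only one; the `name` query parameter and the greeting are invented for illustration.

```python
import json


def lambda_handler(event, context):
    """Minimal handler returning a JSON greeting.

    Assumes an API Gateway proxy-style event, where query parameters
    arrive under "queryStringParameters" (which may be None).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")   # hypothetical parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; no AWS account needed
    print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```

There are no servers to patch or scale here: the team maintains only the function, and Lambda runs as many copies as incoming requests require.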

Best practices: Performance should be guided by continuous monitoring and data-driven insights. Regular reviews of architecture and configurations help take advantage of evolving cloud capabilities. Workloads can be tuned with techniques such as caching and compression, with each one weighed as a trade-off between speed and other requirements such as consistency.

Experimentation with technologies and architectural approaches is encouraged to identify the most efficient solutions. Using serverless and managed services can further improve efficiency and reduce operational overhead.

Tip: Cloudtech supports SMBs in modernizing applications with performance-optimized AWS architectures through its application modernization services.

5. Cost optimization

Cost optimization means continuously aligning cloud spending with business priorities to get maximum value without overspending. For SMBs, it’s about managing usage smartly, paying only for what’s needed, avoiding waste, and balancing cost against speed and innovation demands.

Key aspects:

- Implement cloud financial management to monitor and control expenses accurately

- Adopt a consumption-based model to pay strictly for resources used, preventing overprovisioning

- Measure overall efficiency by tracking resource utilization and identifying waste

- Attribute costs across teams or projects to improve budgeting and accountability

- Optimize spending based on whether speed to market or cost savings is the priority

AWS Cost Explorer and AWS Budgets provide SMBs with insights and alerts to control expenses, while Reserved Instances and Savings Plans offer savings for consistent workloads.
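Whether a commitment-based pricing model pays off depends on how steadily a workload runs. The sketch below compares on-demand and committed pricing under a deliberately simplified model: the committed rate is billed for every hour of the month whether or not the resource is used. The hourly rates and the 730-hour month are hypothetical numbers, not AWS prices.

```python
HOURS_PER_MONTH = 730  # common approximation; an assumption of this sketch


def break_even_utilization(on_demand_rate: float, committed_rate: float) -> float:
    """Fraction of the month a workload must run for a commitment to pay off."""
    if on_demand_rate <= 0 or committed_rate <= 0:
        raise ValueError("rates must be positive")
    return committed_rate / on_demand_rate


def cheaper_option(on_demand_rate: float, committed_rate: float,
                   hours_used: float) -> str:
    """Return 'committed' or 'on-demand' for a given monthly usage."""
    on_demand_cost = on_demand_rate * hours_used
    committed_cost = committed_rate * HOURS_PER_MONTH  # billed even when idle
    return "committed" if committed_cost < on_demand_cost else "on-demand"


if __name__ == "__main__":
    # Made-up rates: $0.10/hour on demand vs. $0.07/hour committed
    print(break_even_utilization(0.10, 0.07))
    print(cheaper_option(0.10, 0.07, hours_used=600))
    print(cheaper_option(0.10, 0.07, hours_used=400))
```

With these example rates the break-even point is about 70% utilization, which is why commitments suit steady workloads and on-demand pricing suits spiky or experimental ones.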

Best practices: Organizations should implement strong cloud financial management practices and continuously track spending. Adopting a consumption-based model ensures payment only for resources used, while efficiency metrics help identify cost-saving opportunities.

Using managed services reduces undifferentiated operational work. Design decisions should balance cost, performance, and speed to market, ensuring that spending aligns with business priorities without over-provisioning resources.

6. Sustainability

Sustainability in the cloud means minimizing environmental impact while maintaining performance and scalability. For SMBs, this involves understanding their cloud footprint and actively managing resources to support greener business practices without compromising growth.

Key aspects:

- Understand the environmental impact of cloud usage by measuring carbon footprint and energy consumption

- Establish clear sustainability goals aligned with business values and regulatory expectations

- Maximize resource utilization to avoid waste and reduce energy consumption

- Use AWS managed services, which are designed for efficient, eco-friendly operation

- Reduce downstream impacts by optimizing data transfer, storage, and processing workloads

- Continuously optimize workload components that consume the most resources for better efficiency

SMBs can monitor their environmental impact using AWS’s Customer Carbon Footprint Tool and benefit from AWS’s commitment to running energy-efficient, renewable-powered data centers.

Best practices: Sustainable workloads start with understanding environmental impact and setting measurable goals. Resource usage should be optimized by scaling according to demand, removing unused assets, and using efficient hardware, software, and managed services.

Architectural patterns that improve utilization and reduce waste should be applied, while lifecycle automation for development, testing, and production environments helps minimize the environmental footprint. Continuous analysis of data and workload patterns can uncover additional opportunities to improve sustainability over time.
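One practical starting point for "removing unused assets" is to flag resources whose utilization stays consistently low. The sketch below works on CPU samples that have already been collected (for example, exported from Amazon CloudWatch); the resource IDs and both thresholds are hypothetical and would need tuning for each workload.

```python
from statistics import mean
from typing import List, Mapping, Sequence


def find_underutilized(cpu_samples: Mapping[str, Sequence[float]],
                       avg_threshold: float = 10.0,
                       peak_threshold: float = 40.0) -> List[str]:
    """Return IDs whose average AND peak CPU both stay below the thresholds.

    Checking the peak as well avoids flagging resources that are mostly
    idle but still serve occasional bursts. Thresholds are illustrative.
    """
    flagged = []
    for resource_id, samples in cpu_samples.items():
        if not samples:
            continue  # no data: do not guess
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            flagged.append(resource_id)
    return sorted(flagged)


if __name__ == "__main__":
    samples = {
        "i-idle": [2.0, 3.0, 5.0],       # made-up data
        "i-busy": [50.0, 60.0, 70.0],
    }
    print(find_underutilized(samples))
```

Flagged resources are candidates for review, downsizing, or scheduled shutdown rather than automatic deletion.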

Cloudtech helps SMBs adopt sustainable cloud modernization strategies through its cloud infrastructure optimization services, balancing performance, cost, and environmental responsibility.

Also Read: The 7 Rs of cloud migration: a comprehensive guide for SMBs

Challenges of implementing AWS Well-Architected Framework pillars (and how to avoid them)

Adopting the AWS Well-Architected Framework (WAF) sounds straightforward until real-world constraints like limited staff, legacy workloads, and budget pressure come into play.

For SMBs, the biggest challenge isn’t understanding the six pillars. It’s operationalizing them consistently across cloud environments without losing agility.

Below are the most common pitfalls SMBs face, and how to sidestep them:

1. Treating WAF as a one-time checklist

Many SMBs approach WAF reviews as a compliance task done once a year. The problem? Cloud workloads evolve, and what was “well-architected” six months ago may already be outdated.

How to avoid it: Make it an ongoing practice, not a project. Set quarterly reviews, automate audits using AWS Trusted Advisor, and build small, repeatable improvement cycles into the DevOps pipeline.

2. Limited in-house AWS expertise

Small teams often lack deep AWS architectural experience, leading to partial or inconsistent adoption of the pillars, especially around security and cost optimization.

How to avoid it: Partner with an AWS-certified consultancy like Cloudtech. Their architects can perform a Well-Architected Review, identify misalignments, and guide remediation aligned with AWS best practices without overwhelming the internal staff.

3. Balancing innovation with cost control

It’s easy to over-engineer for performance and reliability, but that often leads to cloud sprawl and runaway costs.

How to avoid it: Leverage AWS Cost Explorer and Savings Plans to track and optimize spend continuously. Use the Cost Optimization pillar as a guardrail; every new service or experiment should have a clear cost-performance tradeoff plan.

4. Legacy workloads and technical debt

SMBs often modernize in phases, which means legacy systems coexist with new cloud-native apps, creating inconsistencies in architecture, monitoring, and reliability.

How to avoid it: Adopt a phased modernization approach: start by containerizing or rehosting legacy workloads, then layer in automation, monitoring, and resilience patterns over time. Cloudtech’s modernization framework helps streamline this transition with minimal disruption.

5. Lack of measurable KPIs

Without defined success metrics, it’s hard to prove the ROI of implementing the framework or justify ongoing investment.

How to avoid it: Establish clear KPIs per pillar. For example:

- Uptime % for Reliability

- Average response time for Performance

- Monthly spend variance for Cost Optimization

- Mean time to recovery (MTTR) for Operational Excellence

Measure, iterate, and align these metrics with business outcomes, not just infrastructure goals.
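The KPIs listed above reduce to straightforward arithmetic. The sketch below shows one possible way to compute three of them; the function names and sample figures are hypothetical, and each team should define the measurement window and data sources that fit its own reporting.

```python
from typing import Sequence


def uptime_percent(total_minutes: float, downtime_minutes: float) -> float:
    """Reliability KPI: share of the period the service was available."""
    if total_minutes <= 0 or not 0 <= downtime_minutes <= total_minutes:
        raise ValueError("invalid period or downtime")
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def mttr_minutes(recovery_durations: Sequence[float]) -> float:
    """Operational Excellence KPI: mean time to recovery across incidents."""
    if not recovery_durations:
        raise ValueError("at least one incident is required")
    return sum(recovery_durations) / len(recovery_durations)


def spend_variance_percent(budget: float, actual: float) -> float:
    """Cost Optimization KPI: positive means over budget."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    return 100.0 * (actual - budget) / budget


if __name__ == "__main__":
    # Illustrative numbers for a 30-day month (43,200 minutes)
    print(uptime_percent(43_200, 43.2))
    print(mttr_minutes([30, 45, 15]))
    print(spend_variance_percent(1_000, 1_150))
```

Tracking these values month over month shows whether the framework is improving outcomes, not just architecture diagrams.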

The AWS Well-Architected Framework isn’t just technical; it’s cultural. SMBs that see it as a continuous discipline, supported by automation and expert guidance, gain a cloud foundation that’s not only stable and secure but strategically aligned with growth and innovation.

Also Read: AWS business continuity and disaster recovery plan

Implementing the AWS Well-Architected Framework with Cloudtech

Building a modern cloud architecture is about creating a foundation that’s secure, scalable, and adaptable to change. For most SMBs, balancing performance, cost, and resilience can feel overwhelming without deep AWS expertise or large internal teams.