
Disjointed systems, manual data prep, and inconsistent pipelines continue to delay insights across many mid-sized and enterprise businesses. As data volumes scale and regulatory demands intensify, traditional extract, transform, load (ETL) tools often fall short, adding complexity rather than clarity.

AWS Glue addresses this need with a fully managed ETL service that simplifies data integration across Amazon RDS, Amazon S3, and other structured or semi-structured data sources. It enables teams to automate transformation workflows, enforce governance, and scale operations without manually provisioning infrastructure.

This guide outlines the core concepts, architectural components, and practical implementation strategies required to build production-grade ETL pipelines using AWS Glue. From job orchestration to cost monitoring, it provides a valuable foundation for building, optimizing, and scaling AWS Glue pipelines across modern data ecosystems.

Key takeaways

- Serverless ETL at scale: AWS Glue eliminates infrastructure management and auto-scales based on data volume and job complexity.

- Built-in cost control: Pay-per-use pricing, Flex jobs, and DPU right-sizing help teams stay within budget without sacrificing speed.

- Governance for regulated industries: Features such as column-level access, lineage tracking, and Lake Formation integration support compliance-intensive workflows.

- Smooth AWS integration: Native connectivity with Amazon S3, Redshift, RDS, and more enables unified analytics across the stack.

- Faster delivery with Cloudtech: Cloudtech designs AWS Glue pipelines that are secure, cost-efficient, and aligned with industry-specific demands.

What is AWS Glue?

AWS Glue is a serverless ETL service that automates data discovery, transformation, and loading across enterprise systems. It scales compute resources automatically and supports both visual interfaces and custom Python or Scala code for configuring workflows.

Glue handles various data formats (Parquet, ORC, CSV, JSON, Avro) stored in Amazon S3 or traditional databases. It infers schemas and builds searchable metadata catalogs, enabling unified access to information. Finance teams can pull reports while operations teams access real-time metrics from the same datasets.

For security, AWS Glue integrates with AWS Identity and Access Management (IAM) for fine-grained access control and supports column-level security, Virtual Private Cloud (VPC)-based network isolation, and encryption both at rest and in transit. Together, these controls help protect sensitive business data.

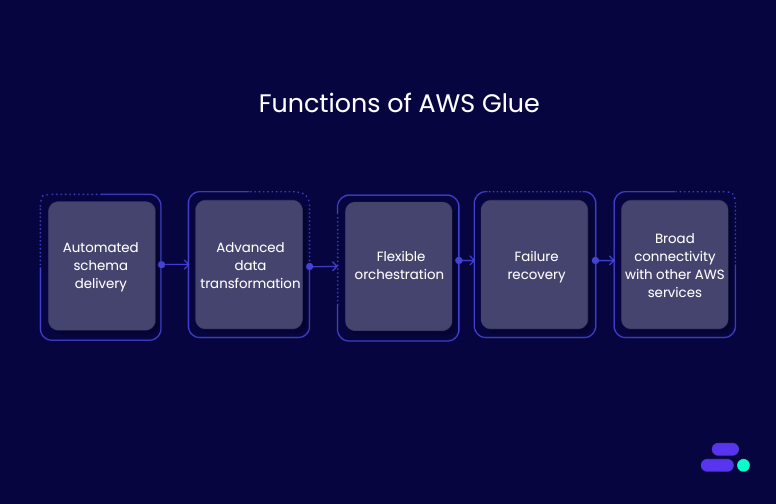

Key functions of AWS Glue

AWS Glue simplifies the ETL process with built-in automation and scalable data handling. Below are its key functions that support efficient data integration across modern cloud environments.

- Automated schema discovery: AWS Glue crawlers analyze new and existing data sources, such as databases, file systems, and data lakes, to detect table structures, column types, and relationships. This eliminates the need for manual schema definition, reducing setup time across ingestion pipelines.

- Advanced data transformation engine: Powered by Apache Spark, AWS Glue’s transformation layer handles complex logic, including multi-table joins, window functions, filtering, and format reshaping. Developers apply custom business logic at scale without building infrastructure from scratch.

- Flexible job orchestration: AWS Glue manages ETL job execution through a combination of time-based schedules, event triggers, and dependency chains. This orchestration ensures workflows run in the correct sequence and datasets remain synchronized across distributed systems.

- Built-in monitoring and failure recovery: Execution logs and metrics are automatically published to Amazon CloudWatch, supporting detailed performance tracking. Jobs include retry logic and failure isolation, helping teams debug efficiently and maintain pipeline continuity.

- Broad connectivity across enterprise systems: Out-of-the-box connectors enable integration with over 80 data sources, including Amazon RDS, Amazon Redshift, Amazon DynamoDB, and widely used third-party platforms, supporting centralized analytics across hybrid environments.

What are the core components of AWS Glue ETL?

Efficient ETL design with AWS Glue starts with understanding its key components. Each part is responsible for tasks like schema discovery, data transformation, and workflow orchestration, all running on fully managed serverless infrastructure.

1. AWS Glue console management interface

The AWS Glue console is the centralized control hub for all ETL activities. Data engineers use it to define ETL jobs, manage data sources, configure triggers, and monitor executions. It provides both visual tools and script editors, enabling rapid job development and debugging.

Integrated with AWS Secrets Manager and Amazon Virtual Private Cloud (VPC), the console facilitates secure credential handling and network isolation. It also displays detailed operational metrics, such as job run duration, processed data volume, and error rates, to help teams fine-tune their performance and troubleshoot issues in real-time. Role-based access controls ensure that users only see and modify information within their designated responsibilities.

2. Job scheduling and automation system

AWS Glue automates job execution through an event-driven and schedule-based trigger system. Engineers can configure ETL workflows to run on fixed intervals using cron expressions, initiate on-demand runs, or trigger jobs based on events from Amazon EventBridge.

This orchestration layer supports complex dependency chains, for instance, ensuring one ETL task begins only after the successful completion of another. All job runs are tracked in a detailed execution history, complete with success and failure logs, as well as integrated notifications via Amazon Simple Notification Service (SNS). These capabilities enable businesses to maintain reliable and repeatable ETL cycles across departments.
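As a minimal sketch of the scheduling and dependency options described above, the following builds request payloads for the Glue `CreateTrigger` API: one time-based trigger and one conditional trigger that fires only after an upstream job succeeds. The trigger and job names (`nightly-orders`, `orders-etl`, `orders-aggregate`) are hypothetical placeholders.

```python
"""Sketch: payloads for a scheduled and a conditional AWS Glue trigger."""


def scheduled_trigger(name: str, job_name: str, cron: str) -> dict:
    """Keyword arguments for a time-based trigger (six-field AWS cron syntax)."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": f"cron({cron})",
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def conditional_trigger(name: str, upstream_job: str, downstream_job: str) -> dict:
    """Keyword arguments for a trigger that starts downstream_job
    only after upstream_job reaches the SUCCEEDED state."""
    return {
        "Name": name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": upstream_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": downstream_job}],
        "StartOnCreation": True,
    }


# Hypothetical names, used only for illustration.
nightly = scheduled_trigger("nightly-orders", "orders-etl", "0 2 * * ? *")
chained = conditional_trigger("after-orders", "orders-etl", "orders-aggregate")

# To create the triggers (requires boto3 and AWS credentials):
#   import boto3
#   glue = boto3.client("glue")
#   glue.create_trigger(**nightly)
#   glue.create_trigger(**chained)
```

Keeping the payloads as plain dictionaries makes them easy to review and version before anything is created in an AWS account.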

3. Script development and connection management

AWS Glue offers automatic script generation in Python or Scala based on visual job designs while also supporting custom code for specialized logic. Its built-in development environment includes syntax highlighting, auto-completion, real-time previews, and debugging support. Version control is integrated to maintain a complete change history and enable rollbacks when needed.

On the connection front, AWS Glue centralizes access to databases and storage layers. It securely stores connection strings, tokens, and certificates, with support for rotation, allowing for updates without service disruption. Through connection pooling, AWS Glue minimizes latency and reduces overhead by reusing sessions for parallel job runs. Combined, these scripting and connection features give developers control without compromising speed or security.

4. ETL engine and automated discovery crawler

At the heart of AWS Glue lies a scalable ETL engine based on Apache Spark. It automatically provisions compute resources based on workload size and complexity, allowing large jobs to run efficiently without manual tuning. During low-load periods, the system downscales to avoid over-provisioning, keeping costs predictable.
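One way to express this scaling behavior is in the job definition itself. The sketch below builds a payload for the Glue `CreateJob` API with auto scaling enabled, so that `NumberOfWorkers` acts as an upper bound rather than a fixed size. The job name, role ARN, script path, and the specific values (Glue 4.0, `G.1X`, 10 workers, 60-minute timeout) are illustrative assumptions, not recommendations.

```python
"""Sketch: a Spark ETL job definition with auto scaling and retries."""


def job_definition(
    name: str,
    role_arn: str,
    script_s3_path: str,
    max_workers: int = 10,
    flex: bool = False,
) -> dict:
    """Keyword arguments for boto3's glue.create_job (values are assumptions)."""
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",  # Spark ETL job
            "ScriptLocation": script_s3_path,
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",
        "WorkerType": "G.1X",
        "NumberOfWorkers": max_workers,  # ceiling when auto scaling is on
        "MaxRetries": 1,
        "Timeout": 60,  # minutes
        "ExecutionClass": "FLEX" if flex else "STANDARD",
        "DefaultArguments": {
            "--enable-auto-scaling": "true",
            "--enable-metrics": "true",
            "--job-bookmark-option": "job-bookmark-enable",
        },
    }


# Hypothetical identifiers for illustration only.
job = job_definition(
    name="orders-etl",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueRole",
    script_s3_path="s3://example-bucket/scripts/orders_etl.py",
    flex=True,
)

# To create the job (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("glue").create_job(**job)
```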

AWS Glue Crawlers are designed to discover new datasets and evolving schemas automatically. They scan Amazon S3 buckets and supported data stores to infer structure, detect schema drift, and update the AWS Glue Data Catalog. Crawlers can be configured on intervals or triggered by events, ensuring metadata remains current with minimal manual overhead.
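A crawler with these properties might be defined as follows. This is a sketch of a `CreateCrawler` payload, assuming a single Amazon S3 target and a daily schedule; the crawler name, role ARN, database, and bucket path are hypothetical.

```python
"""Sketch: a scheduled crawler that updates the Data Catalog on schema drift."""


def crawler_definition(
    name: str, role_arn: str, database: str, s3_path: str, cron: str
) -> dict:
    """Keyword arguments for boto3's glue.create_crawler."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        "Schedule": f"cron({cron})",
        "SchemaChangePolicy": {
            # Apply detected schema changes to the existing catalog table.
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            # Only log objects that disappear instead of dropping tables.
            "DeleteBehavior": "LOG",
        },
    }


# Hypothetical identifiers for illustration only.
crawler = crawler_definition(
    name="orders-crawler",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueRole",
    database="sales",
    s3_path="s3://example-bucket/orders/",
    cron="0 1 * * ? *",
)

# To create and run it (requires boto3 and AWS credentials):
#   import boto3
#   glue = boto3.client("glue")
#   glue.create_crawler(**crawler)
#   glue.start_crawler(Name=crawler["Name"])
```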

5. Centralized metadata catalog repository

The AWS Glue Data Catalog is a central metadata store that organizes information about all datasets across the organization. It houses table schemas, column types, partition keys, and lineage information to support traceability, governance, and analytics.

This catalog integrates seamlessly with services such as Amazon Athena, Amazon Redshift Spectrum, and Amazon EMR, enabling users to run SQL queries on structured and semi-structured data without duplication. Tags, classifications, and column-level lineage annotations further support data sensitivity management and audit readiness. The catalog also enables fine-grained access control via AWS Lake Formation, making it essential for secure and compliant data operations.
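To illustrate fine-grained access through AWS Lake Formation, the sketch below builds a `GrantPermissions` payload that gives a principal `SELECT` access to selected columns of a catalog table. The principal ARN, database, table, and column names are hypothetical.

```python
"""Sketch: a column-level SELECT grant on a Data Catalog table."""


def column_grant(
    principal_arn: str, database: str, table: str, columns: list
) -> dict:
    """Keyword arguments for boto3's lakeformation.grant_permissions."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
    }


# Hypothetical identifiers: analysts may read order totals but not customer PII.
grant = column_grant(
    principal_arn="arn:aws:iam::123456789012:role/ExampleAnalystRole",
    database="sales",
    table="orders",
    columns=["order_id", "order_ts", "amount"],
)

# To apply the grant (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("lakeformation").grant_permissions(**grant)
```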

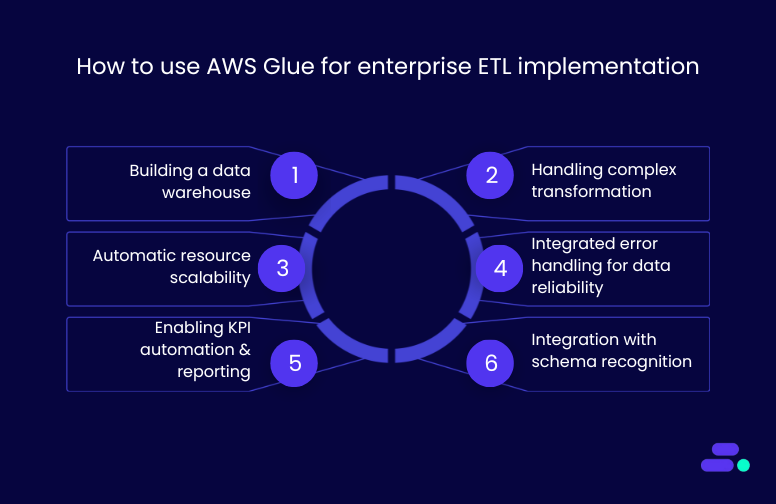

How to use AWS Glue for enterprise ETL implementation

AWS Glue supports end-to-end ETL pipelines that serve analytics, reporting, and operational intelligence needs across complex data ecosystems. Here’s how businesses use it in practice.

- Building enterprise-class data warehouses: Businesses extract data from ERP, CRM, and financial systems, transforming it into standardized formats for analytics. AWS Glue supports schema standardization, historical data preservation, and business rule enforcement across all sources.

- Handling complex transformation scenarios: The ETL engine manages slowly changing dimensions, aggregated fact tables, and diverse file types, from relational records to JSON logs. Data quality checks are applied across the pipeline to ensure compliance and analytics precision.

- Automatic resource scaling and performance tuning: AWS Glue provisions resources dynamically during high-load periods and scales down in low-activity windows. This reduces operational overhead and controls costs without manual intervention.

- Integrated error handling for data reliability: Failed records are handled with retry logic and routed to dead-letter queues. This ensures that mission-critical data is processed reliably, even in the event of intermittent network or system errors.

- Enabling KPI automation and reporting: AWS Glue pipelines compute the metrics that feed dashboards and reports. Businesses calculate complex KPIs, such as lifetime value, turnover ratios, and operational performance, directly within their ETL jobs.

- Accelerating integration with schema recognition: When new systems are added, AWS Glue Crawlers automatically detect schemas and update the Data Catalog. This speeds up integration timelines and supports cross-team metadata governance.

How to optimize AWS Glue performance?

Getting the most out of AWS Glue means balancing processing speed against cost and data quality. The following optimization practices help teams improve throughput while keeping operational expenses under control.

- Data partitioning optimization: Implement strategic partitioning by date ranges, geographical regions, or business units to minimize data movement, reduce data scan volumes during transformation operations, and improve query performance.

- Connection pooling configuration: Maintain persistent database connections to operational systems, eliminating connection establishment delays and optimizing resource utilization across concurrent job executions.

- Memory allocation tuning: Configure memory settings based on data volume analysis and transformation complexity to balance processing performance with cost-efficiency requirements.

- Parallel processing configuration: Distribute workloads across multiple processing nodes to maximize throughput while avoiding resource contention and bottlenecks during peak processing periods.

- Job scheduling optimization: Coordinate ETL execution timing to minimize resource conflicts, and run non-urgent jobs on the lower-cost Flex execution class, while ensuring data availability meets business requirements.

- Resource right-sizing strategies: Monitor processing metrics to determine the right Data Processing Unit (DPU) allocation for different workload types and data volumes.

- Error handling refinement: Implement intelligent retry logic and dead-letter queue management to handle transient failures efficiently while maintaining data integrity.

- Output format optimization: Select appropriate file formats, such as Parquet or ORC, for downstream processing to improve query performance and reduce storage costs.
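The partitioning practice in the list above can be sketched with two small helpers: one that builds a Hive-style partition prefix for output data, and one that builds a matching push-down predicate so a job reads only the partitions it needs. The partition columns (`region`, `year`, `month`, `day`) and the bucket name are assumptions chosen for illustration.

```python
"""Sketch: Hive-style partition paths and a matching push-down predicate."""
from datetime import date


def partition_prefix(base: str, day: date, region: str) -> str:
    """Return an S3 prefix such as .../region=eu/year=2024/month=01/day=05/."""
    return (
        f"{base.rstrip('/')}/region={region}"
        f"/year={day.year}/month={day.month:02d}/day={day.day:02d}/"
    )


def pushdown_predicate(day: date, region: str) -> str:
    """Return a predicate string that limits a catalog read to one partition."""
    return (
        f"region == '{region}' and year == '{day.year}' "
        f"and month == '{day.month:02d}' and day == '{day.day:02d}'"
    )


prefix = partition_prefix("s3://example-bucket/orders", date(2024, 1, 5), "eu")
predicate = pushdown_predicate(date(2024, 1, 5), "eu")

# Inside a Glue job, the predicate can be passed when reading from the catalog:
#   frame = glue_context.create_dynamic_frame.from_catalog(
#       database="sales",
#       table_name="orders",
#       push_down_predicate=predicate,
#   )
```

Filtering at the partition level means files outside the requested partitions are never listed or read, which is where most of the scan savings come from.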

After implementing performance optimization strategies, businesses should assess the broader strengths and trade-offs of AWS Glue to guide informed adoption decisions.

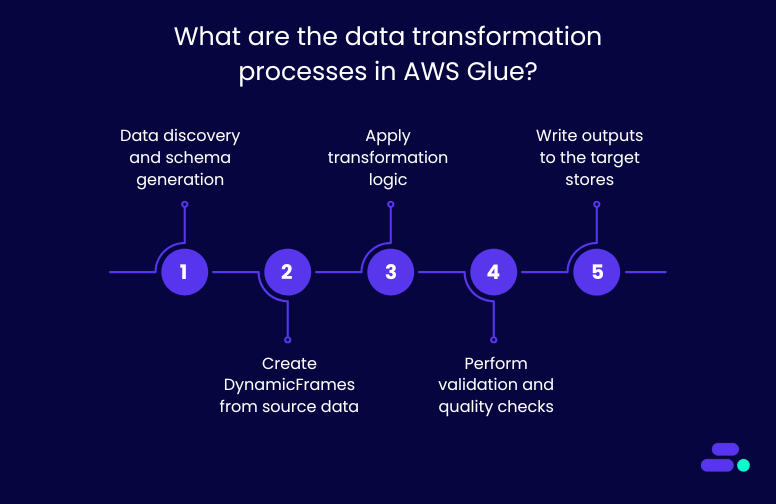

What are the data transformation processes in AWS Glue?

AWS Glue transformations follow a structured flow, from raw data ingestion to schema-aware output generation. The process involves discovery, dynamic schema handling, rule-based transformations, and targeted output delivery, all of which are built on Apache Spark.

Step 1: Data discovery and schema generation

AWS Glue Crawlers scan source systems (e.g., Amazon S3, Amazon RDS) and automatically infer schema definitions. These definitions are registered in the AWS Glue Data Catalog and used as the input schema for the transformation job.
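Once a crawler has registered a table, its schema can be read back from the Data Catalog. The sketch below extracts column types and partition keys from a `GetTable` response; the sample response is a hand-written, trimmed example of the response shape, not real crawler output.

```python
"""Sketch: read column types and partition keys from a GetTable response."""


def table_schema(get_table_response: dict) -> dict:
    """Summarize the schema portion of a glue.get_table response."""
    table = get_table_response["Table"]
    columns = table["StorageDescriptor"]["Columns"]
    partitions = table.get("PartitionKeys", [])
    return {
        "columns": {col["Name"]: col["Type"] for col in columns},
        "partition_keys": [key["Name"] for key in partitions],
    }


# Hand-written sample in the shape returned by:
#   boto3.client("glue").get_table(DatabaseName="sales", Name="orders")
sample_response = {
    "Table": {
        "Name": "orders",
        "StorageDescriptor": {
            "Columns": [
                {"Name": "order_id", "Type": "string"},
                {"Name": "amt", "Type": "string"},
                {"Name": "ts", "Type": "string"},
            ]
        },
        "PartitionKeys": [{"Name": "year", "Type": "string"}],
    }
}

schema = table_schema(sample_response)
```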

Step 2: Create DynamicFrames from source data

Jobs start by loading data as DynamicFrames, AWS Glue’s schema-flexible abstraction over Spark DataFrames. These allow for schema drift, nested structures, and easier transformations without rigid schema enforcement.

Step 3: Apply transformation logic

Users apply built-in transformations or Spark code to clean and modify the data. Common steps include:

- Mapping input fields to a standardized schema (ApplyMapping)

- Removing fields whose values are null in every record (DropNullFields)

- Handling ambiguous types or multiple formats (ResolveChoice)

- Implementing conditional business rules using Spark SQL or built-in AWS Glue transformations

- Enriching datasets with external joins (e.g., reference data, APIs)
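The built-in transforms listed above can be chained in a job script roughly as follows. This is a sketch that only runs inside an AWS Glue job, where the `awsglue` library is available; the column names and target types in `MAPPINGS` are hypothetical, and `glue_context` is assumed to be an existing `GlueContext`.

```python
"""Sketch: load a catalog table as a DynamicFrame and apply built-in transforms."""

# Each mapping is (source field, source type, target field, target type).
# Field names are hypothetical.
MAPPINGS = [
    ("order_id", "string", "order_id", "string"),
    ("amt", "double", "amount", "double"),
    ("ts", "string", "order_ts", "timestamp"),
]


def transform_orders(glue_context, database: str, table_name: str):
    """Return a cleaned DynamicFrame. Must run inside a Glue job."""
    # Imported here because awsglue exists only in the Glue runtime.
    from awsglue.transforms import ApplyMapping, DropNullFields, ResolveChoice

    source = glue_context.create_dynamic_frame.from_catalog(
        database=database, table_name=table_name
    )
    # Resolve a column that was inferred with more than one type.
    resolved = ResolveChoice.apply(frame=source, specs=[("amt", "cast:double")])
    # Rename and cast fields to the standardized schema.
    mapped = ApplyMapping.apply(frame=resolved, mappings=MAPPINGS)
    # Drop fields that are null in every record.
    return DropNullFields.apply(frame=mapped)
```

Resolving ambiguous types before mapping keeps the `ApplyMapping` step deterministic, since each source field then has a single declared type.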

Step 4: Perform validation and quality checks

Before writing outputs, AWS Glue supports custom validation logic and quality rules (via Data Quality or manual scripting) to ensure referential integrity, format correctness, and business rule conformance.
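For the Data Quality route, rules are written in Data Quality Definition Language (DQDL). The sketch below assembles a small ruleset string and a `CreateDataQualityRuleset` payload; the ruleset name, table, column names, and thresholds are illustrative assumptions.

```python
"""Sketch: assemble a DQDL ruleset for AWS Glue Data Quality."""


def dqdl_ruleset(rules: list) -> str:
    """Join individual DQDL rules into a single ruleset string."""
    return "Rules = [ " + ", ".join(rules) + " ]"


# Hypothetical rules for an orders table.
rules = [
    'IsComplete "order_id"',       # no missing order IDs
    'IsUnique "order_id"',         # no duplicate order IDs
    'ColumnValues "amount" >= 0',  # no negative amounts
    "RowCount > 0",                # the dataset is not empty
]

ruleset = dqdl_ruleset(rules)

ruleset_request = {
    "Name": "orders-quality",
    "Ruleset": ruleset,
    "TargetTable": {"DatabaseName": "sales", "TableName": "orders"},
}

# To register the ruleset (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("glue").create_data_quality_ruleset(**ruleset_request)
```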

Step 5: Write outputs to the target stores

Transformed data is saved to destinations such as Amazon S3, Amazon Redshift, or Amazon RDS. Jobs support multiple output formats, such as Parquet, ORC, or JSON, based on downstream analytical needs.
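Writing to Amazon S3 in Parquet could look like the sketch below. As with any Glue job script, the write call only runs inside the Glue runtime; the bucket path and partition keys are hypothetical, and `glue_context` and `frame` are assumed to be an existing `GlueContext` and `DynamicFrame`.

```python
"""Sketch: write a DynamicFrame to S3 as partitioned Parquet."""


def s3_sink_options(path: str, partition_keys: list) -> dict:
    """Connection options for an S3 sink with Hive-style partitions."""
    return {"path": path, "partitionKeys": list(partition_keys)}


def write_parquet(glue_context, frame, path: str, partition_keys: list):
    """Write the frame as Parquet. Must run inside a Glue job."""
    return glue_context.write_dynamic_frame.from_options(
        frame=frame,
        connection_type="s3",
        connection_options=s3_sink_options(path, partition_keys),
        format="parquet",
    )


# Hypothetical output location and partition columns.
options = s3_sink_options("s3://example-bucket/curated/orders/", ["year", "month"])
```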

Once transformation workflows are established, businesses must turn their attention to cost oversight to ensure scalable ETL operations remain within budget as usage expands.

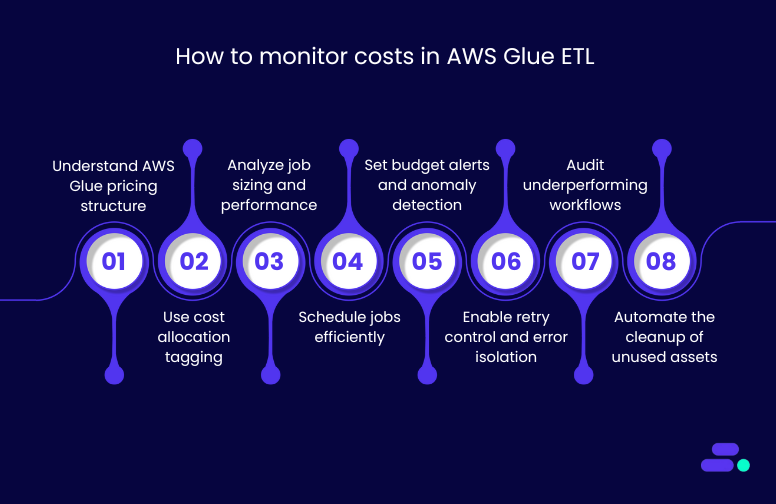

How to monitor costs in AWS Glue ETL

As businesses scale AWS Glue implementations across departments and data products, cost management becomes critical. Enterprise teams implement detailed cost monitoring strategies to ensure data processing performance aligns with operational budgets.

- Understand AWS Glue pricing structure: AWS Glue costs are based on Data Processing Units (DPUs), crawler runtime, and AWS Glue Data Catalog storage and requests. ETL jobs and Python shell jobs are billed at $0.44 per DPU-hour (rates vary by Region), metered per second with a 1-minute minimum; for example, 6 DPUs for 15 minutes = 6 × 0.25 h × $0.44 = $0.66. Crawlers are billed at the same DPU-hour rate, with a 10-minute minimum per run. The Data Catalog includes the first 1 million stored objects and the first 1 million requests each month for free, then charges $1.00 per 100,000 objects and $1.00 per 1 million requests per month. Data Quality requires at least 2 DPUs, billed at the same rate. Zero-ETL ingestion costs $1.50 per GB.

- Use cost allocation tagging: Businesses should tag jobs by project, department, or business unit using AWS resource tags. This enables granular tracking in AWS Cost Explorer, supporting usage-based chargebacks and budget attribution.

- Analyze job sizing and performance: Teams adjust job sizing to match the complexity of the workload. Oversized ETL jobs consume unnecessary DPUs; undersized jobs can extend runtimes. Businesses monitor metrics such as DPU hours and stage execution time to right-size their workloads.

- Schedule jobs efficiently: Staggering non-urgent jobs during low-traffic hours reduces competition for resources and avoids peak-time contention. Intelligent scheduling also helps identify idle patterns and optimize job orchestration.

- Set budget alerts and anomaly detection: Administrators set thresholds with AWS Budgets to receive alerts when projected spend exceeds expectations. For broader trends, AWS Cost Anomaly Detection highlights irregular spikes tied to configuration errors or volume surges.

- Enable retry control and error isolation: Jobs that fail repeatedly due to upstream issues inflate costs. Businesses configure intelligent retry patterns and dead-letter queues (DLQs) to isolate bad records and avoid unnecessary full-job retries.

- Audit underperforming workflows: Regularly reviewing job performance helps identify inefficient transformations, outdated logic, and redundant steps. This allows teams to refactor code, streamline logic, and reduce processing overhead.

- Automate the cleanup of unused assets: Stale crawlers, old development jobs, and unused table definitions in the Data Catalog can generate costs over time. Automation scripts using the AWS Software Development Kit (SDK) or the AWS Command Line Interface (CLI) can clean up unused resources programmatically.
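The pricing figures in the list above can be turned into a quick estimator. This is a rough sketch that uses the $0.44 per DPU-hour rate, the 1-minute billing minimum, and the Data Catalog free tier quoted in this guide; the pro-rata handling of partial 100,000-object blocks is a simplifying assumption, and actual rates vary by Region.

```python
"""Sketch: back-of-the-envelope AWS Glue cost estimates."""

DPU_HOUR_RATE = 0.44           # USD per DPU-hour, as quoted in this guide
FREE_CATALOG_OBJECTS = 1_000_000


def job_cost(
    dpus: float,
    runtime_seconds: float,
    rate: float = DPU_HOUR_RATE,
    min_billed_seconds: int = 60,
) -> float:
    """Estimate the cost of one job run, applying the billing minimum."""
    billed_seconds = max(runtime_seconds, min_billed_seconds)
    return round(dpus * billed_seconds / 3600 * rate, 2)


def catalog_storage_cost(objects: int, price_per_100k: float = 1.00) -> float:
    """Estimate monthly catalog storage cost beyond the free tier
    (pro-rata per object, which is a simplifying assumption)."""
    billable = max(objects - FREE_CATALOG_OBJECTS, 0)
    return round(billable / 100_000 * price_per_100k, 2)


# The guide's example: 6 DPUs for 15 minutes.
example_run = job_cost(dpus=6, runtime_seconds=15 * 60)

# A 10-second run on 2 DPUs is still billed for a full minute.
short_run = job_cost(dpus=2, runtime_seconds=10)

# 1.25 million catalog objects: 250,000 are billable.
catalog = catalog_storage_cost(1_250_000)
```

Multiplying `job_cost` by the expected number of runs per month gives a baseline that can be compared against the figures reported in AWS Cost Explorer.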

While these strategies help maintain performance and cost control, implementing them correctly, especially at enterprise scale, often requires specialized expertise. That’s where partners like Cloudtech come in, helping businesses design, optimize, and secure AWS Glue implementations tailored to their unique operational needs.

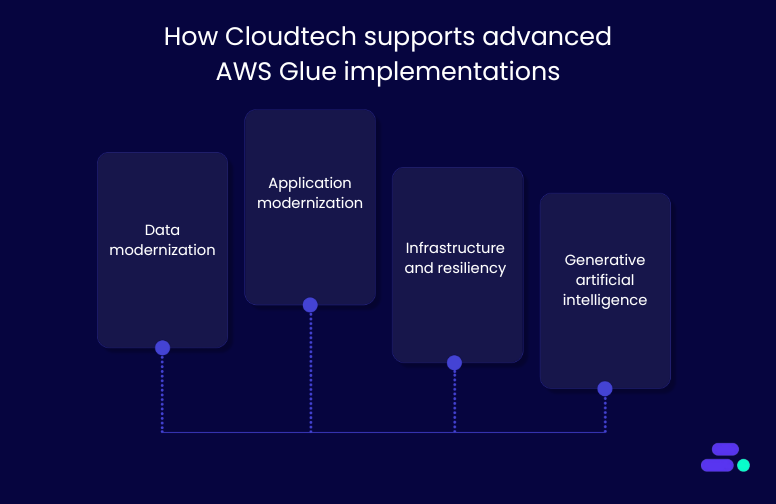

How Cloudtech supports advanced AWS Glue implementations

Cloudtech is an AWS Advanced Tier Services Partner focused on helping small and mid-sized businesses modernize data pipelines using AWS Glue and other AWS-native services. The team works closely with clients in regulated sectors such as healthcare and finance to ensure data workflows are secure, efficient, and audit-ready.

Cloudtech’s core service areas directly support scalable, production-grade AWS Glue implementations:

- Data modernization: Cloudtech helps businesses build efficient ETL pipelines using AWS Glue to standardize, validate, and prepare data for analytics. This includes designing schema-aware ingestion workflows and maintaining metadata consistency across data lakes and warehouses.

- Application modernization: Legacy systems are rearchitected to support Glue-native jobs, serverless execution, and Spark-based transformations, enabling faster, more flexible data processing tied to evolving business logic.

- Infrastructure and resiliency: Cloudtech configures secure, compliant Glue deployments within VPC boundaries, applying IAM controls, encryption, and logging best practices to maintain availability and data protection across complex ETL environments.

- Generative artificial intelligence: With integrated Glue pipelines feeding clean, labeled datasets into AI/ML workflows, Cloudtech enables businesses to power recommendation engines, forecasting tools, and real-time decision-making systems, all backed by trusted data flows.

Cloudtech ensures every AWS Glue implementation is tightly aligned with business needs, delivering performance, governance, and long-term scalability without legacy overhead.

Conclusion

AWS Glue delivers scalable ETL capabilities for businesses handling complex, high-volume data processing. With serverless execution, built-in transformation logic, and seamless integration across the AWS ecosystem, it simplifies data operations while maintaining performance and control.

Cloudtech helps SMBs design and scale AWS Glue pipelines that meet strict performance, compliance, and governance needs. From custom Spark logic to cost-efficient architecture, every implementation is built for long-term success. Get in touch to plan your AWS Glue strategy with Cloudtech’s expert team.

FAQs

1. What is ETL in AWS Glue?

ETL in AWS Glue refers to the extract, transform, and load processes executed on a serverless Spark-based engine. It enables automatic schema detection, complex data transformation, and loading into destinations like Amazon S3, Redshift, or RDS without manual infrastructure provisioning.

2. What is the purpose of AWS Glue?

AWS Glue is designed to simplify and automate data integration. It helps organizations clean, transform, and move data between sources using managed ETL pipelines, metadata catalogs, and job orchestration, supporting analytics, reporting, and compliance in scalable, serverless environments.

3. What is the difference between Lambda and Glue?

AWS Lambda is ideal for lightweight, real-time processing tasks with limited execution time. AWS Glue is purpose-built for complex ETL jobs involving large datasets, schema handling, and orchestration. Glue supports Spark-based workflows; Lambda is event-driven with shorter runtime constraints.

4. When to use Lambda or Glue?

Use Lambda for real-time data triggers, lightweight transformations, or microservices orchestration. Use AWS Glue for batch ETL, large-scale data processing, or schema-aware workflows across varied sources. Glue suits data lakes; Lambda fits event-driven or reactive application patterns.

5. Is AWS Glue the same as Spark?

AWS Glue is not the same as Apache Spark, but it runs on Spark under the hood. Glue abstracts Spark with serverless management, job automation, and metadata handling, enabling scalable ETL without requiring direct Spark infrastructure or cluster configuration.
