Data breaches cost organizations an average of $4.45 million per incident, according to Statista. Against this backdrop, it isn't far-fetched to say that backups are a primary line of defense against modern cyber threats.

But how secure are those backups? Common gaps, such as unencrypted data, poor role isolation, or storing backups in the same account as production, can expose critical assets and make recovery difficult.

Although services like AWS Backup offer a centralized, policy-driven way to protect data with encryption, immutability, access control, and cross-region isolation, securing backups requires more than just turning the service on.

This guide breaks down proven best practices to help SMBs secure AWS Backup, ensure compliance, and strengthen long-term resilience.

Key takeaways:

- Backup isolation: AWS Backup uses vaults and immutability to prevent tampering and support recovery from ransomware, corruption, or human error.

- Core AWS methods: Native tools, such as EBS snapshots, RDS point-in-time recovery (PITR), and cross-region replication, enable structured, policy-driven backups across services.

- Security controls: Use KMS encryption, role-based IAM, cross-account storage, and Vault Lock in compliance mode to achieve a hardened security posture.

- Testing and monitoring: Run regular restore tests and use CloudTrail, Config, CloudWatch, and Security Hub for backup visibility and drift detection.

Why is it important for SMBs to secure their data backups?

Data backups are more than just insurance for SMBs. They're often the last line of defense against downtime, ransomware, and accidental loss. But without proper security, backups can quickly become a liability rather than a safeguard.

Unlike large enterprises with dedicated security teams and redundant systems, SMBs have to contend with limited resources, which makes them prime targets for attackers. A poorly secured backup, such as one stored in the same account as production or one without proper encryption, can be exploited to:

- Delete or encrypt backups during a ransomware attack, leaving no clean recovery option.

- Gain lateral access to other resources through misconfigured roles or access permissions.

- Expose sensitive customer or financial data, leading to regulatory fines and reputational damage.

For SMBs, such incidents can result in weeks of downtime, lost customer trust, or even permanent closure. On the other hand, well-secured backups can help SMBs:

- Recover quickly and confidently after accidental deletions, application failures, or cyber incidents.

- Maintain compliance with regulations like HIPAA, PCI-DSS, or GDPR that mandate secure data handling and retention.

- Reduce business risk by ensuring data is encrypted, immutable, and isolated from day-to-day operations.

For example, consider a regional healthcare provider that stores both primary and backup data in the same AWS account, without immutability or access restrictions. When a misconfigured script deletes production data, it wipes the backups in that account too. The provider could have prevented this by keeping backups in a separate account, protected by AWS Backup Vault Lock.

In contrast, an SMB that uses AWS Backup with encryption, vault isolation, and lifecycle policies can recover from a ransomware attack within hours, without paying a ransom or losing customer data.

Securing backups isn't just a security best practice; it's a business continuity decision. For SMBs, the difference between recovery and ruin often lies in how well their backups are protected.

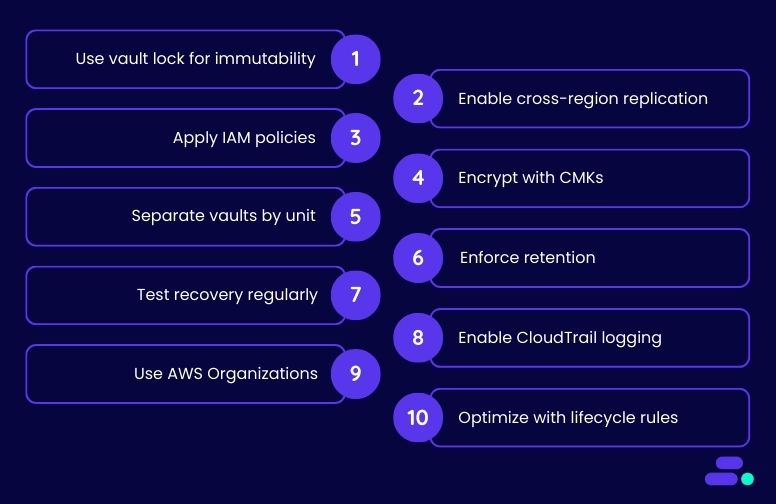

10 right ways to secure AWS Backup and avoid downtime

Setting up backups feels like the final checkbox in a cloud deployment, something done after workloads go live. But this mindset overlooks the fact that backups are a prime target in modern cyberattacks. Ransomware groups increasingly aim to encrypt or delete backup copies first, knowing it cripples recovery efforts.

A backup is only as good as its security. Without immutability, isolation, and proper permissions, even well-intentioned backup plans can fail when they're needed most. By securing AWS Backup from day one using features like vault locking, cross-region replication, and role-based access, SMBs can turn their backups into a resilient, trustworthy layer of defense rather than a hidden point of failure. These practices form the foundation of a dependable backup security posture.

1. Use Backup Vault Lock for immutability

Backup Vault Lock is a feature in AWS Backup that enforces write-once, read-many (WORM) protection on backups stored in a vault. Once a lock in compliance mode takes effect, no one, whether an administrator or a malicious actor, can delete recovery points or shorten their retention until the defined retention period expires. This immutability is critical for protecting backups from ransomware, human error, and internal threats.

Why this matters:

- Prevents malicious deletion: Even if an attacker gains privileged access, they cannot erase or overwrite locked backups.

- Meets regulatory compliance: Immutability supports financial, healthcare, and legal mandates that require tamper-proof data retention (e.g., SEC Rule 17a-4(f)).

- Reduces insider risk: In compliance mode, Vault Lock blocks deletions even by the root user, mitigating threats from disgruntled or careless admins.

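How to implement with AWS: To enable immutability, configure Backup Vault Lock through the AWS Backup console, the CLI (aws backup put-backup-vault-lock-configuration), or an SDK, and set a minimum and maximum retention period for each vault. Without a ChangeableForDays value, the lock runs in governance mode, where users with sufficient IAM permissions can still remove it, which makes it a safe way to test the configuration. Setting ChangeableForDays (at least 3 days) starts a grace period, after which the lock switches to compliance mode and becomes immutable: no one can shorten retention or disable WORM protection. Confirm that the settings align with compliance and data lifecycle needs before the grace period ends.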
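A minimal sketch, assuming Python and (for the final, commented-out call) boto3: the helper below only assembles and sanity-checks the keyword arguments for boto3's put_backup_vault_lock_configuration. The vault name prod-vault and the retention values (7 days minimum, 2555 days, about 7 years, maximum) are illustrative, and the range checks other than the 3-day ChangeableForDays minimum are our own, not a copy of AWS's validation.

```python
from typing import Optional


def build_vault_lock_params(
    vault_name: str,
    min_retention_days: int,
    max_retention_days: int,
    changeable_for_days: Optional[int] = None,
) -> dict:
    """Assemble keyword arguments for boto3's backup.put_backup_vault_lock_configuration."""
    # Basic sanity checks (illustrative, not AWS's full validation rules).
    if min_retention_days < 1:
        raise ValueError("minimum retention must be at least 1 day")
    if max_retention_days < min_retention_days:
        raise ValueError("maximum retention cannot be shorter than minimum retention")
    params = {
        "BackupVaultName": vault_name,
        "MinRetentionDays": min_retention_days,
        "MaxRetentionDays": max_retention_days,
    }
    if changeable_for_days is not None:
        # Grace period before the lock becomes immutable (compliance mode).
        # AWS documents a minimum of 3 days for this value.
        if changeable_for_days < 3:
            raise ValueError("ChangeableForDays must be at least 3")
        params["ChangeableForDays"] = changeable_for_days
    return params


# Governance mode (no ChangeableForDays): useful for testing, still removable by privileged users.
test_params = build_vault_lock_params("prod-vault", 7, 2555)
# Compliance mode: immutable once the 3-day grace period ends.
final_params = build_vault_lock_params("prod-vault", 7, 2555, changeable_for_days=3)

# With AWS credentials configured, the actual call would be:
#   import boto3
#   boto3.client("backup").put_backup_vault_lock_configuration(**final_params)
print(final_params)
```

Applying test_params first and final_params only after review mirrors the governance-then-compliance rollout described above.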

Use case: A mid-sized healthcare provider uses AWS Backup Vault Lock to protect patient records stored across Amazon RDS and EBS volumes. Given HIPAA compliance requirements and increasing ransomware risks, the team configures a 7-year retention policy that cannot be shortened. Even if attackers breach an IAM role or a new admin misconfigures access, their backups remain secure and unaltered, supporting both legal mandates and recovery readiness.

2. Enable cross-region backup replication

Cross-region backup replication in AWS Backup allows automatic copying of backups to a different AWS Region. This creates geographic redundancy, ensuring that backup data remains available even if an entire region faces an outage, disaster, or security incident. For SMBs, it’s a crucial step toward a more resilient and compliant disaster recovery strategy.

Why this matters:

- Protects against regional outages: If a primary AWS Region experiences a service disruption or natural disaster, backups in a secondary region remain safe and accessible.

- Strengthens ransomware resilience: Copies in a separate Region, ideally in a separate account as well, keep a recovery path available even if the production environment is compromised, limiting the blast radius of an attack.

- Supports compliance and BCDR mandates: Many regulatory frameworks and business continuity plans require off-site or off-region copies of critical data.

How to implement with AWS: Enable cross-region replication by adding a copy action to a backup plan rule in AWS Backup, with a destination vault in another Region. Businesses can apply this to supported resources like EC2, RDS, DynamoDB, and EFS. Lifecycle rules can be defined for both the source backups and the copies to manage costs. Applying Vault Lock to the vaults in both Regions adds immutability, and the IAM roles and KMS encryption keys must be configured correctly in each Region.
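To make the copy-action idea concrete, here is a sketch, assuming Python and boto3, of the BackupPlan structure that backup.create_backup_plan accepts, with one daily rule and a CopyActions entry pointing at a vault in another Region. The plan and vault names, the Regions, and the account ID 111122223333 are hypothetical placeholders, and the destination vault is assumed to exist already.

```python
def vault_arn(region: str, account_id: str, vault_name: str) -> str:
    """Format a backup vault ARN: arn:aws:backup:<region>:<account>:backup-vault:<name>."""
    return f"arn:aws:backup:{region}:{account_id}:backup-vault:{vault_name}"


def build_plan_with_copy(
    plan_name: str,
    source_vault: str,
    dest_region: str,
    dest_account_id: str,
    dest_vault: str,
    retention_days: int = 35,
) -> dict:
    """Return a BackupPlan dict for boto3's backup.create_backup_plan."""
    rule = {
        "RuleName": "daily-with-dr-copy",
        "TargetBackupVaultName": source_vault,      # vault in the plan's own Region
        "ScheduleExpression": "cron(0 5 ? * * *)",  # every day at 05:00 UTC
        "Lifecycle": {"DeleteAfterDays": retention_days},
        "CopyActions": [
            {
                # The destination vault must already exist in dest_region.
                "DestinationBackupVaultArn": vault_arn(dest_region, dest_account_id, dest_vault),
                "Lifecycle": {"DeleteAfterDays": retention_days},
            }
        ],
    }
    return {"BackupPlanName": plan_name, "Rules": [rule]}


plan = build_plan_with_copy("prod-plan", "prod-vault", "us-west-2", "111122223333", "prod-dr-vault")

# With credentials configured, create the plan in the source Region, for example:
#   import boto3
#   boto3.client("backup", region_name="us-east-1").create_backup_plan(BackupPlan=plan)
print(plan["Rules"][0]["CopyActions"][0]["DestinationBackupVaultArn"])
```

Pointing the destination ARN at a vault owned by a different account turns this into a cross-account copy, which needs additional setup (an access policy on the destination vault and AWS Organizations configuration).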

Use case: A regional financial services firm uses AWS Backup to secure its transaction logs and customer data stored in Amazon DynamoDB and Amazon RDS. To meet internal business continuity goals and regulatory guidelines under RBI norms, the company configures cross-region replication to a secondary AWS Region. In the event of a primary region disruption or data breach, IT teams can initiate recovery from the replicated backups with minimal downtime, ensuring operational continuity and compliance.

3. Apply fine-grained IAM policies

Fine-grained AWS Identity and Access Management (IAM) policies help organizations control who can access, modify, or delete backup resources. In AWS Backup, enforcing tightly scoped permissions reduces the attack surface and ensures that only authorized identities interact with critical backup infrastructure.

Why this matters:

- Minimizes accidental or malicious actions: By assigning the least privilege necessary, organizations prevent unauthorized users from deleting or altering backup data.

- Improves auditability and governance: Defined access boundaries make it easier to track actions, comply with audits, and meet regulatory requirements.

- Enforces separation of duties: Segregating permissions between backup operators, security teams, and administrators strengthens internal controls and limits potential abuse.

How to implement with AWS: Organizations can use AWS IAM to create custom permission sets that control specific backup actions such as backup:StartBackupJob, backup:DeleteBackupVault, and backup:PutBackupVaultAccessPolicy. These policies are attached to IAM roles based on team responsibilities, such as restoration-only access for support staff or read-only access for auditors.

To further tighten control, service control policies (SCPs) can be applied at the AWS Organizations level, and multi-factor authentication (MFA) should be enabled for privileged accounts.
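As an illustration of restoration-only access, the sketch below builds an IAM policy document in Python: support staff can browse recovery points and start restores, may pass only one restore role to AWS Backup, and are explicitly denied destructive or policy-changing actions. The role ARN is a hypothetical placeholder, and the allow statement uses "Resource": "*" for simplicity; a production policy might scope resources further.

```python
import json

DESTRUCTIVE_BACKUP_ACTIONS = [
    "backup:DeleteBackupVault",
    "backup:DeleteRecoveryPoint",
    "backup:DeleteBackupPlan",
    "backup:UpdateBackupPlan",
    "backup:UpdateRecoveryPointLifecycle",
    "backup:PutBackupVaultAccessPolicy",
    "backup:DeleteBackupVaultAccessPolicy",
    "backup:DeleteBackupVaultLockConfiguration",
]


def restore_only_policy(restore_role_arn: str) -> dict:
    """IAM policy for staff who may browse and restore backups but never delete or reconfigure."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "BrowseAndRestore",
                "Effect": "Allow",
                "Action": [
                    "backup:ListBackupVaults",
                    "backup:ListRecoveryPointsByBackupVault",
                    "backup:DescribeRecoveryPoint",
                    "backup:GetRecoveryPointRestoreMetadata",
                    "backup:StartRestoreJob",
                    "backup:DescribeRestoreJob",
                ],
                "Resource": "*",
            },
            {
                # StartRestoreJob needs permission to pass the role AWS Backup restores with.
                "Sid": "PassOnlyTheRestoreRole",
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": restore_role_arn,
                "Condition": {"StringEquals": {"iam:PassedToService": "backup.amazonaws.com"}},
            },
            {
                # An explicit Deny overrides any Allow granted elsewhere.
                "Sid": "DenyDestructiveBackupActions",
                "Effect": "Deny",
                "Action": DESTRUCTIVE_BACKUP_ACTIONS,
                "Resource": "*",
            },
        ],
    }


policy = restore_only_policy("arn:aws:iam::111122223333:role/BackupRestoreRole")
print(json.dumps(policy, indent=2))
```

The explicit Deny is the design choice that matters: even if another policy attached to the same role grants broad backup permissions, the destructive actions stay blocked.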

Use case: A fintech startup managing critical transaction data across Amazon DynamoDB tables and EBS volumes attached to EC2 instances implements fine-grained IAM controls to reduce security risks. Developers can restore from backups for testing, but cannot delete or modify vault settings.

Backup configuration and policy changes are reserved for a small security operations team. This approach enforces operational discipline, limits exposure, and ensures consistent backup governance across environments.

4. Encrypt backups using customer-managed keys (CMKs)

Encryption protects backup data from unauthorized access, both at rest and in transit. AWS Backup integrates with AWS Key Management Service (KMS), allowing organizations to encrypt backups at rest with customer managed keys (CMKs) instead of default AWS managed keys, which provides stronger control and visibility over data security.

Why this matters:

- Centralizes control over encryption: CMKs give businesses direct authority over key policies, usage permissions, and rotation schedules.

- Enables audit and compliance visibility: All encryption and decryption operations are logged via AWS CloudTrail, supporting regulatory and internal audit requirements.

- Strengthens incident response: If a breach is suspected, access to the CMK can be revoked immediately, making the associated backups unreadable to attackers (and to legitimate restores until access is reinstated).

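How to implement with AWS: In the AWS Backup console or via API/CLI, users can specify a CMK when creating a backup vault; a vault's encryption key cannot be changed after creation. For resource types that support full AWS Backup management, backups are encrypted with the vault's key, while other resource types, such as EBS and RDS snapshots, keep the encryption of their source resource. CMKs are created and managed in AWS KMS, where administrators can define key policies, enable key rotation, and set usage conditions.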

It's best practice to restrict CMK usage to specific roles or services, monitor activity through CloudTrail logs, and regularly review key policies to ensure alignment with least privilege access.
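One way to restrict CMK usage to specific roles is through the key policy itself. The sketch below, assuming Python, builds a key policy in which a hypothetical security-admin role manages the key (this statement does not grant it Decrypt) and only a hypothetical backup service role can use the key for cryptographic operations and AWS-resource grants. Both role ARNs are placeholders; review the statements against your own key administration model before applying anything.

```python
import json


def backup_key_policy(admin_role_arn: str, backup_role_arn: str) -> dict:
    """Key policy: one role administers the key, only the backup role can use it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Administrators manage the key; this statement does not grant Decrypt.
                "Sid": "KeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": admin_role_arn},
                "Action": [
                    "kms:Create*", "kms:Describe*", "kms:Enable*", "kms:List*",
                    "kms:Put*", "kms:Update*", "kms:Revoke*", "kms:Disable*",
                    "kms:Get*", "kms:TagResource", "kms:UntagResource",
                    "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion",
                ],
                "Resource": "*",  # in a key policy, "*" refers to this key
            },
            {
                "Sid": "BackupRoleUsesKey",
                "Effect": "Allow",
                "Principal": {"AWS": backup_role_arn},
                "Action": ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*",
                           "kms:GenerateDataKey*", "kms:DescribeKey"],
                "Resource": "*",
            },
            {
                # Grants let AWS services use the key on the role's behalf.
                "Sid": "BackupRoleGrantsForAwsResources",
                "Effect": "Allow",
                "Principal": {"AWS": backup_role_arn},
                "Action": ["kms:CreateGrant", "kms:ListGrants", "kms:RevokeGrant"],
                "Resource": "*",
                "Condition": {"Bool": {"kms:GrantIsForAWSResource": "true"}},
            },
        ],
    }


policy = backup_key_policy(
    "arn:aws:iam::111122223333:role/SecurityKeyAdmin",
    "arn:aws:iam::111122223333:role/BackupServiceRole",
)
# With credentials configured (key_id is a placeholder for your key's ID):
#   import boto3
#   kms = boto3.client("kms")
#   kms.put_key_policy(KeyId=key_id, PolicyName="default", Policy=json.dumps(policy))
#   kms.enable_key_rotation(KeyId=key_id)
print(json.dumps(policy, indent=2))
```

Because the admin role holds kms:Disable* and kms:Revoke*, the security team can cut off key use during an incident without needing decrypt rights themselves.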

Use case: A regional law firm backing up case files and email archives to Amazon S3 via AWS Backup uses CMKs to comply with legal confidentiality obligations. The security team creates distinct keys per department, applies granular key policies, and enables rotation every 12 months. If a paralegal’s IAM role is compromised, access to the key can be revoked without impacting other backups, ensuring client data remains encrypted and inaccessible to unauthorized users.

5. Separate backup vaults by environment or business unit

Organizing AWS Backup vaults based on environments (e.g., dev, staging, prod) or business units (e.g., HR, finance, engineering) allows teams to apply tailored access controls, retention policies, and encryption settings. This reduces the blast radius of misconfigurations or attacks.

Why this matters:

- Improves access control: Different IAM permissions can be applied per vault, ensuring only authorized users or services can manage backups within their scope.

- Simplifies compliance and auditing: Clear separation helps track backup behavior, retention policies, and recovery events by organizational boundary.

- Limits cross-impact risk: If one vault is misconfigured or compromised, the others remain unaffected, preserving backup integrity for the rest of the business.

How to implement with AWS: Using the AWS Backup console, CLI, or APIs, teams can create multiple backup vaults and name them logically, such as prod-vault, hr-vault, or analytics-dev-vault. IAM policies should be scoped to allow or deny access to specific vaults, and tags can further categorize vaults for billing or automation. Make sure the backup rules that target each vault, and any Vault Lock retention limits, fit the data it holds, and give each vault a dedicated encryption key if isolation is required at the cryptographic level.
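A sketch of this layout, assuming Python and boto3: it produces create_backup_vault arguments for each scope, each with its own KMS key and a tag, plus an IAM statement whose Resource is a single vault's ARN. The Region, account ID, and key ARNs are hypothetical placeholders; note that not every AWS Backup action supports resource-level scoping, so check each action before relying on a vault-scoped statement.

```python
REGION = "us-east-1"
ACCOUNT_ID = "111122223333"

# Hypothetical mapping of environment/business unit -> dedicated KMS key ARN.
VAULT_KEYS = {
    "prod": f"arn:aws:kms:{REGION}:{ACCOUNT_ID}:key/prod-key-id",
    "hr": f"arn:aws:kms:{REGION}:{ACCOUNT_ID}:key/hr-key-id",
    "analytics-dev": f"arn:aws:kms:{REGION}:{ACCOUNT_ID}:key/analytics-dev-key-id",
}


def create_vault_kwargs(scope: str, key_arn: str) -> dict:
    """Keyword arguments for boto3's backup.create_backup_vault (the key is fixed at creation)."""
    return {
        "BackupVaultName": f"{scope}-vault",
        "EncryptionKeyArn": key_arn,
        "BackupVaultTags": {"scope": scope},
    }


def vault_scoped_statement(scope: str, actions: list) -> dict:
    """IAM statement that applies only to one scope's vault."""
    return {
        "Effect": "Allow",
        "Action": actions,
        "Resource": f"arn:aws:backup:{REGION}:{ACCOUNT_ID}:backup-vault:{scope}-vault",
    }


vaults = [create_vault_kwargs(scope, key) for scope, key in VAULT_KEYS.items()]
hr_statement = vault_scoped_statement("hr", ["backup:ListRecoveryPointsByBackupVault"])

# With credentials configured:
#   import boto3
#   client = boto3.client("backup", region_name=REGION)
#   for kwargs in vaults:
#       client.create_backup_vault(**kwargs)
print([v["BackupVaultName"] for v in vaults])
```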

Use case: A fintech startup separates backups for its production payment systems, internal HR apps, and test environments into distinct vaults. The production vault uses stricter IAM roles, a longer retention period, and a unique CMK. When a staging misconfiguration leads to an overly permissive role, only the staging vault is affected. Production backups remain protected, isolated, and compliant with PCI-DSS requirements.

6. Define and enforce retention policies

Retention policies in AWS Backup ensure that backups are kept exactly as long as they're needed, no longer and no shorter. By defining and enforcing these policies, organizations reduce unnecessary storage costs, stay compliant with data regulations, and avoid the risks associated with overly long or inconsistent backup lifecycles.

Why this matters:

- Controls data sprawl: Unused backups take up space and increase costs. Automated retention ensures data is removed when no longer needed.

- Supports compliance: Regulatory requirements often dictate how long data must be retained, and automated policies help meet those timelines reliably.

- Reduces manual oversight: By enforcing lifecycle policies, teams avoid accidental deletions or missed cleanup tasks, reducing human error.

How to implement with AWS: AWS Backup lets users define backup plans with lifecycle rules, including retention duration. In the AWS Backup console or via CLI/SDK, admins can set retention periods per backup rule (e.g., 30 days for daily backups, 1 year for monthly snapshots).

Lifecycle settings can also transition backups to cold storage to optimize costs before deletion. Ensure these policies align with both internal data governance and external regulatory needs.
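The retention example above (30 days for daily backups, 1 year for monthly ones) maps onto two rules in a single backup plan. This is a minimal Python sketch of the BackupPlan structure accepted by boto3's create_backup_plan; the plan name, rule names, vault name, and 05:00 UTC schedule are hypothetical choices.

```python
def retention_rule(name: str, schedule: str, vault: str, delete_after_days: int) -> dict:
    """One backup rule whose recovery points expire automatically."""
    if delete_after_days < 1:
        raise ValueError("retention must be at least 1 day")
    return {
        "RuleName": name,
        "TargetBackupVaultName": vault,
        "ScheduleExpression": schedule,
        "Lifecycle": {"DeleteAfterDays": delete_after_days},
    }


retention_plan = {
    "BackupPlanName": "retention-plan",
    "Rules": [
        # Every day at 05:00 UTC, kept for 30 days.
        retention_rule("daily-30d", "cron(0 5 ? * * *)", "prod-vault", 30),
        # 1st of each month at 05:00 UTC, kept for 1 year.
        retention_rule("monthly-1y", "cron(0 5 1 * ? *)", "prod-vault", 365),
    ],
}

# With credentials configured:
#   import boto3
#   boto3.client("backup").create_backup_plan(BackupPlan=retention_plan)
for rule in retention_plan["Rules"]:
    print(rule["RuleName"], rule["Lifecycle"]["DeleteAfterDays"])
```

Keeping both schedules in one plan means a single resource assignment picks up both retention tiers.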

Use case: A regional insurance company configures automated retention for daily, weekly, and monthly backups across Amazon RDS and DynamoDB. Daily backups are kept for 35 days, while monthly backups are retained for 7 years to comply with regulatory audits. This setup ensures consistency, eliminates manual deletion tasks, and prevents accidental retention of outdated data, keeping the backup environment lean, compliant, and efficient.

7. Regularly test backup recovery (disaster recovery drills)

Backups are only as good as the ability to restore them. Regularly testing recovery through disaster recovery (DR) drills ensures that backups are functional, recoverable within required timelines, and aligned with business continuity plans. It’s a critical but often overlooked part of a good backup security strategy.

Why this matters:

- Validates backup integrity: Testing helps confirm that backups are not corrupted, misconfigured, or missing key data.

- Reveals recovery gaps: Simulated drills uncover overlooked dependencies, access issues, or timing failures in recovery workflows.

- Improves incident response: Practicing restores ensures teams can act quickly and confidently during real outages or ransomware events.

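How to implement with AWS: AWS Backup can restore recovery points for services such as Amazon RDS, EFS, DynamoDB, and EC2, and it supports continuous backup with point-in-time restore for a subset of services, including Amazon RDS and Amazon S3. Admins can simulate recovery by restoring backups to isolated test environments using the AWS Backup console or CLI, or schedule automated restores with AWS Backup's restore testing feature.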

For full DR simulations, include dependencies such as Route 53 DNS records, IAM roles, and VPC security groups in the drill. Document recovery time objectives (RTO) and recovery point objectives (RPO), and automate validation steps using AWS Systems Manager Automation runbooks.
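Documenting RTO and RPO is most useful when each drill is scored against them. The Python sketch below does that arithmetic under stated assumptions: the drill start stands in for the incident time, achieved RPO is the gap back to the restored recovery point's creation time, and achieved RTO is the time until the restored system passes validation. All timestamps and targets are hypothetical; in a real drill the recovery point's creation time could come from boto3's describe_recovery_point (CreationDate).

```python
from datetime import datetime, timedelta, timezone


def drill_report(drill_start: datetime, recovery_point_created: datetime,
                 restore_completed: datetime, rto_target: timedelta,
                 rpo_target: timedelta) -> dict:
    """Compare a drill's measured RTO and RPO against targets.

    drill_start: when the simulated incident was declared
    recovery_point_created: creation time of the recovery point that was restored
    restore_completed: when the restored system passed validation
    """
    achieved_rpo = drill_start - recovery_point_created  # data written after this point is lost
    achieved_rto = restore_completed - drill_start        # time to get back in service
    return {
        "achieved_rto": achieved_rto,
        "achieved_rpo": achieved_rpo,
        "rto_met": achieved_rto <= rto_target,
        "rpo_met": achieved_rpo <= rpo_target,
    }


utc = timezone.utc
report = drill_report(
    drill_start=datetime(2024, 1, 15, 10, 0, tzinfo=utc),
    recovery_point_created=datetime(2024, 1, 15, 5, 0, tzinfo=utc),
    restore_completed=datetime(2024, 1, 15, 11, 30, tzinfo=utc),
    rto_target=timedelta(hours=4),
    rpo_target=timedelta(hours=24),
)
print(report)
```

Tracking these numbers quarter over quarter shows whether recovery is getting faster or whether backup frequency needs to change to meet the RPO.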

Use case: A financial tech startup conducts quarterly DR drills to validate recovery of its Amazon Aurora databases and Amazon EC2-based applications. The team restores snapshots in a staging VPC, tests application availability, and verifies data integrity. These drills help refine RTOs, identify hidden misconfigurations, and give stakeholders confidence that the business can withstand outages or data loss events.

8. Enable logging with AWS CloudTrail and AWS Config

Visibility into backup activities is essential for detecting threats, auditing changes, and maintaining compliance. By enabling logging through AWS CloudTrail and AWS Config, businesses gain continuous insight into backup operations, configuration changes, and access patterns. All of these are vital for a secure and accountable backup strategy.

Why this matters:

- Detects unauthorized activity: Logs help identify suspicious actions like unexpected deletion attempts or policy changes.

- Supports forensic analysis: In the event of an incident, detailed logs provide the audit trail necessary to investigate and respond.

- Ensures compliance: Many regulations mandate detailed logging of backup access and configuration for audit purposes.

How to implement with AWS: Enable AWS CloudTrail to log all API activity related to AWS Backup, including backup creation, deletion, and restore events. AWS Config tracks configuration changes to backup vaults, plans, and related resources, ensuring changes are recorded and reviewable.

Use Amazon CloudWatch alarms and Amazon EventBridge rules to raise alerts, for example when a backup job fails or when someone attempts to change retention settings.
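As a sketch of the alerting side, the Python below defines two EventBridge event patterns: one for AWS Backup job state changes that end in failure, and one for destructive or policy-changing AWS Backup API calls recorded by CloudTrail (this assumes a CloudTrail trail capturing management events). The matches function is a deliberately simplified local stand-in for EventBridge matching, handling only nested fields and lists of exact values, so the patterns can be checked against sample events offline; it is not EventBridge's full matching semantics.

```python
# Alert when an AWS Backup job ends badly.
BACKUP_JOB_FAILED = {
    "source": ["aws.backup"],
    "detail-type": ["Backup Job State Change"],
    "detail": {"state": ["FAILED", "ABORTED", "EXPIRED"]},
}

# Alert on destructive or policy-changing API calls recorded by CloudTrail.
RISKY_BACKUP_API_CALL = {
    "source": ["aws.backup"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["backup.amazonaws.com"],
        "eventName": [
            "DeleteBackupVault", "DeleteRecoveryPoint", "DeleteBackupPlan",
            "UpdateBackupPlan", "UpdateRecoveryPointLifecycle",
            "PutBackupVaultAccessPolicy", "DeleteBackupVaultLockConfiguration",
        ],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge matching: nested fields plus lists of exact allowed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


sample = {
    "source": "aws.backup",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {"eventSource": "backup.amazonaws.com", "eventName": "DeleteBackupPlan"},
}
print(matches(RISKY_BACKUP_API_CALL, sample), matches(BACKUP_JOB_FAILED, sample))

# Deploying a rule (credentials required), then adding an SNS topic as its target:
#   import json, boto3
#   boto3.client("events").put_rule(Name="risky-backup-calls",
#                                   EventPattern=json.dumps(RISKY_BACKUP_API_CALL))
```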

Use case: A digital marketing agency uses CloudTrail and AWS Config to monitor backup activity across its AWS accounts. When a contractor mistakenly attempts to delete backup plans, CloudTrail records the API call and a CloudWatch alert on that event notifies the security team. The team reviews the logs, confirms the mistake, and updates IAM permissions to prevent recurrence, all without compromising data availability or compliance standing.

9. Use AWS Organizations for centralized backup management

Managing backups across multiple accounts becomes complex without a centralized strategy. AWS Backup integrates with AWS Organizations, allowing businesses to manage backup policies, monitor compliance, and enforce security standards consistently across all accounts from a single management point. This centralization simplifies operations and improves governance.

Why this matters:

- Streamlines policy enforcement: Backup plans can be automatically applied across accounts, reducing manual errors and inconsistency.

- Improves visibility: Admins can monitor backup activity and compliance across the organization in one place.

- Supports scalable governance: Centralized control makes it easier to scale securely as the business adds new AWS accounts.

How to implement with AWS: Enable AWS Organizations and, from the management account, enable the backup policy type and turn on AWS Backup's cross-account management features. Then define backup policies that apply to organizational units (OUs) or individual member accounts. These policies can include schedules, lifecycle rules, and target backup vaults. Ensure trusted access is enabled between AWS Backup and Organizations so that policies are distributed and monitored across accounts.
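For a feel of what an organizational backup policy looks like, the Python below builds one as a dictionary. It is modeled on the backup policy syntax documented for AWS Organizations (the @@assign operators and the $account variable, which is replaced with each member account's ID), but field names should be checked against the current documentation before deploying. The plan name, Region, vault, tag, and role name are hypothetical.

```python
import json


def org_backup_policy(plan_name: str, regions: list, vault: str, delete_after_days: int,
                      tag_key: str, tag_value: str, role_name: str) -> dict:
    """Organizations BACKUP_POLICY content: daily backups of tagged resources."""
    return {
        "plans": {
            plan_name: {
                "regions": {"@@assign": regions},
                "rules": {
                    "daily": {
                        "schedule_expression": {"@@assign": "cron(0 5 ? * * *)"},
                        "target_backup_vault_name": {"@@assign": vault},
                        "lifecycle": {"delete_after_days": {"@@assign": str(delete_after_days)}},
                    }
                },
                "selections": {
                    "tags": {
                        f"{plan_name}-selection": {
                            # $account is substituted with each member account's ID.
                            "iam_role_arn": {"@@assign": f"arn:aws:iam::$account:role/{role_name}"},
                            "tag_key": {"@@assign": tag_key},
                            "tag_value": {"@@assign": [tag_value]},
                        }
                    }
                },
            }
        }
    }


policy = org_backup_policy("prod-daily", ["us-east-1"], "prod-vault", 35, "env", "prod",
                           "BackupServiceRole")
content = json.dumps(policy, indent=2)
# With credentials in the management account (ou_id is a placeholder):
#   import boto3
#   org = boto3.client("organizations")
#   created = org.create_policy(Content=content, Description="Daily prod backups",
#                               Name="prod-daily", Type="BACKUP_POLICY")
#   org.attach_policy(PolicyId=created["Policy"]["PolicySummary"]["Id"], TargetId=ou_id)
print(content)
```

Attaching a separate policy per OU is one way to give production longer retention than development, as in the use case that follows.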

Use case: A growing edtech company manages development, staging, and production workloads across separate AWS accounts. By using AWS Organizations, the operations team centrally enforces backup policies across all environments. They define separate plans for each OU, ensuring that production data has longer retention and replication, while dev environments follow shorter, cost-optimized policies, all without logging into individual accounts.

10. Use backup lifecycle rules to optimize storage and security

AWS Backup lifecycle rules automate the transition of backups between storage tiers, moving them from warm storage to AWS Backup's lower-cost cold storage for resource types that support it. This not only reduces long-term storage costs but also ensures that backup data follows a structured lifecycle that aligns with business and compliance needs. Lifecycle rules add predictability and security to backup management.

Why this matters:

- Optimizes costs: Automatically moving older backups to cold storage reduces storage bills without manual intervention.

- Enforces data lifecycle compliance: Ensures backups are retained and archived according to regulatory and business requirements.

- Reduces operational burden: Lifecycle automation reduces the need for manual data classification, transition, and deletion efforts.

How to implement with AWS: When creating a backup plan in AWS Backup, define lifecycle rules specifying when to transition backups from warm to cold storage (e.g., after 30 days) and when to expire them (e.g., after 365 days). Backups moved to cold storage must stay there for at least 90 days, so the expiry must fall at least 90 days after the transition. Use the AWS Backup console, CLI, or APIs to define these settings at the plan level. AWS handles the transitions automatically, maintaining backup integrity and security throughout the lifecycle.
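The 90-day cold storage minimum is easy to violate when editing rules by hand. This Python sketch builds the Lifecycle dictionary used in AWS Backup plan rules (MoveToColdStorageAfterDays, DeleteAfterDays), rejects combinations that break the 90-day minimum, and reports how many days each recovery point spends in each tier. The helper names are hypothetical, and whether cold storage applies at all depends on the resource type.

```python
from typing import Optional

COLD_STORAGE_MINIMUM_DAYS = 90  # minimum stay in AWS Backup cold storage


def build_lifecycle(delete_after_days: int, move_to_cold_after_days: Optional[int] = None) -> dict:
    """Lifecycle dict for a backup rule, enforcing the 90-day cold storage minimum."""
    lifecycle = {"DeleteAfterDays": delete_after_days}
    if move_to_cold_after_days is not None:
        if delete_after_days < move_to_cold_after_days + COLD_STORAGE_MINIMUM_DAYS:
            raise ValueError(
                f"expiry must be at least {COLD_STORAGE_MINIMUM_DAYS} days after the cold transition"
            )
        lifecycle["MoveToColdStorageAfterDays"] = move_to_cold_after_days
    return lifecycle


def days_per_tier(lifecycle: dict) -> dict:
    """How long each recovery point spends in warm and cold storage."""
    total = lifecycle["DeleteAfterDays"]
    cold_start = lifecycle.get("MoveToColdStorageAfterDays", total)
    return {"warm": cold_start, "cold": total - cold_start}


example = build_lifecycle(delete_after_days=365, move_to_cold_after_days=30)
print(example, days_per_tier(example))
```

The resulting dictionary can be dropped into a rule's "Lifecycle" field when calling create_backup_plan.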

Use case: An accounting firm backs up client data daily using AWS Backup across Amazon EFS and RDS. To balance retention requirements and storage costs, the IT team sets lifecycle rules that move EFS backups to cold storage after 45 days and delete backups after 7 years; RDS backups, which AWS Backup does not transition to cold storage, stay in warm storage for the full period. This ensures long-term availability for audits while keeping expenses predictable, with no manual cleanup.

How can Cloudtech help SMBs secure their AWS Backups?

SMBs looking to secure their AWS backups face increasing risks from ransomware, misconfigurations, and compliance complexity. Cloudtech, an AWS Advanced Tier Partner, brings specialized capabilities that go beyond basic AWS setup, helping businesses build resilient, secure, and fully auditable backup environments tailored to their size and risk profile.

Why SMBs choose Cloudtech:

- Built-in security by design: Cloudtech architects resilient, multi-AZ, immutable, and auditable backup systems from the ground up. This includes applying Vault Lock, cross-region replication, and cold storage lifecycle strategies with strict compliance mapping (HIPAA, SOC 2, PCI-DSS).

- Ongoing validation and monitoring: Rather than relying on manual checks, Cloudtech sets up continuous backup monitoring using AWS CloudTrail, AWS Config, and CloudWatch. They also schedule and validate disaster recovery drills, ensuring that restore paths actually work when needed.

- Centralized, compliant governance: From IAM policy enforcement to secure key management and cross-account vault separation, Cloudtech builds governance into the backup architecture. Their AWS-certified architects help SMBs enforce least privilege, define retention policies, and meet audit requirements, all without hiring in-house AWS experts.

In summary, Cloudtech brings strategic AWS depth and operational maturity to SMBs that can’t afford backup failures. Their security-first, compliance-ready approach helps organizations confidently protect their data, avoid costly breaches, and simplify long-term governance.

Conclusion

Securing AWS backups requires deliberate strategies around encryption, access control, and automated enforcement. Features like Vault Lock, cross-account isolation, and lifecycle policies must work in concert to guard against both operational failures and malicious threats.

Regular recovery testing, configuration monitoring, and compliance validation ensure that backups remain dependable when it matters most. As ransomware and insider risks increasingly target backup infrastructure, immutability and automation are no longer optional.

For SMBs wanting to adopt these best practices and establish a secure, audit-ready, and resilient backup posture, Cloudtech brings the AWS-certified expertise, automation capabilities, and tailored support to help them get there.

Connect with Cloudtech or book a call to design a secure, compliant AWS backup solution tailored to your business.

FAQs

1. What is the difference between AWS Backup and an EBS snapshot?

AWS Backup is a centralized backup service that supports multiple AWS resources. EBS snapshots are specific to volume-level backups. AWS Backup can manage EBS snapshots along with other services under a unified policy and compliance framework.

2. Is AWS Backup stored in S3?

AWS Backup stores recovery points in AWS-managed, highly durable storage (EBS snapshots, for example, are stored in Amazon S3), but users cannot access these backups directly through S3; access and management occur through the AWS Backup console or APIs.

3. How much does an AWS backup cost?

AWS Backup costs vary by resource type, storage size, retention duration, and data transfer between Regions or accounts. Charges typically include backup storage (warm and cold), restores for some resource types, and cross-Region data transfer for copies. Pricing is detailed per resource type on the AWS Backup pricing page.

4. When to use EBS vs EFS?

EBS is used for block-level storage, ideal for persistent volumes attached to EC2 instances. EFS provides scalable file storage accessed over NFS, suitable for shared workloads requiring parallel access, such as content management systems or data pipelines.

5. Is AWS Backup full or incremental?

For most supported resource types, AWS Backup performs incremental backups after the first full backup. Only changes since the last backup are saved, which reduces storage use and backup time, while each recovery point can still be restored on its own. The service handles this automatically, without user-side configuration.

Get started on your cloud modernization journey today!

Let Cloudtech build a modern AWS infrastructure that’s right for your business.